Sigmoid function, Classification Algorithms in Machine Learning: Decision Trees; Ensemble Techniques: Bagging and Boosting, AdaBoost and Gradient Boosting, Random Forest; Naïve Bayes Classifier; Support Vector Machines. Performance Evaluation:

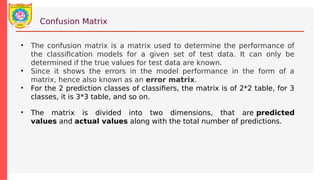

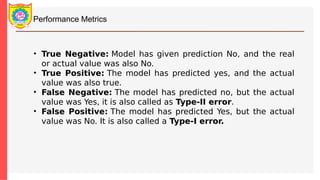

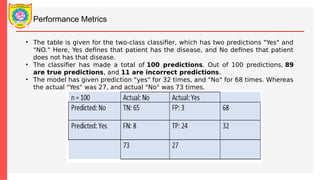

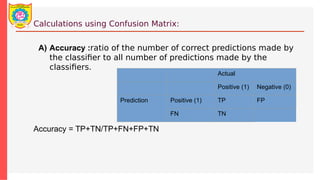

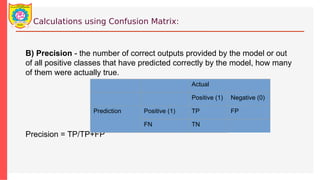

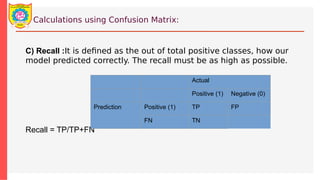

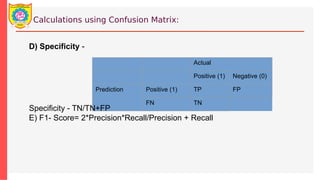

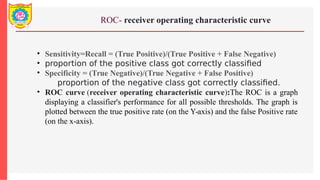

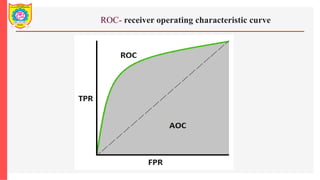

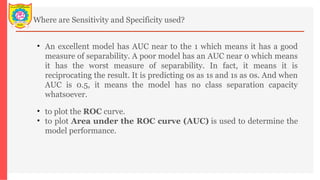

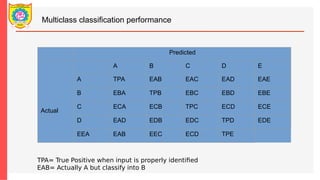

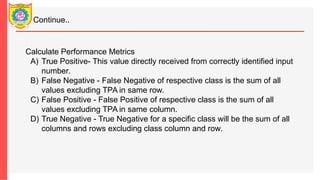

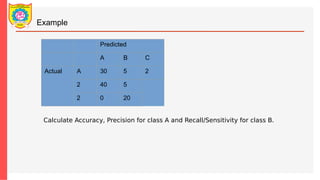

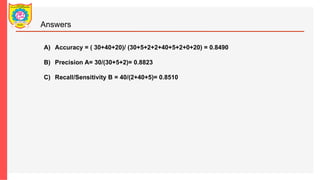

Confusion Matrix, Accuracy, Precision, Recall, AUC-ROC Curves, F-Measure
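The sigmoid function named at the start of this unit, σ(z) = 1 / (1 + e^(−z)), squashes any real-valued score into the interval (0, 1), which is why it is used to turn a linear score into a class probability. A minimal Python sketch follows. The names `sigmoid` and `predict_label` and the default 0.5 threshold are illustrative assumptions, not taken from the source. The branch on the sign of z exists only to keep `math.exp` from overflowing for large negative inputs.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic sigmoid 1 / (1 + e^(-z)), written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    # For negative z, use the equivalent form e^z / (1 + e^z) so exp() never
    # receives a large positive argument.
    ez = math.exp(z)
    return ez / (1.0 + ez)


def predict_label(z: float, threshold: float = 0.5) -> int:
    """Hypothetical helper: map a raw score to class 1 or 0 by thresholding sigmoid(z)."""
    return 1 if sigmoid(z) >= threshold else 0
```

For example, `sigmoid(0.0)` is exactly 0.5, and `sigmoid(2.0) + sigmoid(-2.0)` equals 1 up to rounding, reflecting the symmetry σ(−z) = 1 − σ(z).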
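Decision trees choose each split by how much it reduces node impurity. The sketch below assumes the Gini impurity criterion used by CART, G = 1 − Σ_k p_k², applied to a single numeric feature; entropy-based information gain is a common alternative criterion. The helpers `gini`, `split_gain`, and `best_threshold` are hypothetical names chosen for illustration, and candidate thresholds are assumed to be midpoints between consecutive distinct feature values.

```python
from collections import Counter


def gini(labels):
    """Gini impurity 1 - sum_k p_k^2 of a list of class labels (0.0 for a pure node)."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def split_gain(parent, left, right):
    """Decrease in size-weighted Gini impurity when `parent` is split into `left` and `right`."""
    n = len(parent)
    weighted_children = (len(left) / n) * gini(left) + (len(right) / n) * gini(right)
    return gini(parent) - weighted_children


def best_threshold(xs, ys):
    """Try midpoints between sorted distinct feature values; return (threshold, gain)."""
    values = sorted(set(xs))
    best = (None, 0.0)
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        gain = split_gain(ys, left, right)
        if gain > best[1]:  # keep the first threshold that achieves the largest gain
            best = (t, gain)
    return best
```

On `xs = [1, 2, 3, 10, 11, 12]` with labels `[0, 0, 0, 1, 1, 1]`, `best_threshold` returns `(6.5, 0.5)`: the split at 6.5 leaves two pure children and so removes all of the parent's impurity of 0.5. A full tree applies this search recursively to each child node.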
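Boosting, unlike bagging, trains weak learners sequentially and re-weights the training samples between rounds. As one concrete instance, here is a sketch of a single round of discrete AdaBoost, assuming binary labels in {−1, +1} and that the weak learner's predictions on the training set are already available: compute the weighted error ε, give the learner the vote weight α = ½ ln((1 − ε)/ε), then multiply each sample weight by e^(−α·y·h(x)) and renormalize. The function name `adaboost_round` and the clipping constant are illustrative assumptions. The final AdaBoost classifier is the sign of Σ_t α_t h_t(x) over all rounds; gradient boosting follows a different recipe (fitting each new learner to the gradient of a loss) and is not shown here.

```python
import math


def adaboost_round(weights, y_true, y_pred):
    """One round of discrete AdaBoost with labels in {-1, +1}.

    Returns (alpha, new_weights): alpha is the weak learner's vote weight and
    new_weights are the renormalized sample weights for the next round.
    """
    total = sum(weights)
    # Weighted error of the weak learner under the current sample distribution.
    err = sum(w for w, y, h in zip(weights, y_true, y_pred) if y != h) / total
    # Clip (assumed constant) so the log stays finite for a perfect or always-wrong learner.
    eps = 1e-10
    err = min(max(err, eps), 1 - eps)
    alpha = 0.5 * math.log((1 - err) / err)
    # Misclassified samples (y*h = -1) are scaled by e^alpha, correct ones by e^-alpha.
    updated = [w * math.exp(-alpha * y * h) for w, y, h in zip(weights, y_true, y_pred)]
    z = sum(updated)
    return alpha, [w / z for w in updated]
```

With four uniformly weighted samples and one mistake, for example `adaboost_round([0.25] * 4, [1, 1, -1, -1], [1, 1, -1, 1])`, ε = 0.25 and α = ½ ln 3 ≈ 0.549. After renormalization the misclassified sample carries weight 0.5 and each correct one 1/6, so the next weak learner sees the previous mistake weighted as heavily as all the correct samples combined.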
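The Naïve Bayes classifier scores each class c by P(c)·Π_j P(x_j | c), assuming the features are conditionally independent given the class, and predicts the highest-scoring class. The sketch below is one variant, chosen here as an assumption: categorical features with Laplace (add-α) smoothing, computed in log space to avoid numerical underflow. The class `CategoricalNaiveBayes` and its methods are hypothetical names, and the toy weather data in the usage note is made up for illustration.

```python
import math
from collections import Counter


class CategoricalNaiveBayes:
    """Naive Bayes for discrete features with Laplace (add-alpha) smoothing."""

    def __init__(self, alpha=1.0):
        self.alpha = alpha

    def fit(self, X, y):
        self.classes = sorted(set(y))
        self.class_counts = Counter(y)
        self.n = len(y)
        n_features = len(X[0])
        # feature_counts[c][j][v] = number of class-c rows whose feature j equals v
        self.feature_counts = {c: [Counter() for _ in range(n_features)] for c in self.classes}
        # Distinct values seen per feature, used in the smoothing denominator.
        self.values = [set() for _ in range(n_features)]
        for row, c in zip(X, y):
            for j, v in enumerate(row):
                self.feature_counts[c][j][v] += 1
                self.values[j].add(v)
        return self

    def log_posterior(self, row):
        """Unnormalized log P(c) + sum_j log P(x_j | c) for every class c."""
        scores = {}
        for c in self.classes:
            score = math.log(self.class_counts[c] / self.n)
            for j, v in enumerate(row):
                num = self.feature_counts[c][j][v] + self.alpha
                den = self.class_counts[c] + self.alpha * len(self.values[j])
                score += math.log(num / den)
            scores[c] = score
        return scores

    def predict(self, row):
        scores = self.log_posterior(row)
        return max(scores, key=scores.get)
```

For example, after `m = CategoricalNaiveBayes().fit([("sunny", "hot"), ("sunny", "mild"), ("rainy", "mild"), ("rainy", "cool"), ("overcast", "hot")], ["no", "no", "yes", "yes", "yes"])`, `m.predict(("sunny", "hot"))` returns `"no"`: the unnormalized scores are 0.4 × 0.6 × 0.4 = 0.096 for `no` versus 0.6 × 1/6 × 1/3 ≈ 0.033 for `yes`.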
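For a binary classifier, the confusion matrix counts true positives (TP), false positives (FP), false negatives (FN) and true negatives (TN), and the other metrics listed under Performance Evaluation are ratios of those counts: accuracy = (TP + TN) / total, precision = TP / (TP + FP), recall = TP / (TP + FN), and the F-measure F_β = (1 + β²)·P·R / (β²·P + R), which for β = 1 is the harmonic mean F1 of precision and recall. A small pure-Python sketch follows; the function names and the choice to return 0.0 when a denominator is zero are assumptions made for illustration.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (TP, FP, FN, TN), treating `positive` as the positive class."""
    tp = fp = fn = tn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive:
            if t == positive:
                tp += 1
            else:
                fp += 1
        elif t == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def _safe_div(num, den):
    # Assumed convention: report 0.0 when a metric's denominator is zero.
    return num / den if den else 0.0


def classification_metrics(y_true, y_pred, positive=1, beta=1.0):
    """Accuracy, precision, recall and F-beta computed from the confusion counts."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred, positive)
    precision = _safe_div(tp, tp + fp)
    recall = _safe_div(tp, tp + fn)
    b2 = beta ** 2
    return {
        "accuracy": _safe_div(tp + tn, tp + fp + fn + tn),
        "precision": precision,
        "recall": recall,
        "f_measure": _safe_div((1 + b2) * precision * recall, b2 * precision + recall),
    }
```

With `y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]` and `y_pred = [1, 1, 1, 0, 1, 1, 0, 0, 0, 0]`, the counts are TP = 3, FP = 2, FN = 1, TN = 4, giving accuracy 0.7, precision 0.6, recall 0.75 and F1 ≈ 0.667.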
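An ROC curve plots the true positive rate against the false positive rate as the decision threshold on a classifier's score is lowered, and AUC is the area under that curve (1.0 for a perfect ranking, 0.5 for random guessing). The sketch below assumes labels 1 (positive) and 0 (negative) with real-valued scores; `roc_points` and `trapezoid_auc` are hypothetical hand-written helpers, not a specific library's API. Samples with tied scores are processed together so that they contribute a single diagonal segment, and the area is computed with the trapezoidal rule.

```python
def roc_points(y_true, scores):
    """Return ROC points (FPR, TPR), sweeping the threshold from the highest score down."""
    pos = sum(1 for y in y_true if y == 1)
    neg = len(y_true) - pos
    if pos == 0 or neg == 0:
        raise ValueError("ROC needs at least one positive and one negative example")
    pairs = sorted(zip(scores, y_true), key=lambda p: p[0], reverse=True)
    points = [(0.0, 0.0)]
    tp = fp = 0
    i = 0
    while i < len(pairs):
        threshold = pairs[i][0]
        # Consume every sample whose score equals the current threshold (handles ties).
        while i < len(pairs) and pairs[i][0] == threshold:
            if pairs[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / neg, tp / pos))
    return points


def trapezoid_auc(points):
    """Area under a piecewise-linear curve given as (x, y) points sorted by x."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area
```

For labels `[1, 0, 1, 0]` with scores `[0.9, 0.8, 0.4, 0.3]`, the curve passes through (0, 0), (0, 0.5), (0.5, 0.5), (0.5, 1) and (1, 1), and the AUC is 0.75. This matches the fraction of positive/negative pairs (3 of 4) in which the positive example receives the higher score.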