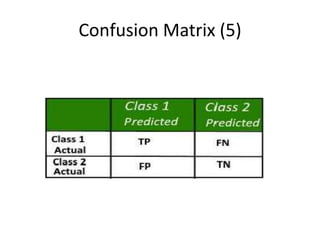

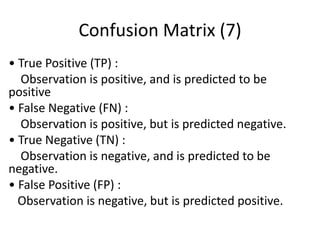

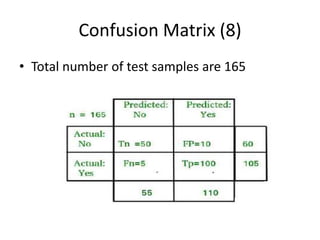

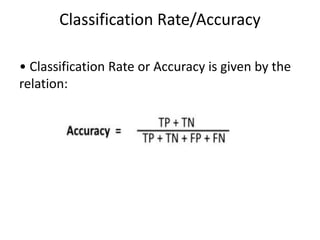

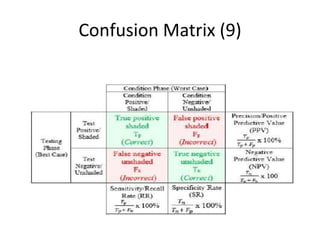

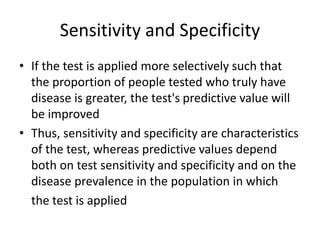

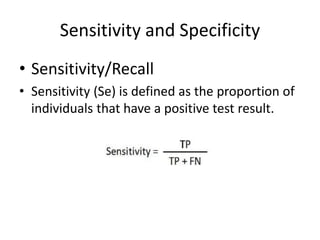

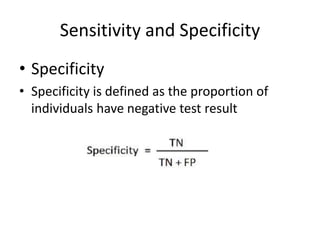

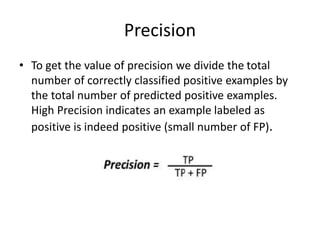

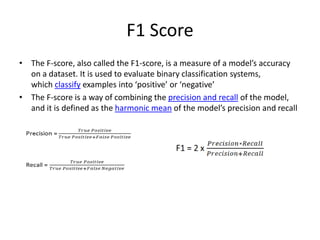

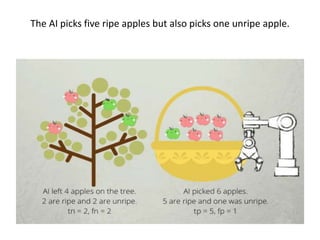

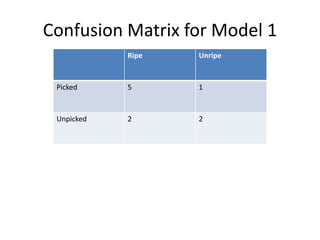

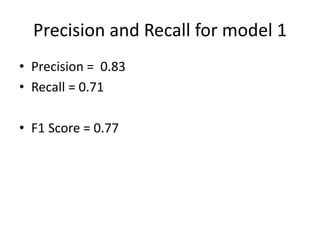

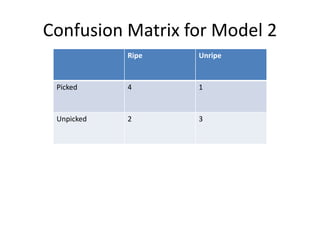

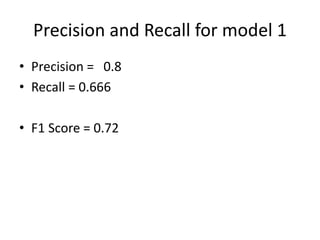

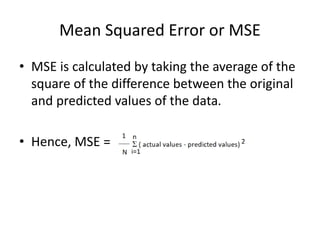

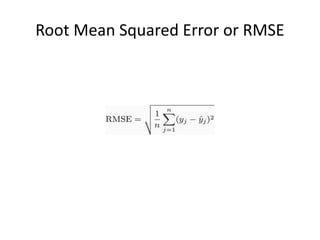

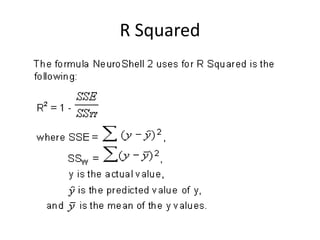

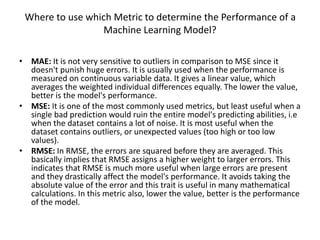

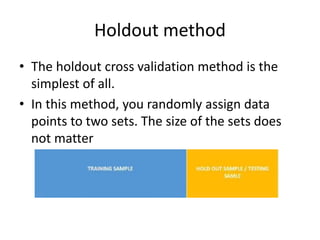

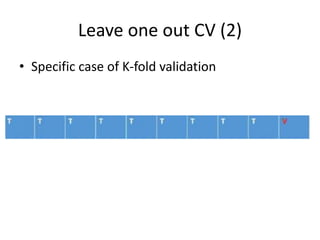

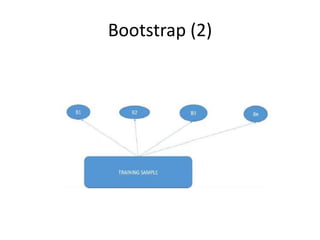

The document provides an overview of the confusion matrix, a key tool for evaluating classification models, and of its components: true positives, false positives, false negatives, and true negatives. From these counts it derives metrics such as accuracy, precision, recall, and the F1 score. It also covers Mean Absolute Error (MAE) and Mean Squared Error (MSE), which measure prediction error for regression models rather than classifiers. It then discusses cross-validation as a way to obtain more reliable performance estimates and to help detect and avoid overfitting. Finally, it highlights the importance of accounting for class imbalance and explains how the choice of metric shapes the conclusions drawn about a model.
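As an illustration of how the classification metrics follow from the confusion-matrix counts, here is a minimal pure-Python sketch for a binary problem. The function names `confusion_counts` and `classification_metrics` are hypothetical, and returning 0.0 when a denominator is zero is a convention assumed here, not something the slides prescribe.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (TP, FP, FN, TN) for a binary classification problem."""
    tp = fp = fn = tn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive and t == positive:
            tp += 1  # predicted positive, actually positive
        elif p == positive:
            fp += 1  # predicted positive, actually negative
        elif t == positive:
            fn += 1  # predicted negative, actually positive
        else:
            tn += 1  # predicted negative, actually negative
    return tp, fp, fn, tn


def classification_metrics(y_true, y_pred, positive=1):
    """Derive accuracy, precision, recall and F1 from the confusion counts.

    Assumption: any ratio with a zero denominator is reported as 0.0.
    """
    tp, fp, fn, tn = confusion_counts(y_true, y_pred, positive)
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 1, 0, 0]
print(confusion_counts(y_true, y_pred))  # (3, 2, 1, 2): TP=3, FP=2, FN=1, TN=2
print(classification_metrics(y_true, y_pred))
```

With these labels precision is 3/5 = 0.6 and recall is 3/4 = 0.75, so the F1 score (their harmonic mean, about 0.667) sits between the two but closer to the lower value.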
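MAE and MSE apply when the target is a continuous value. A minimal sketch of both follows; the function names and the example values are illustrative only.

```python
def mae(y_true, y_pred):
    """Mean Absolute Error: average of |y - y_hat|."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mse(y_true, y_pred):
    """Mean Squared Error: average of (y - y_hat)^2."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


y_true = [3.0, 5.0, 2.0, 7.0]
y_pred = [2.5, 5.0, 4.0, 8.0]
print(mae(y_true, y_pred))  # 0.875
print(mse(y_true, y_pred))  # 1.3125
```

The errors here are 0.5, 0, 2 and 1. Squaring makes the single error of 2 contribute 4 of the 5.25 total, which is why MSE is more sensitive to large mistakes than MAE.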
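The idea behind k-fold cross-validation can be sketched in plain Python as follows. Each sample is held out exactly once, and the model is refit on the remaining folds each time. The helpers `k_fold_indices`, `cross_validate` and the mean-predicting baseline `fit_mean` are hypothetical names for this illustration. The folds are contiguous and unshuffled, which is a simplifying assumption. In practice a library routine such as scikit-learn's `KFold` (with shuffling, or `StratifiedKFold` for imbalanced classes) would typically be used instead.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) for k contiguous folds (no shuffling)."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must satisfy 2 <= k <= n_samples")
    # Spread the remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, test
        start += size


def cross_validate(X, y, fit, score, k=5):
    """Fit on k-1 folds, score on the held-out fold, and return one score per fold."""
    scores = []
    for train_idx, test_idx in k_fold_indices(len(X), k):
        model = fit([X[i] for i in train_idx], [y[i] for i in train_idx])
        preds = [model(X[i]) for i in test_idx]
        scores.append(score([y[i] for i in test_idx], preds))
    return scores


def fit_mean(X_train, y_train):
    """Hypothetical baseline: always predict the mean of the training targets."""
    mean = sum(y_train) / len(y_train)
    return lambda x: mean


def mae(y_true, y_pred):
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


X = list(range(10))
y = [2.0 * x for x in X]
fold_scores = cross_validate(X, y, fit_mean, mae, k=5)
print(fold_scores, sum(fold_scores) / len(fold_scores))
```

Averaging the per-fold scores gives a less optimistic estimate than scoring on the training data. The spread across folds (here the edge folds score far worse than the middle one) also hints at how sensitive the estimate is to the particular split.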
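To see why class imbalance matters, consider this small sketch. The dataset is made up, with 95 negatives and 5 positives, and the "model" always predicts the majority class. Accuracy looks excellent while recall shows that no positive case is ever found. The helper name `accuracy_and_recall` is hypothetical.

```python
def accuracy_and_recall(y_true, y_pred, positive=1):
    """Return (accuracy, recall); recall is reported as 0.0 if there are no positives."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    accuracy = correct / len(y_true)
    recall = tp / (tp + fn) if tp + fn else 0.0
    return accuracy, recall


# Made-up imbalanced dataset: 95 negatives, 5 positives.
y_true = [0] * 95 + [1] * 5
# Degenerate classifier that always predicts the majority (negative) class.
y_pred = [0] * 100
acc, rec = accuracy_and_recall(y_true, y_pred)
print(f"accuracy={acc:.2f}, recall={rec:.2f}")  # accuracy=0.95, recall=0.00
```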