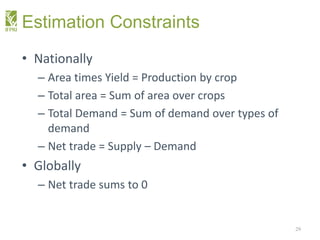

The document describes how national accounts and supply-demand data can be estimated with cross-entropy methods, motivated by the need for balanced datasets across commodities. Primary data sources such as FAOSTAT and AQUASTAT inform the estimates, and an information-theoretic approach based on Bayesian methods reconciles discrepancies in the data. The document also explains how consistent databases are generated under the maximum entropy principle and how prior distributions enter the estimation.
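To make the role of the prior distribution concrete, the following is a minimal sketch of a single minimum cross-entropy step, not the document's actual estimation procedure. It assumes an unknown quantity represented by a few discrete support points with prior weights, and one balancing constraint that fixes its expected value. The function name `min_cross_entropy`, the support points, the prior weights, and the target value are all hypothetical and chosen only for illustration.

```python
import math


def min_cross_entropy(prior, support, target, tol=1e-12):
    """Find weights p that minimize sum(p * log(p / prior)) subject to
    sum(p) == 1 and sum(p * support) == target.

    The solution has the form p_k proportional to prior_k * exp(lam * support_k);
    lam is located by bisection. Assumes all prior weights are positive.
    """
    if not min(support) < target < max(support):
        raise ValueError("target must lie strictly inside the support range")

    def tilted(lam):
        # Exponentially tilt the prior; subtract the largest exponent
        # before calling exp() to avoid overflow.
        exps = [lam * z for z in support]
        shift = max(exps)
        weights = [q * math.exp(e - shift) for q, e in zip(prior, exps)]
        total = sum(weights)
        return [w / total for w in weights]

    def mean(lam):
        return sum(p * z for p, z in zip(tilted(lam), support))

    # The tilted mean increases with lam, so widen the bracket until it
    # contains the target, then bisect.
    lo, hi = -1.0, 1.0
    while mean(lo) > target:
        lo *= 2.0
    while mean(hi) < target:
        hi *= 2.0
    for _ in range(200):
        if hi - lo <= tol:
            break
        mid = 0.5 * (lo + hi)
        if mean(mid) < target:
            lo = mid
        else:
            hi = mid
    return tilted(0.5 * (lo + hi))


# Hypothetical example: three support points for a commodity quantity,
# a symmetric prior, and a balance constraint that requires a mean of 105.
support = [80.0, 100.0, 120.0]
prior = [0.25, 0.5, 0.25]
posterior = min_cross_entropy(prior, support, target=105.0)
print(posterior)
```

When the target equals the prior mean, the constraint carries no new information and the weights stay at the prior. Otherwise the weights move away from the prior only as far as the constraint requires, which is the sense in which cross-entropy estimation reconciles prior information with balancing conditions.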