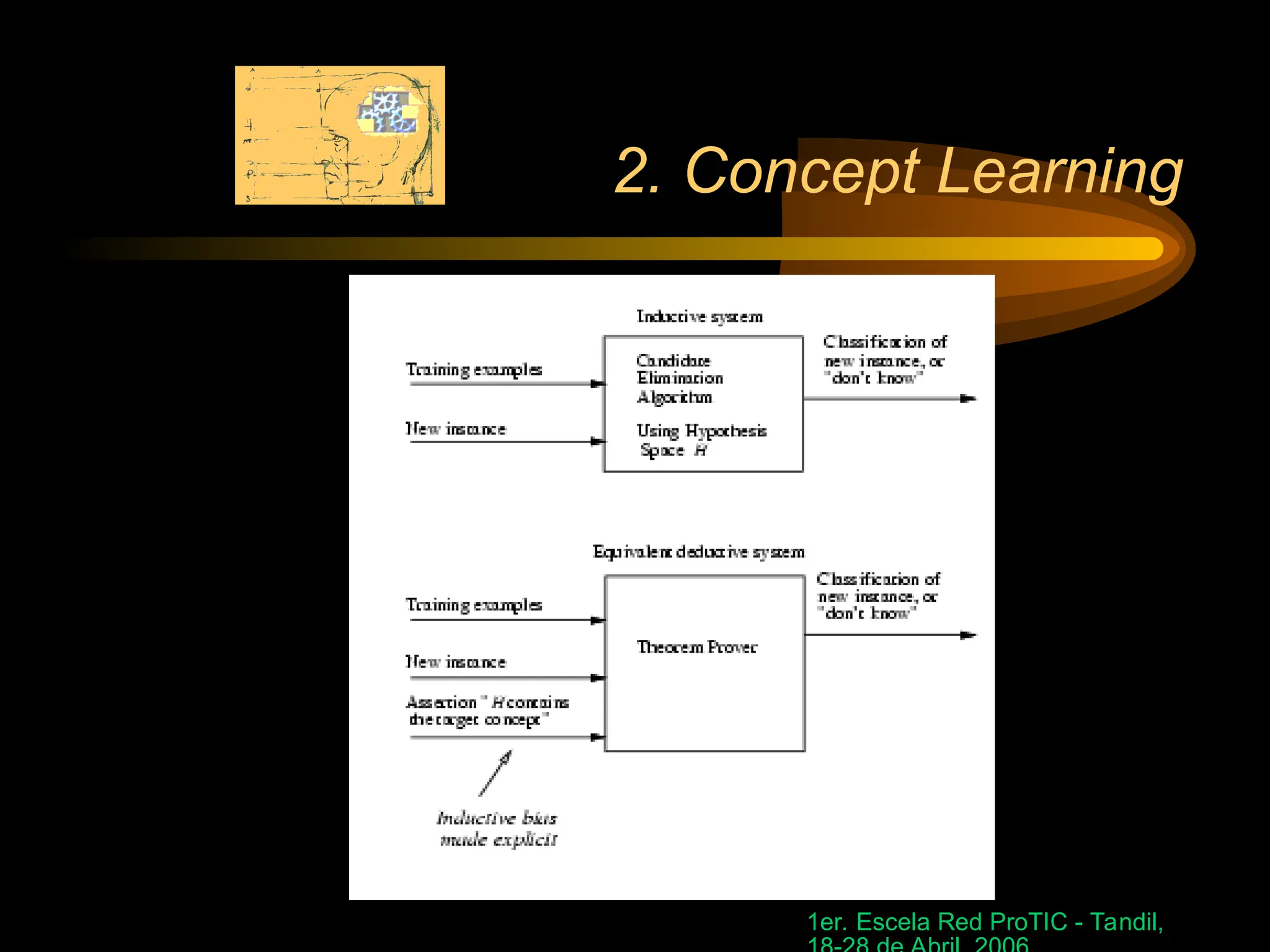

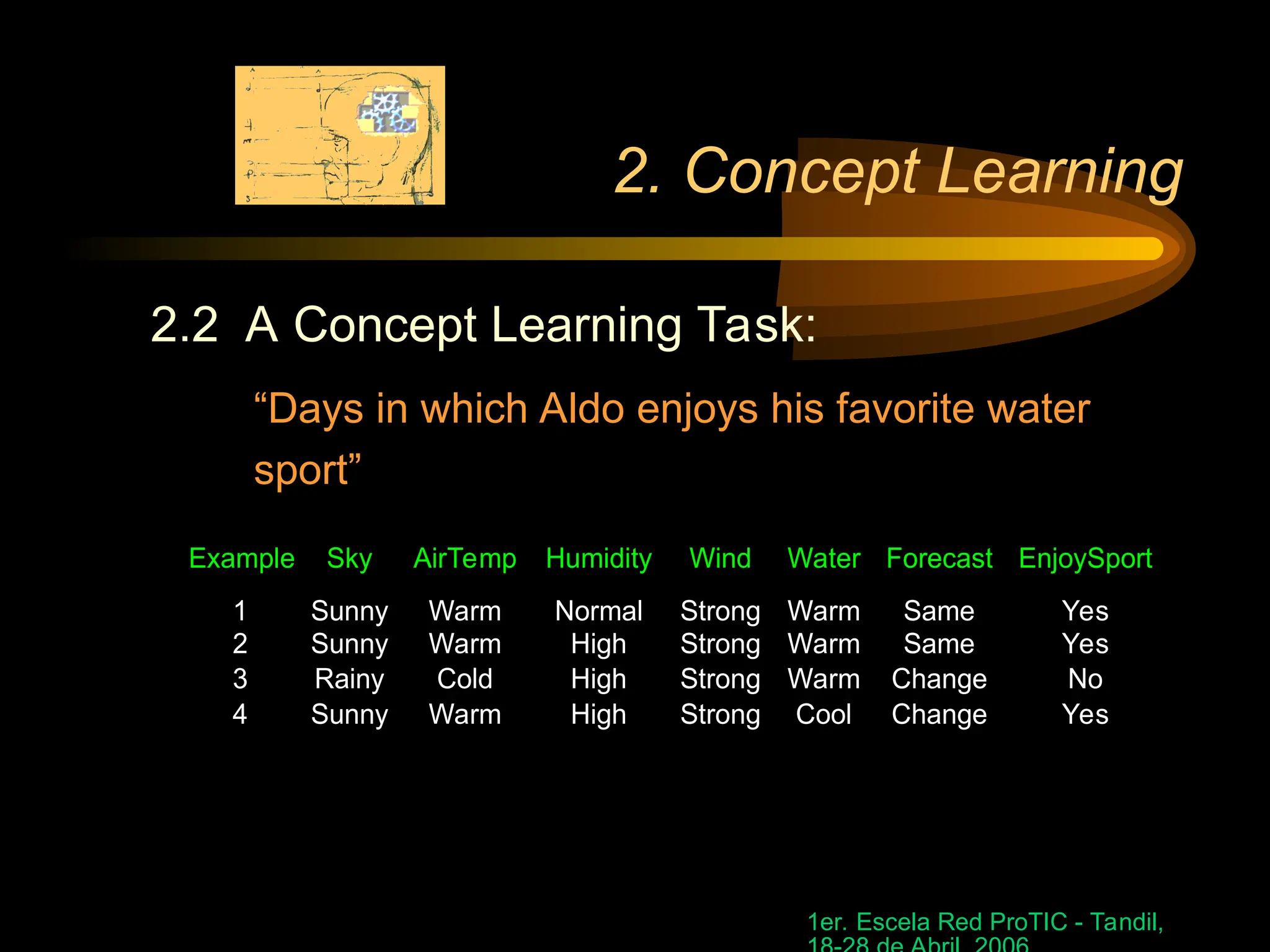

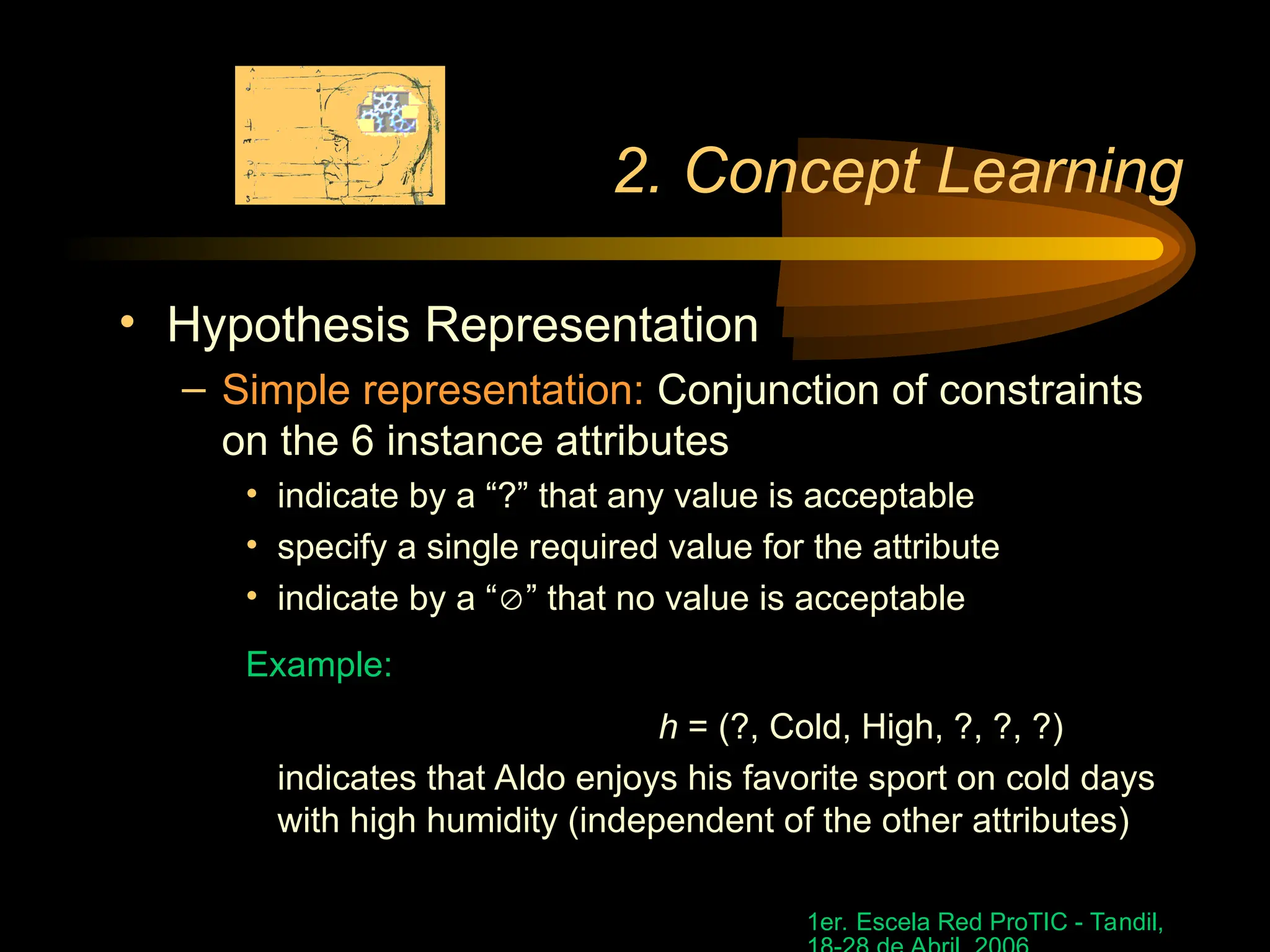

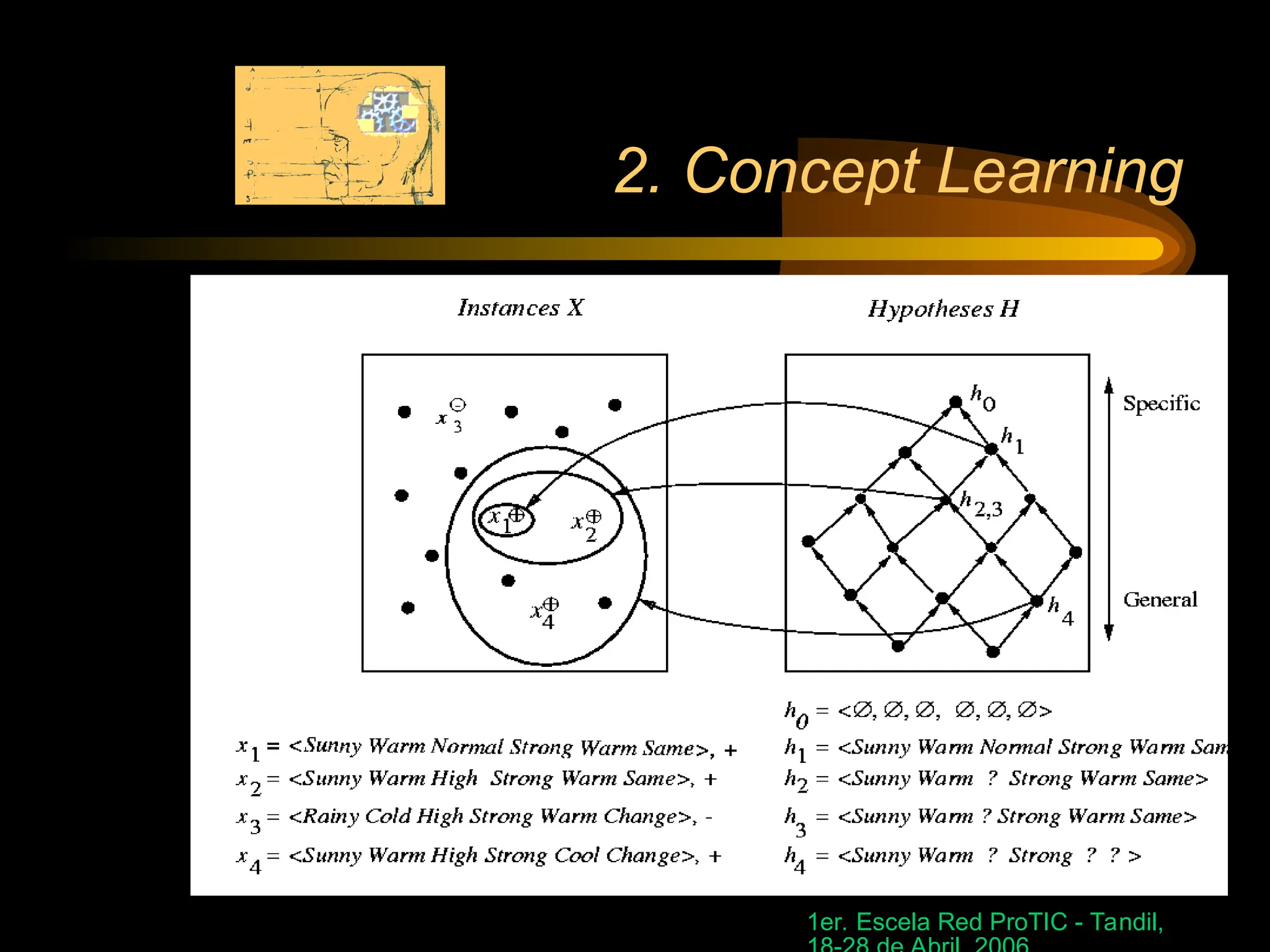

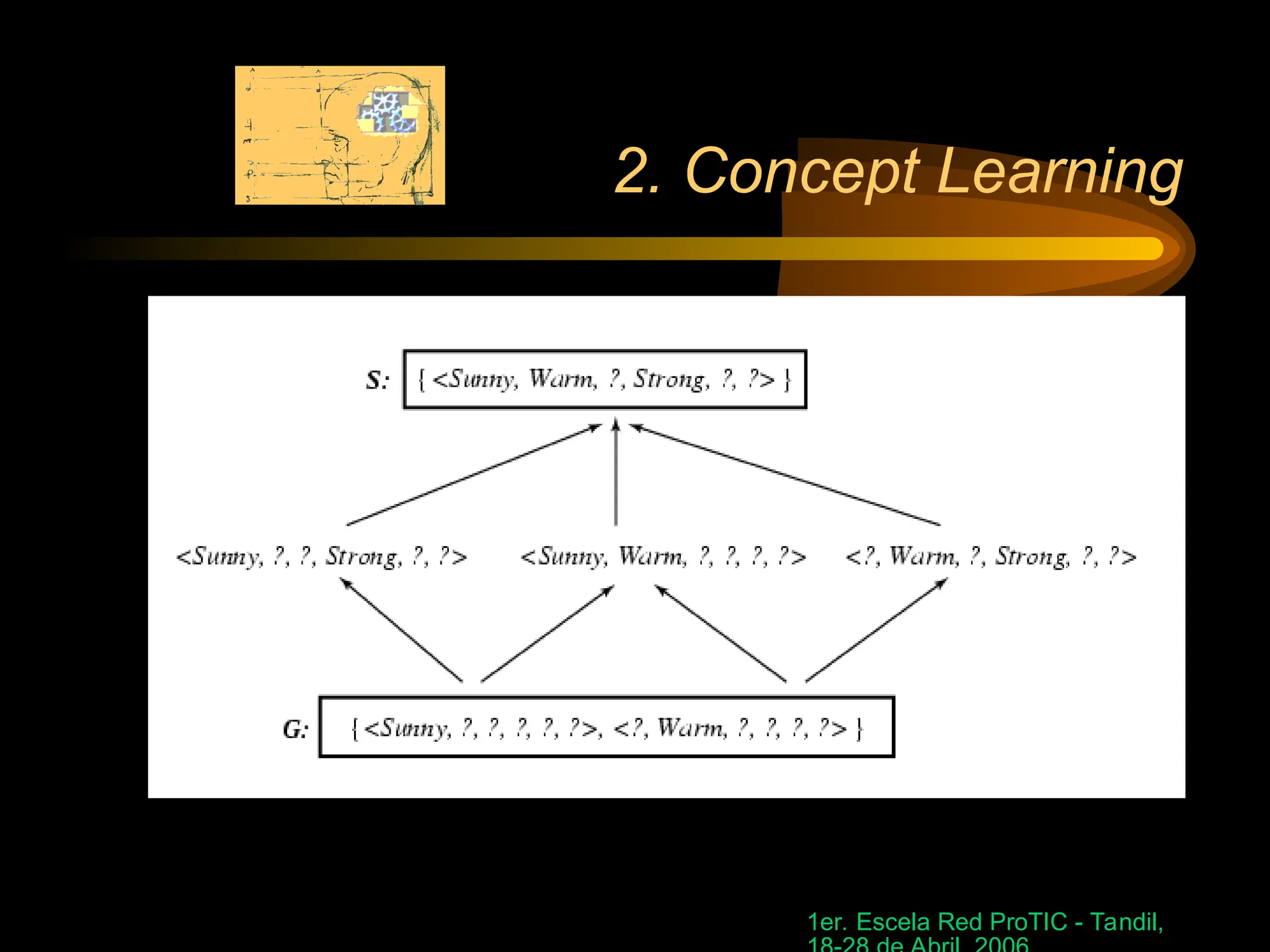

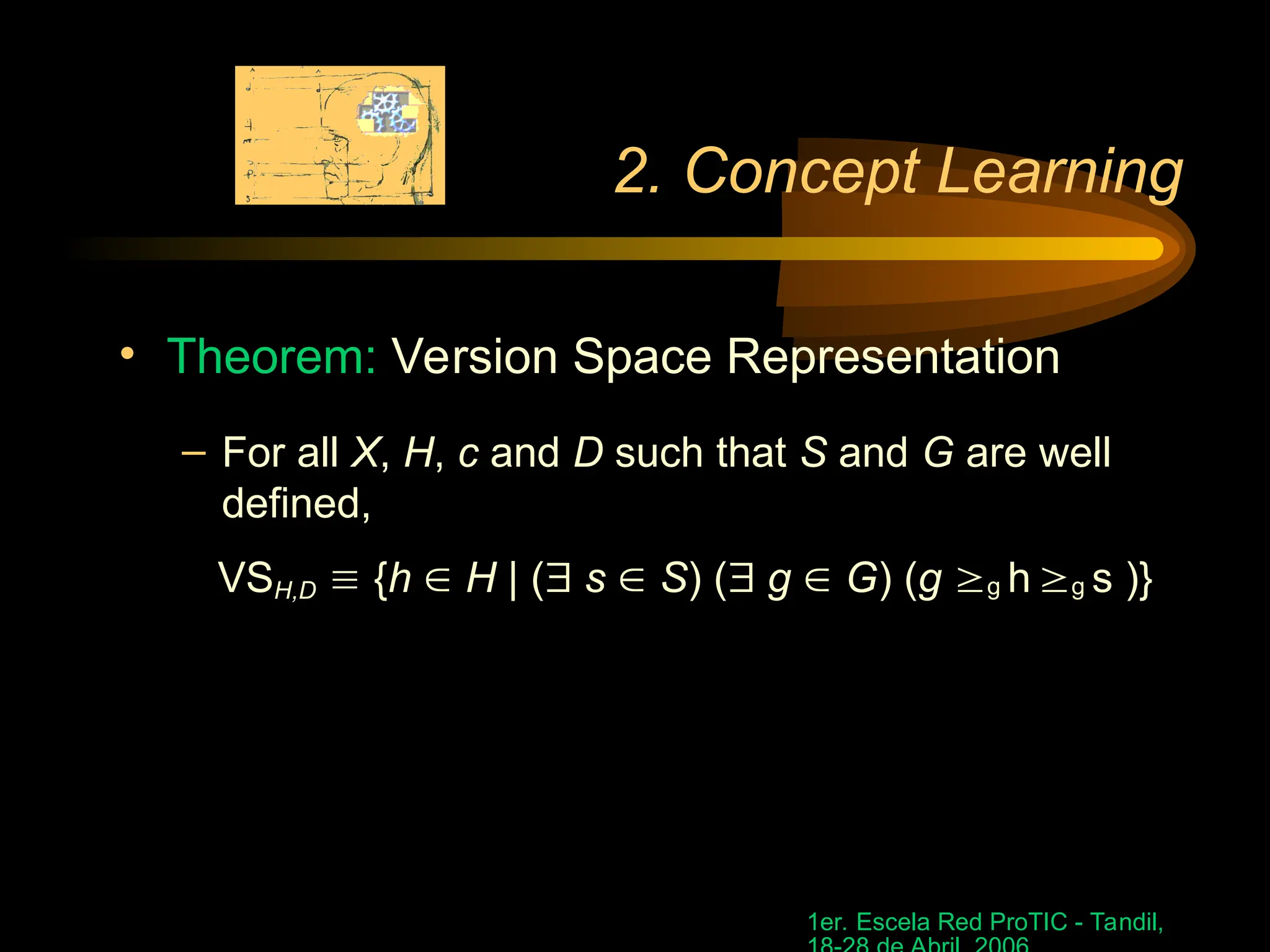

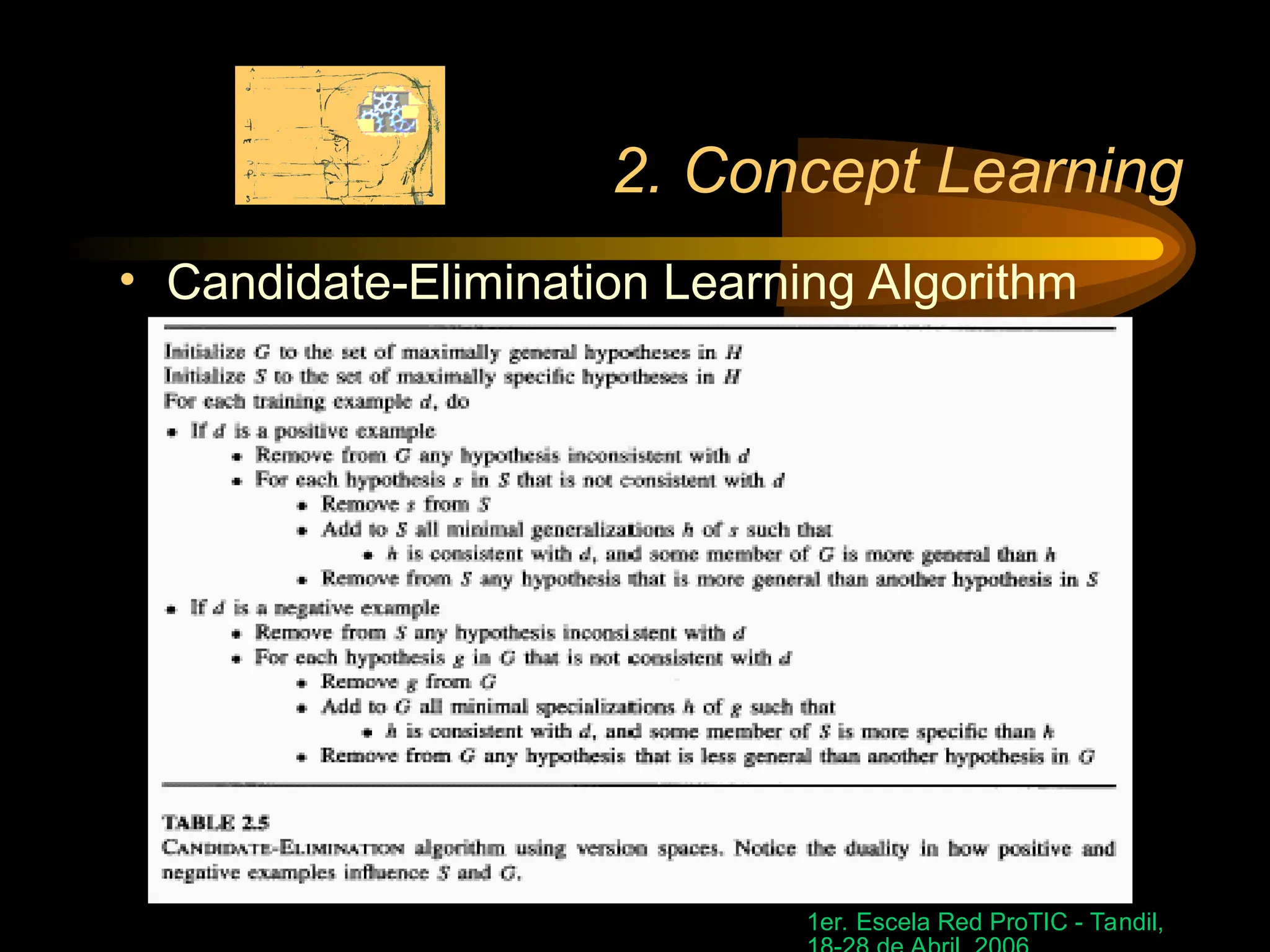

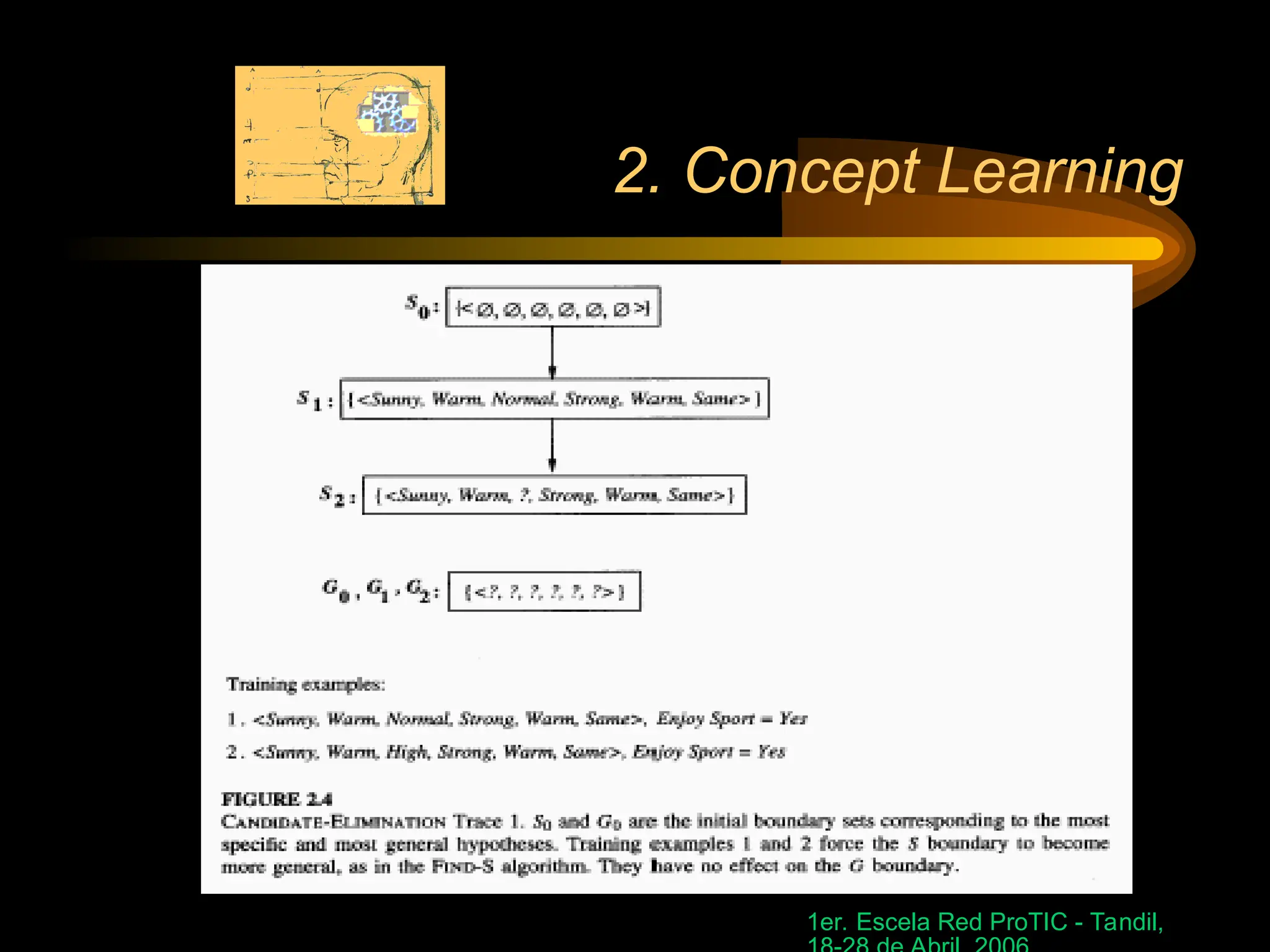

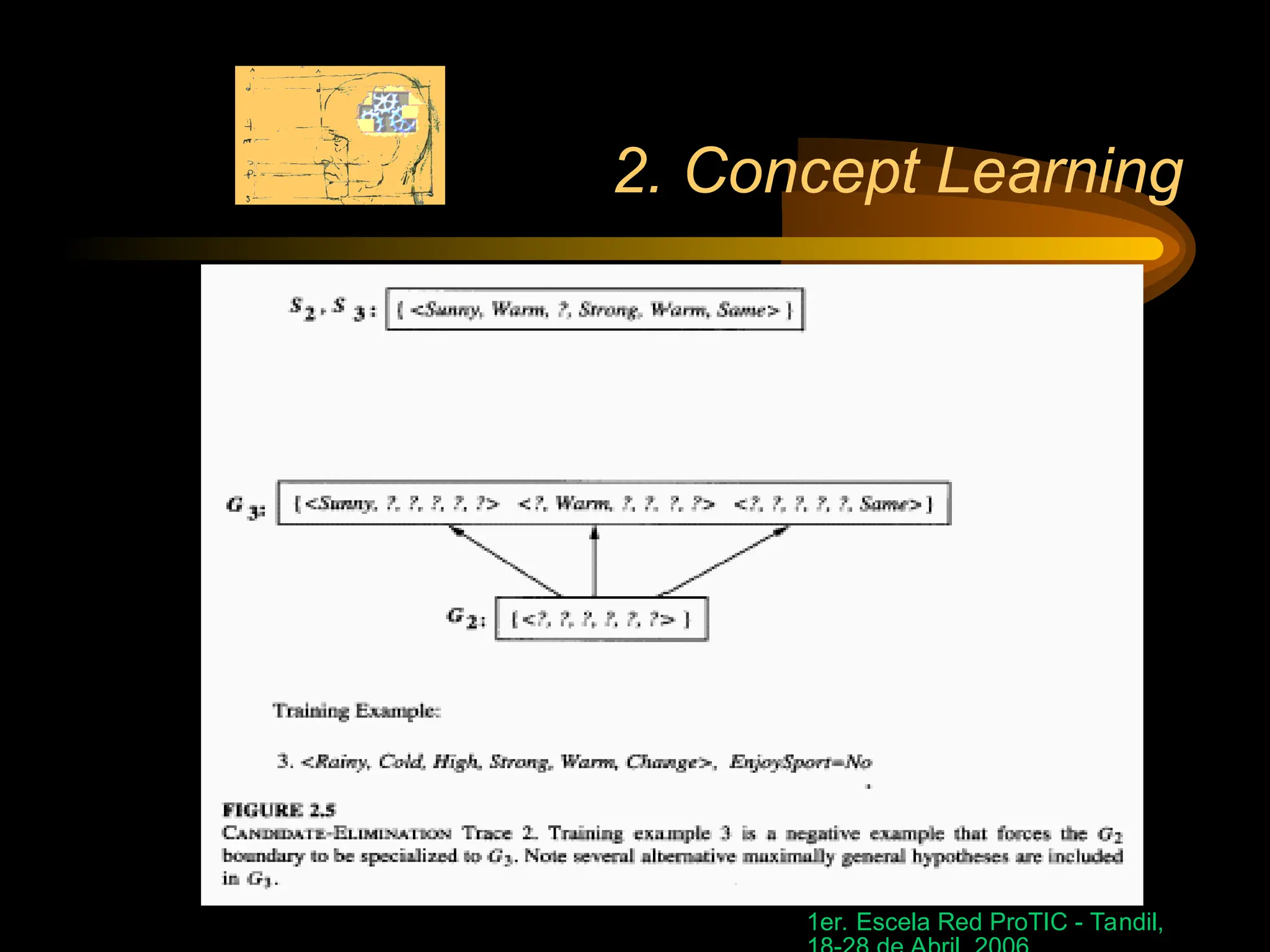

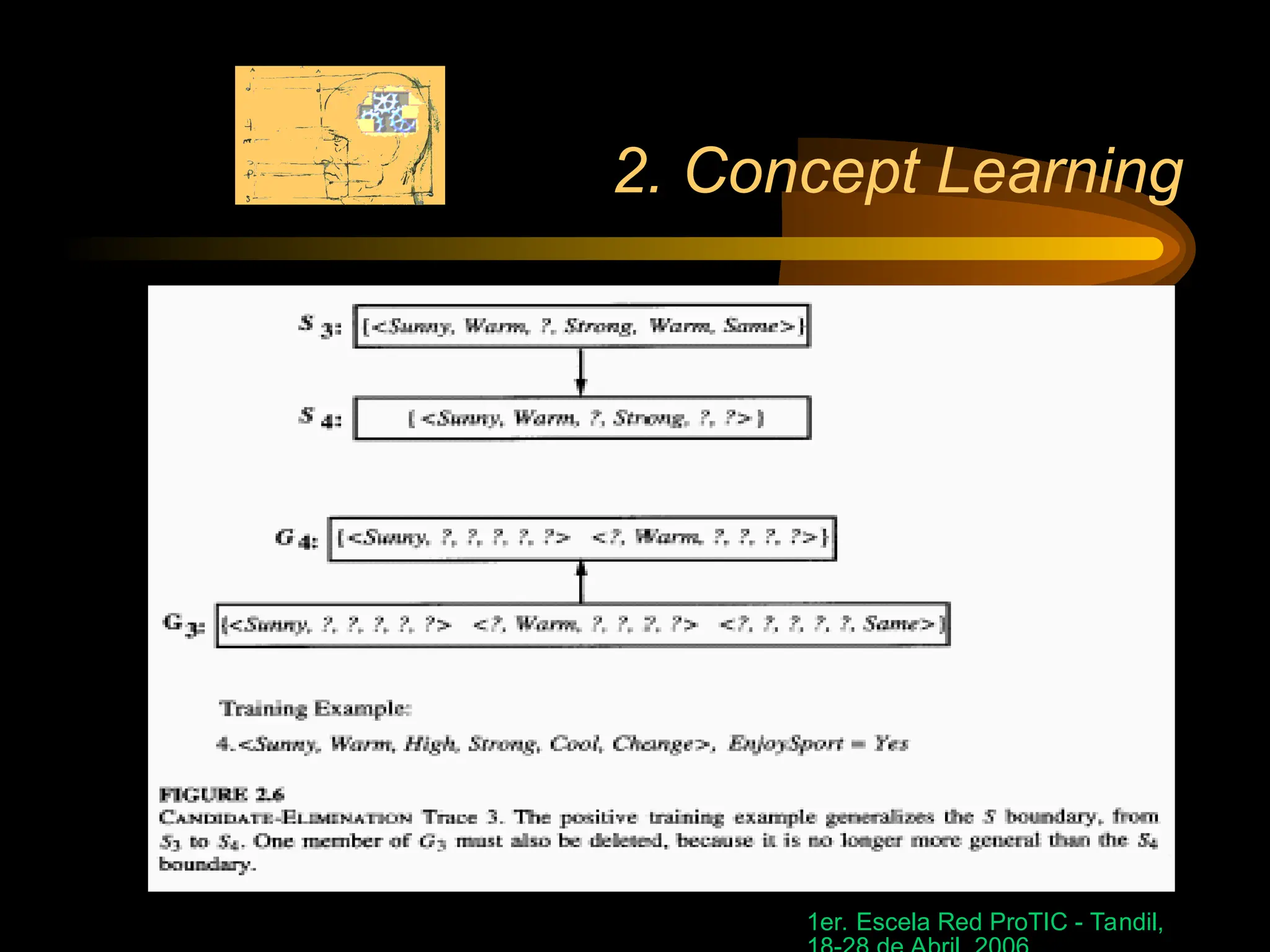

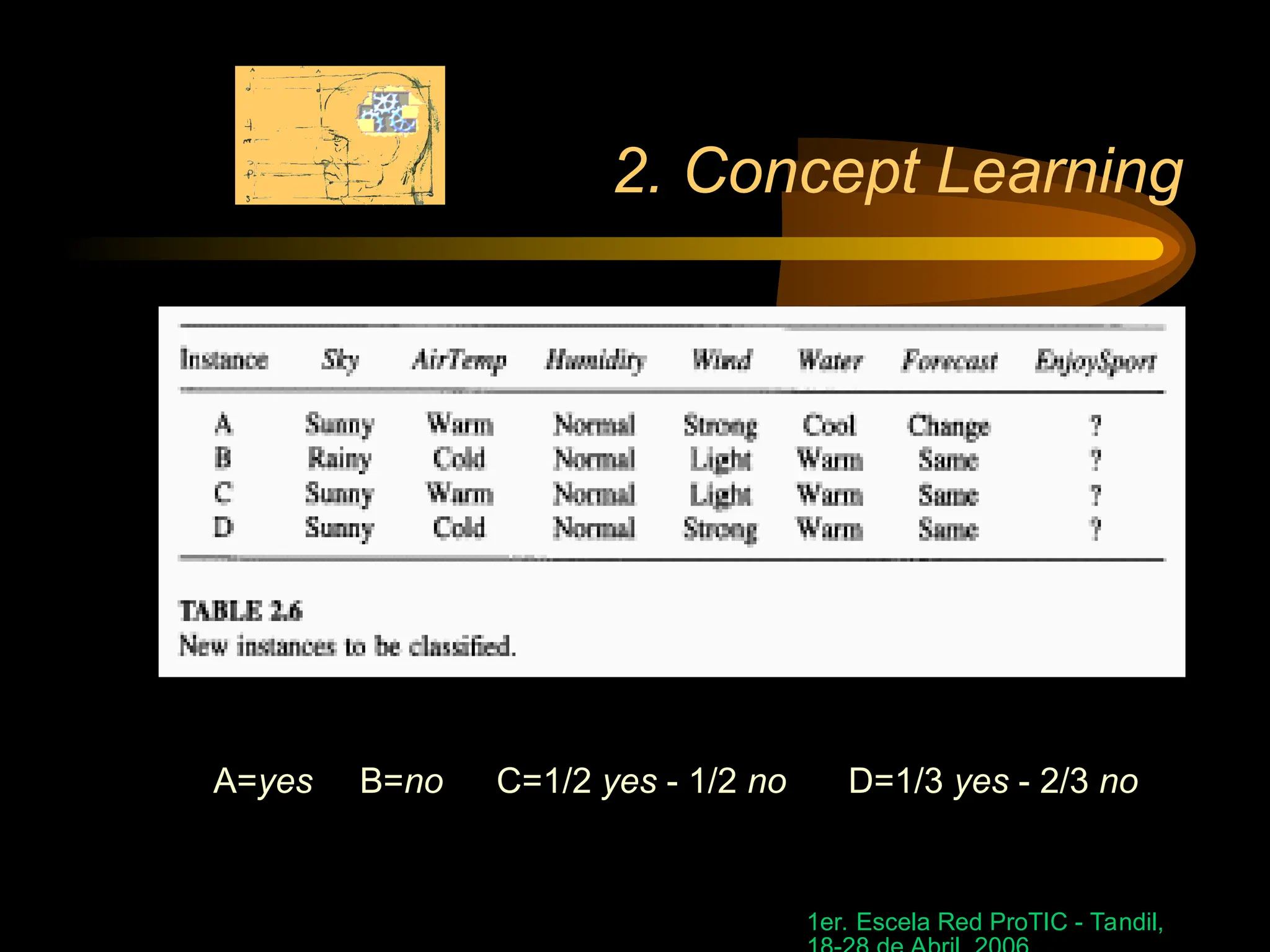

The document discusses concept learning, specifically focusing on inferring a boolean function from training examples and the representation of hypotheses. It explains the process of finding specific hypotheses using inductive learning, version spaces, and the candidate-elimination algorithm. Additionally, it addresses questions around convergence to correct hypotheses, the implications of bias in hypothesis spaces, and the effectiveness of inductive bias in learning tasks.

![1er. Escuela Red ProTIC - Tandil,

2. Concept Learning

• General-to-Specific Ordering of hypotheses

$h_1 = (\text{Sunny}, ?, ?, \text{Strong}, ?, ?)$, $h_2 = (\text{Sunny}, ?, ?, ?, ?, ?)$

Definition: $h_2$ is more_general_than_or_equal_to $h_1$

(written $h_2 \geq_g h_1$) if and only if

$(\forall x \in X) [(h_1(x) = 1) \rightarrow (h_2(x) = 1)]$

$\geq_g$ defines a partial order over the hypothesis space

for any concept learning problem](https://image.slidesharecdn.com/2conceptlearning-241015104031-853b792f/75/2_conceptlearning-in-machine-learning-ppt-9-2048.jpg)
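The definition on this slide can be checked directly by enumerating the instance space. The following is a minimal sketch, not the deck's own code: the attribute domains in `DOMAINS` are an assumption (the slide does not list them), the helper names `h_of` and `more_general_or_equal` are hypothetical, and `h3` is an extra hypothesis added only to show that the ordering is partial.

```python
from itertools import product

# Assumed attribute domains; the slide only shows the hypotheses, not the domains.
DOMAINS = [
    ("Sunny", "Cloudy", "Rainy"),  # Sky
    ("Warm", "Cold"),              # AirTemp
    ("Normal", "High"),            # Humidity
    ("Strong", "Weak"),            # Wind
    ("Warm", "Cool"),              # Water
    ("Same", "Change"),            # Forecast
]


def h_of(h, x):
    """Return h(x): 1 if every constraint in h is '?' or equals the value in x, else 0."""
    return int(all(c == "?" or c == v for c, v in zip(h, x)))


def more_general_or_equal(h2, h1, domains=DOMAINS):
    """Check h2 >=_g h1 by the definition: for all x in X, h1(x) = 1 implies h2(x) = 1."""
    return all(h_of(h2, x) == 1 for x in product(*domains) if h_of(h1, x) == 1)


h1 = ("Sunny", "?", "?", "Strong", "?", "?")
h2 = ("Sunny", "?", "?", "?", "?", "?")
h3 = ("?", "?", "?", "Weak", "?", "?")  # hypothetical, not on the slide

print(more_general_or_equal(h2, h1))  # h2 covers every instance h1 covers
print(more_general_or_equal(h1, h2))  # the converse does not hold
# h1 and h3 are incomparable, which is why the order is only partial.
print(more_general_or_equal(h1, h3), more_general_or_equal(h3, h1))
```

Enumerating all of $X$ is only practical for small attribute sets; it is used here because it mirrors the quantified definition term by term.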

![1er. Escuela Red ProTIC - Tandil,

2. Concept Learning

Notation ($\succ$: inductively inferred from):

$(D_c \wedge x_i) \succ L(x_i, D_c)$

Definition of inductive bias $B$:

$(\forall x_i \in X) [(B \wedge D_c \wedge x_i) \vdash L(x_i, D_c)]$

Inductive bias of the Candidate-Elimination algorithm:

The target concept c is contained in the hypothesis

space H](https://image.slidesharecdn.com/2conceptlearning-241015104031-853b792f/75/2_conceptlearning-in-machine-learning-ppt-30-2048.jpg)
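The role of this bias can be illustrated with a small sketch. If the target concept $c$ is assumed to lie in $H$, then any label on which every hypothesis consistent with $D_c$ agrees follows deductively from $B \wedge D_c \wedge x_i$. The code below is an illustration under stated assumptions, not the Candidate-Elimination algorithm itself: it builds the version space by brute-force enumeration instead of maintaining the S and G boundary sets, the three-attribute `DOMAINS` and the training set `D` are toy data, the all-negative hypothesis is omitted, and all function names are hypothetical.

```python
from itertools import product

# Toy attribute domains, assumed for illustration only.
DOMAINS = [("Sunny", "Rainy"), ("Warm", "Cold"), ("Strong", "Weak")]


def h_of(h, x):
    """Return h(x): 1 if every constraint in h is '?' or equals the value in x, else 0."""
    return int(all(c == "?" or c == v for c, v in zip(h, x)))


def hypothesis_space(domains):
    """All conjunctive hypotheses: each constraint is a specific value or '?'."""
    return list(product(*[values + ("?",) for values in domains]))


def version_space(H, D):
    """Hypotheses in H consistent with every labeled example (x, c(x)) in D."""
    return [h for h in H if all(h_of(h, x) == label for x, label in D)]


def classify(x, D, domains=DOMAINS):
    """L(x, D): the unanimous label of the version space, or None if its members disagree."""
    votes = {h_of(h, x) for h in version_space(hypothesis_space(domains), D)}
    return votes.pop() if len(votes) == 1 else None


# Toy training data D_c: one positive and one negative example.
D = [
    (("Sunny", "Warm", "Strong"), 1),
    (("Rainy", "Cold", "Strong"), 0),
]

print(len(version_space(hypothesis_space(DOMAINS), D)))  # 6 consistent hypotheses
print(classify(("Sunny", "Warm", "Strong"), D))  # 1: all members agree
print(classify(("Rainy", "Cold", "Weak"), D))    # 0: all members agree
print(classify(("Sunny", "Warm", "Weak"), D))    # None: members disagree
```

The `None` case is where the learner declines to classify: without a stronger bias, the training data and the assumption $c \in H$ do not entail a label for that instance.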