This document discusses concept learning through inductive logic: inferring a general concept from specific labeled examples. It introduces the following ideas:

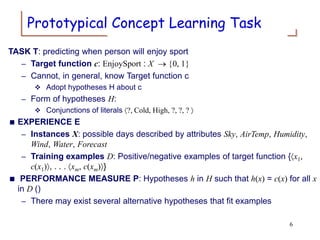

- Training examples that serve as positive and negative instances of a target concept

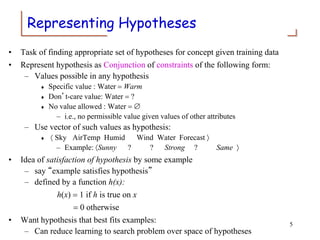

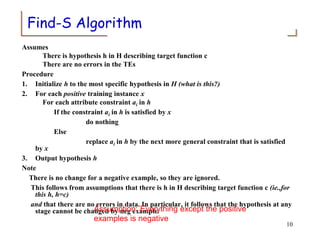

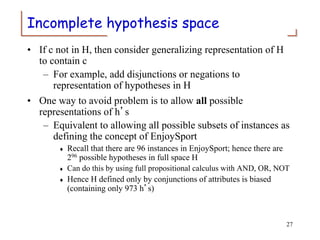

- Hypotheses that attempt to represent the target concept in the form of logical rules

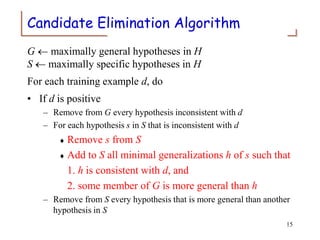

- The version space that contains all hypotheses consistent with the training examples

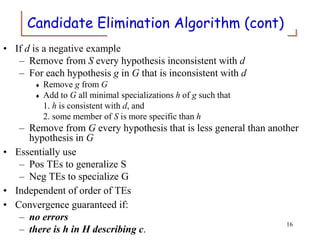

- Candidate elimination, an algorithm that updates the version space with each new training example, generalizing its most specific hypotheses on positive examples and specializing its most general hypotheses on negative examples

- The need for an appropriate hypothesis space and inductive bias to allow learning from a limited number of examples and enable generalization to new cases
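
The ideas listed above can be made concrete with a small sketch. The code below is a minimal illustration of candidate elimination, not a definitive implementation. It assumes a hypothesis space of conjunctive rules, where each attribute is constrained to a single value, to `"?"` (any value), or to `None` (no value). The attribute domains, the toy examples, and all function names are hypothetical and chosen only for illustration.

```python
ANY = "?"    # wildcard constraint: any attribute value is acceptable
NONE = None  # empty constraint: no attribute value is acceptable

def matches(h, x):
    """True if hypothesis h classifies instance x as positive."""
    return all(c == ANY or c == v for c, v in zip(h, x))

def more_general_or_equal(h1, h2):
    """True if h1 covers every instance that h2 covers."""
    if NONE in h2:  # h2 covers nothing, so any h1 is at least as general
        return True
    return all(a == ANY or a == b for a, b in zip(h1, h2))

def generalize(s, x):
    """Minimal generalization of s that also covers the positive instance x."""
    return tuple(v if c is NONE else (c if c == v else ANY)
                 for c, v in zip(s, x))

def specializations(g, x, domains):
    """Minimal specializations of g that exclude the negative instance x."""
    out = []
    for i, c in enumerate(g):
        if c == ANY:
            for value in domains[i]:
                if value != x[i]:
                    out.append(g[:i] + (value,) + g[i + 1:])
    return out

def candidate_elimination(examples, domains):
    """Return the specific boundary S and general boundary G of the version space."""
    n = len(domains)
    S = {(NONE,) * n}  # most specific hypothesis: covers nothing
    G = {(ANY,) * n}   # most general hypothesis: covers everything
    for x, is_positive in examples:
        if is_positive:
            # Drop general hypotheses that miss x, then generalize S to cover x.
            G = {g for g in G if matches(g, x)}
            S = {generalize(s, x) for s in S}
            S = {s for s in S if any(more_general_or_equal(g, s) for g in G)}
        else:
            # Drop specific hypotheses that cover x, then specialize G to exclude x.
            S = {s for s in S if not matches(s, x)}
            new_G = set()
            for g in G:
                if not matches(g, x):
                    new_G.add(g)
                    continue
                for h in specializations(g, x, domains):
                    if any(more_general_or_equal(h, s) for s in S):
                        new_G.add(h)
            # Keep only the maximally general members.
            G = {g for g in new_G
                 if not any(h != g and more_general_or_equal(h, g) for h in new_G)}
    return S, G

# Hypothetical toy data: attributes are (sky, temperature, wind).
DOMAINS = [("sunny", "rainy"), ("warm", "cold"), ("strong", "weak")]
EXAMPLES = [
    (("sunny", "warm", "strong"), True),
    (("rainy", "cold", "strong"), False),
    (("sunny", "warm", "weak"), True),
]

S, G = candidate_elimination(EXAMPLES, DOMAINS)
print(sorted(S))  # [('sunny', 'warm', '?')]
print(sorted(G))  # [('?', 'warm', '?'), ('sunny', '?', '?')]
```

Every hypothesis lying between `S` and `G` in the generality ordering is consistent with the three examples, so the two boundaries together describe the whole version space. Restricting hypotheses to conjunctions of attribute constraints is the inductive bias of this sketch: it is what lets three examples narrow the space this far, and it also means a target concept that is not a conjunction cannot be represented.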