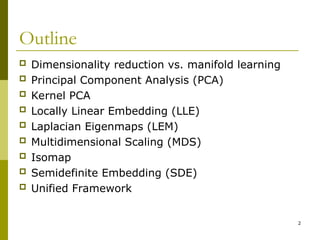

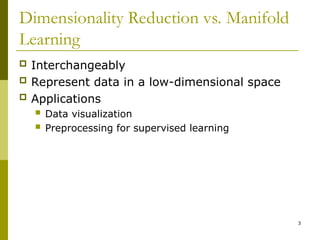

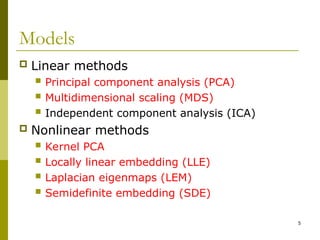

The document provides an overview of dimensionality reduction techniques, contrasting it with manifold learning and outlining several methods including Principal Component Analysis (PCA), Kernel PCA, and Locally Linear Embedding (LLE). It highlights the historical background, formulation, and applications of these techniques, emphasizing the unified framework of Kernel PCA. The document concludes with a summary of the seven dimensionality reduction methods discussed and their common basis.