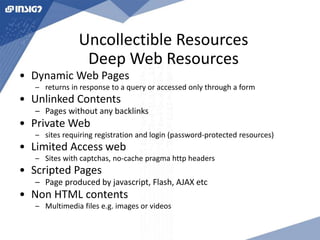

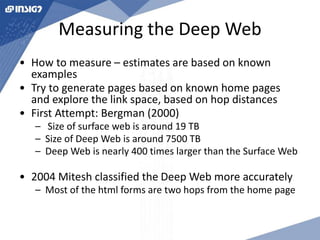

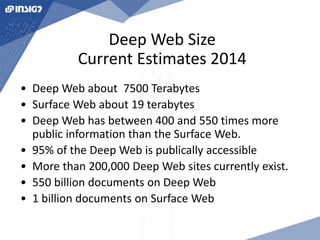

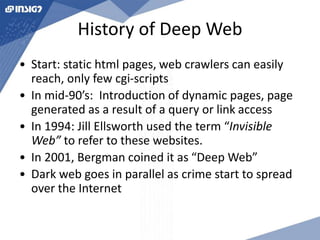

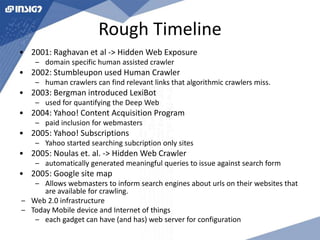

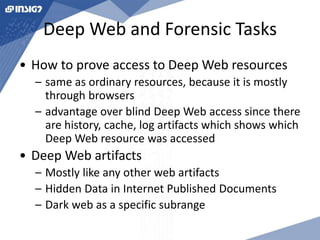

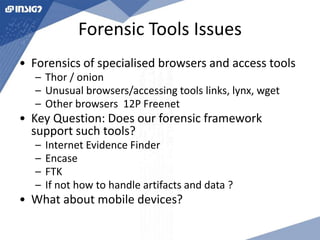

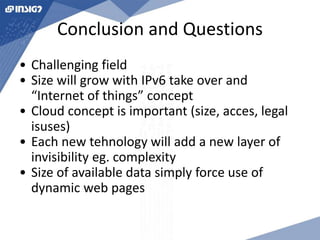

The document provides an overview of the deep web and digital investigations. It defines the deep web as data that exists on the internet but cannot be reached or indexed by regular search engines. This includes dynamically generated web pages, private websites that require a login, and files that are accessible only through direct filesystem access. The document estimates that the deep web is 400-550 times larger than the surface web, which search engines do index. Standard digital forensic procedures can be applied to deep web investigations, although tools may need to be adapted to handle the specialized browsers and access methods used to retrieve deep web resources.
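Cryptographic hashing of acquired evidence is one widely used standard forensic procedure. It carries over unchanged whether an item came from an indexed site or from a login-protected or dynamically generated deep web resource. The document does not prescribe a specific method, so the following is only a minimal sketch under stated assumptions. It assumes SHA-256 as the hash and a local file (for example, a saved page or a disk image) as the evidence item. The function names `hash_evidence` and `verify_evidence` are hypothetical.

```python
import hashlib


def hash_evidence(path: str, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file (hypothetical helper).

    The file is read in fixed-size chunks so that large items such as
    disk images do not need to fit in memory.
    """
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        # iter() with a sentinel keeps calling read() until it returns b"" (EOF)
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_evidence(path: str, recorded_hash: str) -> bool:
    """Re-hash the item and compare it with the digest logged at acquisition."""
    return hash_evidence(path) == recorded_hash.lower()
```

In a typical workflow, an investigator would record `hash_evidence("saved_page.html")` when the item is collected, then call `verify_evidence` with that recorded value before analysis to show that the copy has not changed. Here, `saved_page.html` is a placeholder file name.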