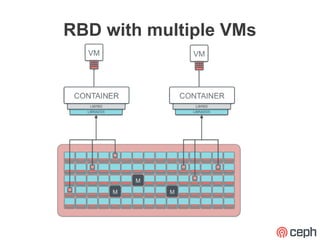

This document discusses using Ceph RBD (RADOS Block Device) in cloud computing environments. Key points include:

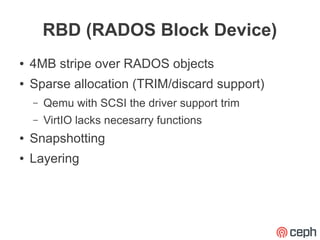

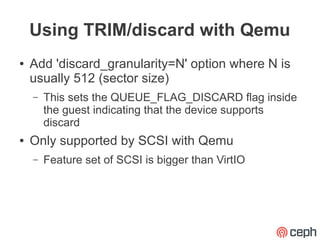

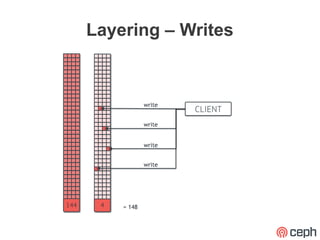

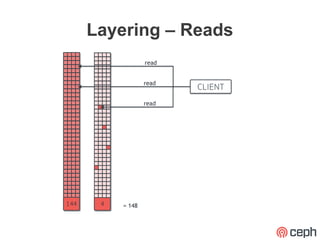

- RBD provides high-performance block storage by striping each image across objects in the Ceph cluster. It supports features such as discard/trim, snapshots, and layering.

- RBD has been integrated into OpenStack, CloudStack, and Proxmox so that virtual machines can use Ceph block storage. OpenStack currently offers the most complete integration.

- Best practices for using RBD in clouds include enabling journaling and caching, and understanding workload characteristics such as random I/O patterns and a 70/30 read/write split.

- While some basic functionality is still missing in some integrations,