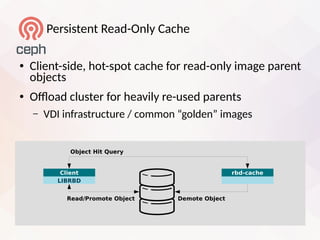

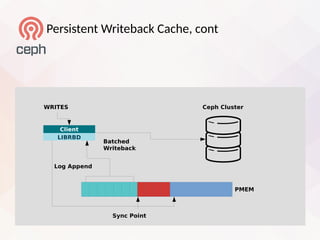

The document discusses the future developments of the RADOS Block Device (RBD) within the Ceph storage system, highlighting new features like deep-copy of images, live image migration, and enhancements in image cloning. It also mentions improvements to mirroring capabilities, support for active-active configurations, and ongoing performance optimizations. Additionally, it outlines upcoming features and work-in-progress aimed at enhancing usability and performance in Ceph deployments.

![Image Cloning (v2)

● Simplified operations for existing clone feature

– No change to data flow handling

– Supported in krbd (new feature bit whitelisted)

● Parent snapshots no longer need to be protected

– Atomic reference counting in the OSD

– OSD “profile rbd[-read-only]” caps whitelist support

● Snapshots with linked children can be “deleted”

– In reality: moved to “trash” snapshot namespace

– Trashed snapshot auto-removed](https://image.slidesharecdn.com/01-rbdwhatwillthefuturebringjasondillaman-180419172121/85/RBD-What-will-the-future-bring-Jason-Dillaman-10-320.jpg)
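The clone v2 snapshot lifecycle above (no protect step, reference counting, "delete" meaning a move to the trash namespace, then automatic cleanup) can be pictured as a small state machine. The following is a toy in-memory Python model, not librbd code. The class and method names (`ParentImage`, `attach_child`, `detach_child`, `remove_snapshot`) are hypothetical. The model assumes that a trashed snapshot is auto-removed once its last linked child goes away, for example when the clone is removed or flattened.

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class Snapshot:
    snap_id: int
    name: str
    namespace: str = "user"  # "user" or "trash"
    child_refs: int = 0      # stands in for the OSD-side reference count


@dataclass
class ParentImage:
    """Toy model of clone v2 snapshot handling (hypothetical, not librbd)."""
    snapshots: dict = field(default_factory=dict)  # snap_id -> Snapshot
    next_id: int = 1

    def create_snapshot(self, name: str) -> int:
        snap_id = self.next_id
        self.next_id += 1
        self.snapshots[snap_id] = Snapshot(snap_id, name)
        return snap_id

    def attach_child(self, snap_id: int) -> None:
        # No "rbd snap protect" step: a clone just takes a reference.
        self.snapshots[snap_id].child_refs += 1

    def detach_child(self, snap_id: int) -> None:
        # Assumed to run when a clone is removed or flattened.
        snap = self.snapshots[snap_id]
        snap.child_refs -= 1
        if snap.namespace == "trash" and snap.child_refs == 0:
            del self.snapshots[snap_id]  # trashed snapshot auto-removed

    def remove_snapshot(self, snap_id: int) -> None:
        snap = self.snapshots[snap_id]
        if snap.child_refs > 0:
            # Still has linked children: move it to the "trash" namespace and
            # give it a UUID name, mirroring the sample "rbd snap ls --all" output.
            snap.namespace = "trash"
            snap.name = str(uuid.uuid4())
        else:
            del self.snapshots[snap_id]

    def list_snapshots(self, include_all: bool = False) -> list:
        # Trashed snapshots are only listed when --all is requested.
        return [s for s in self.snapshots.values()
                if include_all or s.namespace == "user"]


if __name__ == "__main__":
    parent = ParentImage()
    sid = parent.create_snapshot("snap")   # rbd snap create parent@snap
    parent.attach_child(sid)               # rbd clone parent@snap child
    parent.remove_snapshot(sid)            # rbd snap rm parent@snap
    print(len(parent.list_snapshots()))    # 0
    print([s.namespace for s in parent.list_snapshots(include_all=True)])  # ['trash']
    parent.detach_child(sid)               # child removed
    print(len(parent.list_snapshots(include_all=True)))  # 0
```

The reference count is the key design point. Because the parent snapshot knows how many clones still depend on it, the user-facing "delete" can succeed immediately while the data stays reachable for the children.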

![Image Cloning (v2), cont

● Support enabled automatically when Mimic clients are required

– “ceph osd set-require-min-compat-client mimic”

– Config override: “rbd default clone format = [1, 2, auto]”

● Usage:

– “rbd snap create <parent-image>@<snap>”

– “rbd clone <parent-image>@<snap> <child image>”

– “rbd snap rm <parent-image>@<snap>”

– “rbd snap ls --all parent”

SNAPID NAME SIZE TIMESTAMP NAMESPACE

4 29da36c8-9ff6-46b7-9888-9e9804c22525 1024 kB Sun Mar 18 20:41:13 2018 trash](https://image.slidesharecdn.com/01-rbdwhatwillthefuturebringjasondillaman-180419172121/85/RBD-What-will-the-future-bring-Jason-Dillaman-11-320.jpg)
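The interaction between the `rbd default clone format` override and the Mimic client requirement can be sketched as a small decision function. This is a hedged Python illustration, not Ceph source. `resolve_clone_format` is a hypothetical helper, and `RELEASES` is only an illustrative subset of the Ceph release order. The sketch assumes that `auto` selects format 2 exactly when the required minimum client release is Mimic or later. It returns an explicit `1` or `2` unchanged and does not model any validation librbd itself may perform.

```python
# Illustrative, ordered subset of Ceph release names (used only for comparison).
RELEASES = ["jewel", "kraken", "luminous", "mimic", "nautilus"]


def resolve_clone_format(setting: str, min_compat_client: str) -> int:
    """Pick the clone format for a new clone (hypothetical helper).

    setting: value of "rbd default clone format": "1", "2" or "auto".
    min_compat_client: release set with
        "ceph osd set-require-min-compat-client <release>".
    """
    if setting in ("1", "2"):
        return int(setting)  # explicit override is returned as-is
    if setting != "auto":
        raise ValueError(f"unknown clone format setting: {setting!r}")
    # auto: use v2 clones only if every client is guaranteed to be Mimic+
    if RELEASES.index(min_compat_client) >= RELEASES.index("mimic"):
        return 2
    return 1


if __name__ == "__main__":
    print(resolve_clone_format("auto", "mimic"))     # 2
    print(resolve_clone_format("auto", "luminous"))  # 1
```

With `auto`, raising the minimum client release to Mimic is the only step needed to start creating v2 clones. Older clusters keep producing format 1 clones that pre-Mimic clients can still open.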

![Performance Improvements

● Current client libraries

Flame graph of an fio rbd_aio_read workload through librbd, the Objecter and the AsyncMessenger (msgr-worker) threads](https://image.slidesharecdn.com/01-rbdwhatwillthefuturebringjasondillaman-180419172121/85/RBD-What-will-the-future-bring-Jason-Dillaman-26-320.jpg)

![Performance Improvements, cont

● Tweaked client libraries

Flame graph of the same fio rbd_aio_read workload through librbd, the Objecter and the AsyncMessenger (msgr-worker) threads](https://image.slidesharecdn.com/01-rbdwhatwillthefuturebringjasondillaman-180419172121/85/RBD-What-will-the-future-bring-Jason-Dillaman-27-320.jpg)