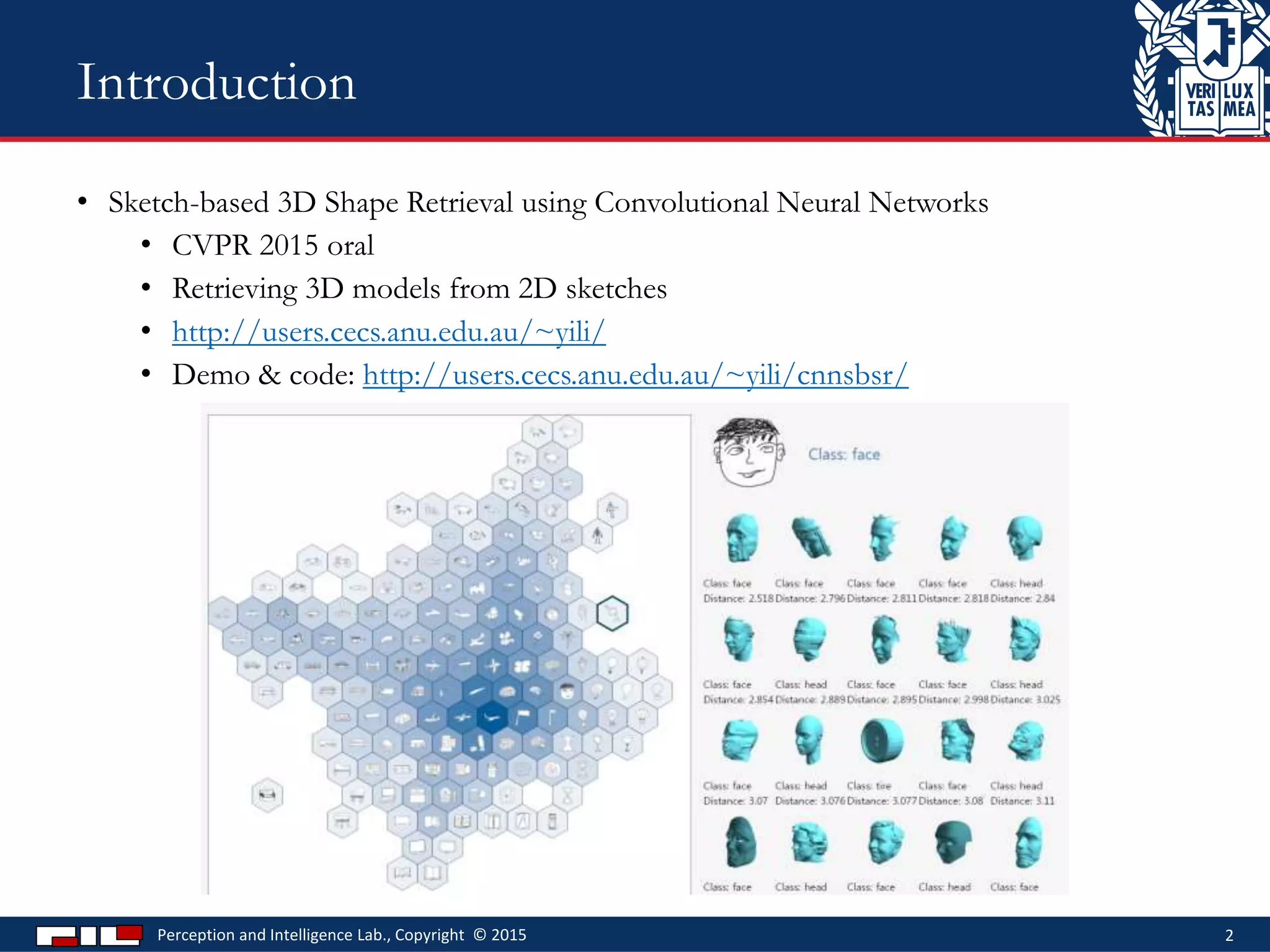

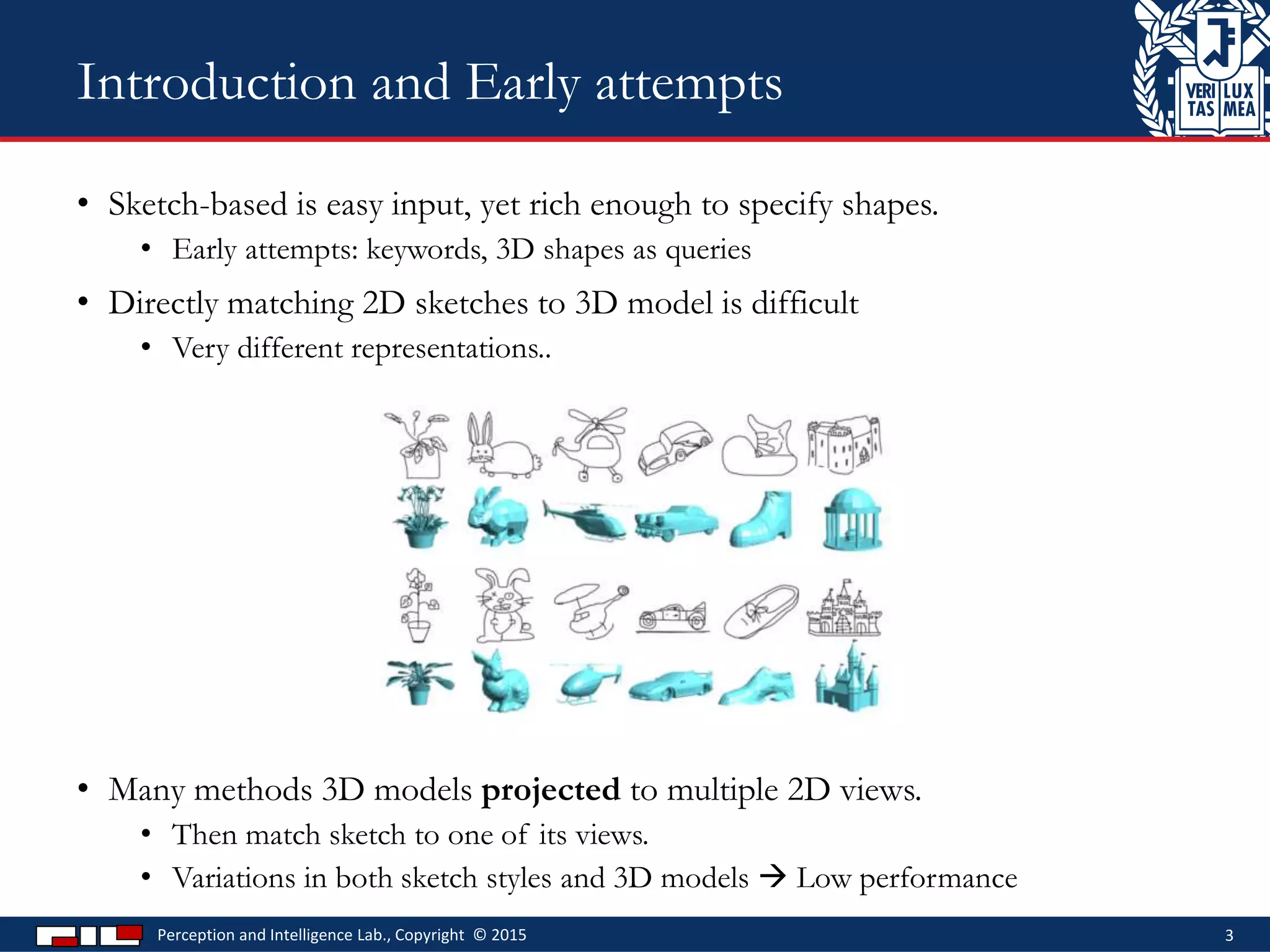

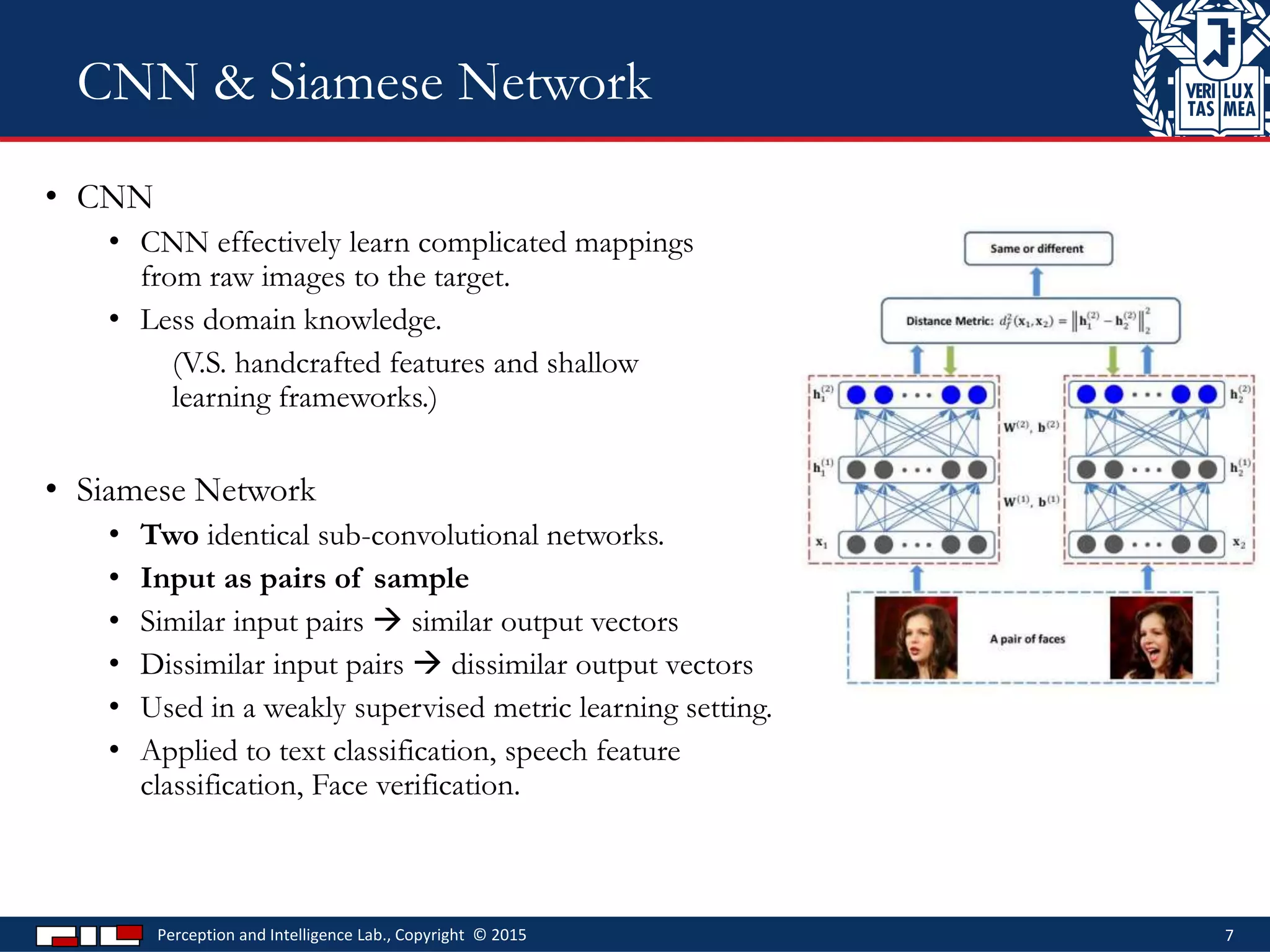

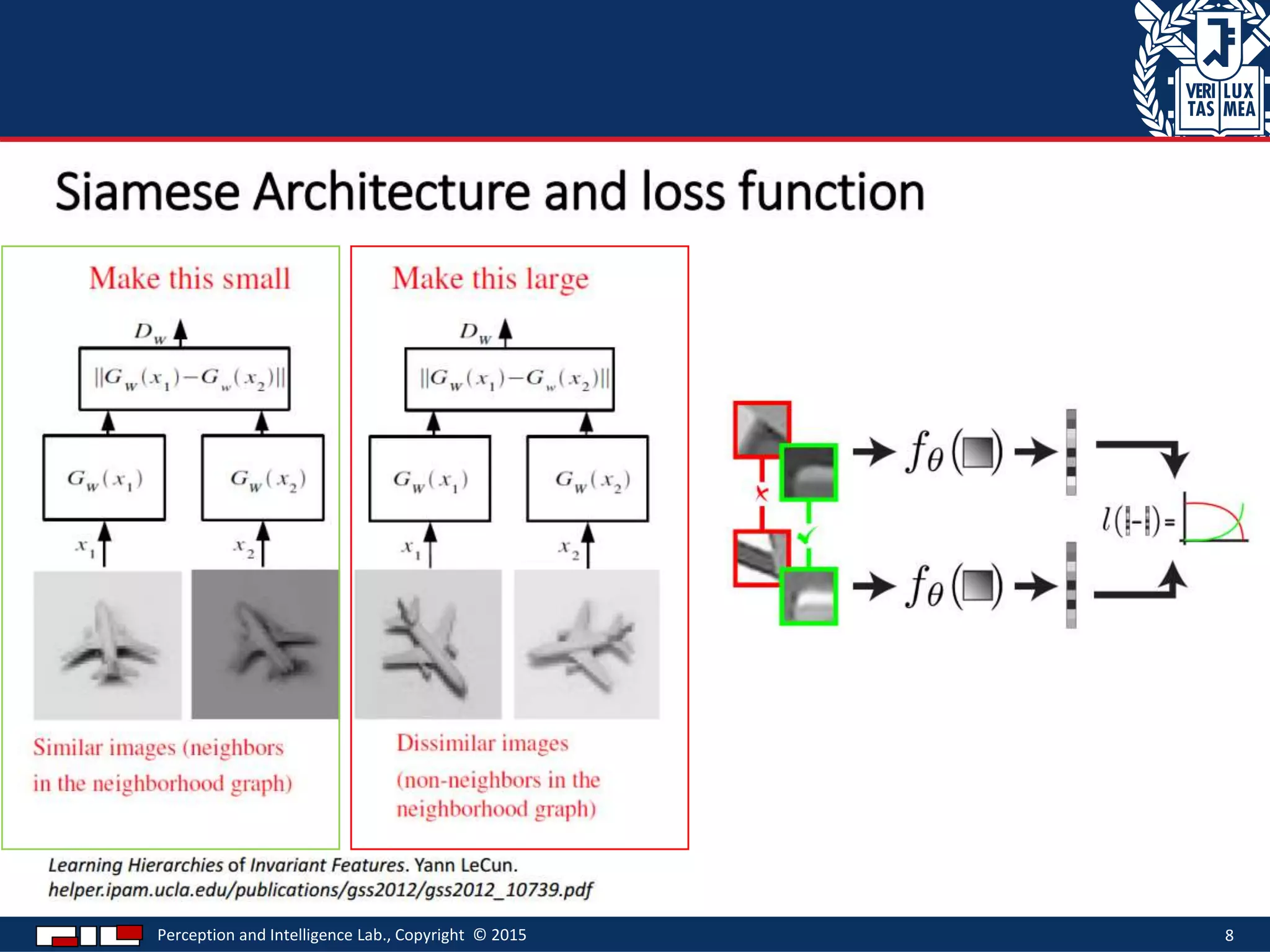

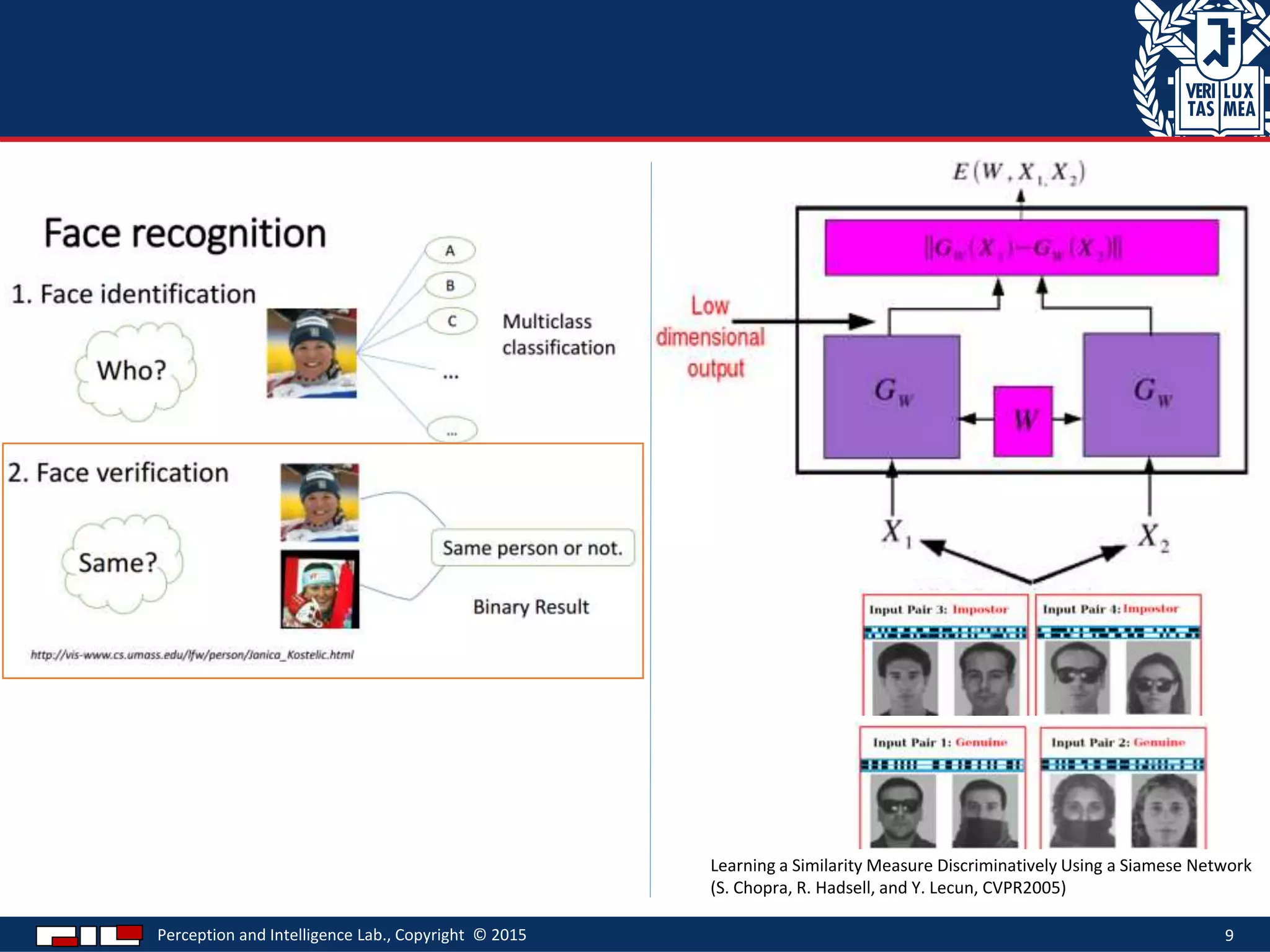

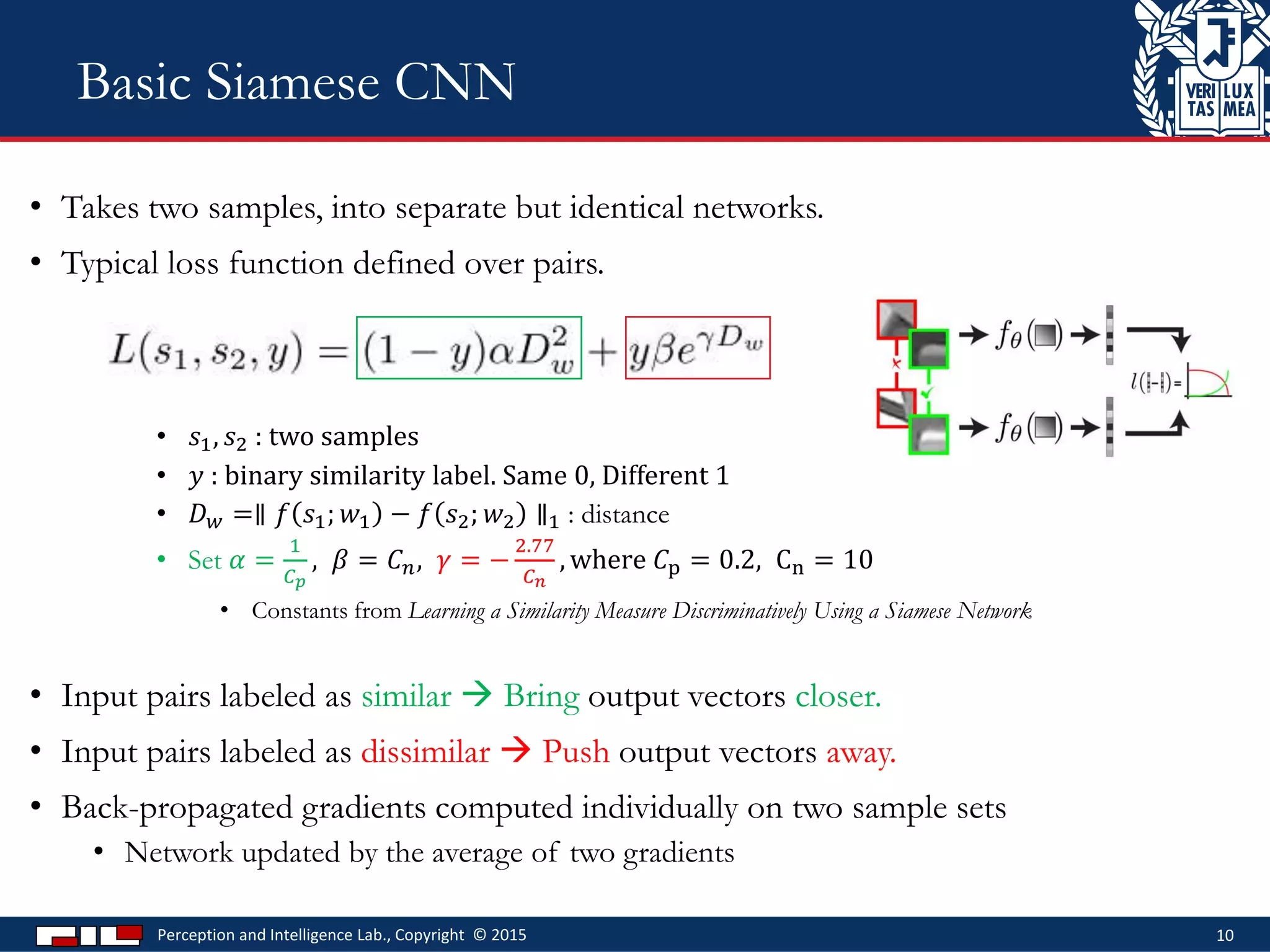

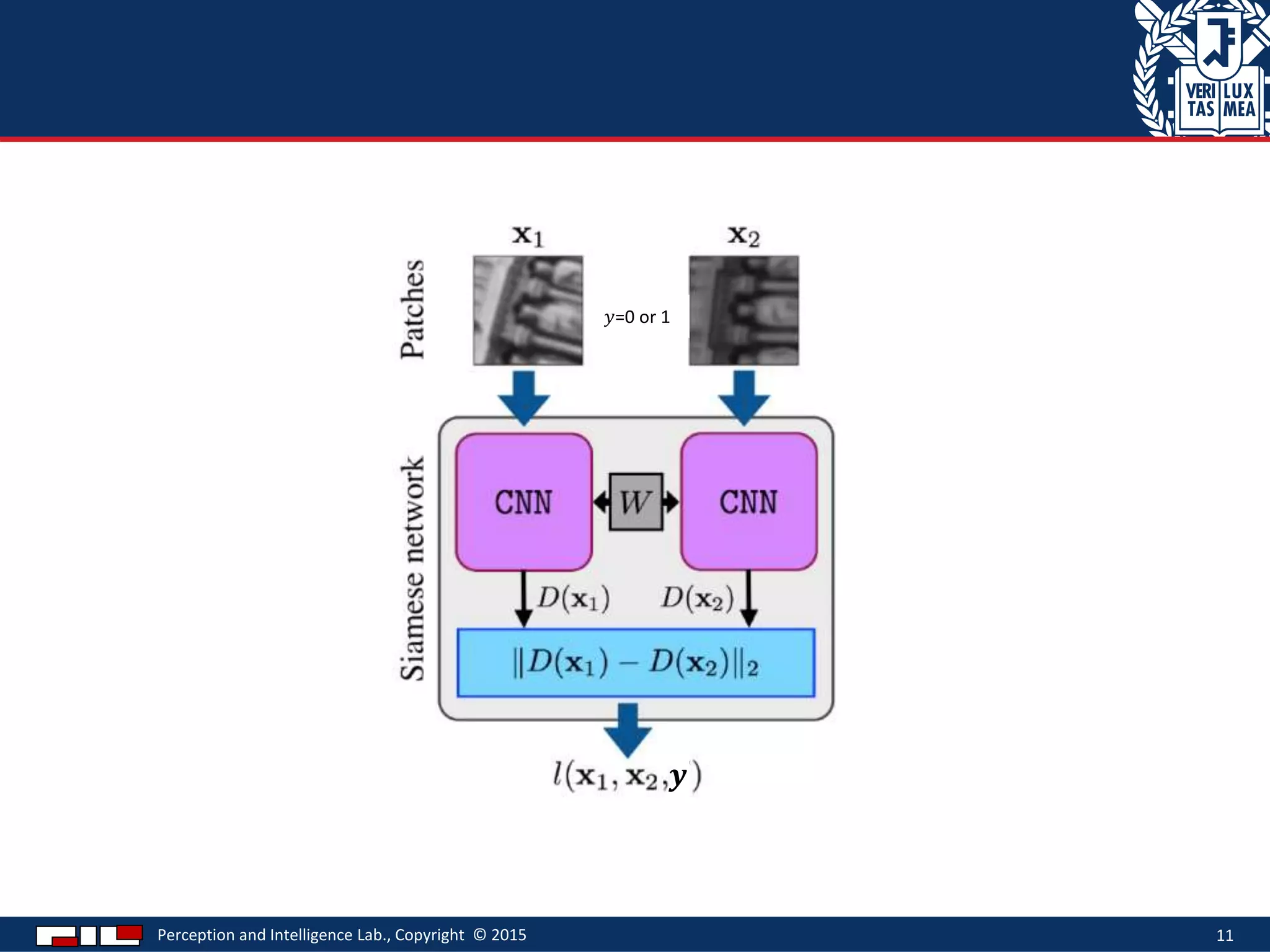

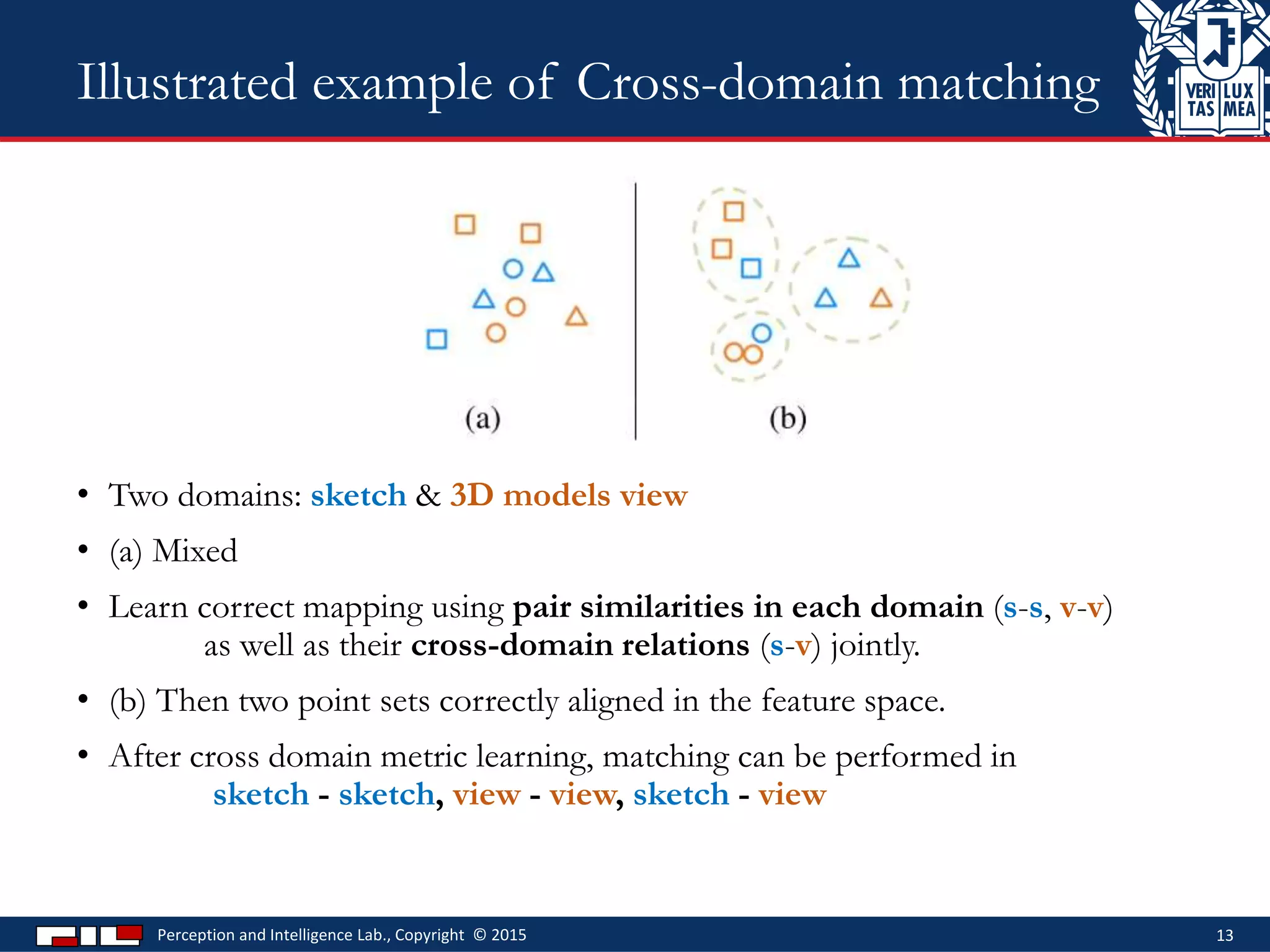

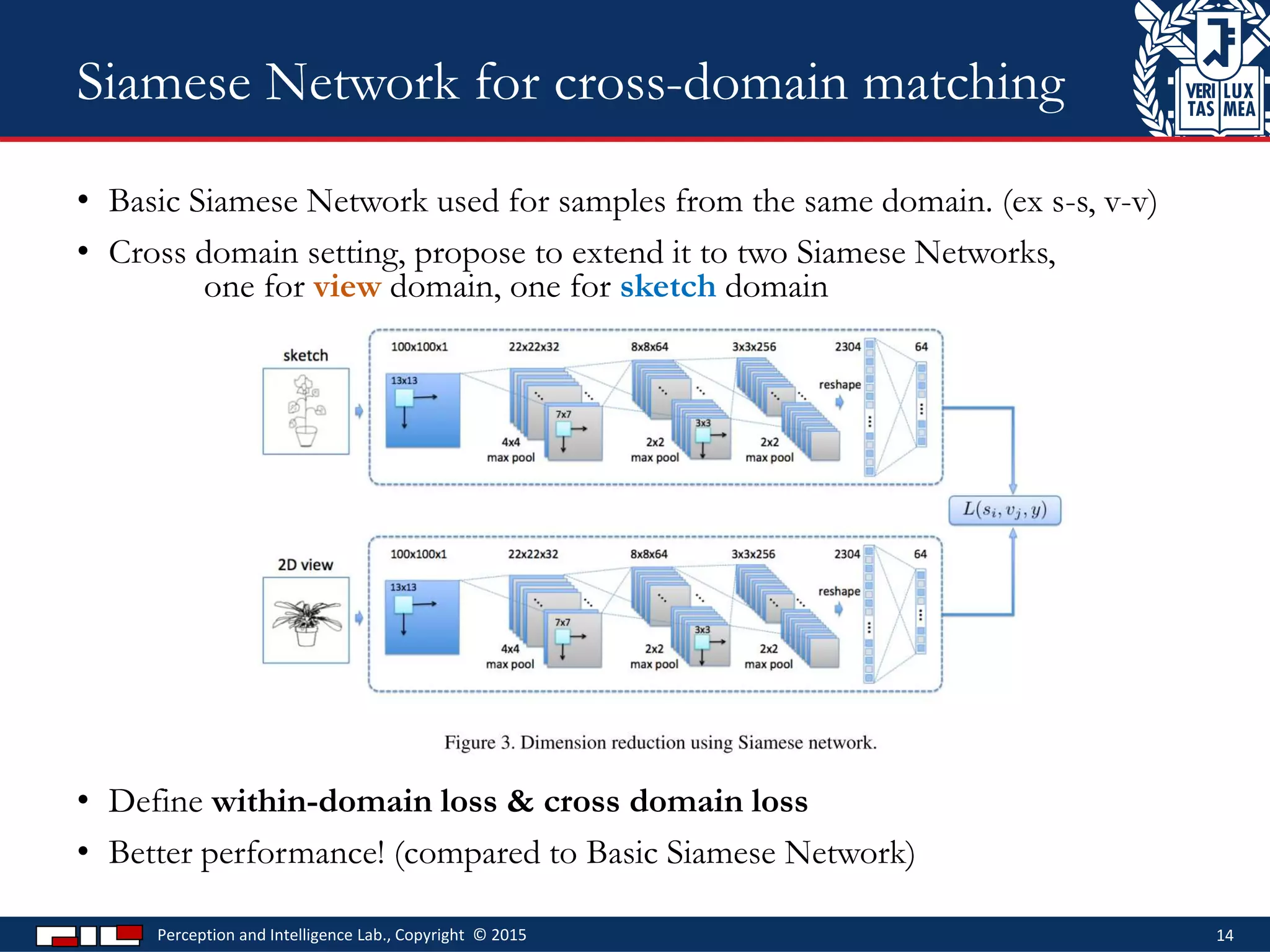

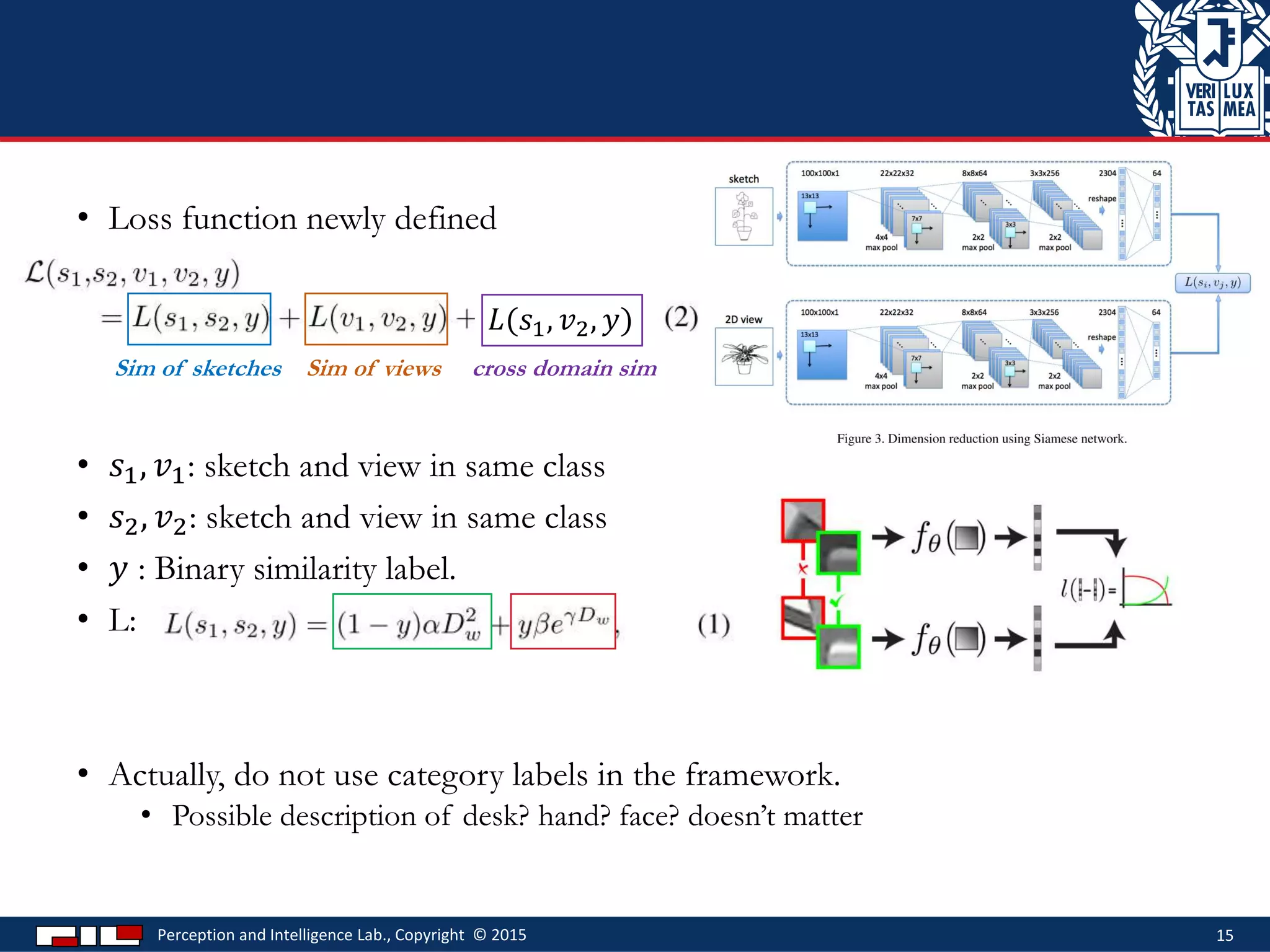

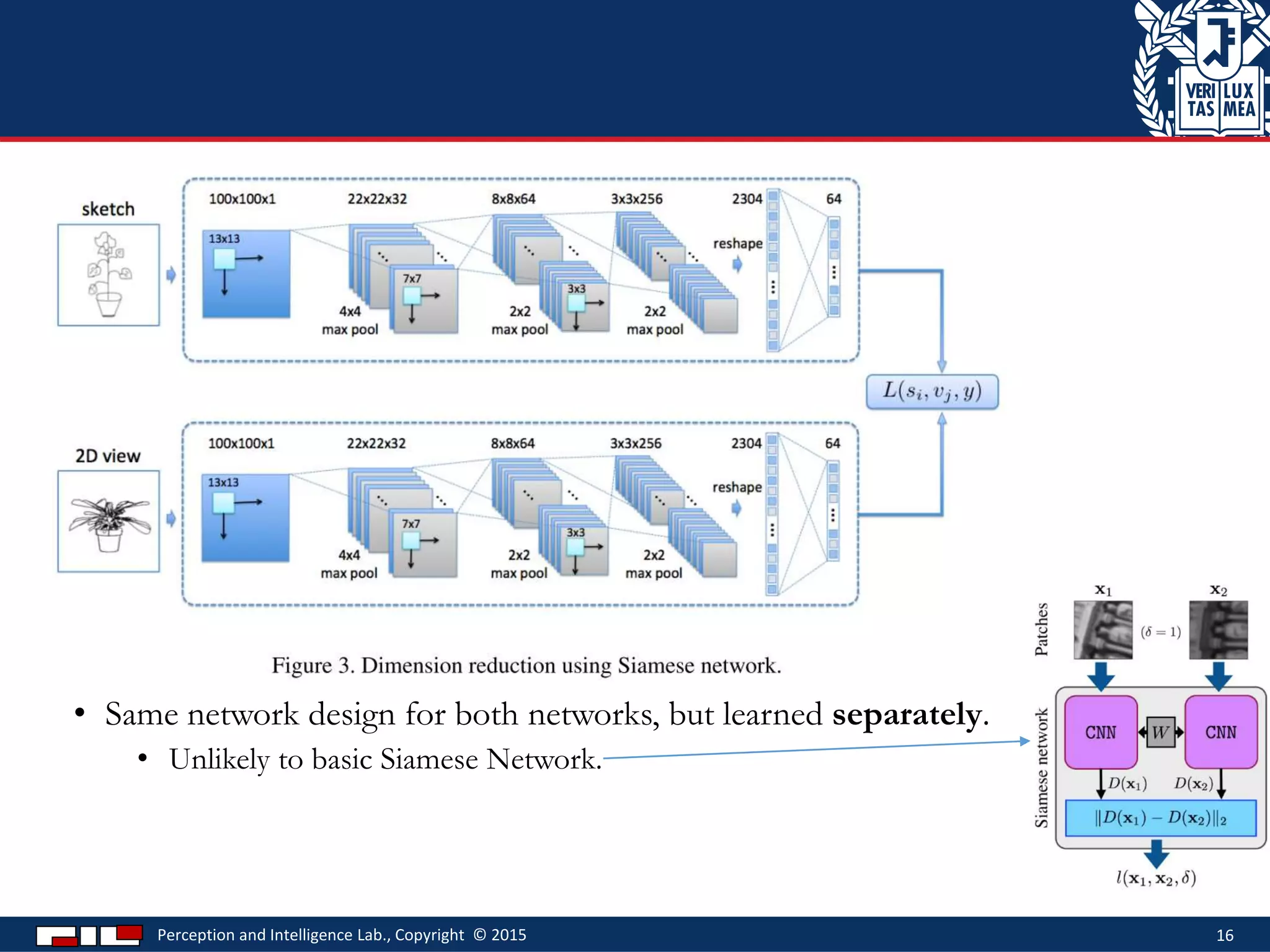

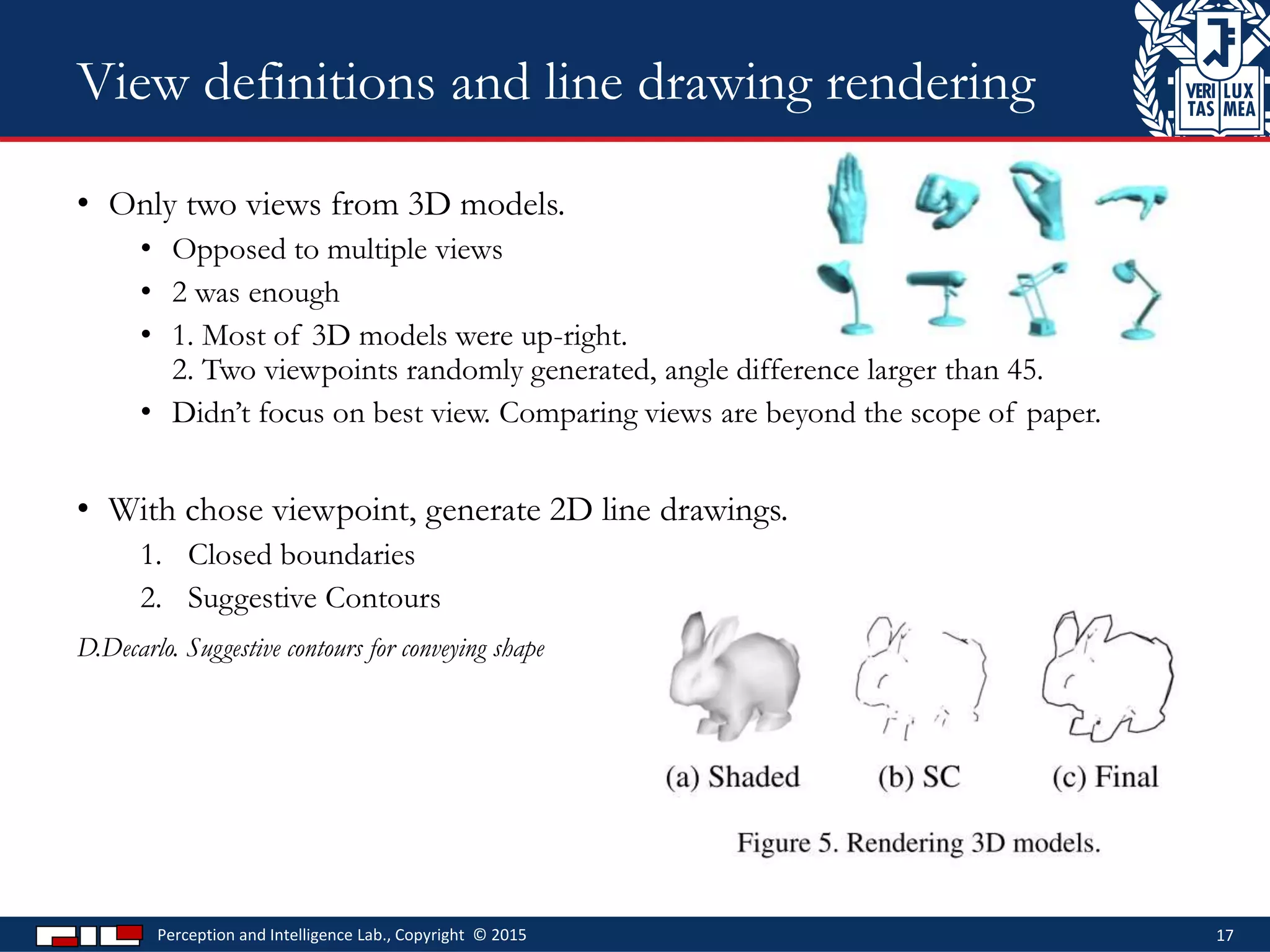

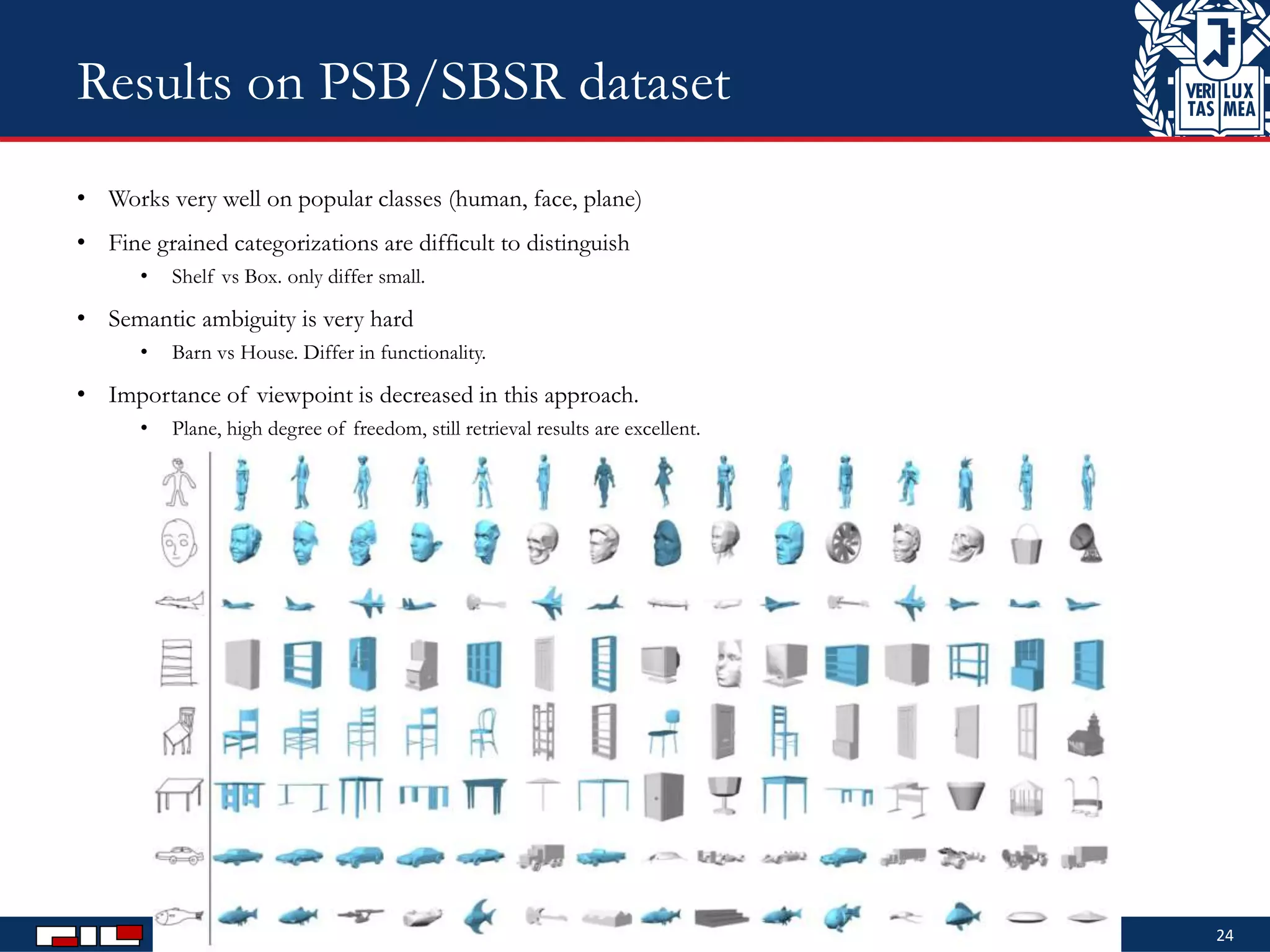

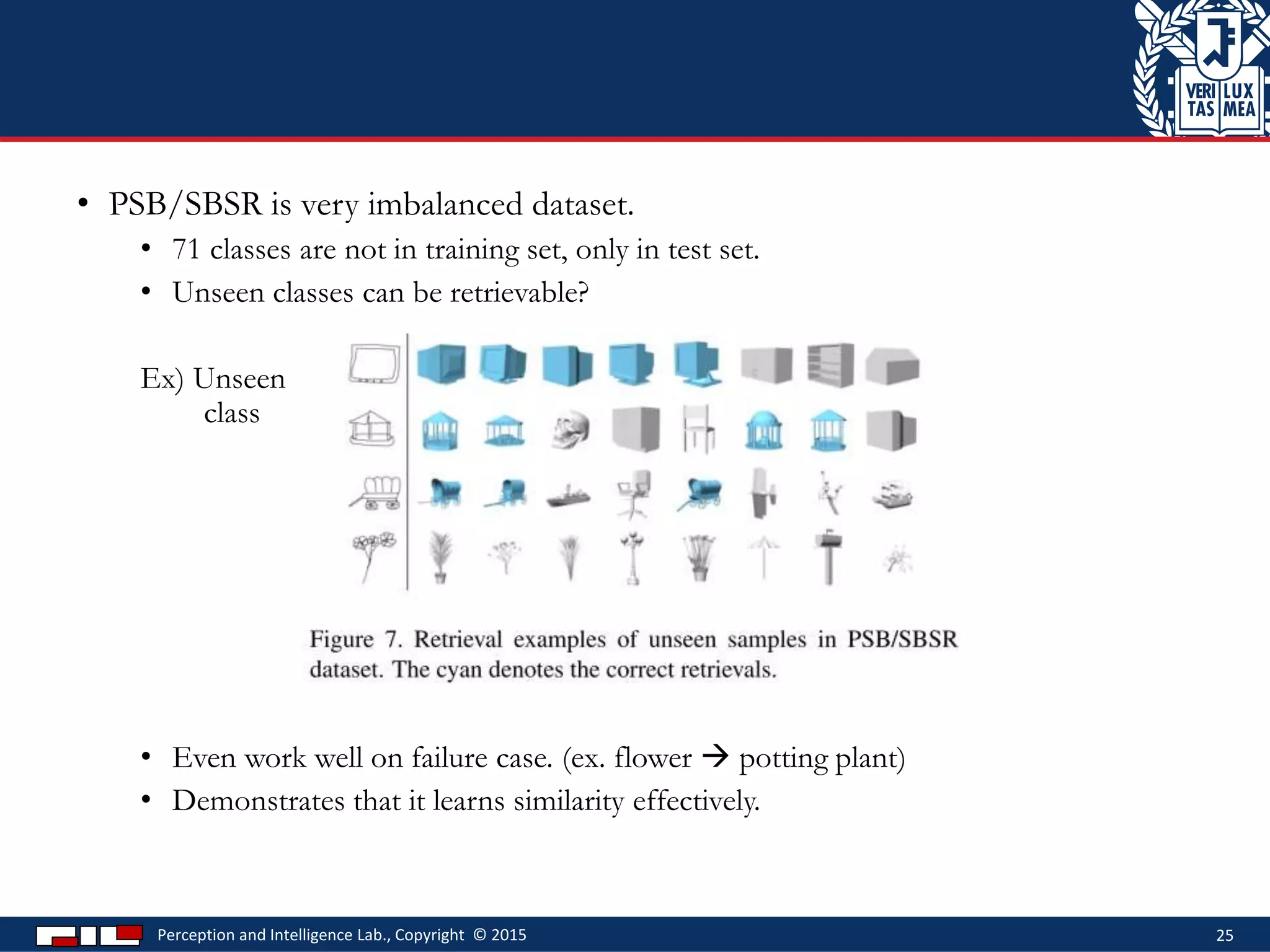

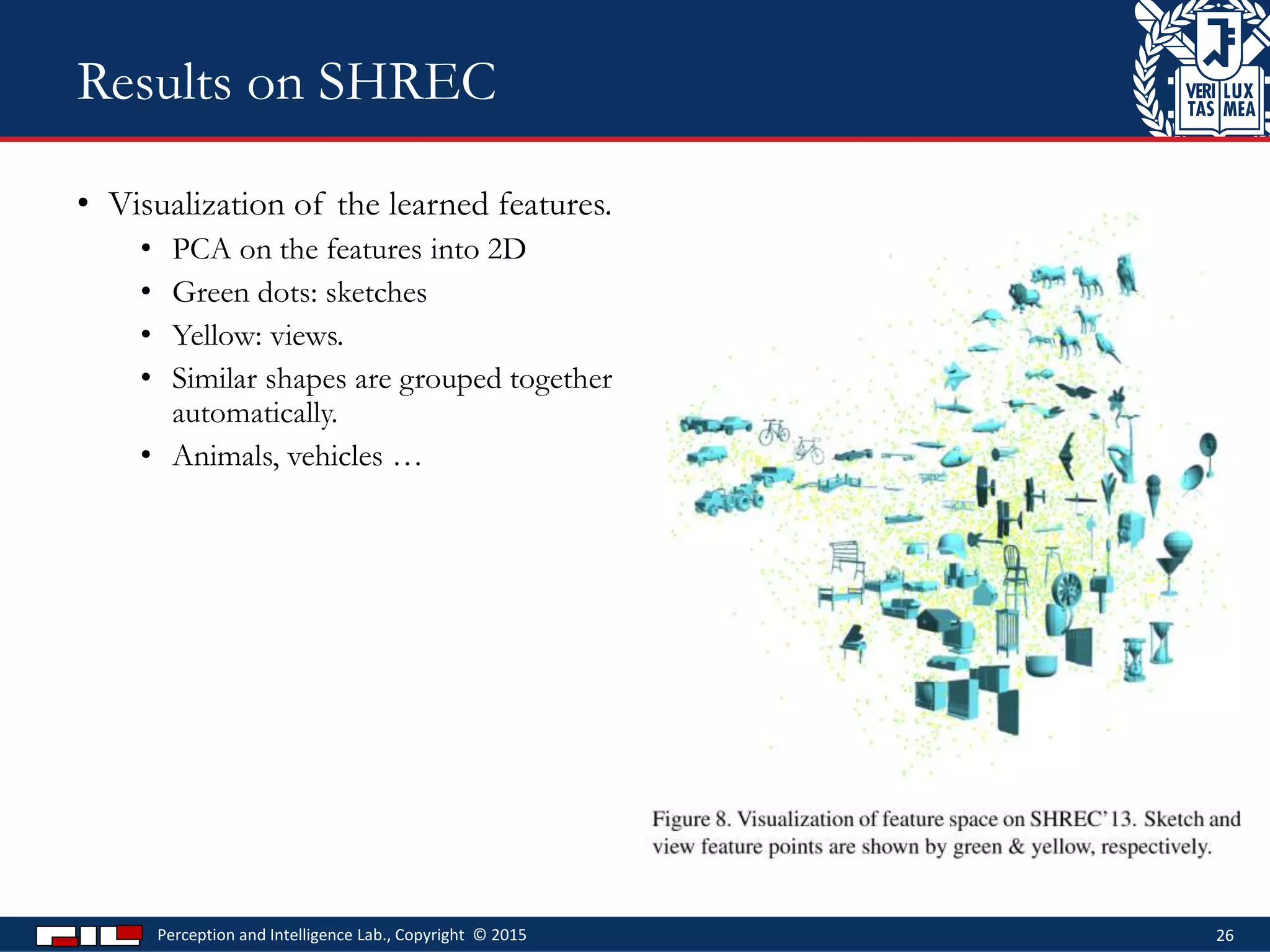

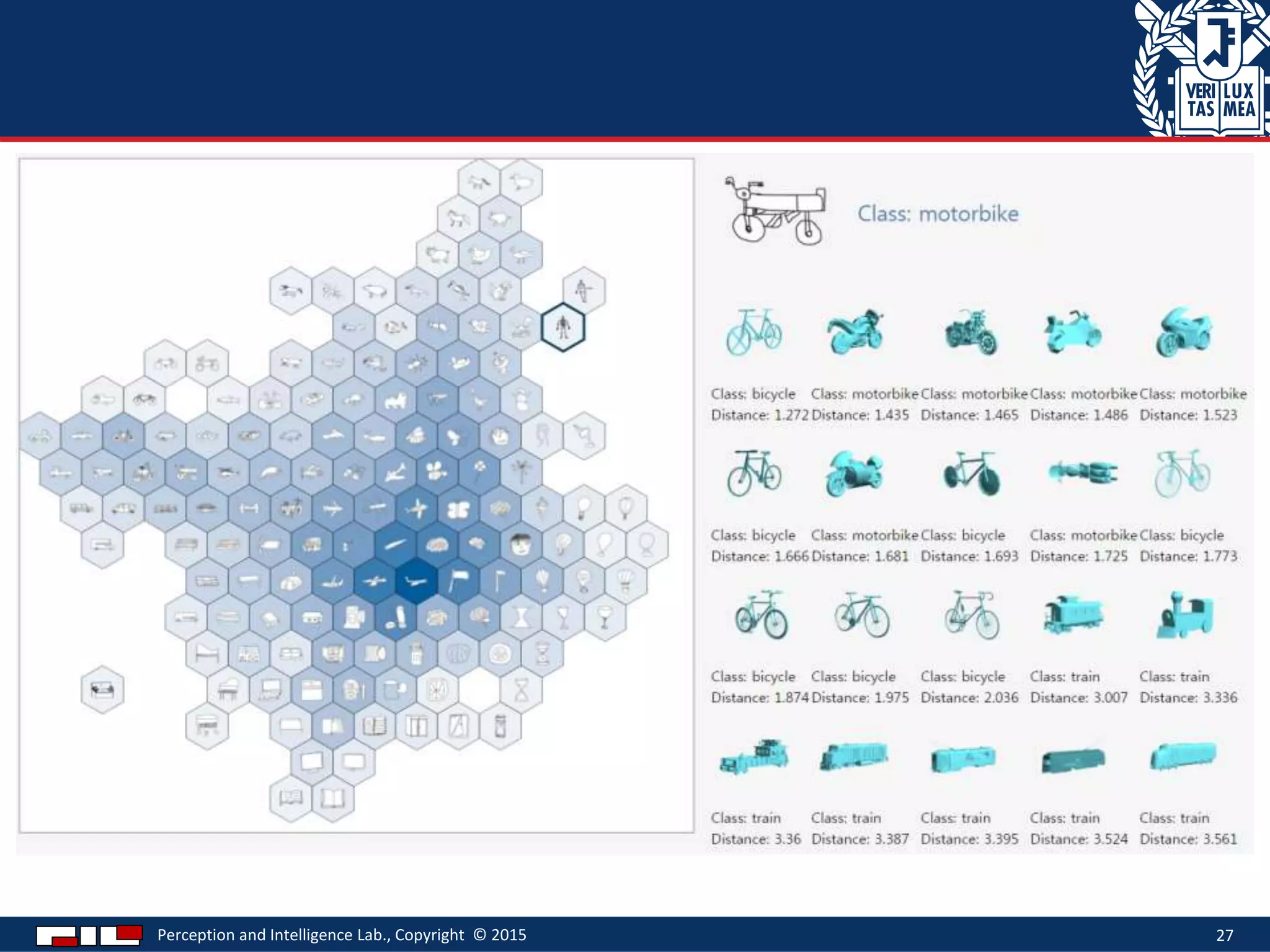

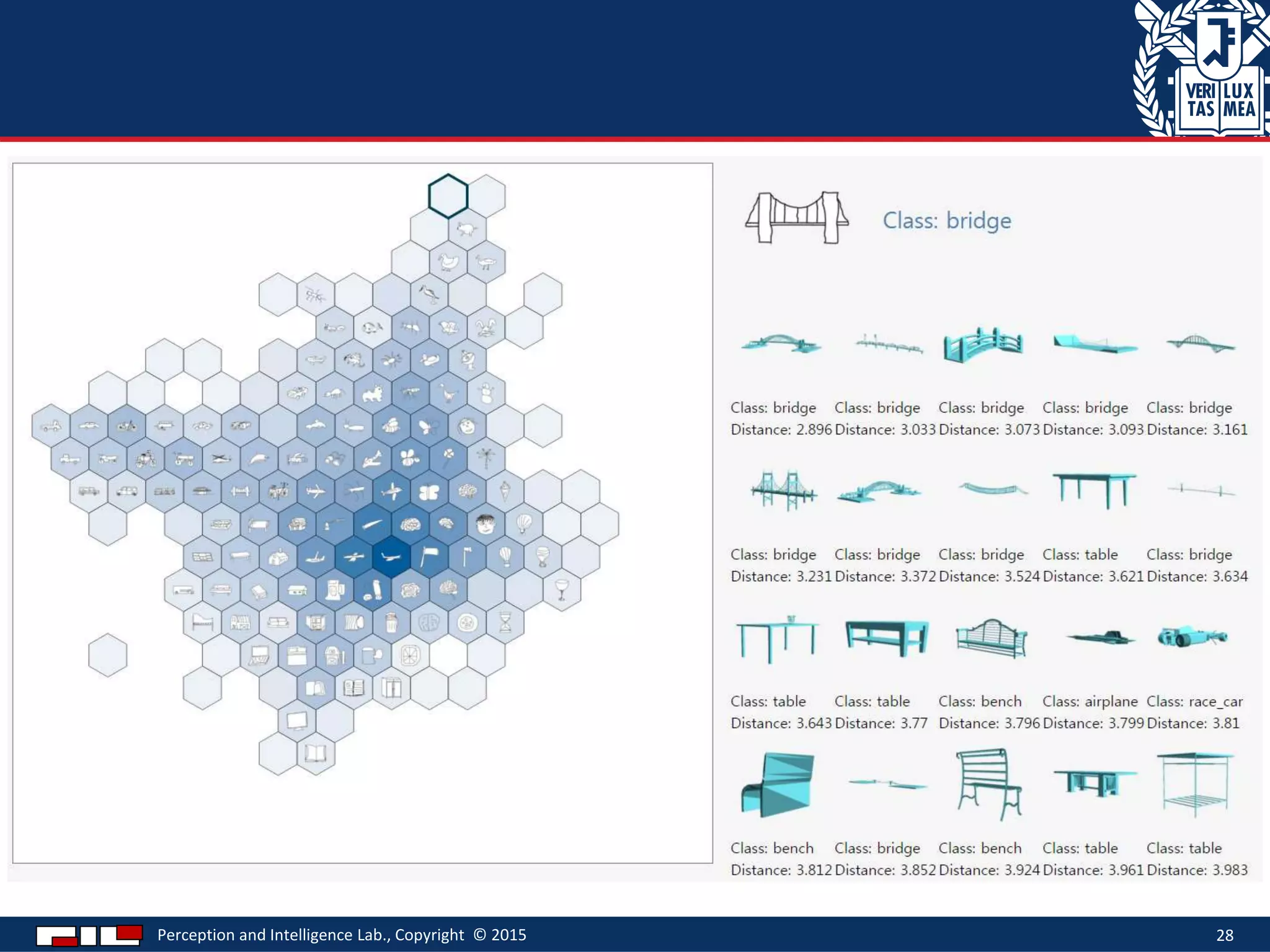

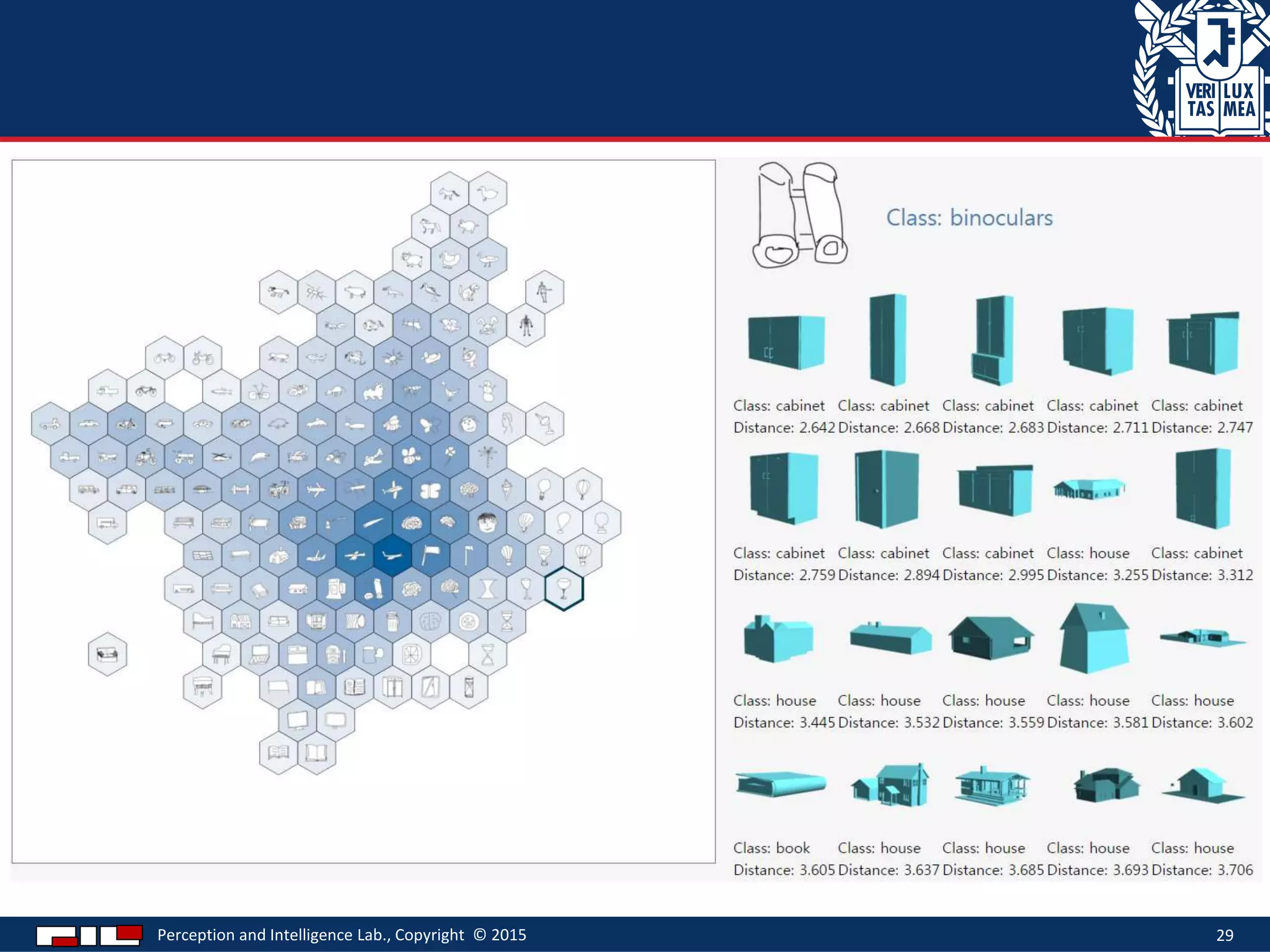

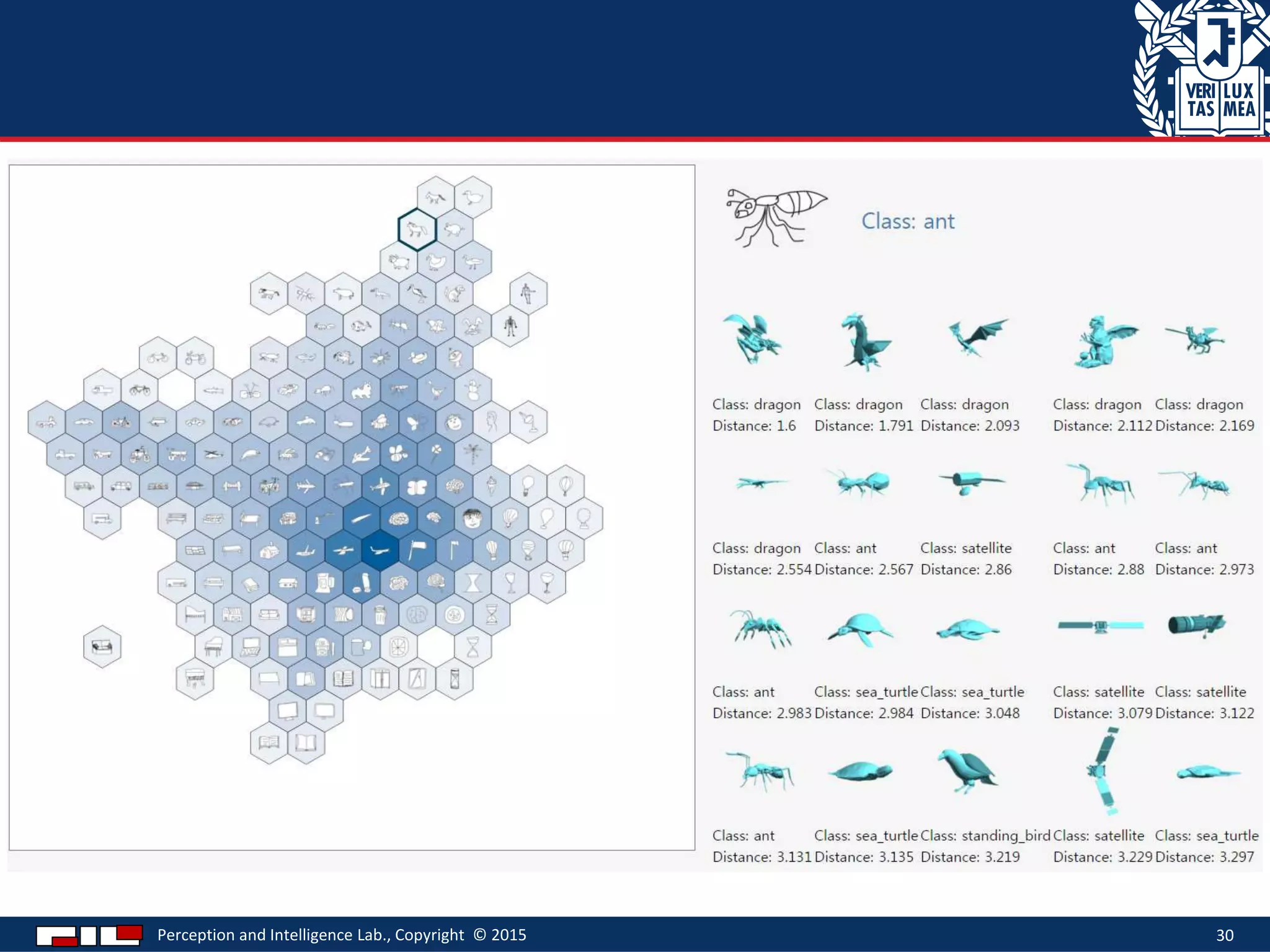

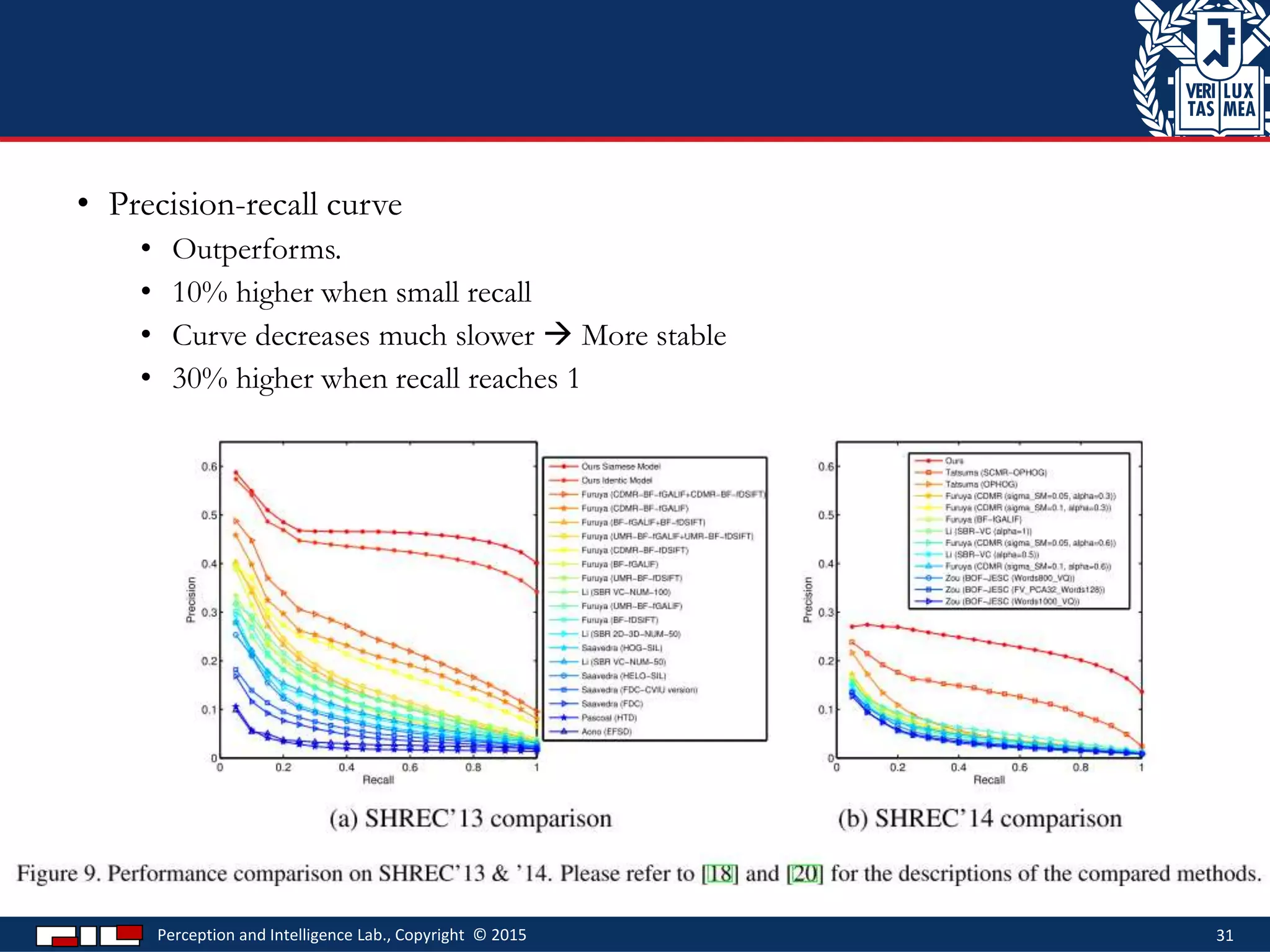

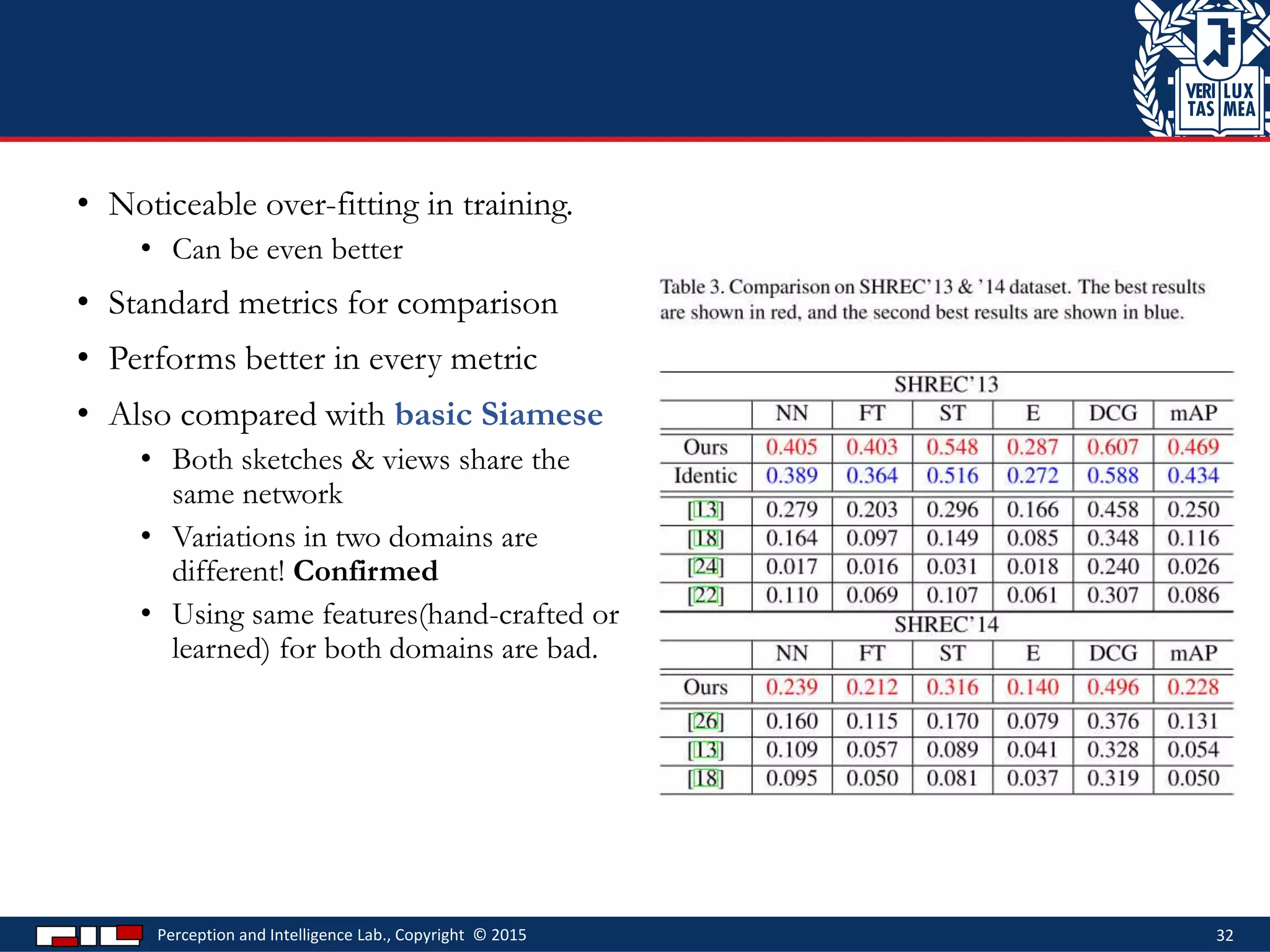

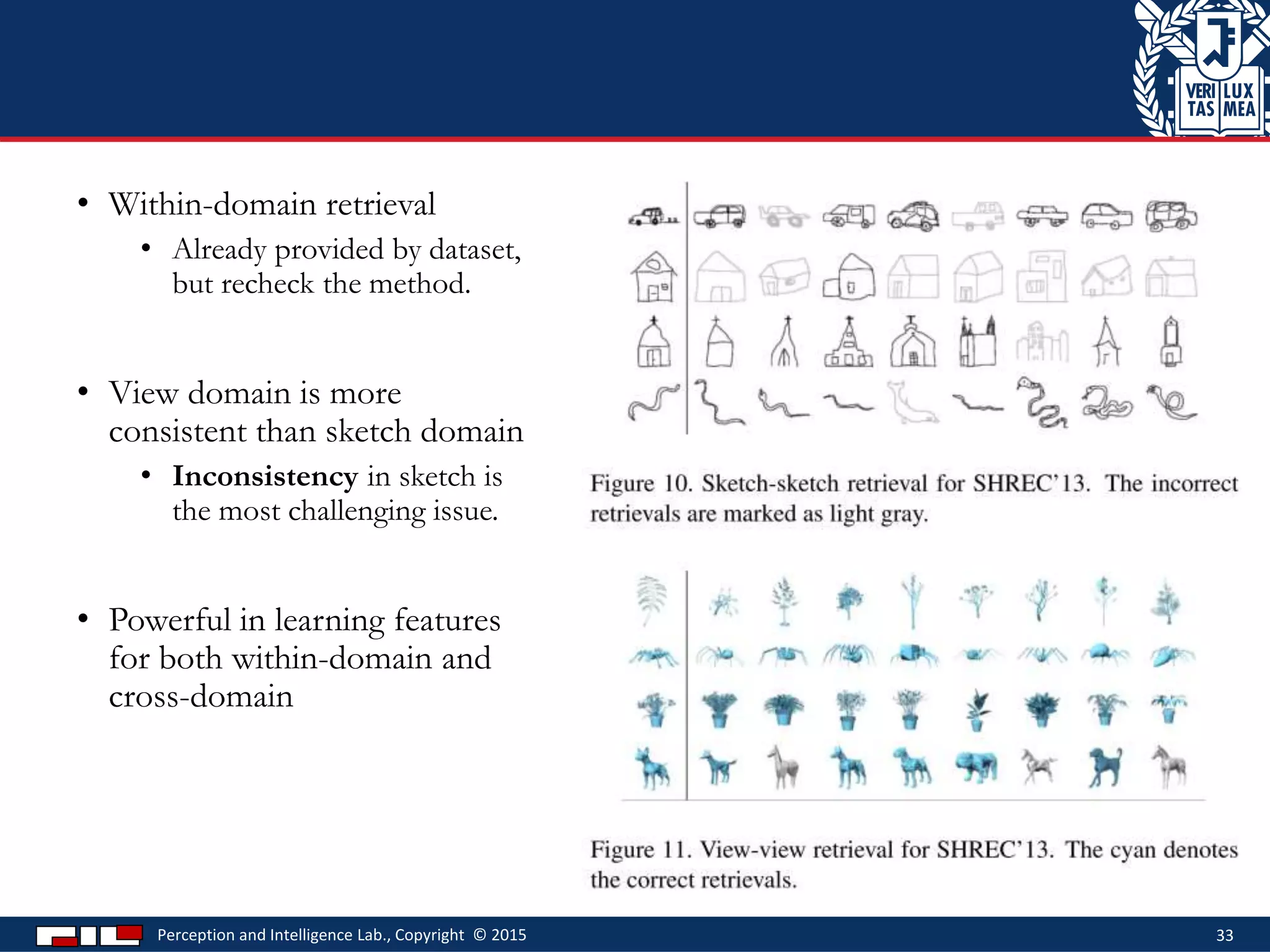

This document describes a method for sketch-based 3D shape retrieval using convolutional neural networks (CNNs). It uses two Siamese CNNs, one for sketches and one for rendered views of 3D models, to learn feature representations in which a sketch can be compared directly with the views of a 3D model. Previous approaches depended on selecting "best views" of each 3D model. This method instead learns the similarity between the two domains directly, and it outperforms those approaches. Experiments on standard benchmark datasets show that it retrieves 3D shapes from sketches effectively without requiring any viewpoint selection.
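The summary does not spell out how the two networks are trained or how retrieval is scored. The sketch below illustrates both ideas under stated assumptions. It assumes a standard contrastive loss with a margin, applied within each domain (sketch/sketch, view/view) and across domains (sketch/view). At query time it assumes a model is ranked by the distance from the sketch embedding to its closest view, so no single "best view" has to be chosen in advance. All names here (`contrastive_loss`, `joint_loss`, `rank_models`, the toy embeddings) are hypothetical. The CNNs themselves are omitted, and embeddings are plain lists of floats.

```python
import math
from typing import Dict, List, Optional, Sequence

Vector = Sequence[float]


def euclidean(a: Vector, b: Vector) -> float:
    """Euclidean distance between two embeddings of equal length."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def contrastive_loss(a: Vector, b: Vector, similar: bool, margin: float = 1.0) -> float:
    """Contrastive loss for one pair (assumed form, not taken from the source).

    Similar pairs are pulled together (0.5 * d^2); dissimilar pairs are pushed
    apart until they are at least `margin` away (0.5 * max(0, margin - d)^2).
    """
    d = euclidean(a, b)
    if similar:
        return 0.5 * d ** 2
    return 0.5 * max(0.0, margin - d) ** 2


def joint_loss(s1: Vector, s2: Vector, v1: Vector, v2: Vector,
               sketch_sim: bool, view_sim: bool, cross_sim: bool,
               margin: float = 1.0) -> float:
    """Hypothetical joint objective coupling the two Siamese networks.

    s1, s2: embeddings from the sketch network; v1, v2: from the view network.
    The cross-domain term ties sketch s1 to view v1 so that both networks
    learn a shared, directly comparable feature space.
    """
    return (contrastive_loss(s1, s2, sketch_sim, margin)     # within sketches
            + contrastive_loss(v1, v2, view_sim, margin)     # within views
            + contrastive_loss(s1, v1, cross_sim, margin))   # across domains


def rank_models(query: Vector, model_views: Dict[str, List[Vector]],
                top_k: Optional[int] = None) -> List[str]:
    """Rank 3D models by the distance from a sketch embedding to their closest view.

    Taking the minimum over all rendered views avoids picking a "best view"
    per model ahead of time (an assumed scoring rule, for illustration).
    """
    scores = {name: min(euclidean(query, v) for v in views)
              for name, views in model_views.items()}
    ranked = sorted(scores, key=lambda name: scores[name])
    return ranked if top_k is None else ranked[:top_k]


if __name__ == "__main__":
    # Toy 2-D embeddings standing in for CNN outputs.
    models = {
        "chair": [[0.0, 1.0], [1.0, 0.0]],
        "table": [[0.5, 0.5]],
        "lamp": [[-1.0, -1.0]],
    }
    print(rank_models([0.1, 0.9], models))
    print(joint_loss([0.0, 0.0], [3.0, 4.0], [0.0, 0.0], [0.0, 0.5],
                     sketch_sim=True, view_sim=False, cross_sim=True))
```

In the toy example the "chair" model ranks first because one of its views lies close to the query sketch, even though its other view is far away. This mirrors the claim that retrieval does not depend on choosing the right viewpoint beforehand.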