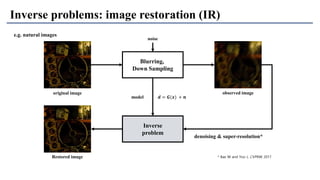

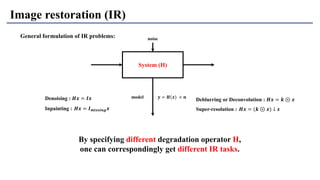

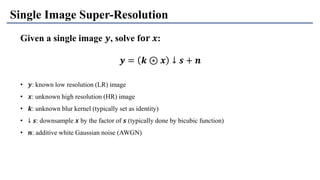

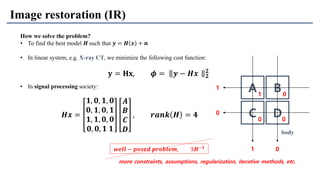

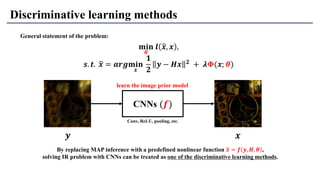

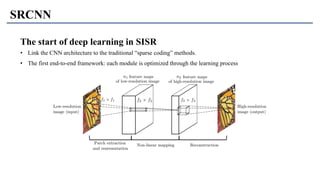

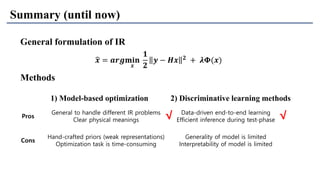

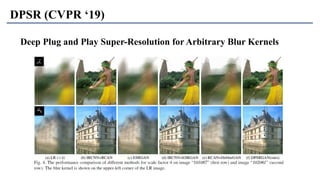

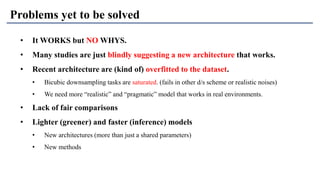

1) The document covers deep learning techniques for super-resolution: its framing as an inverse problem, related image restoration problems, and the main deep learning models.

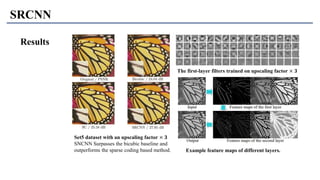

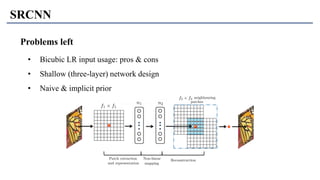

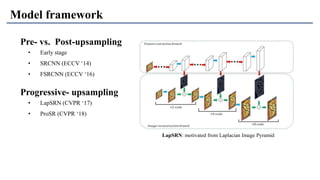

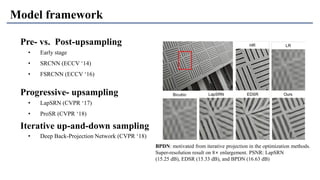

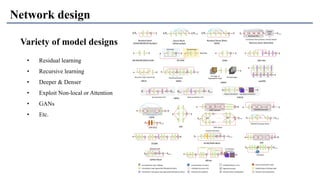

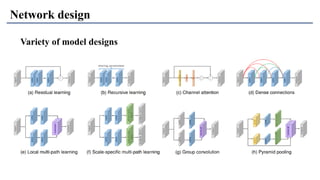

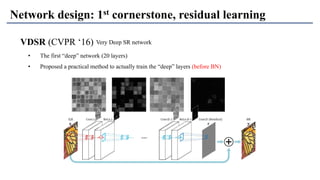

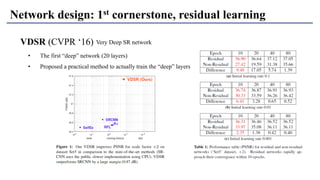

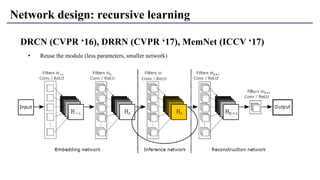

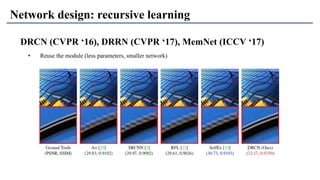

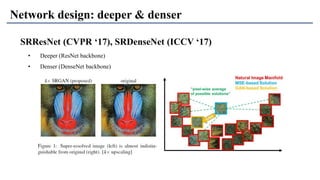

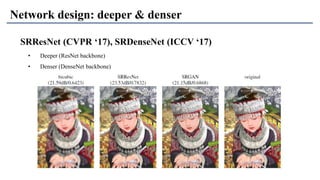

2) Early models such as SRCNN applied convolutional networks to super-resolution but were shallow. Later models added residual learning (VDSR) and recursive learning (DRCN), and grew much deeper by stacking residual blocks (SRResNet).
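The residual-learning idea attributed to VDSR can be sketched in a few lines: the network predicts only the missing detail, which is added to an interpolated copy of the low-resolution input. This is a minimal NumPy illustration, not the slides' implementation. The names `upsample_nearest`, `residual_super_resolve`, and `zero_residual` are hypothetical, and nearest-neighbour upsampling stands in for the bicubic interpolation VDSR actually uses.

```python
import numpy as np


def upsample_nearest(lr, scale):
    """Upsample a 2-D image by repeating each pixel `scale` times per axis.

    Assumption: a simple stand-in for bicubic interpolation.
    """
    return np.kron(lr, np.ones((scale, scale)))


def residual_super_resolve(lr, scale, predict_residual):
    """Global residual learning: output = interpolated input + predicted detail.

    `predict_residual` is a hypothetical callable standing in for a trained
    network; it maps the upsampled image to a residual of the same shape.
    """
    upsampled = upsample_nearest(lr, scale)
    return upsampled + predict_residual(upsampled)


def zero_residual(image):
    """Placeholder predictor that adds no detail (an untrained 'network')."""
    return np.zeros_like(image)


# Toy 4x4 low-resolution image, upscaled by a factor of 2.
lr_image = np.arange(16, dtype=np.float64).reshape(4, 4)
sr_image = residual_super_resolve(lr_image, 2, zero_residual)

# During training, the regression target would be the residual
# (ground-truth high-resolution image minus the upsampled input),
# rather than the high-resolution image itself.
```

With a zero residual the output is just the interpolated image, which is why the approach is easy to optimise: the network starts from a reasonable estimate and only has to learn the high-frequency correction.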

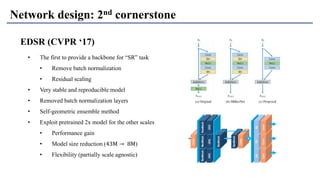

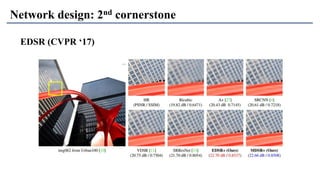

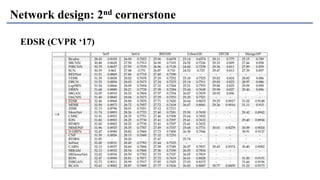

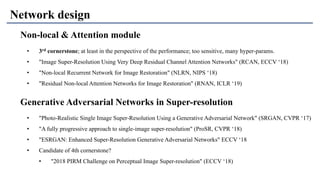

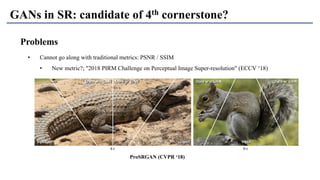

3) Key developments included EDSR, which provided a strong backbone model, and GAN-based approaches such as SRGAN, which aim to generate more realistic textures but call for evaluation metrics beyond pixel-wise scores such as PSNR.
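To illustrate why texture-oriented models motivate new evaluation metrics, the toy example below computes PSNR, the standard pixel-wise score, for two visually very different distortions. It is a sketch under stated assumptions: the `psnr` helper, the random test image, and the two distortions are all illustrative and do not come from the slides.

```python
import numpy as np


def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two images of equal shape."""
    reference = np.asarray(reference, dtype=np.float64)
    estimate = np.asarray(estimate, dtype=np.float64)
    mse = np.mean((reference - estimate) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(max_value ** 2 / mse)


# Illustrative reference image with values well inside [0, 255].
rng = np.random.default_rng(0)
ref = rng.integers(50, 200, size=(32, 32)).astype(np.float64)

# Distortion A: a uniform brightness shift of +5 (barely noticeable).
shifted = ref + 5.0

# Distortion B: a +/-5 checkerboard pattern (a visible high-frequency artifact).
rows, cols = np.indices(ref.shape)
checker = ref + 5.0 * (2 * ((rows + cols) % 2) - 1)

psnr_shift = psnr(ref, shifted)
psnr_checker = psnr(ref, checker)
print(f"uniform shift: {psnr_shift:.2f} dB, checkerboard: {psnr_checker:.2f} dB")
```

Both distortions have the same mean squared error, so PSNR scores them identically even though they look different. This is the kind of gap that perceptual metrics and human opinion studies are meant to close when evaluating GAN-based super-resolution.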