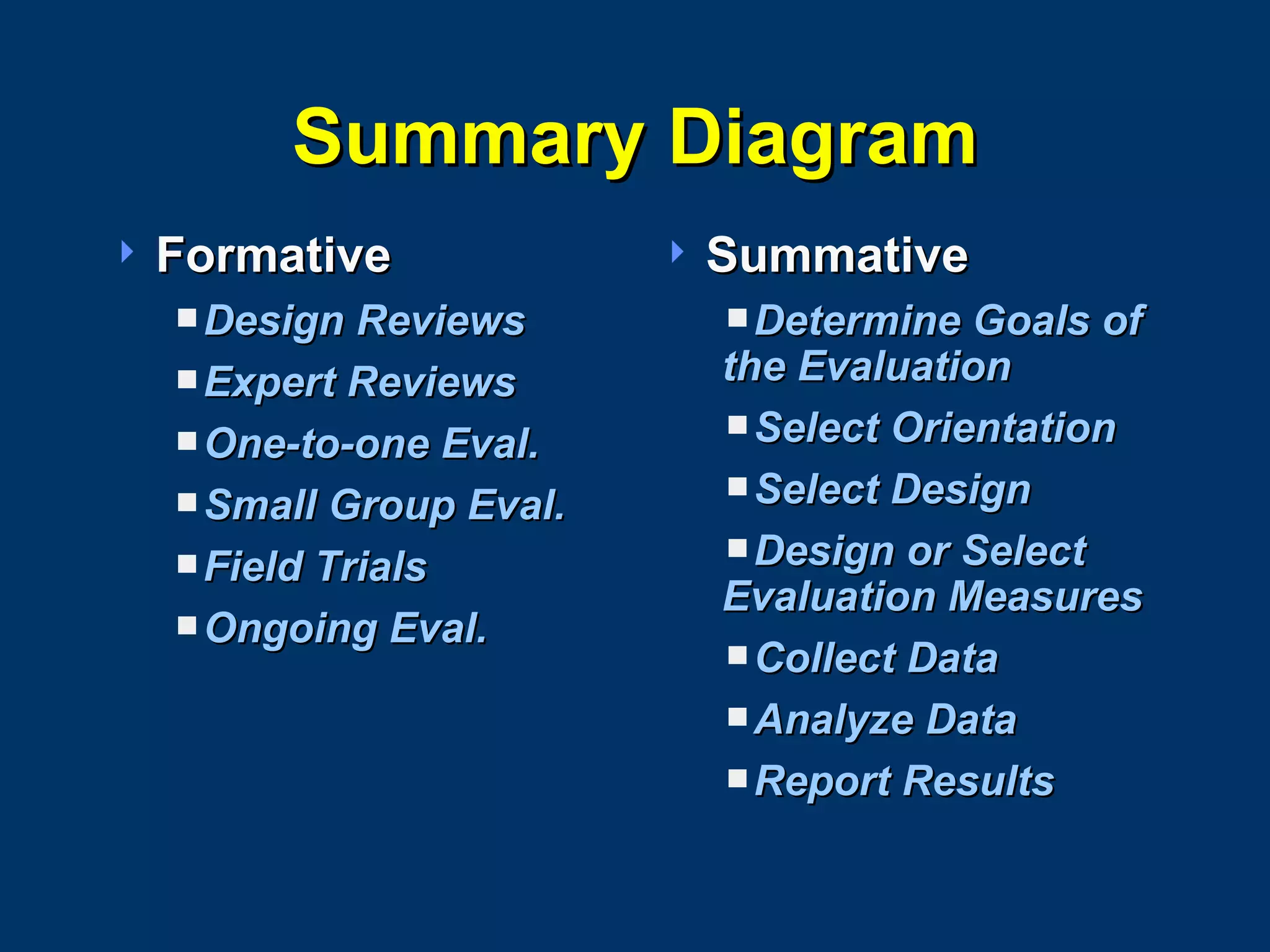

Summative evaluation involves collecting and analyzing data after the instruction has been implemented, so that decision makers have information on its effectiveness and efficiency. It determines whether the learner objectives were achieved and at what cost. There are two approaches. Objectivism relies on empirical data, while subjectivism uses qualitative methods such as interviews. Each approach has limitations when used alone. The designer should not conduct the first summative evaluation, because their involvement in developing the instruction could bias the results. The evaluation report summarizes the needs assessment, the study design, the results, and the conclusions, and these in turn guide the recommendations.