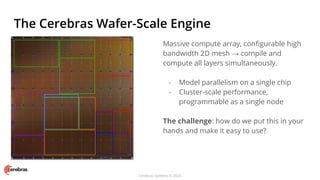

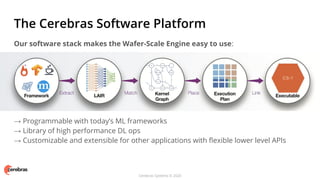

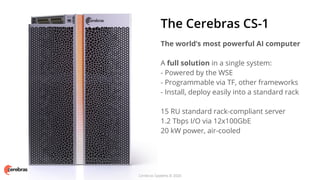

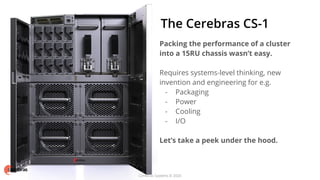

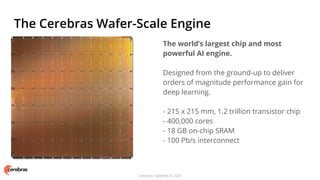

Cerebras Systems has developed the Cerebras CS-1, a system built around the world's largest chip, the Wafer-Scale Engine (WSE), and designed to accelerate deep learning by orders of magnitude. The WSE contains over 1 trillion transistors and 400,000 cores optimized for sparse tensor operations. The CS-1 packs cluster-scale performance into a single chassis that fits in a standard datacenter rack, and it is programmable with popular machine learning frameworks. Customers such as Argonne National Laboratory already use it to accelerate large-scale AI workloads.

![Flexible cores optimized for tensor operations (Cerebras Systems © 2020)](https://image.slidesharecdn.com/13hock-200415060118/85/13-Supercomputer-Scale-AI-with-Cerebras-Systems-9-320.jpg)

Flexible cores optimized for tensor operations

- Fully programmable compute core
  - Full array of general instructions with ML extensions
  - Flexible general ops for control processing, e.g. arithmetic, logical, load/store, branch
  - Optimized tensor ops for data processing, e.g. `fmac [z] = [z], [w], a` (operands: 3D `[z]`, 3D `[z]`, 2D `[w]`, scalar `a`)
- Sparse compute engine for neural networks
  - Dataflow-triggered computation
  - Filters out zero data → skips unnecessary processing
  - Higher performance and efficiency for sparse NNs
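To make the two slide ideas concrete, here is a minimal Python sketch of a dataflow-triggered `fmac` that skips zero operands. It is a toy model, not Cerebras's hardware or instruction semantics. The function names `fmac` and `run_dataflow`, the per-input `weights` slices, and the reading of the 3D/3D/2D/scalar shapes (the 2D `w` reused across the leading axis of the 3D accumulator `z`) are all assumptions. Each arriving `(index, value)` activation triggers one fused multiply-accumulate, and a zero value triggers nothing, because `z + w * 0` equals `z` for finite weights.

```python
from typing import List, Tuple

Tensor2D = List[List[float]]
Tensor3D = List[List[List[float]]]


def fmac(z: Tensor3D, w: Tensor2D, a: float) -> Tensor3D:
    """Fused multiply-accumulate z <- z + w * a, updating z in place.

    Assumed shape reading (hypothetical): z is 3D (K x M x N), w is 2D
    (M x N) and is reused for every plane along z's leading axis, and a
    is a scalar.
    """
    for plane in z:                       # each K-plane of the accumulator
        for row, w_row in zip(plane, w):  # pair z rows with w rows
            for n, w_val in enumerate(w_row):
                row[n] += w_val * a
    return z


def run_dataflow(
    z: Tensor3D,
    weights: List[Tensor2D],
    activations: List[Tuple[int, float]],
) -> Tuple[Tensor3D, int]:
    """Toy dataflow loop: each arriving (index, value) activation triggers an
    fmac with the hypothetical weight slice weights[index]. Zero values are
    filtered out before any arithmetic, since z + w * 0 == z for finite w.
    Returns the updated accumulator and the number of fmacs that fired."""
    fired = 0
    for j, a in activations:
        if a == 0.0:
            continue  # zero data: no fmac is triggered
        fmac(z, weights[j], a)
        fired += 1
    return z, fired


# Usage: 5 inputs, 3 of them zero, so only 2 fmacs fire.
z = [[[0.0] * 4 for _ in range(3)] for _ in range(2)]
weights = [[[1.0] * 4 for _ in range(3)] for _ in range(5)]
activations = [(0, 1.5), (1, 0.0), (2, 0.0), (3, 2.0), (4, 0.0)]
z, fired = run_dataflow(z, weights, activations)
print(fired)       # 2
print(z[1][2][3])  # 3.5
```

Because every skipped update would only have added zero, the result matches a dense loop over all activations; what drops as sparsity rises is the number of fmac operations performed (`fired`).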