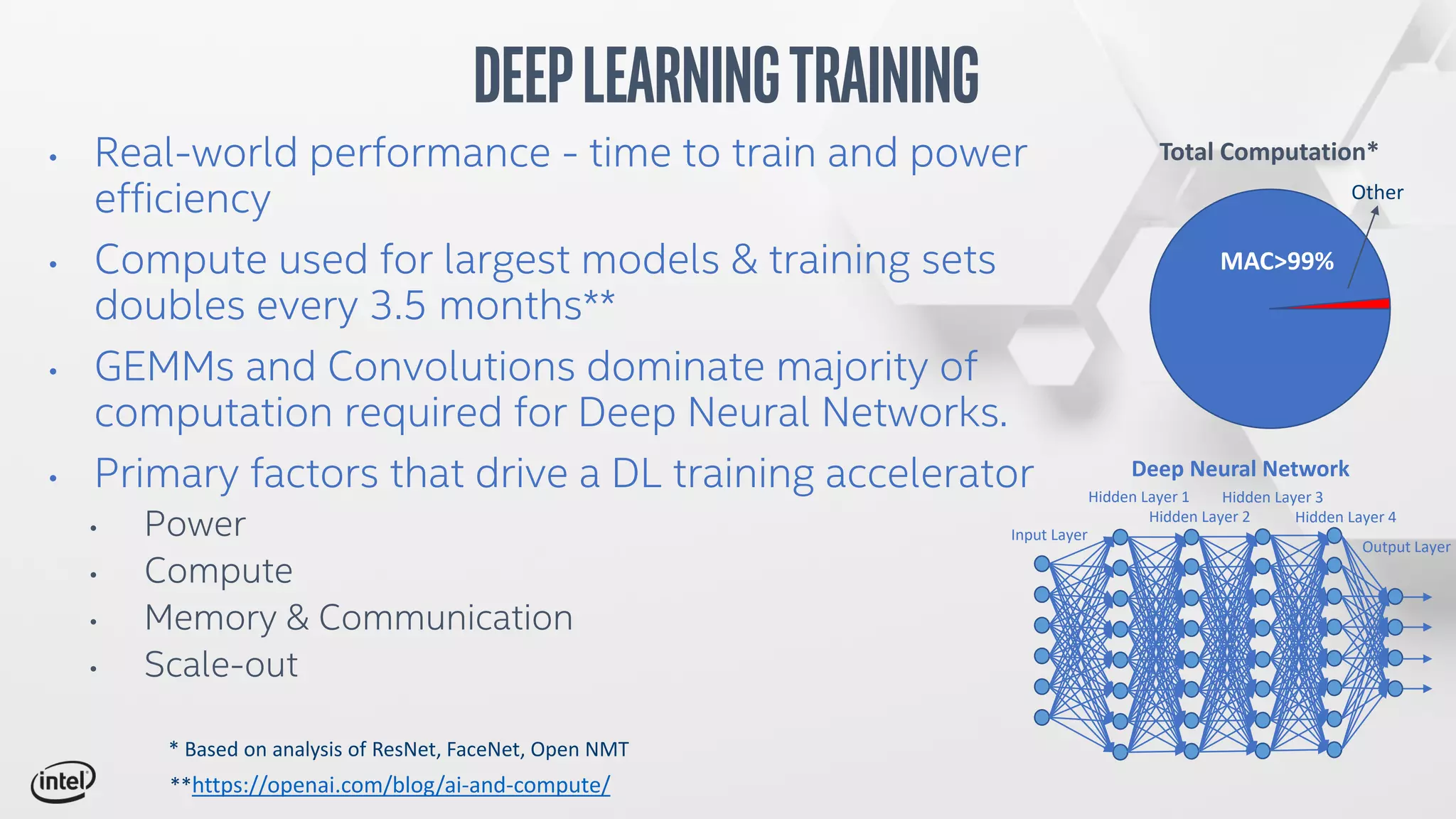

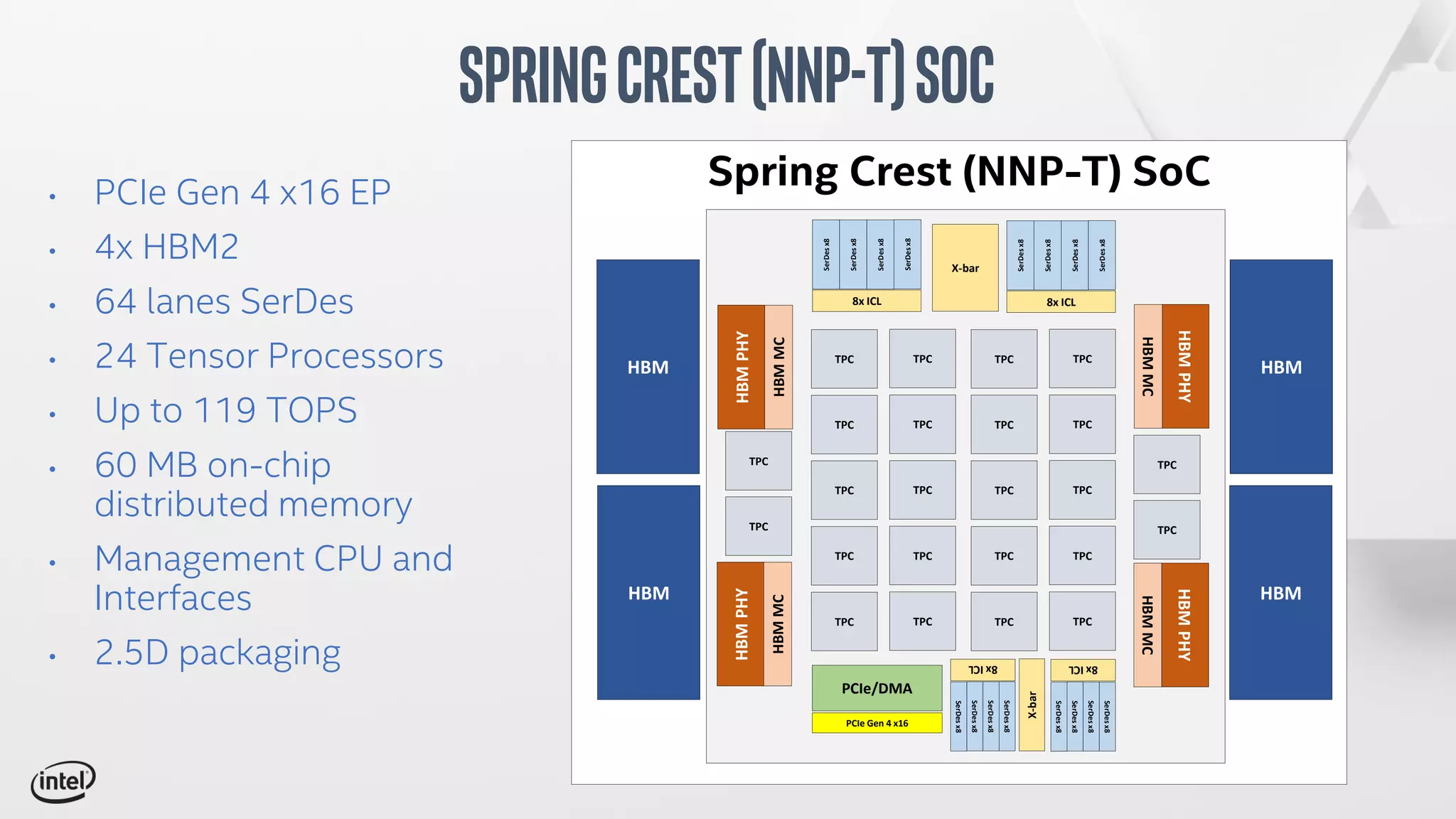

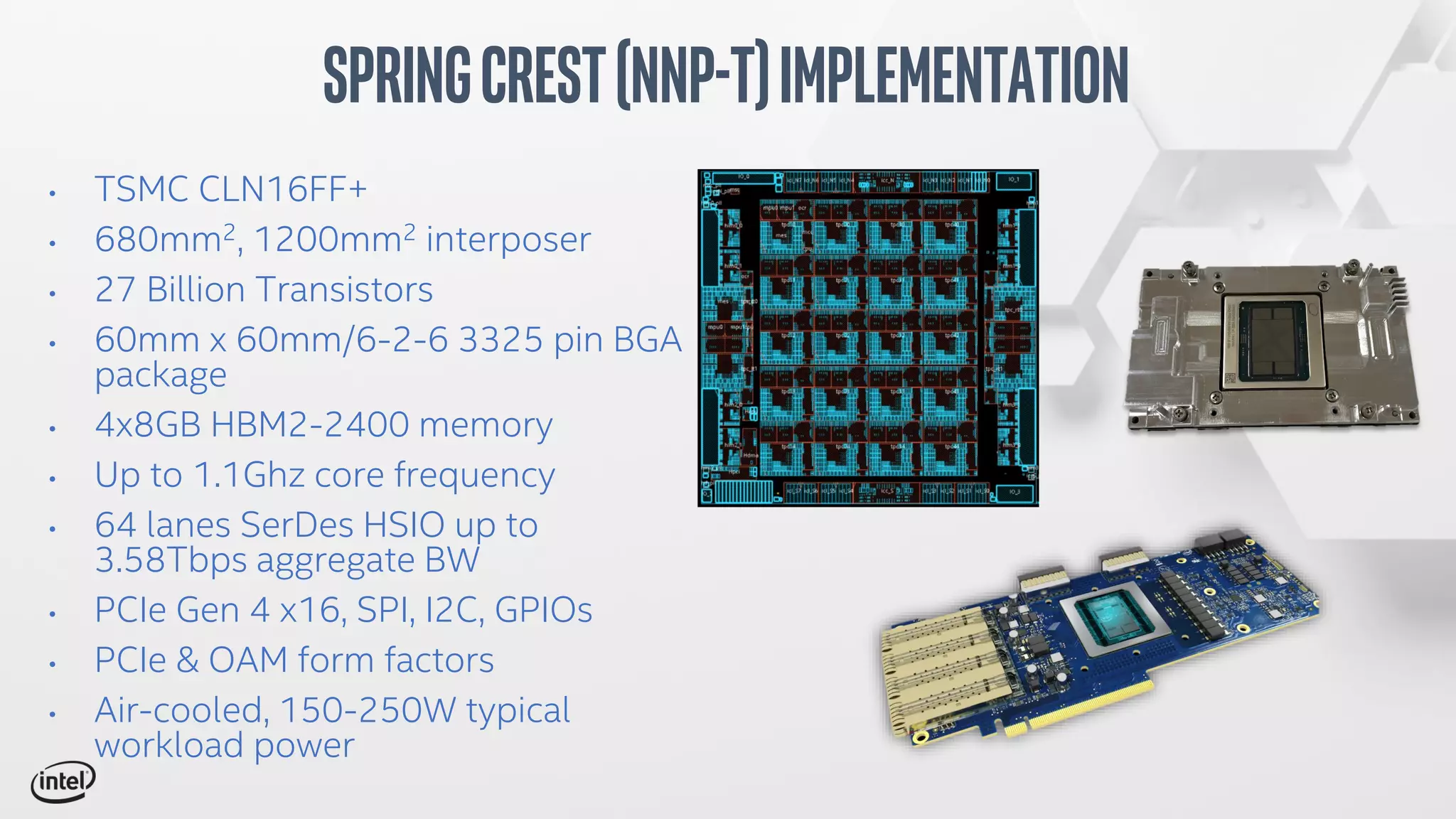

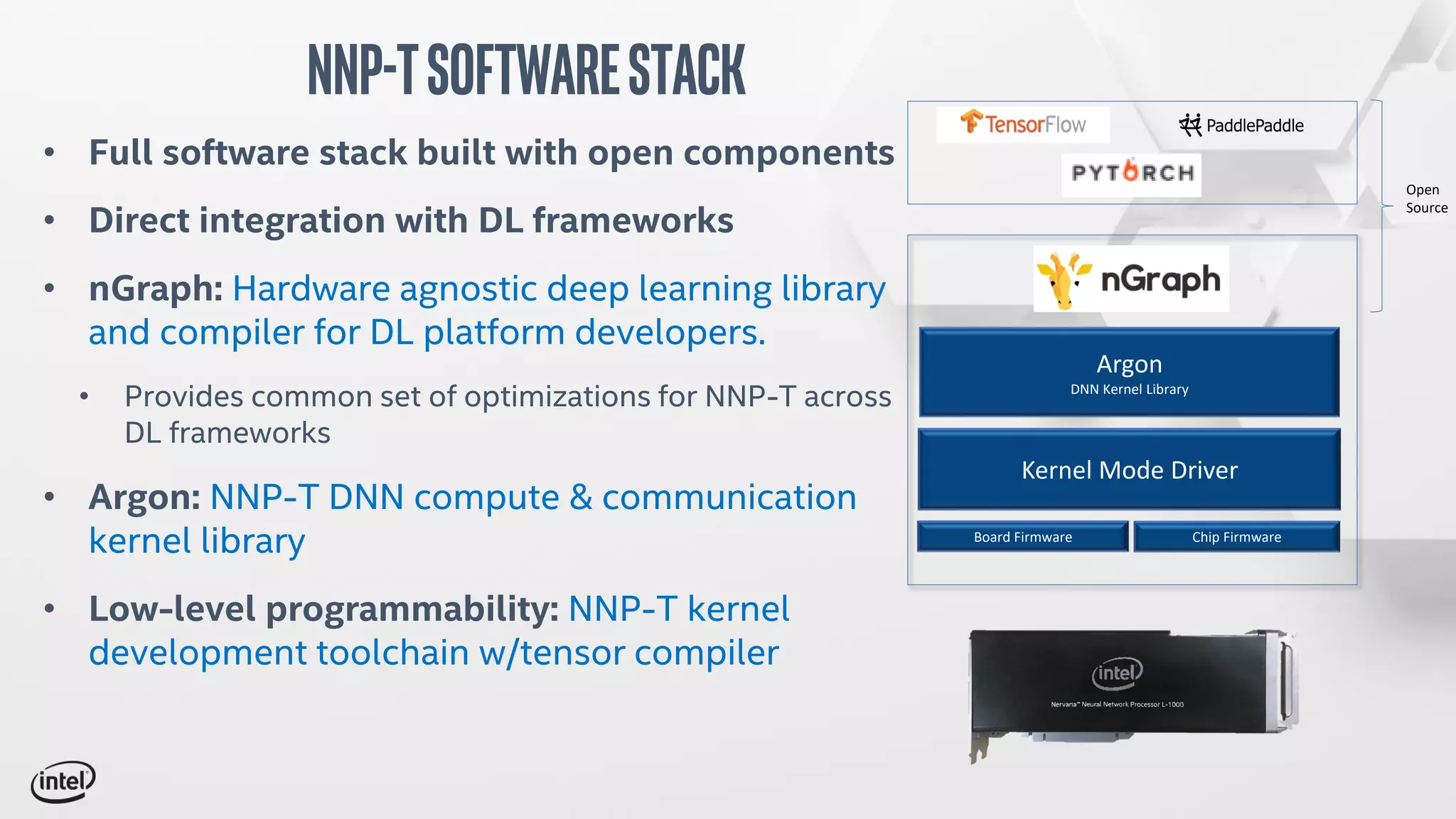

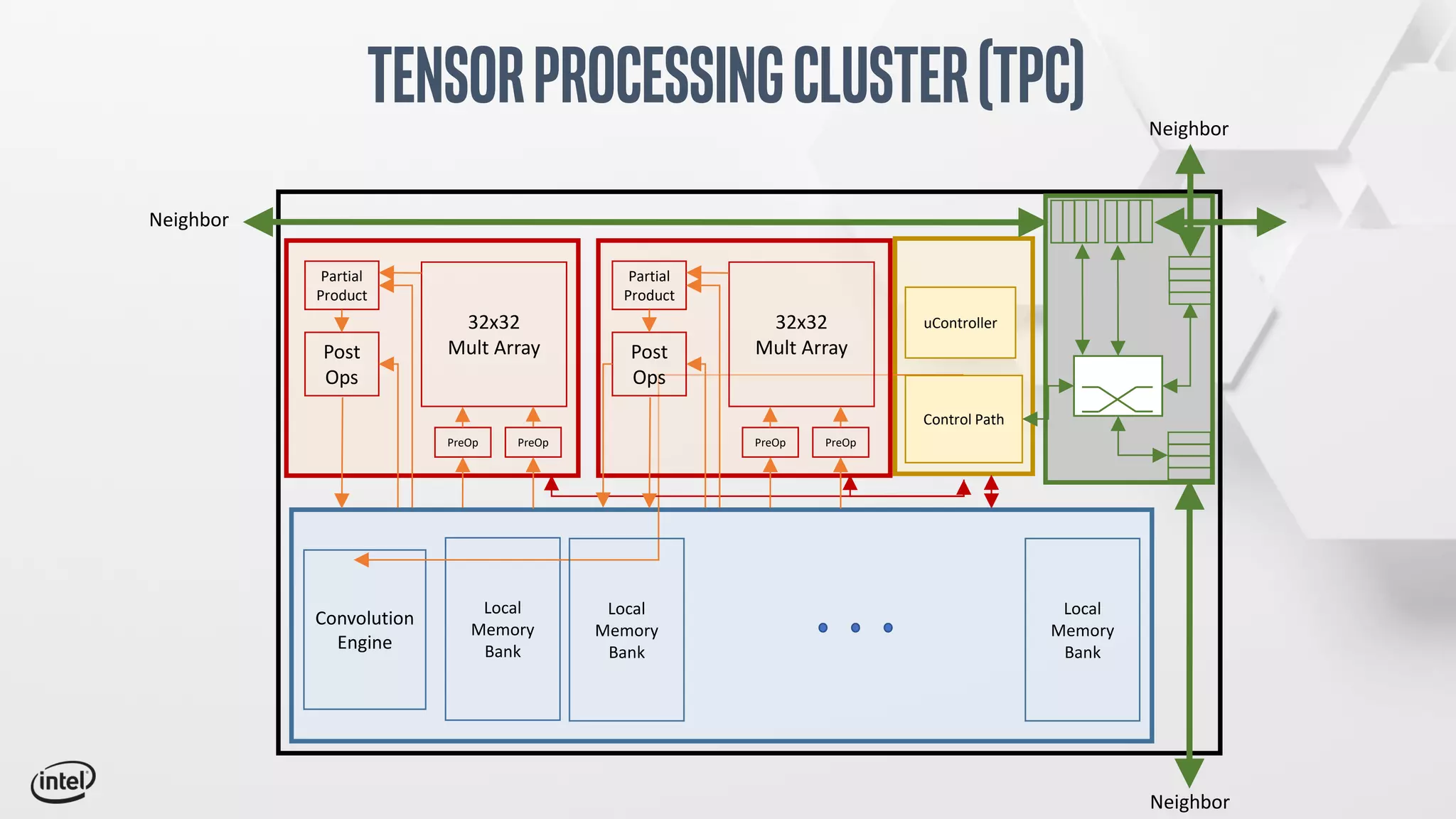

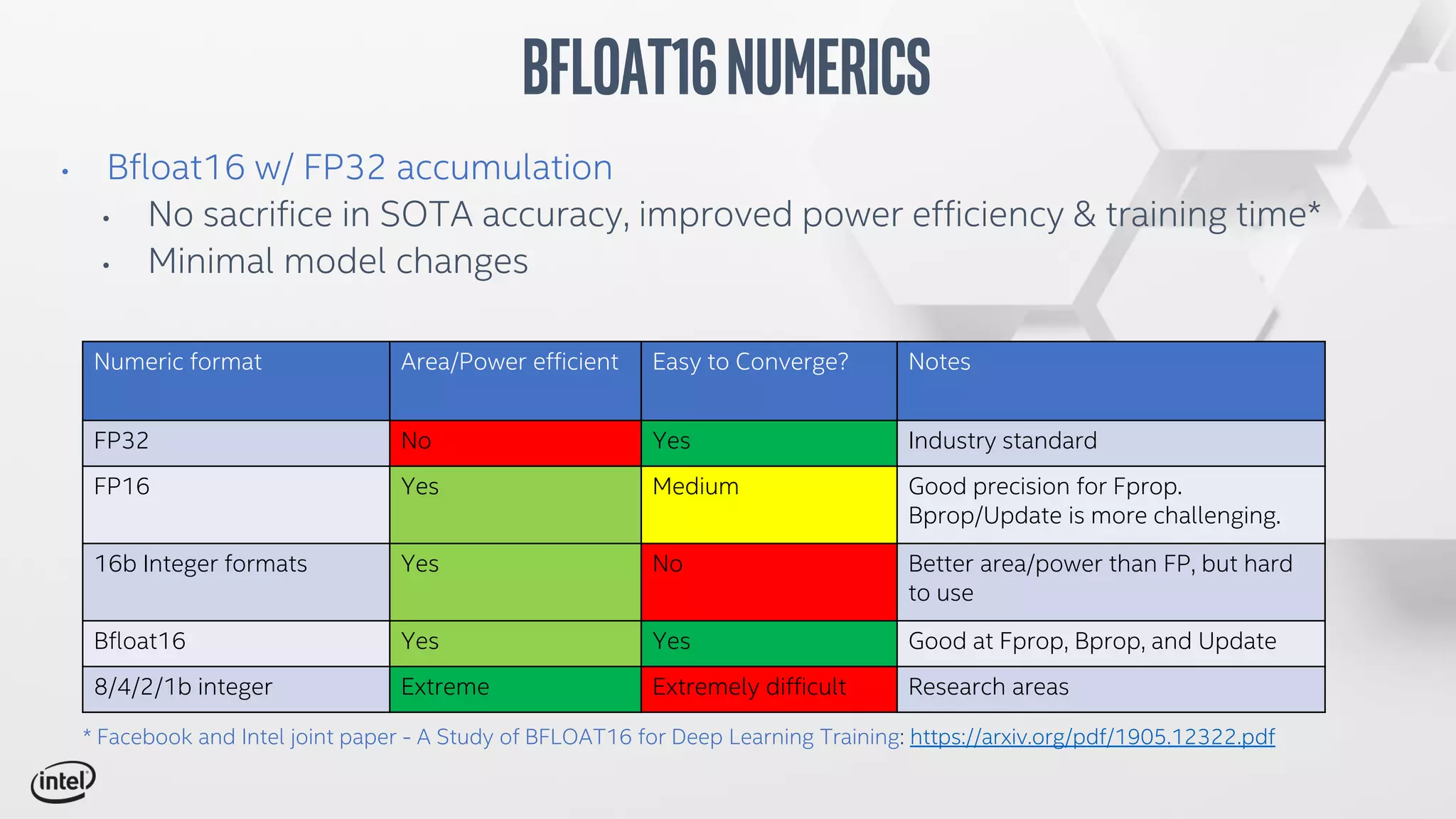

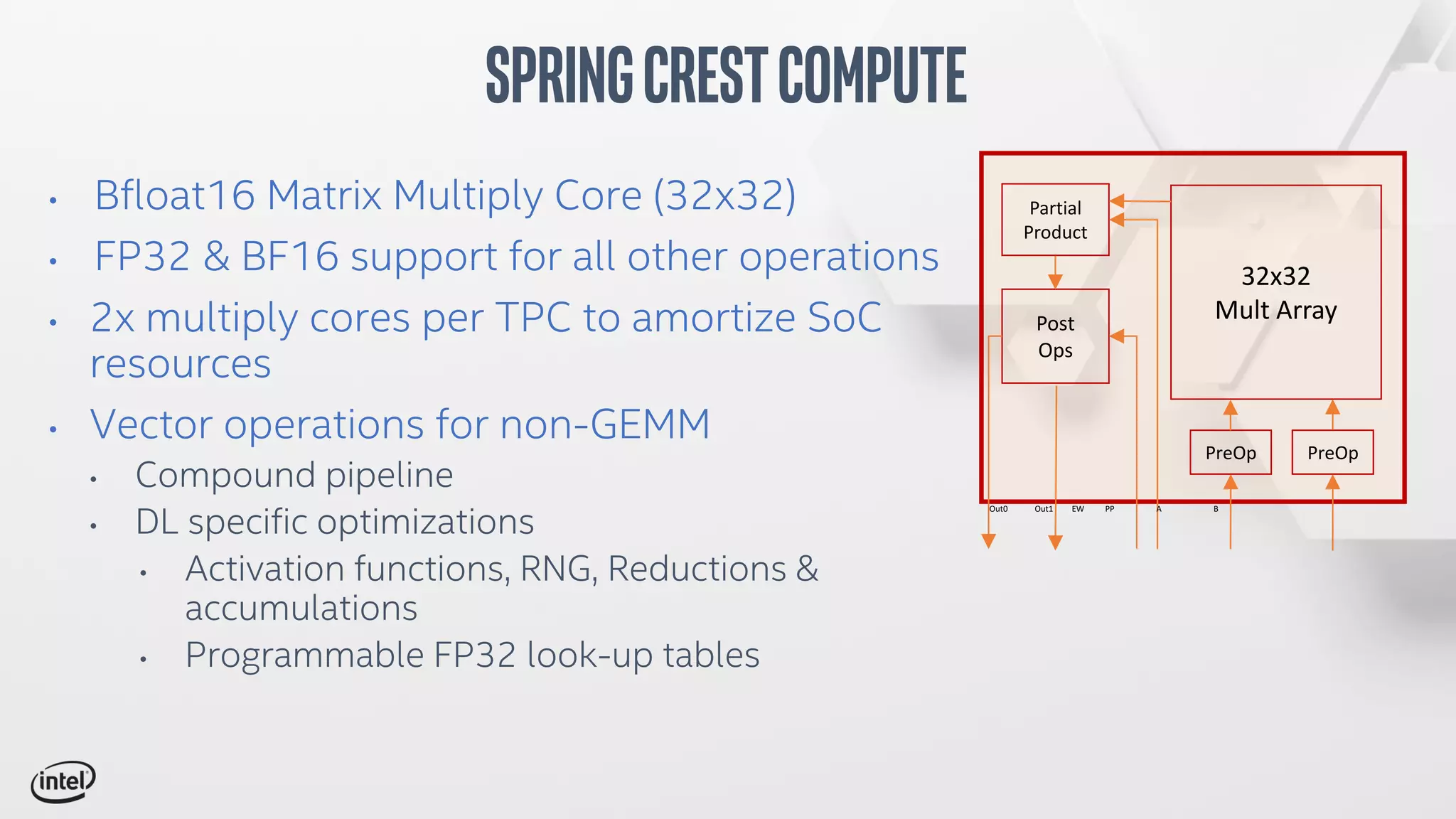

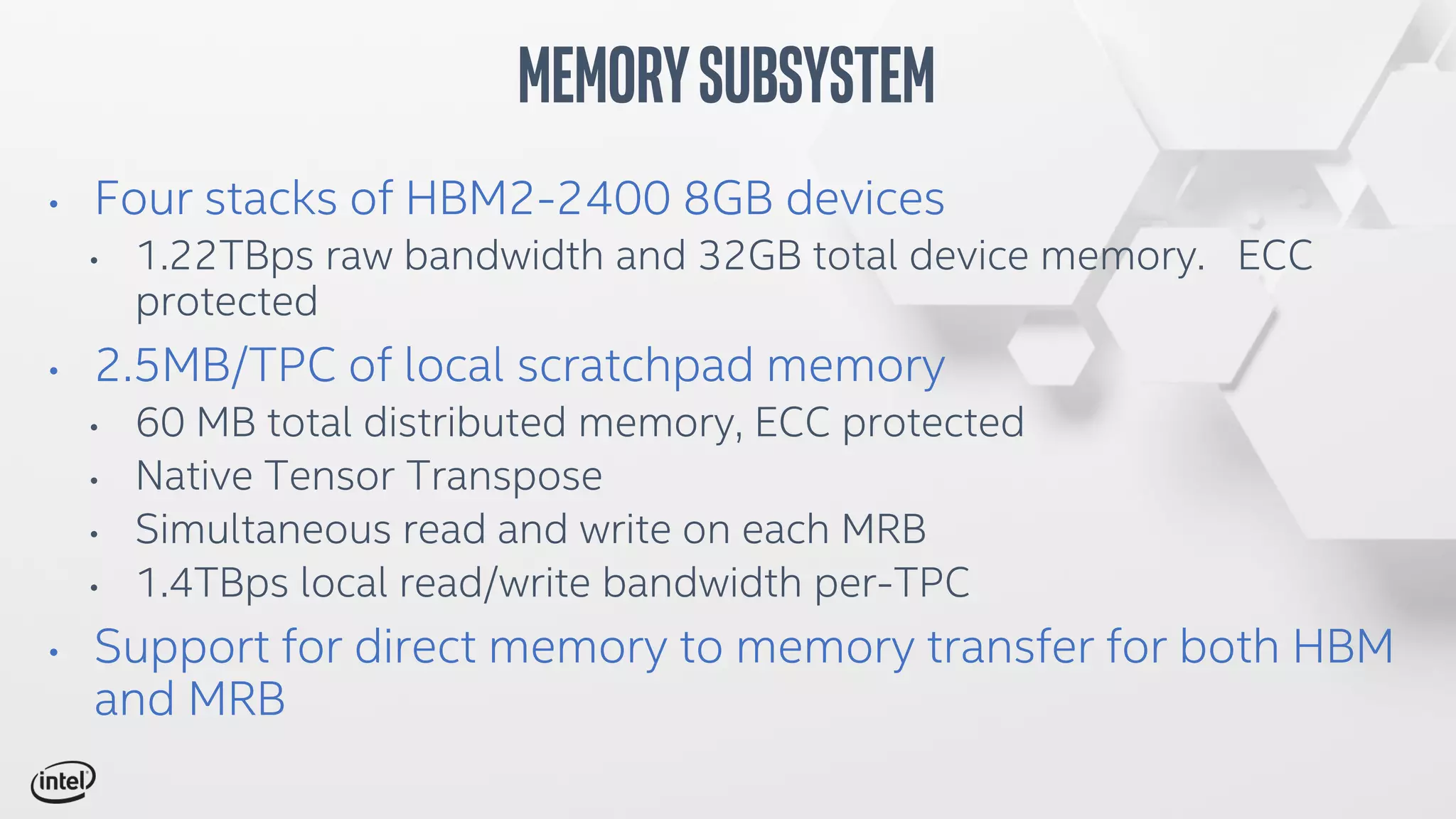

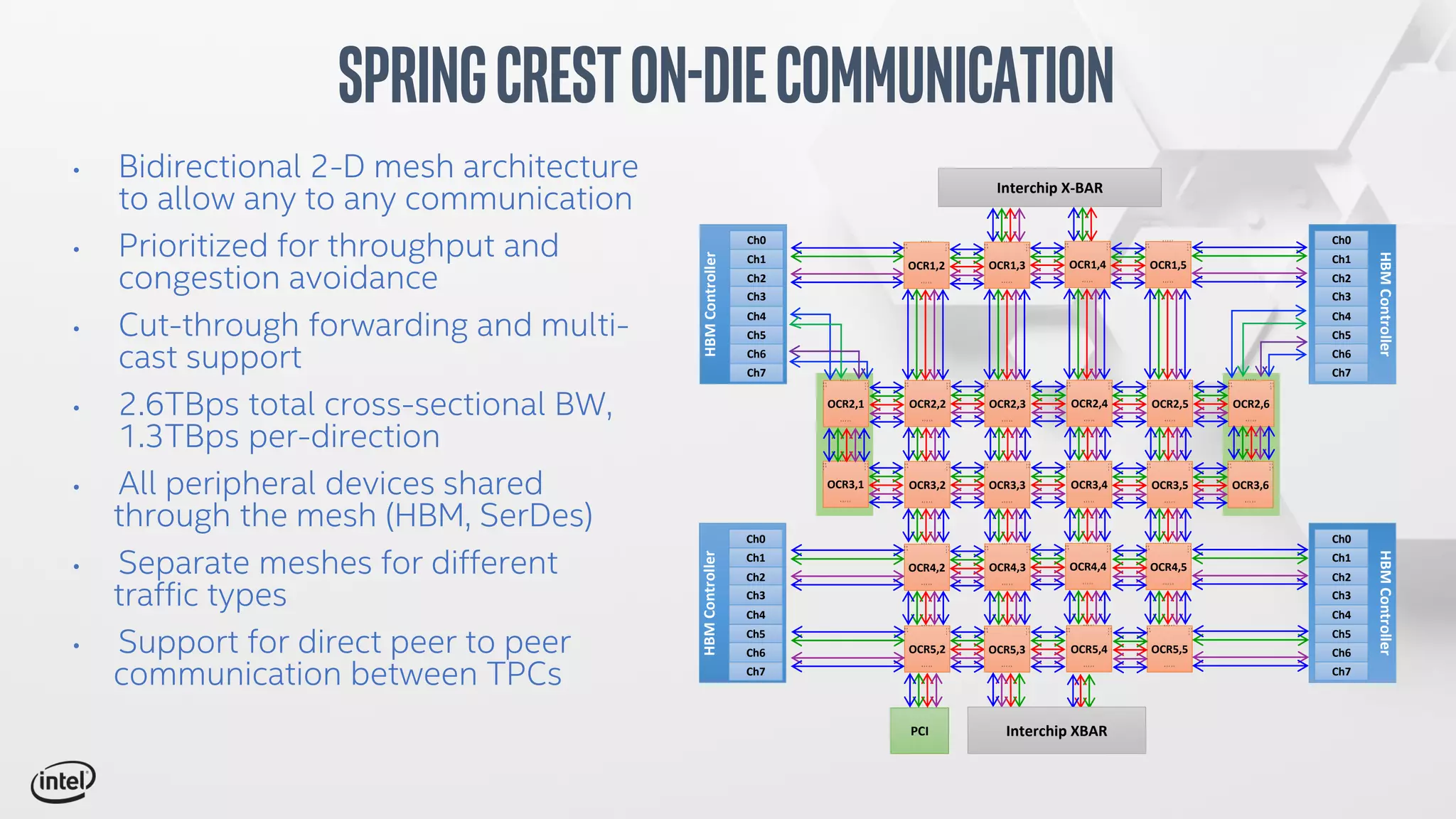

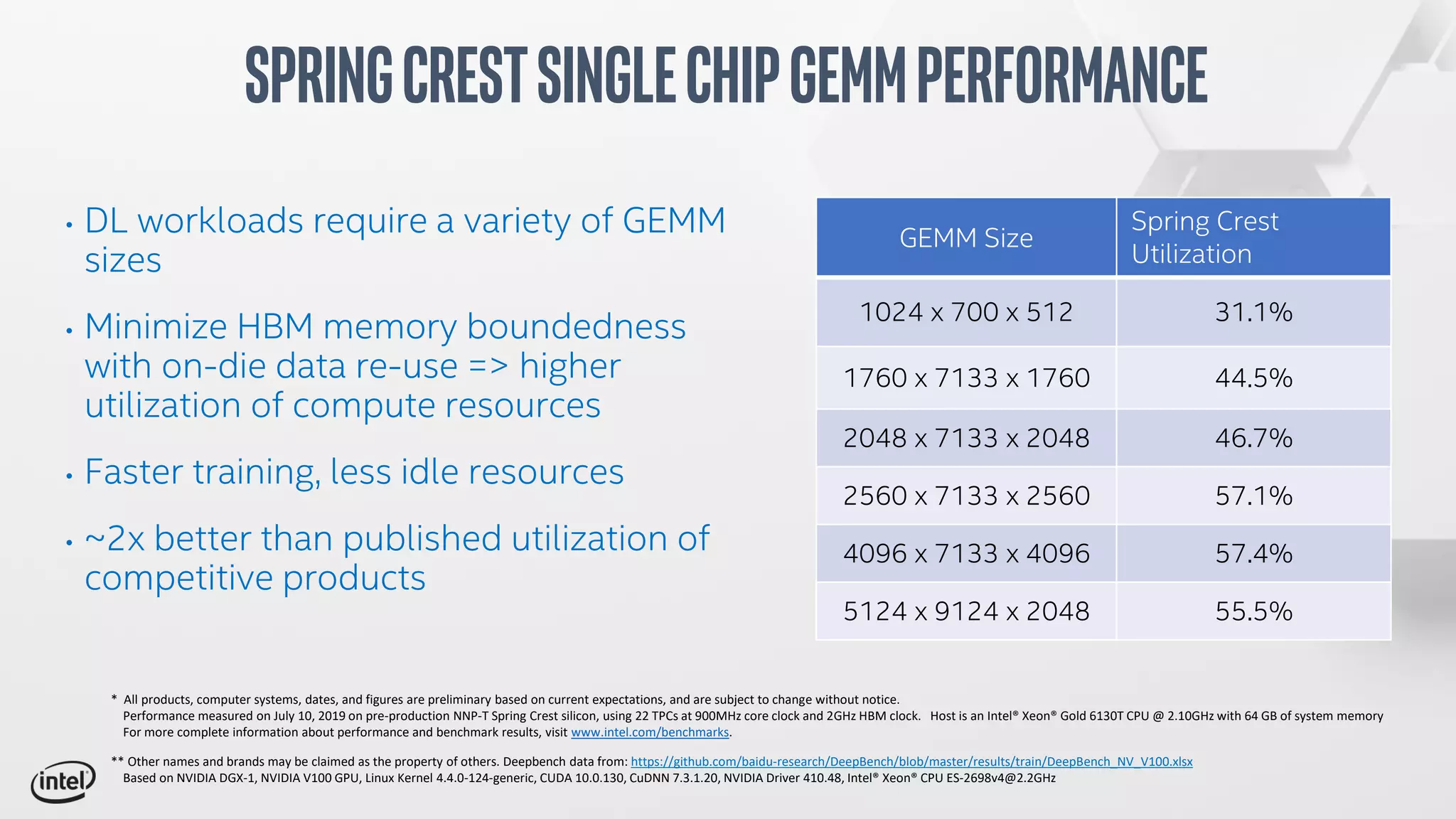

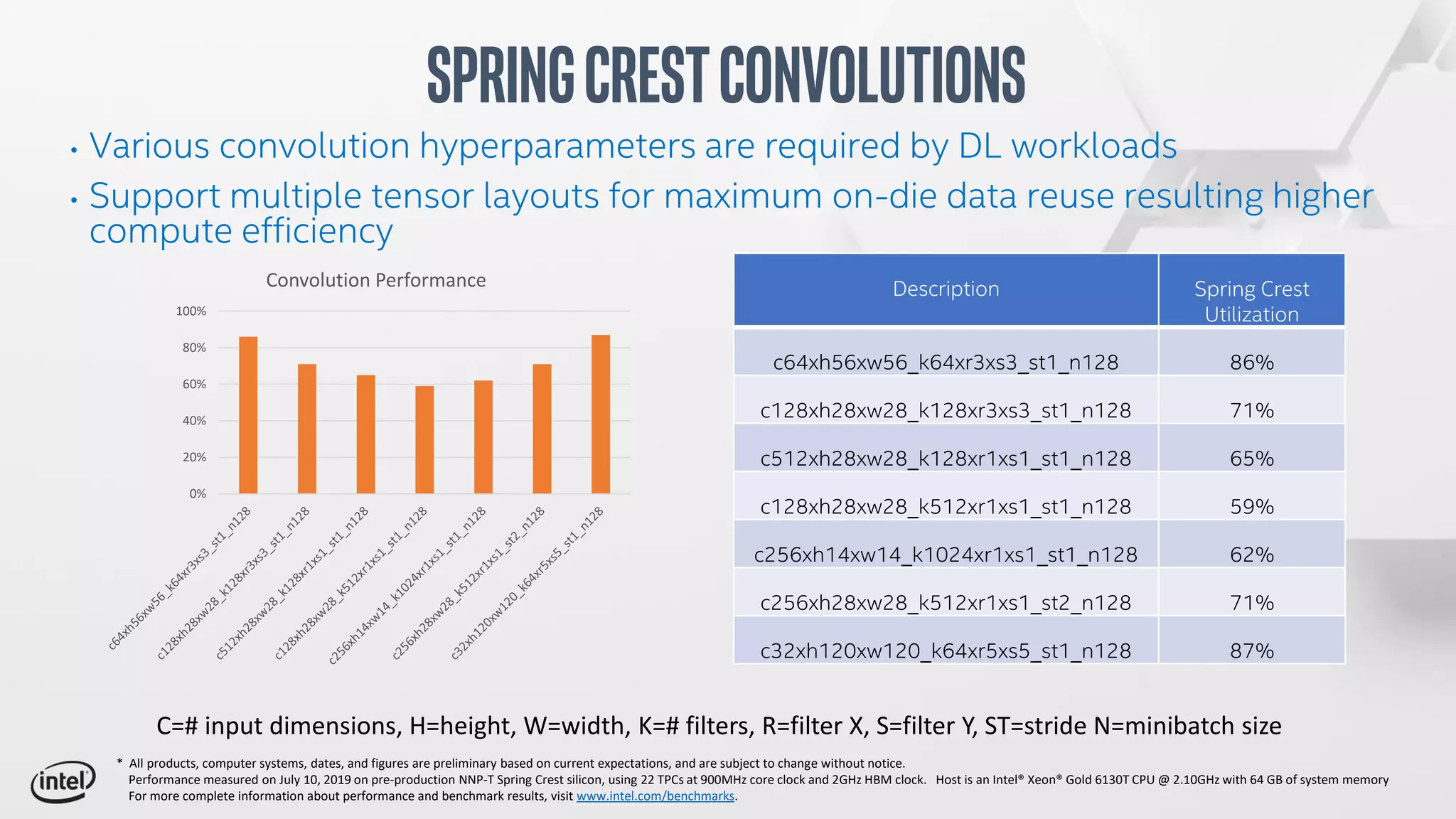

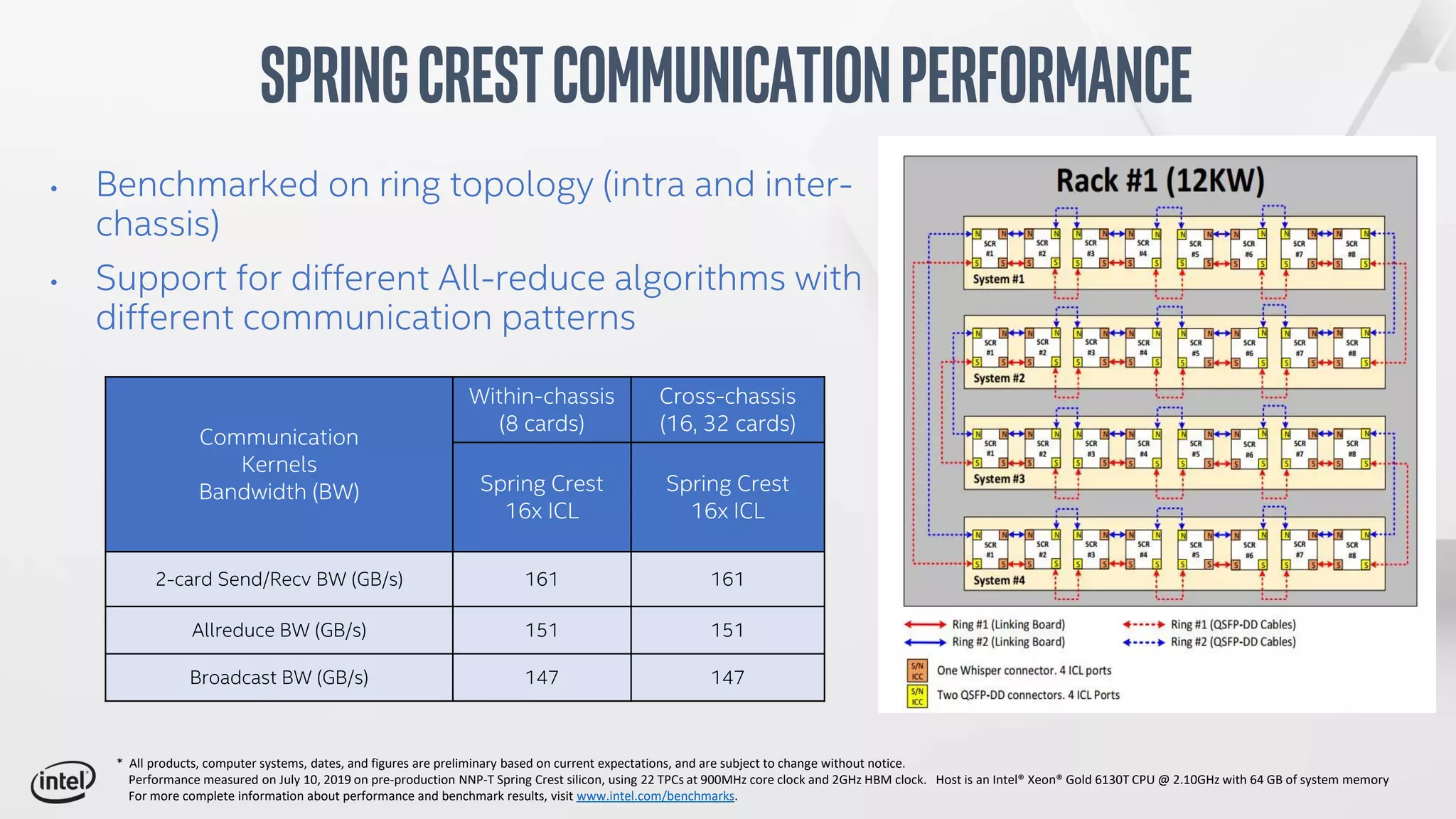

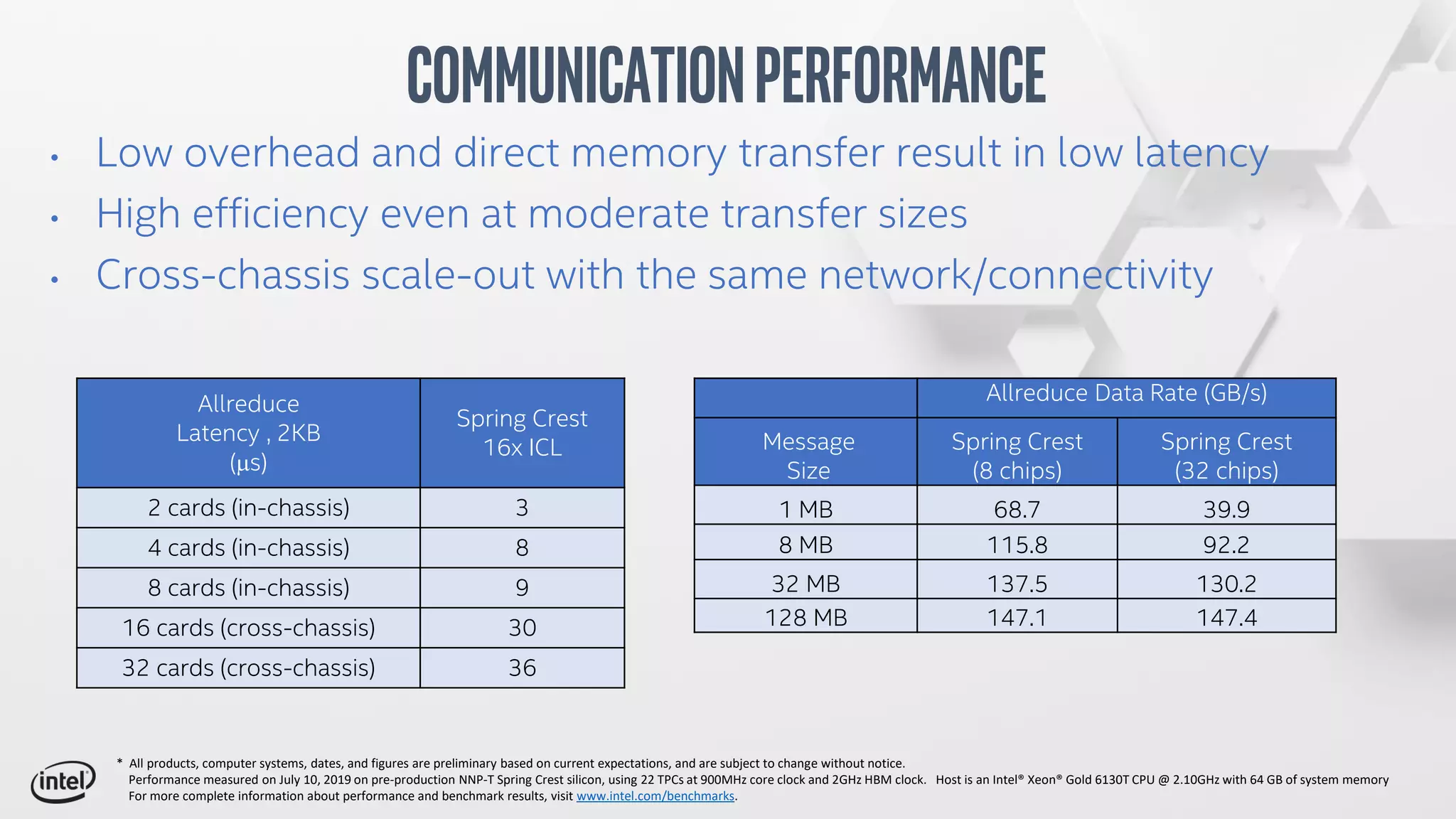

The document describes Intel's Nervana NNP-T deep learning accelerator (code-named Spring Crest), covering its architecture, performance metrics, power efficiency, and software stack. It emphasizes compute, memory, and communication as the key factors for deep learning training at scale, and presents specific design features and benchmark results. It also includes legal disclaimers on product performance and forward-looking statements, noting that specifications are subject to change.