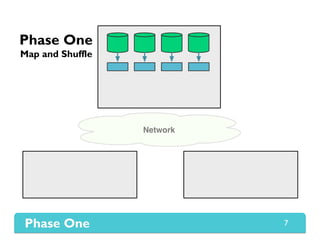

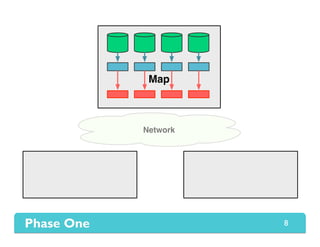

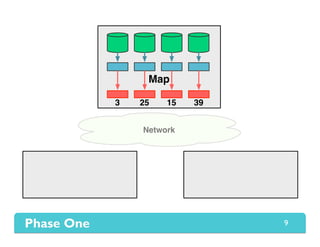

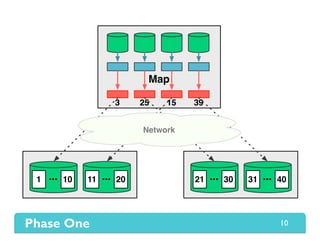

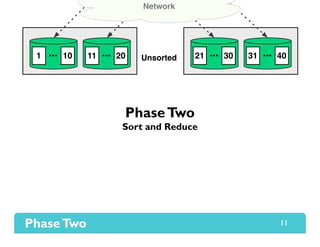

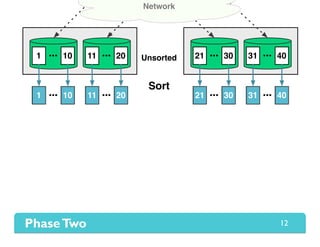

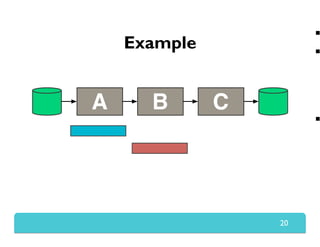

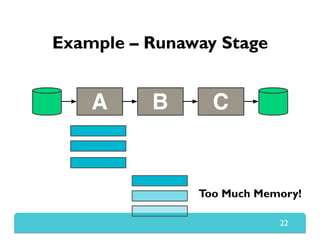

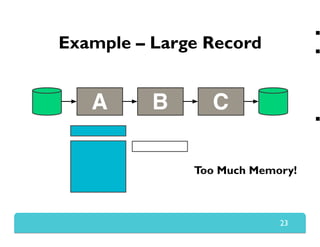

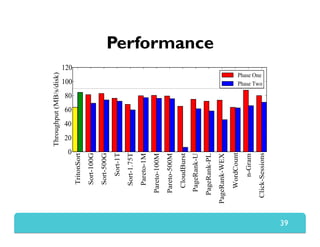

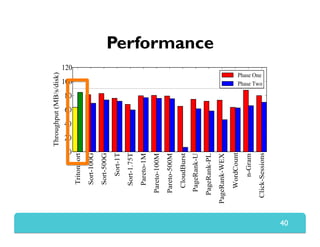

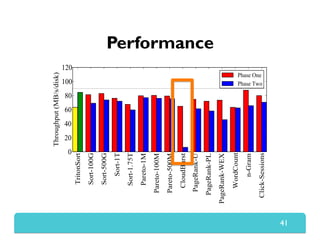

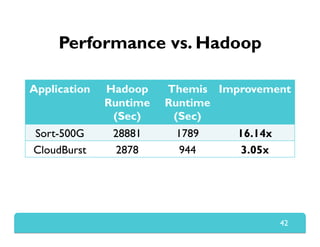

The document presents Themis, an I/O-efficient MapReduce implementation designed to achieve the "2-I/O property": every record is read from and written to disk exactly twice, the minimum possible for data sets too large to fit in memory. It aims to overcome the inefficiencies of existing systems such as Hadoop by managing memory carefully, so that it avoids swapping and spilling data to disk under memory pressure. Across a variety of workloads Themis delivers substantial speedups, in some cases running more than 16 times faster than Hadoop.
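To make the 2-I/O property concrete, the following is a minimal toy sketch, not Themis's actual code. It models a hypothetical two-phase job on an in-memory stand-in for disk (`CountingDisk`, `two_phase_mapreduce`, and the partition naming are all illustrative assumptions). Phase 1 reads each input record once and writes each intermediate record to one partition. Phase 2 reads each partition once, sorts and reduces it entirely in memory, and writes the results once. The sketch assumes every partition fits in memory, which is what lets phase 2 avoid spilling.

```python
import zlib
from collections import defaultdict


class CountingDisk:
    """Hypothetical stand-in for disk storage that counts record-level I/O."""

    def __init__(self):
        self.files = {}
        self.reads = 0
        self.writes = 0

    def load(self, name, records):
        # Place pre-existing input on "disk" without counting it as job I/O.
        self.files[name] = list(records)

    def write(self, name, records):
        self.files.setdefault(name, []).extend(records)
        self.writes += len(records)

    def read(self, name):
        records = list(self.files.get(name, []))
        self.reads += len(records)
        return records


def two_phase_mapreduce(disk, input_name, output_name, map_fn, reduce_fn, num_partitions):
    """Toy two-phase job: each record is read twice and written twice."""
    # Phase 1 (map + shuffle): read each input record once, map it, and
    # append every intermediate (key, value) pair to exactly one partition.
    for record in disk.read(input_name):
        for key, value in map_fn(record):
            partition = zlib.crc32(str(key).encode()) % num_partitions
            disk.write(f"partition-{partition}", [(key, value)])

    # Phase 2 (sort + reduce): read each partition once, group and sort it
    # entirely in memory (assumes each partition fits), reduce, write once.
    for p in range(num_partitions):
        groups = defaultdict(list)
        for key, value in disk.read(f"partition-{p}"):
            groups[key].append(value)
        results = [reduce_fn(key, values) for key, values in sorted(groups.items())]
        disk.write(output_name, results)


if __name__ == "__main__":
    disk = CountingDisk()
    disk.load("input", [5, 3, 9, 1])
    # Identity map and reduce over distinct keys (a sort-like job).
    two_phase_mapreduce(disk, "input", "output",
                        lambda r: [(r, r)], lambda k, vs: k, num_partitions=2)
    print(disk.reads, disk.writes)  # 8 8
```

With four input records, the job performs eight record reads and eight record writes, two of each per record. A system that spills intermediate data or merges runs in several passes would exceed these counts.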