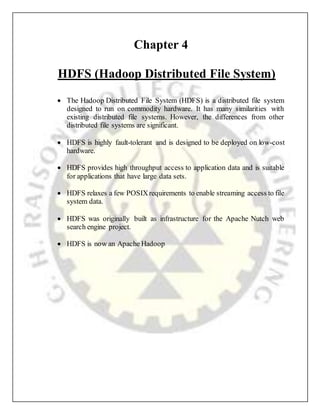

The report discusses the key components and objectives of HDFS: data replication for fault tolerance, an architecture built from a NameNode and DataNodes, and design assumptions such as large data sets, a write-once-read-many access model, and commodity hardware. It gives an overview of how HDFS is designed to store and retrieve large volumes of distributed data reliably.
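As a rough illustration of what replication costs in storage, the sketch below computes how many blocks a file occupies and how many raw bytes it consumes across the cluster. The 128 MiB block size and the replication factor of 3 are assumptions chosen for the example (they match commonly cited Hadoop defaults, but the report does not state them), and the function names are hypothetical.

```python
BLOCK_SIZE = 128 * 1024 * 1024  # assumed block size in bytes (128 MiB)
REPLICATION = 3                 # assumed replication factor


def block_count(file_size: int, block_size: int = BLOCK_SIZE) -> int:
    """Number of blocks needed to hold file_size bytes (integer ceiling)."""
    return -(-file_size // block_size)


def raw_storage(file_size: int, replication: int = REPLICATION) -> int:
    """Bytes consumed cluster-wide, assuming the last block is not padded."""
    return file_size * replication


one_gib = 1024 ** 3
print(block_count(one_gib))              # 8
print(raw_storage(one_gib) // one_gib)   # 3
```

Under these assumptions a 1 GiB file is split into 8 blocks and occupies 3 GiB of raw disk, which is the price paid for surviving the loss of individual servers.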

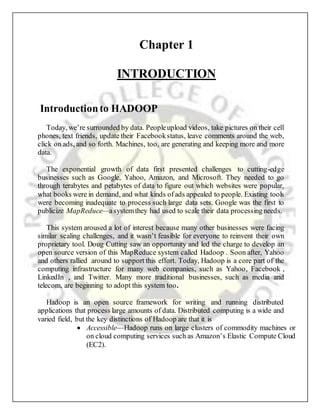

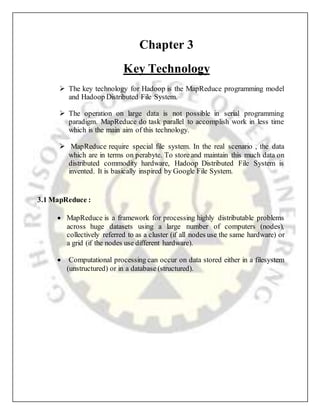

![What is DataNode?](https://image.slidesharecdn.com/hdfsfinal-180402060318/85/HDFS-21-320.jpg)

A DataNode stores data in the Hadoop file system (HDFS). A functional filesystem has more than one DataNode, with data replicated across them.

On startup, a DataNode connects to the NameNode, spinning until that service comes up. It then responds to requests from the NameNode for filesystem operations.

Client applications can talk directly to a DataNode once the NameNode has provided the location of the data. Similarly, MapReduce operations farmed out to TaskTracker instances near a DataNode talk directly to the DataNode to access the files. TaskTracker instances can, and indeed should, be deployed on the same servers that host DataNode instances, so that MapReduce operations are performed close to the data.

DataNode instances can talk to each other, which is what they do when they are replicating data.

There is usually no need to use RAID storage for DataNode data, because data is designed to be replicated across multiple servers rather than across multiple disks on the same server.

An ideal configuration is for a server to have a DataNode, a TaskTracker, and then one physical disk and one TaskTracker slot per CPU. This gives every TaskTracker slot 100% of a CPU and separate disks for reading and writing data.

Avoid using NFS for data storage in production systems.
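The interaction described on this slide can be sketched as a toy in-memory model: the NameNode holds only block locations, DataNodes hold the bytes and forward replicas to one another, and the client reads directly from a DataNode after asking the NameNode where the blocks live. All class and function names here (`DataNode`, `NameNode`, `put`, `get`) are hypothetical and do not correspond to the real Hadoop API; the replication factor of 3 is likewise an assumption.

```python
import random


class DataNode:
    """Toy DataNode: holds block bytes keyed by block id."""

    def __init__(self, name):
        self.name = name
        self.blocks = {}

    def write_block(self, block_id, data, pipeline=()):
        # Store locally, then forward to the next DataNode in the pipeline,
        # imitating DataNode-to-DataNode replication.
        self.blocks[block_id] = data
        if pipeline:
            pipeline[0].write_block(block_id, data, pipeline[1:])

    def read_block(self, block_id):
        return self.blocks[block_id]


class NameNode:
    """Toy NameNode: keeps metadata only, never file contents."""

    def __init__(self, datanodes, replication=3):
        self.datanodes = list(datanodes)
        self.replication = replication
        self.block_map = {}  # path -> list of (block_id, [DataNode, ...])

    def allocate(self, path, block_id):
        # Choose distinct servers so no two replicas share a machine.
        targets = random.sample(self.datanodes, self.replication)
        self.block_map.setdefault(path, []).append((block_id, targets))
        return targets

    def locate(self, path):
        return self.block_map[path]


def put(namenode, path, chunks):
    """Write each chunk as one block through a replication pipeline."""
    for i, data in enumerate(chunks):
        block_id = f"{path}#{i}"
        targets = namenode.allocate(path, block_id)
        targets[0].write_block(block_id, data, tuple(targets[1:]))


def get(namenode, path, failed=()):
    """Ask the NameNode for locations, then read from a live DataNode."""
    out = []
    for block_id, replicas in namenode.locate(path):
        live = [dn for dn in replicas if dn.name not in failed]
        out.append(live[0].read_block(block_id))
    return b"".join(out)


nodes = [DataNode(f"dn{i}") for i in range(4)]
nn = NameNode(nodes)
put(nn, "/logs/a.txt", [b"hello ", b"world"])
print(get(nn, "/logs/a.txt"))                          # b'hello world'
print(get(nn, "/logs/a.txt", failed={"dn0", "dn1"}))   # b'hello world'
```

Because each block sits on three different servers, the read still succeeds with two of the four servers marked as failed. This is the same reasoning behind the advice that RAID is usually unnecessary: redundancy comes from other machines, not from extra disks in one machine.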

