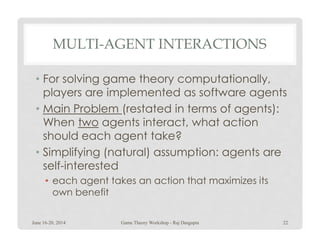

This document provides an overview of a workshop on computational aspects of game theory held June 16-20, 2014, at the Indian Statistical Institute, Kolkata. The workshop was organized by Raj Dasgupta and covered topics including normal form games, Bayesian games, mechanism design, and coalition games. Students presented on topics from the workshop and worked on programming tools for solving game theory problems.

![DEFINITION: NORMAL FORM GAME

• For a game with $I$ players, the normal form representation of the game $\Gamma_N$ specifies, for each player $i$, a set of strategies $S_i$ (with $s_i \in S_i$) and a payoff or utility function $u_i(s_1, s_2, \dots, s_I)$ giving the utility associated with the outcome of the game corresponding to the joint strategy profile $(s_1, s_2, \dots, s_I)$.

• Formal notation: $\Gamma_N = [I, \{S_i\}, \{u_i(\cdot)\}]$

June 16-20, 2014 - Game Theory Workshop - Raj Dasgupta](https://image.slidesharecdn.com/1-introgametheory-140615223457-phpapp02/85/1-intro-game-theory-58-320.jpg)
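
As a minimal sketch of the definition above, a small normal form game can be stored as a set of strategies per player plus a payoff table indexed by joint strategy profile. The game used here (a Prisoner's Dilemma with illustrative payoffs) and the names `strategies`, `payoffs`, and `utility` are assumptions made for illustration; they do not come from the slides.

```python
from itertools import product

# Hypothetical two-player example (Prisoner's Dilemma); payoffs are illustrative.
# S_i: the strategy set of each player i.
strategies = {
    1: ["C", "D"],
    2: ["C", "D"],
}

# payoffs[(s_1, s_2)] = (u_1, u_2) for each joint strategy profile.
payoffs = {
    ("C", "C"): (-1, -1),
    ("C", "D"): (-3, 0),
    ("D", "C"): (0, -3),
    ("D", "D"): (-2, -2),
}

def utility(player, profile):
    """Return u_i(s_1, ..., s_I) for a 1-indexed player and a joint profile."""
    return payoffs[profile][player - 1]

# All joint strategy profiles: the Cartesian product of the strategy sets.
profiles = list(product(*(strategies[i] for i in sorted(strategies))))
```

Here `utility(1, ("D", "C"))` looks up player 1's payoff when player 1 plays `D` and player 2 plays `C`.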

![DEFINITION: STRICTLY DOMINATED STRATEGY

• A strategy $s_i \in S_i$ is strictly dominated for player $i$ in game $\Gamma_N = [I, \{S_i\}, \{u_i(\cdot)\}]$ if there exists another strategy $s_i' \in S_i$ such that for all $s_{-i} \in S_{-i}$

$$u_i(s_i', s_{-i}) > u_i(s_i, s_{-i})$$

• In this case we say that strategy $s_i'$ strictly dominates strategy $s_i$.](https://image.slidesharecdn.com/1-introgametheory-140615223457-phpapp02/85/1-intro-game-theory-59-320.jpg)
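
The strict dominance test can be sketched for the row player of a two-player game, assuming the payoffs are stored as a nested list `u[a][k]` (row strategy `a`, opponent strategy `k`). The matrix `U1` and the function names are hypothetical and chosen only for illustration.

```python
# Row player's payoffs in a hypothetical Prisoner's Dilemma
# (index 0 = cooperate, 1 = defect); not taken from the slides.
U1 = [[-1, -3],
      [0, -2]]

def strictly_dominates(u, a, b):
    """True if row strategy a gives a strictly higher payoff than b
    against every opponent strategy k."""
    return all(u[a][k] > u[b][k] for k in range(len(u[a])))

def strictly_dominated(u, b):
    """True if some other row strategy strictly dominates b."""
    return any(strictly_dominates(u, a, b) for a in range(len(u)) if a != b)
```

With these payoffs, defecting strictly dominates cooperating, so `strictly_dominated(U1, 0)` holds while `strictly_dominated(U1, 1)` does not.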

![DEFINITION: WEAKLY DOMINATED STRATEGY

• A strategy $s_i \in S_i$ is weakly dominated for player $i$ in game $\Gamma_N = [I, \{S_i\}, \{u_i(\cdot)\}]$ if there exists another strategy $s_i' \in S_i$ such that for all $s_{-i} \in S_{-i}$

$$u_i(s_i', s_{-i}) \ge u_i(s_i, s_{-i})$$

with strict inequality for some $s_{-i}$. In this case, we say that strategy $s_i'$ weakly dominates strategy $s_i$.

• A strategy is a weakly dominant strategy for player $i$ in game $\Gamma_N = [I, \{S_i\}, \{u_i(\cdot)\}]$ if it weakly dominates every other strategy in $S_i$.](https://image.slidesharecdn.com/1-introgametheory-140615223457-phpapp02/85/1-intro-game-theory-61-320.jpg)
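
Weak dominance differs from strict dominance only in allowing ties against some opponent strategies, as long as at least one comparison is strict. A minimal sketch for the row player follows; the payoff matrix `U` and the function names are hypothetical.

```python
# Hypothetical row-player payoffs u[a][k]; row 1 ties row 0 against
# column 0 and beats it against column 1.
U = [[1, 0],
     [1, 1]]

def weakly_dominates(u, a, b):
    """True if a is never worse than b and strictly better at least once."""
    cols = range(len(u[a]))
    never_worse = all(u[a][k] >= u[b][k] for k in cols)
    sometimes_better = any(u[a][k] > u[b][k] for k in cols)
    return never_worse and sometimes_better

def is_weakly_dominant(u, a):
    """True if a weakly dominates every other row strategy."""
    return all(weakly_dominates(u, a, b) for b in range(len(u)) if b != a)
```

In this example row 1 weakly dominates row 0 but does not strictly dominate it, because the two rows tie against column 0.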

![DEFINITION: MIXED STRATEGY

• Given player $i$'s (finite) pure strategy set $S_i$, a mixed strategy for player $i$, $\sigma_i : S_i \to [0, 1]$, assigns to each pure strategy $s_i \in S_i$ a probability $\sigma_i(s_i) \ge 0$ that it will be played, where $\sum_{s_i \in S_i} \sigma_i(s_i) = 1$.

• The set of all mixed strategies for player $i$ is denoted by

$$\Delta(S_i) = \{(\sigma_{1i}, \sigma_{2i}, \dots, \sigma_{Mi}) \in \mathbb{R}^M : \sigma_{mi} \ge 0 \text{ for all } m = 1, \dots, M \text{ and } \sum_{m=1}^{M} \sigma_{mi} = 1\}$$

where $M$ is the number of pure strategies in $S_i$.

• $\Delta(S_i)$ is called the mixed extension of $S_i$; a mixed strategy profile is a tuple $(\sigma_1, \dots, \sigma_I)$ with one $\sigma_i \in \Delta(S_i)$ per player.

• The set of pure strategies $s_i$ with $\sigma_i(s_i) > 0$ is called the support of the mixed strategy $\sigma_i$.](https://image.slidesharecdn.com/1-introgametheory-140615223457-phpapp02/85/1-intro-game-theory-71-320.jpg)
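
The two conditions on a mixed strategy (non-negative probabilities that sum to one) and the notion of support can be checked directly. The sketch below assumes a mixed strategy is stored as a dictionary from pure strategy labels to probabilities; the function names and the tolerance `tol` are hypothetical choices.

```python
import math

def is_mixed_strategy(sigma, tol=1e-9):
    """Check sigma(s) >= 0 for every pure strategy and sum of sigma(s) == 1
    (up to a small floating-point tolerance)."""
    non_negative = all(p >= 0 for p in sigma.values())
    sums_to_one = math.isclose(sum(sigma.values()), 1.0, abs_tol=tol)
    return non_negative and sums_to_one

def support(sigma):
    """Pure strategies that are played with strictly positive probability."""
    return {s for s, p in sigma.items() if p > 0}
```

For example, `{"H": 0.5, "T": 0.5}` is a valid mixed strategy with support `{"H", "T"}`, and a pure strategy is the special case that puts probability 1 on a single label.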

![DEFINITION: STRICT DOMINATION IN MIXED STRATEGIES

• A strategy $\sigma_i \in \Delta(S_i)$ is strictly dominated for player $i$ in game $\Gamma_N = [I, \{\Delta(S_i)\}, \{u_i(\cdot)\}]$ if there exists another strategy $\sigma_i' \in \Delta(S_i)$ such that for all $\sigma_{-i} \in \prod_{j \ne i} \Delta(S_j)$

$$u_i(\sigma_i', \sigma_{-i}) > u_i(\sigma_i, \sigma_{-i})$$

• In this case we say that strategy $\sigma_i'$ strictly dominates strategy $\sigma_i$.

• A strategy $\sigma_i$ is a strictly dominant strategy for player $i$ in game $\Gamma_N = [I, \{\Delta(S_i)\}, \{u_i(\cdot)\}]$ if it strictly dominates every other strategy in $\Delta(S_i)$.](https://image.slidesharecdn.com/1-introgametheory-140615223457-phpapp02/85/1-intro-game-theory-72-320.jpg)
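
The definition above evaluates $u_i$ at mixed strategies. Under the usual expected-utility assumption (players randomize independently), this is the probability-weighted sum of the pure-profile payoffs. The sketch below does this for the row player of a two-player game; the matrix `U1` (Matching Pennies) and the function name are hypothetical.

```python
# Row player's payoffs in a hypothetical Matching Pennies game.
U1 = [[1, -1],
      [-1, 1]]

def expected_utility(u, sigma_row, sigma_col):
    """Expected payoff of the row player when both players mix independently:
    sum over pure profiles (a, k) of sigma_row[a] * sigma_col[k] * u[a][k]."""
    return sum(
        sigma_row[a] * sigma_col[k] * u[a][k]
        for a in range(len(u))
        for k in range(len(u[a]))
    )
```

Calling `expected_utility(U1, [0.5, 0.5], [0.5, 0.5])` averages the four payoffs with equal weight.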

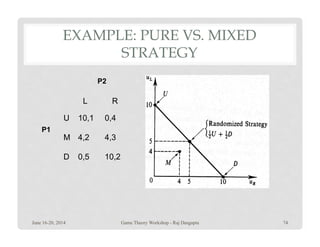

![PURE VS. MIXED STRATEGY

• Player $i$'s pure strategy $s_i \in S_i$ is strictly dominated in game $\Gamma_N = [I, \{\Delta(S_i)\}, \{u_i(\cdot)\}]$ if and only if there exists another strategy $\sigma_i' \in \Delta(S_i)$ such that

$$u_i(\sigma_i', s_{-i}) > u_i(s_i, s_{-i})$$

for all $s_{-i} \in S_{-i}$.](https://image.slidesharecdn.com/1-introgametheory-140615223457-phpapp02/85/1-intro-game-theory-73-320.jpg)
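
A pure strategy can be strictly dominated by a mixed strategy even when no pure strategy dominates it. The sketch below only verifies a given candidate mixture against every pure opponent strategy, as the condition above requires; finding such a mixture in general would need a linear program, which is not shown. The matrix `U1` and the function name are hypothetical.

```python
# Hypothetical row-player payoffs: rows T, M, B against columns L, R.
U1 = [[3, 0],   # T
      [0, 3],   # M
      [1, 1]]   # B

def mixed_strictly_dominates(u, sigma, b):
    """True if the mixed row strategy sigma (a list of probabilities, one per
    row) yields a strictly higher expected payoff than pure row b against
    every pure column strategy."""
    n_cols = len(u[b])
    return all(
        sum(sigma[a] * u[a][k] for a in range(len(u))) > u[b][k]
        for k in range(n_cols)
    )
```

Here neither T nor M alone dominates B, but mixing them equally pays 1.5 against either column, which exceeds B's payoff of 1.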

![BEST RESPONSE AND NASH EQUILIBRIUM

• In a game $\Gamma_N = [I, \{\Delta(S_i)\}, \{u_i(\cdot)\}]$, strategy $s_i^*$ is a best response for player $i$ to its opponents' strategies $s_{-i}$ if

$$u_i(s_i^*, s_{-i}) \ge u_i(s_i, s_{-i})$$

for all $s_i \in \Delta(S_i)$.

• A strategy profile $s^* = (s_1^*, s_2^*, \dots, s_I^*)$ is a Nash equilibrium if, for every player $i = 1, \dots, I$, $s_i^*$ is a best response to $s_{-i}^*$.](https://image.slidesharecdn.com/1-introgametheory-140615223457-phpapp02/85/1-intro-game-theory-75-320.jpg)
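
For a small two-player game, pure-strategy Nash equilibria can be found by checking the best-response condition at every pure profile. This is a brute-force sketch restricted to pure strategies, so it can miss equilibria that exist only in mixed strategies. The matrices `U1` and `U2` (a Prisoner's Dilemma) and the function name are hypothetical.

```python
# Hypothetical Prisoner's Dilemma: U1[a][k] is the row player's payoff,
# U2[a][k] the column player's (index 0 = cooperate, 1 = defect).
U1 = [[-1, -3],
      [0, -2]]
U2 = [[-1, 0],
      [-3, -2]]

def pure_nash_equilibria(u1, u2):
    """Return all pure profiles (a, k) where neither player can gain
    by deviating unilaterally."""
    rows, cols = range(len(u1)), range(len(u1[0]))
    equilibria = []
    for a in rows:
        for k in cols:
            row_best = all(u1[a][k] >= u1[b][k] for b in rows)
            col_best = all(u2[a][k] >= u2[a][c] for c in cols)
            if row_best and col_best:
                equilibria.append((a, k))
    return equilibria
```

For these payoffs the only profile that passes both checks is mutual defection, `(1, 1)`.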

![RATIONALIZABLE STRATEGIES

• In game $\Gamma_N = [I, \{\Delta(S_i)\}, \{u_i(\cdot)\}]$, the strategies in $\Delta(S_i)$ that survive the iterated removal of strategies that are never a best response are known as player $i$'s rationalizable strategies.](https://image.slidesharecdn.com/1-introgametheory-140615223457-phpapp02/85/1-intro-game-theory-78-320.jpg)
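
Testing whether a strategy is never a best response requires reasoning over mixed beliefs, typically with a linear program. The sketch below swaps in a simpler, coarser procedure: iterated elimination of strategies that are strictly dominated by another pure strategy. Every rationalizable strategy survives this procedure, but a survivor is not guaranteed to be rationalizable. The payoff matrices and the function name are hypothetical.

```python
# Hypothetical two-player game: rows U, D and columns L, M, R.
U1 = [[1, 1, 0],
      [0, 0, 2]]
U2 = [[0, 2, 1],
      [3, 1, 0]]

def iterated_pure_elimination(u1, u2):
    """Repeatedly delete rows and columns that are strictly dominated by
    another surviving pure strategy; return the surviving indices."""
    rows = list(range(len(u1)))
    cols = list(range(len(u1[0])))
    changed = True
    while changed:
        changed = False
        for b in list(rows):
            # Row b goes if some surviving row a beats it on every surviving column.
            if any(all(u1[a][c] > u1[b][c] for c in cols) for a in rows if a != b):
                rows.remove(b)
                changed = True
        for d in list(cols):
            # Column d goes if some surviving column c beats it on every surviving row.
            if any(all(u2[r][c] > u2[r][d] for r in rows) for c in cols if c != d):
                cols.remove(d)
                changed = True
    return rows, cols
```

In this example column R is removed first, which makes row D dominated, which in turn makes column L dominated, leaving the single profile (U, M).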

![REGRET

• An agent $i$'s regret for playing a strategy $s_i$ when the other agents' joint strategy profile is $s_{-i}$ is defined as

$$\left[\max_{s_i' \in S_i} u_i(s_i', s_{-i})\right] - u_i(s_i, s_{-i})$$

• An agent $i$'s max regret for playing $s_i$ is defined as

$$\max_{s_{-i} \in S_{-i}} \left( \left[\max_{s_i' \in S_i} u_i(s_i', s_{-i})\right] - u_i(s_i, s_{-i}) \right)$$

• An agent $i$'s minimax regret strategy is defined as

$$\arg\min_{s_i \in S_i} \max_{s_{-i} \in S_{-i}} \left( \left[\max_{s_i' \in S_i} u_i(s_i', s_{-i})\right] - u_i(s_i, s_{-i}) \right)$$](https://image.slidesharecdn.com/1-introgametheory-140615223457-phpapp02/85/1-intro-game-theory-84-320.jpg)
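
The three regret quantities above nest inside one another, which a short sketch makes concrete for the row player of a two-player game. The payoff matrix `U` and the function names are hypothetical; ties in the final `min` are broken in favor of the lowest index.

```python
# Hypothetical row-player payoffs u[a][k].
U = [[5, 0],
     [4, 2]]

def regret(u, a, k):
    """Best payoff achievable against column k minus the payoff of row a."""
    return max(u[b][k] for b in range(len(u))) - u[a][k]

def max_regret(u, a):
    """Worst-case regret of row a over all opponent strategies."""
    return max(regret(u, a, k) for k in range(len(u[a])))

def minimax_regret_strategy(u):
    """Row strategy whose worst-case regret is smallest."""
    return min(range(len(u)), key=lambda a: max_regret(u, a))
```

With this matrix, row 0 has regrets 0 and 2 (max regret 2) and row 1 has regrets 1 and 0 (max regret 1), so the minimax regret strategy is row 1.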