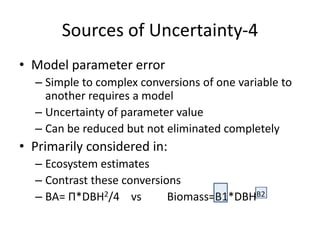

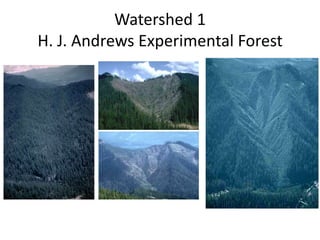

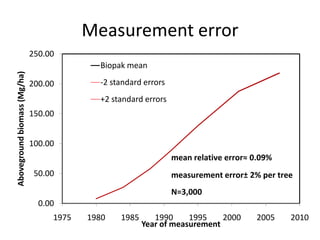

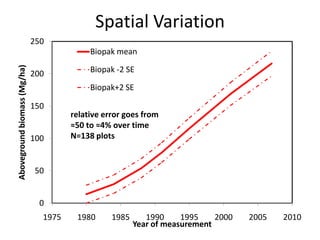

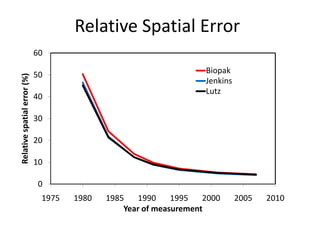

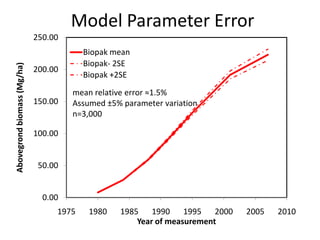

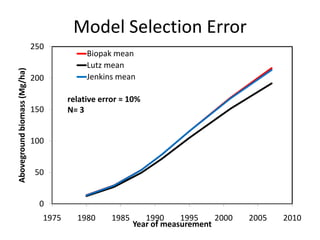

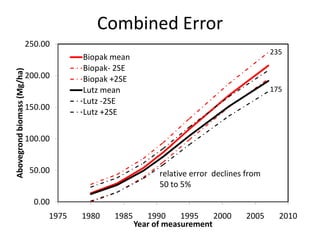

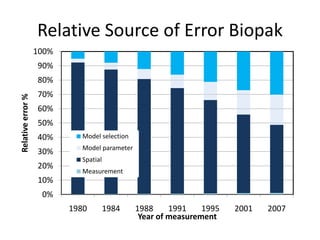

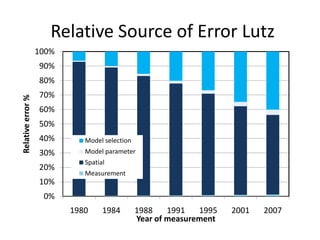

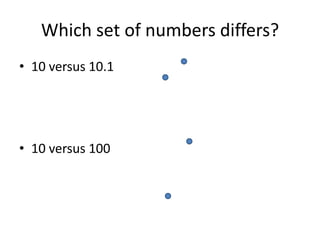

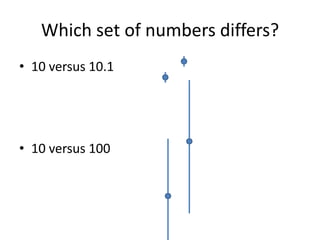

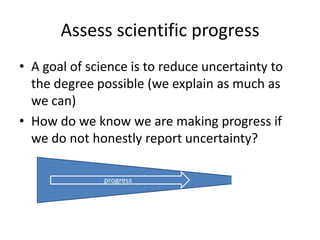

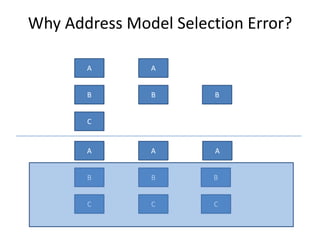

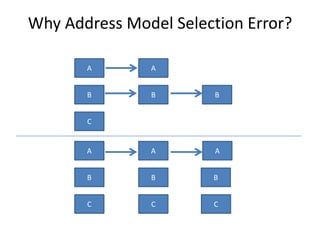

This document discusses uncertainty analysis and why it matters for synthesis science. It identifies four main sources of uncertainty: measurement error, natural variation, model parameter error, and model selection error. In a case study at the H.J. Andrews Experimental Forest, it analyzes how much each source contributes, over time, to the uncertainty in aboveground biomass estimates. It argues that quantifying and reporting uncertainty is essential for assessing scientific progress, improving models and knowledge, and developing standard guidelines for reporting uncertainty in synthesis science.
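One way to picture "relative contribution of each source" is a one-at-a-time Monte Carlo experiment: perturb a single source, hold the others at nominal values, and compare the resulting variances in total biomass. The sketch below is illustrative only and is not the method used in the case study. The allometric form `a * D**b`, every coefficient and error magnitude, and the names `biomass`, `simulate_variance`, and `variance_shares` are assumptions made up for the example. It also covers a single survey date; a time series would repeat the calculation per date.

```python
import random
import statistics

# The four uncertainty sources named in the summary.
SOURCES = ("measurement", "natural_variation", "parameter", "model_selection")


def biomass(diameter_cm, a, b):
    """Hypothetical allometric model: biomass = a * D^b (illustrative only)."""
    return a * diameter_cm ** b


def simulate_variance(source, rng, diameters, n_draws=2000):
    """Variance of total biomass when only `source` is perturbed."""
    totals = []
    for _ in range(n_draws):
        a, b = 0.1, 2.4          # nominal coefficients (made up)
        trees = diameters
        if source == "measurement":
            # Assumed 2% relative error on each diameter reading.
            trees = [d * (1 + rng.gauss(0, 0.02)) for d in diameters]
        elif source == "natural_variation":
            # Bootstrap resample of trees as a stand-in for sampling variation.
            trees = [rng.choice(diameters) for _ in diameters]
        elif source == "parameter":
            # Assumed standard errors on the fitted coefficients.
            a = rng.gauss(0.1, 0.005)
            b = rng.gauss(2.4, 0.03)
        elif source == "model_selection":
            # Pick between two hypothetical candidate equations.
            a, b = rng.choice([(0.1, 2.4), (0.08, 2.5)])
        totals.append(sum(biomass(d, a, b) for d in trees))
    return statistics.pvariance(totals)


def variance_shares(diameters, seed=0):
    """Each source's share of the summed one-at-a-time variances."""
    rng = random.Random(seed)
    variances = {s: simulate_variance(s, rng, diameters) for s in SOURCES}
    total = sum(variances.values())
    return {s: v / total for s, v in variances.items()}


if __name__ == "__main__":
    example_diameters = [12.0, 25.5, 40.2, 18.3, 61.0, 33.7]  # cm, made up
    for source, share in variance_shares(example_diameters).items():
        print(f"{source:18s} {share:6.1%}")
```

Because each source is varied in isolation, the shares ignore interactions between sources, so they are a rough ranking and not a full variance decomposition.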