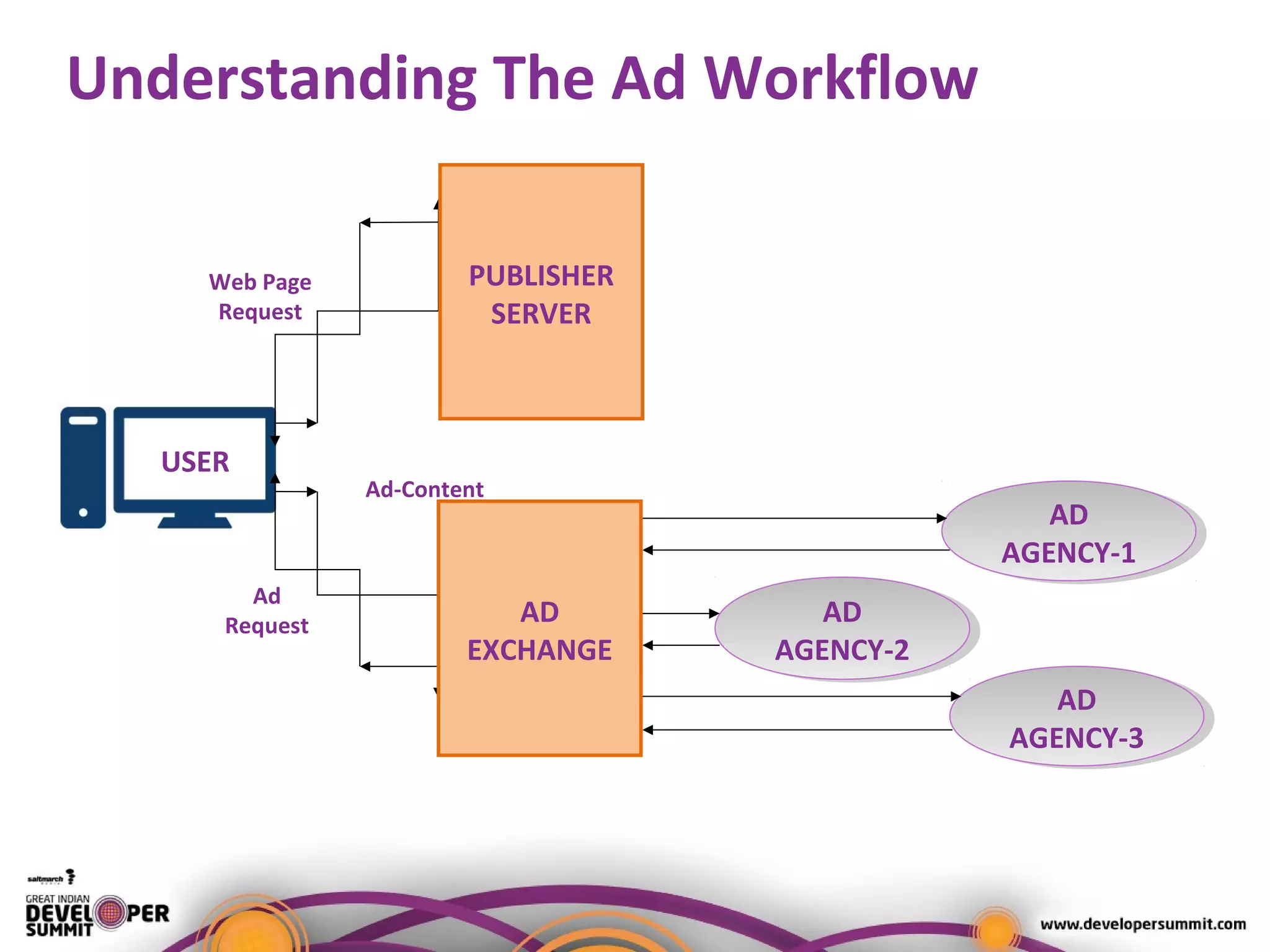

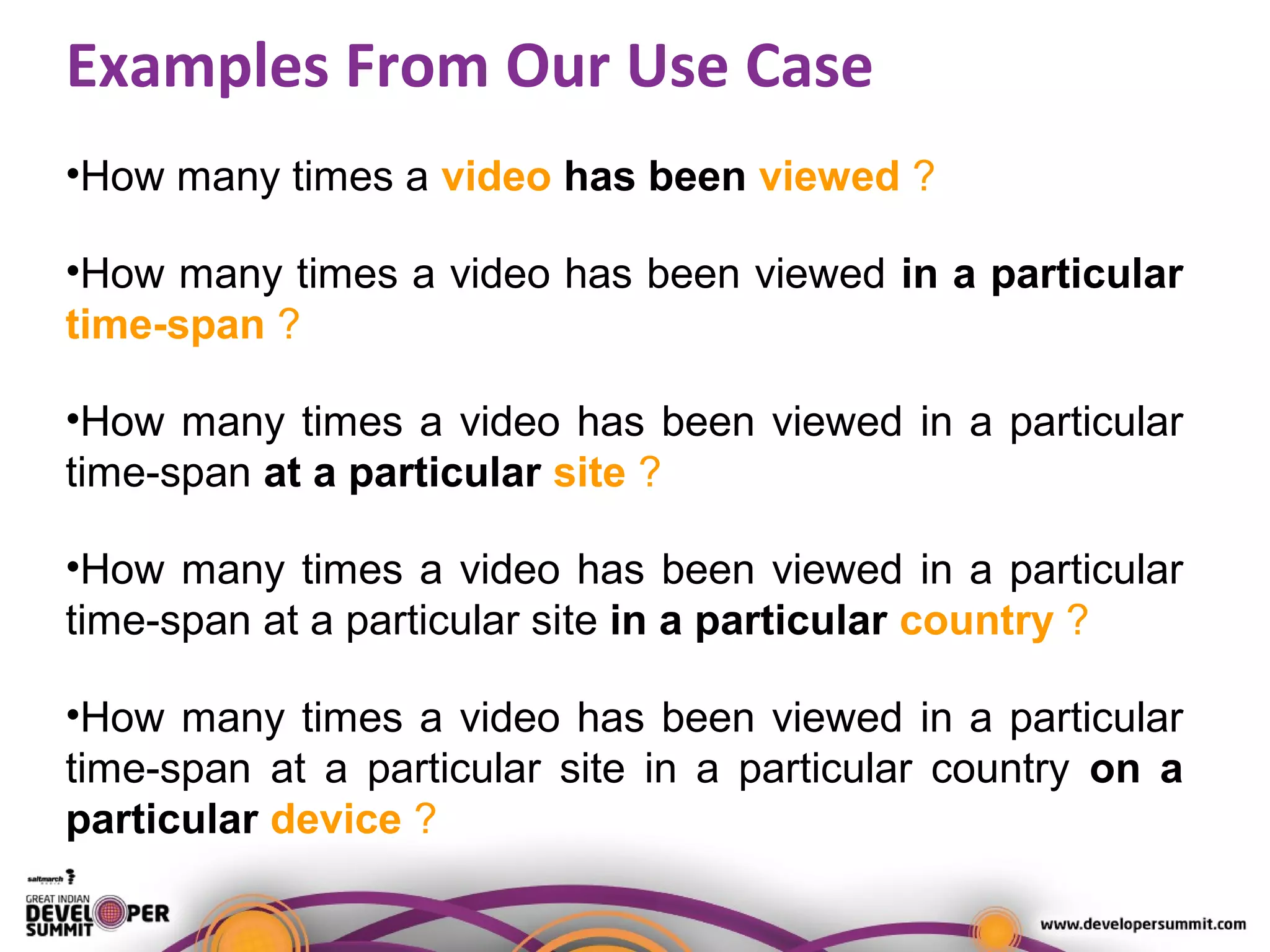

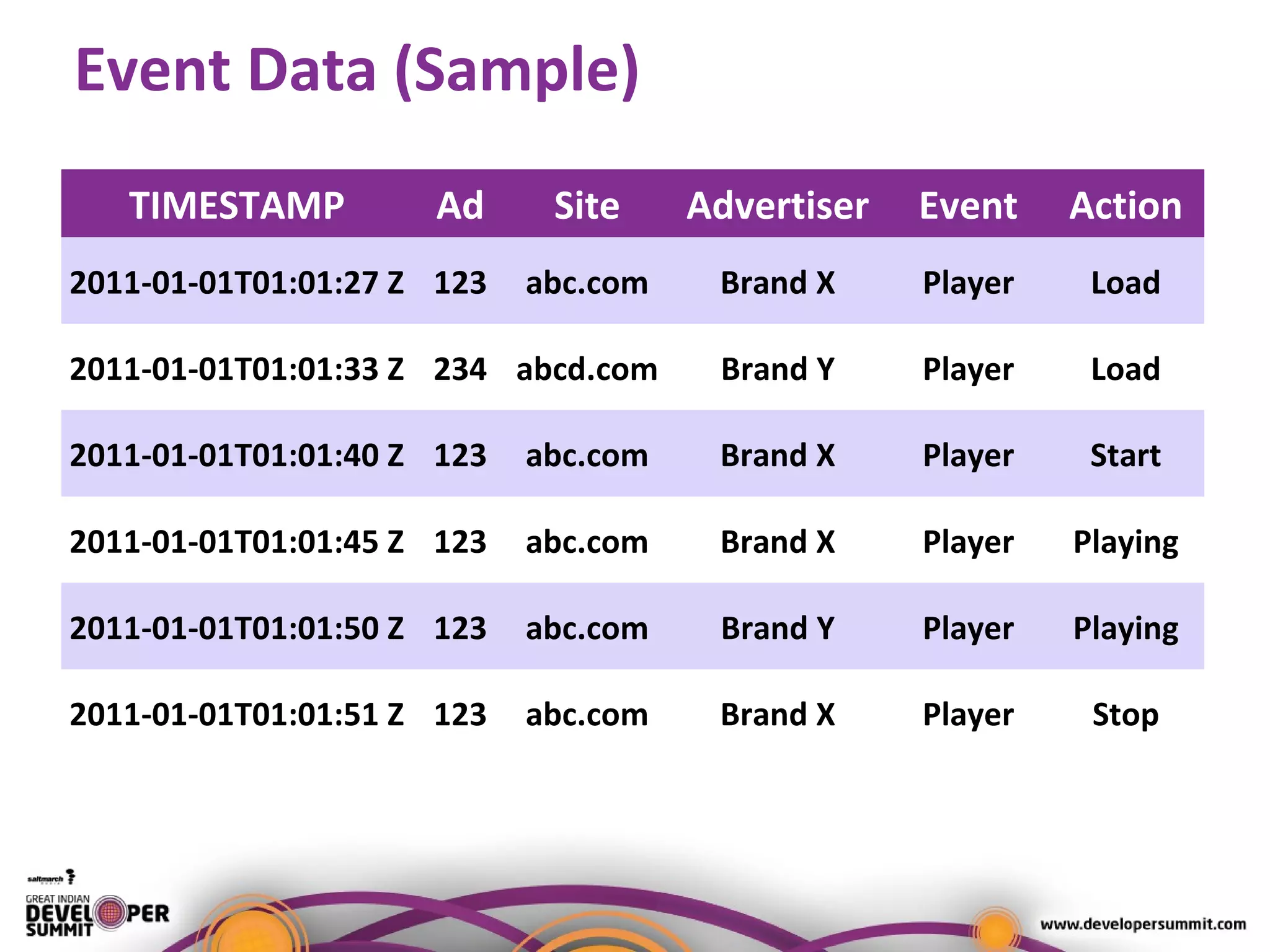

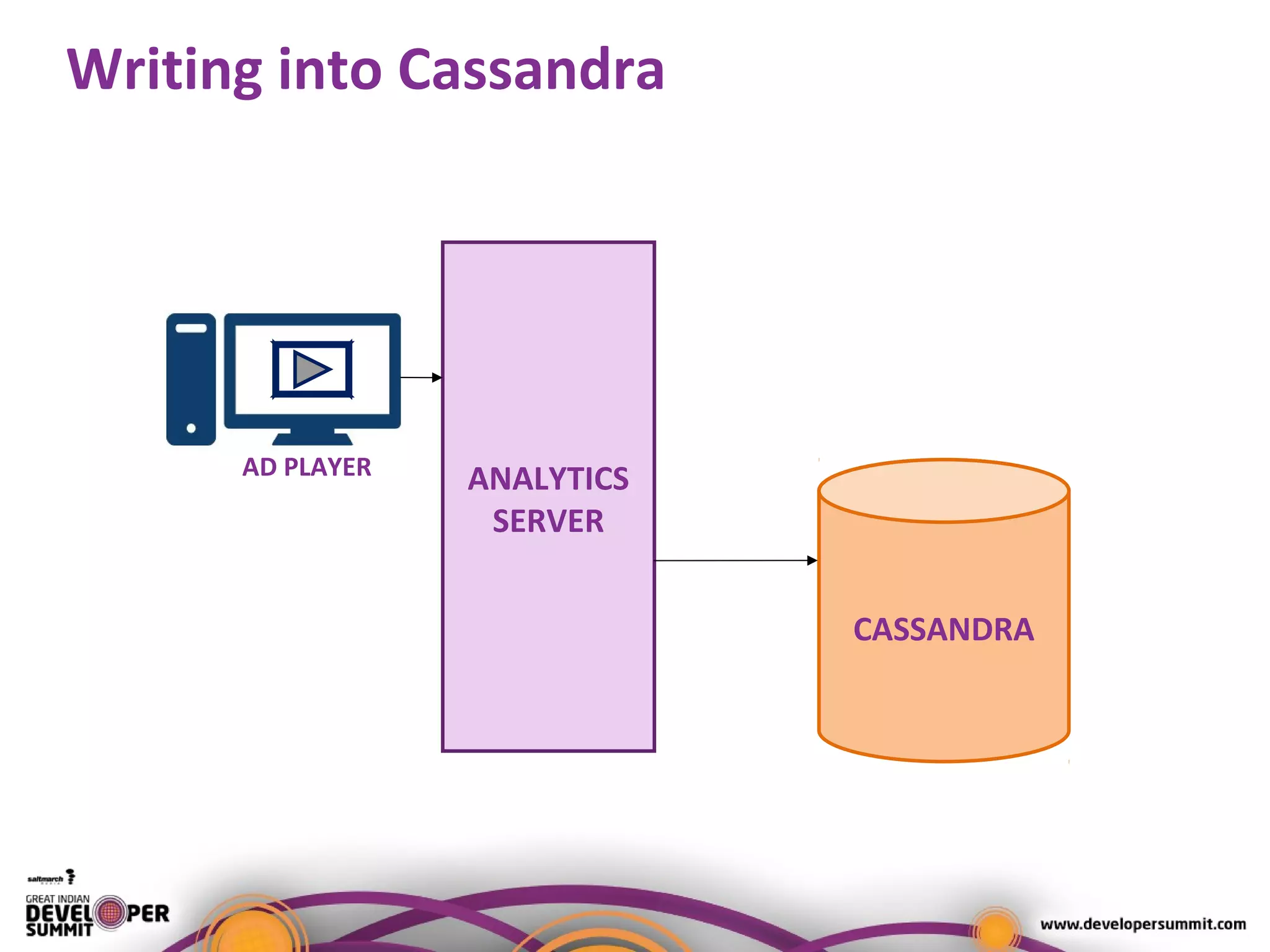

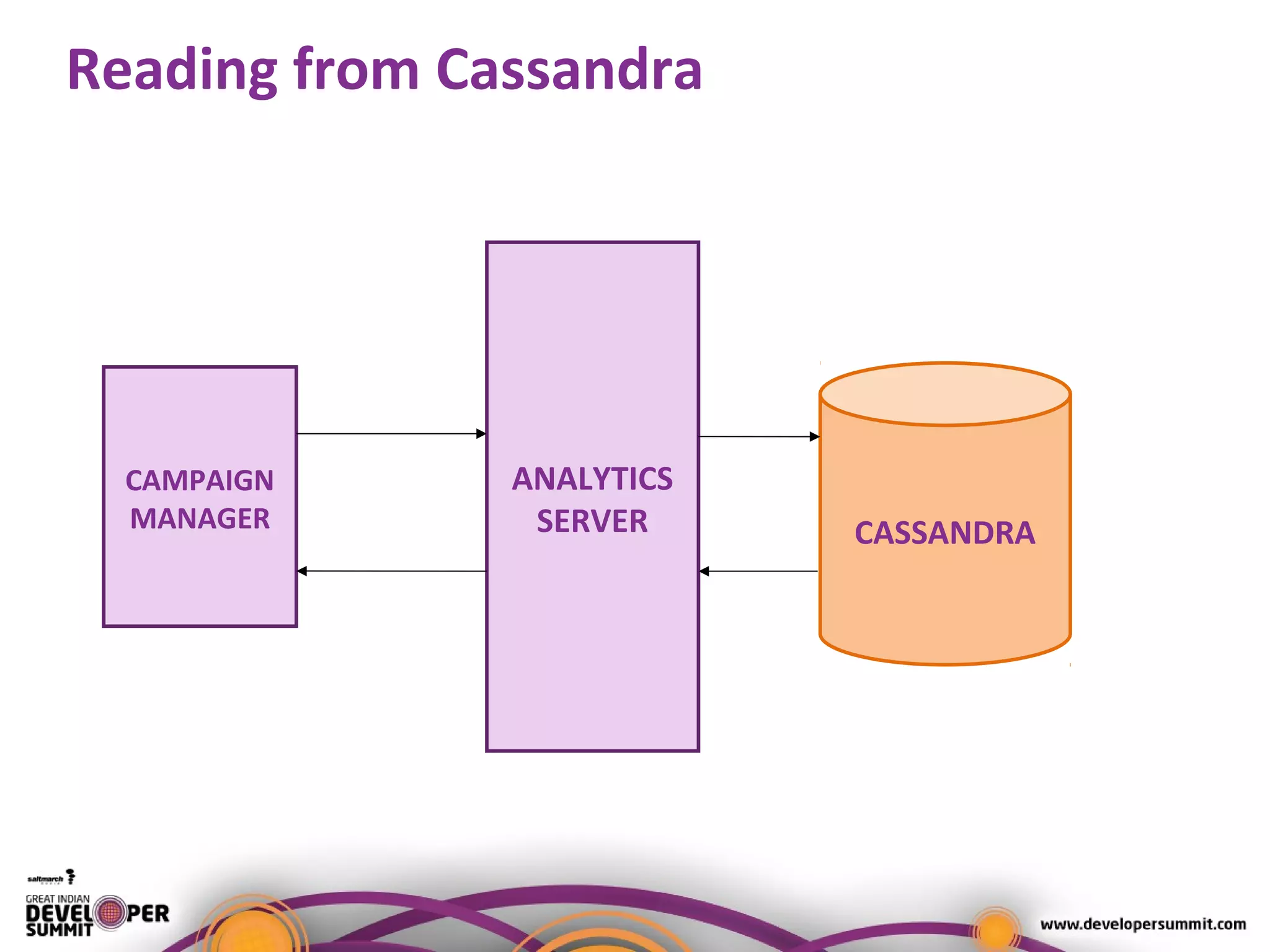

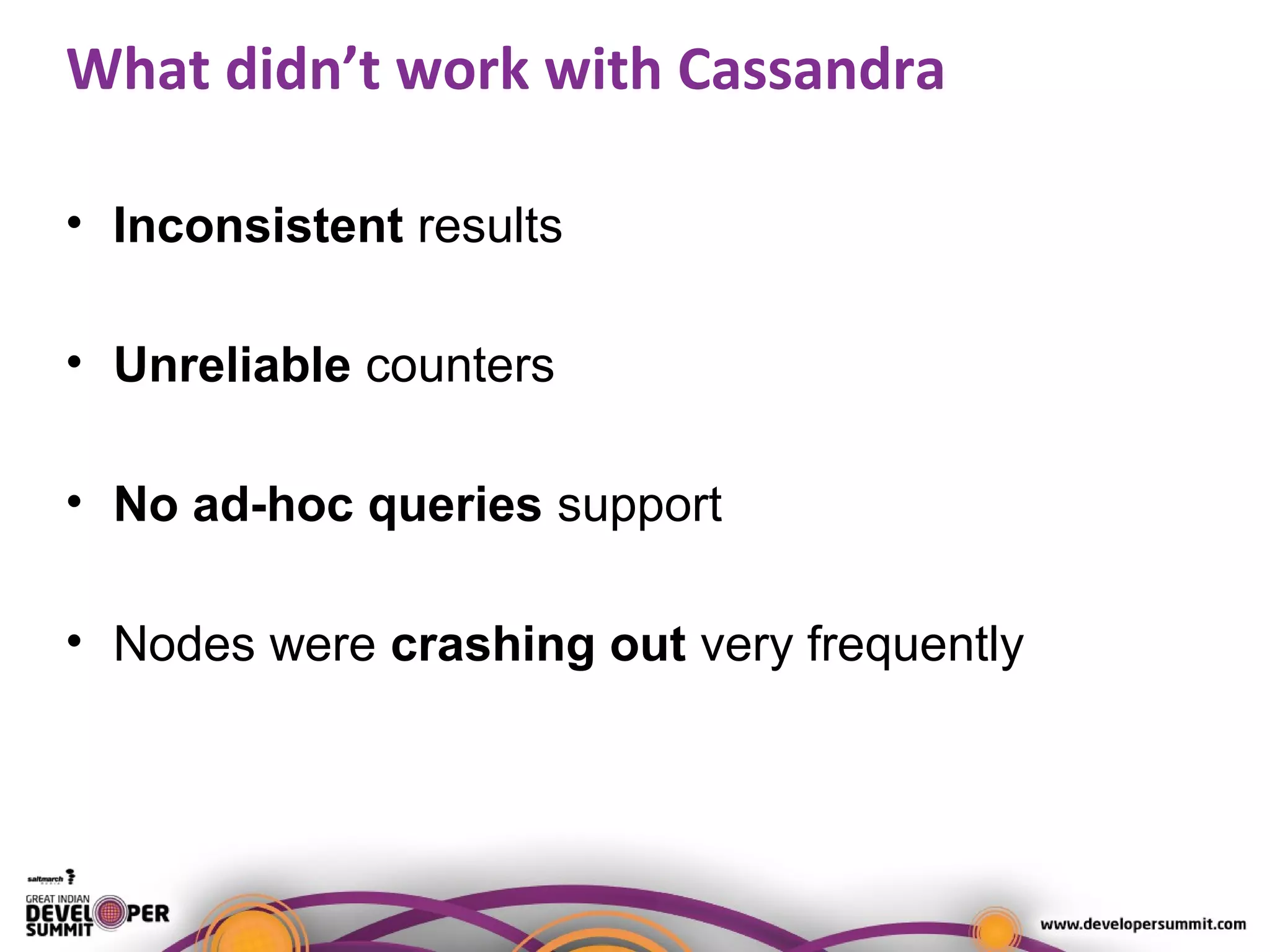

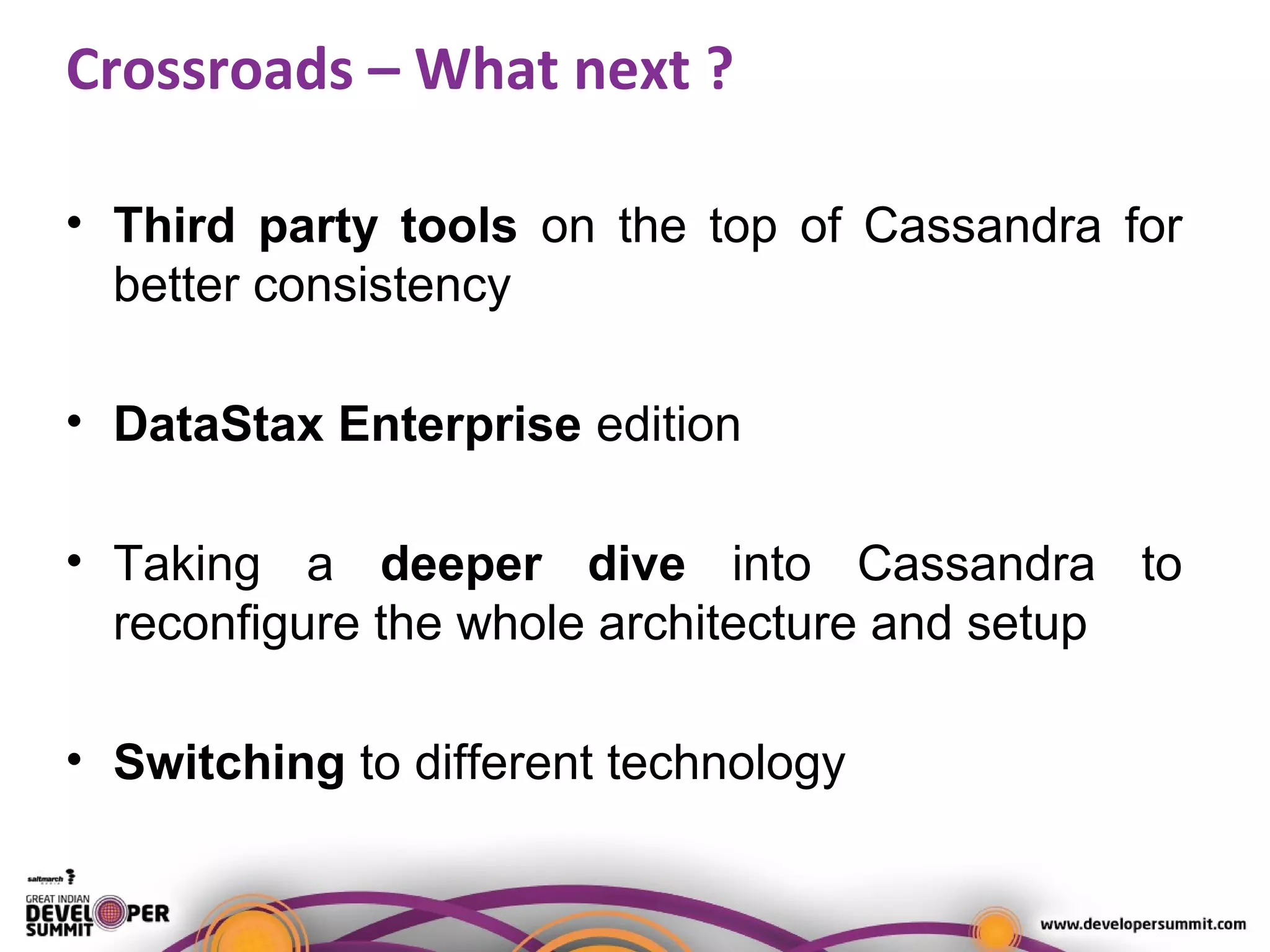

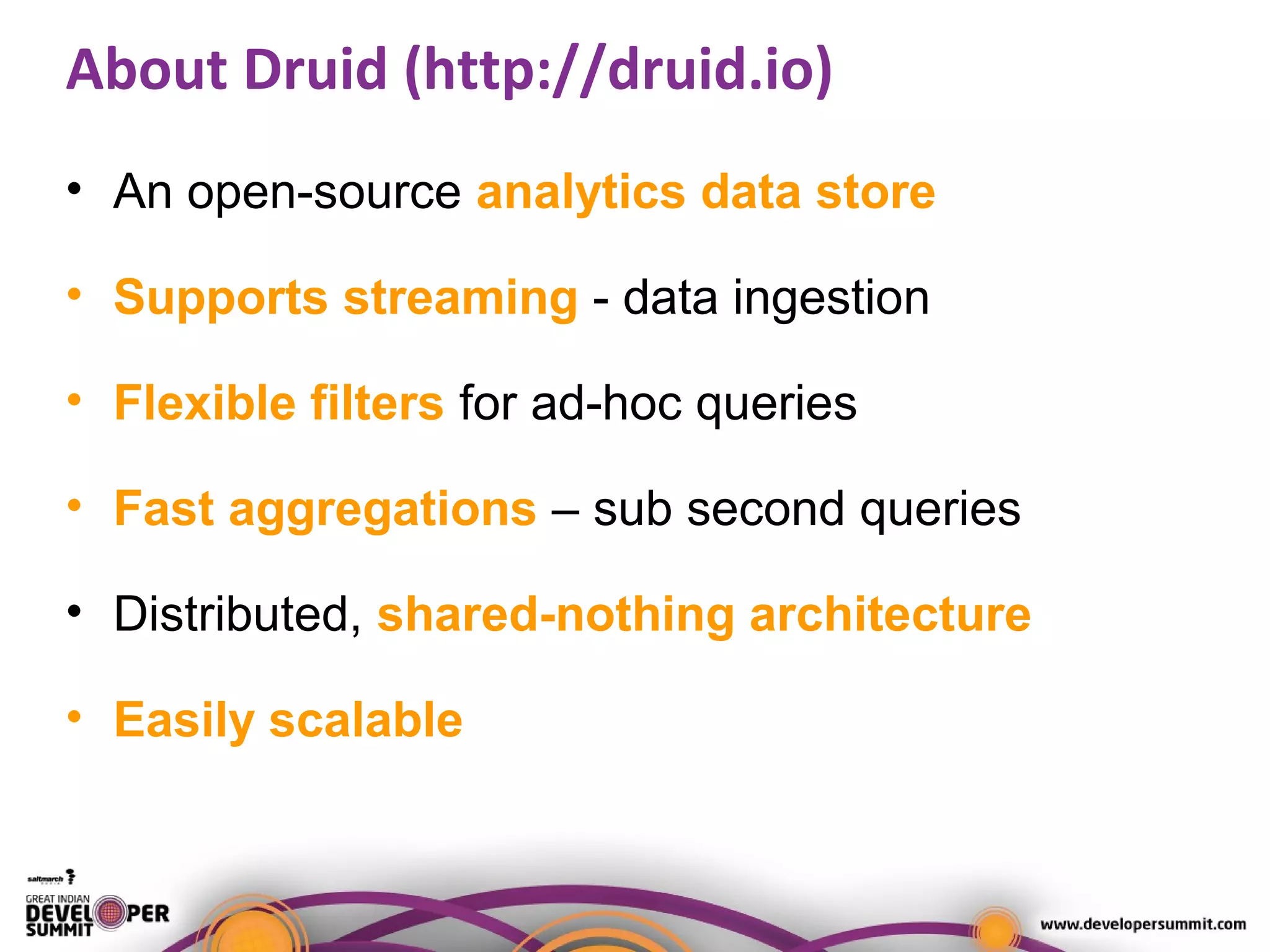

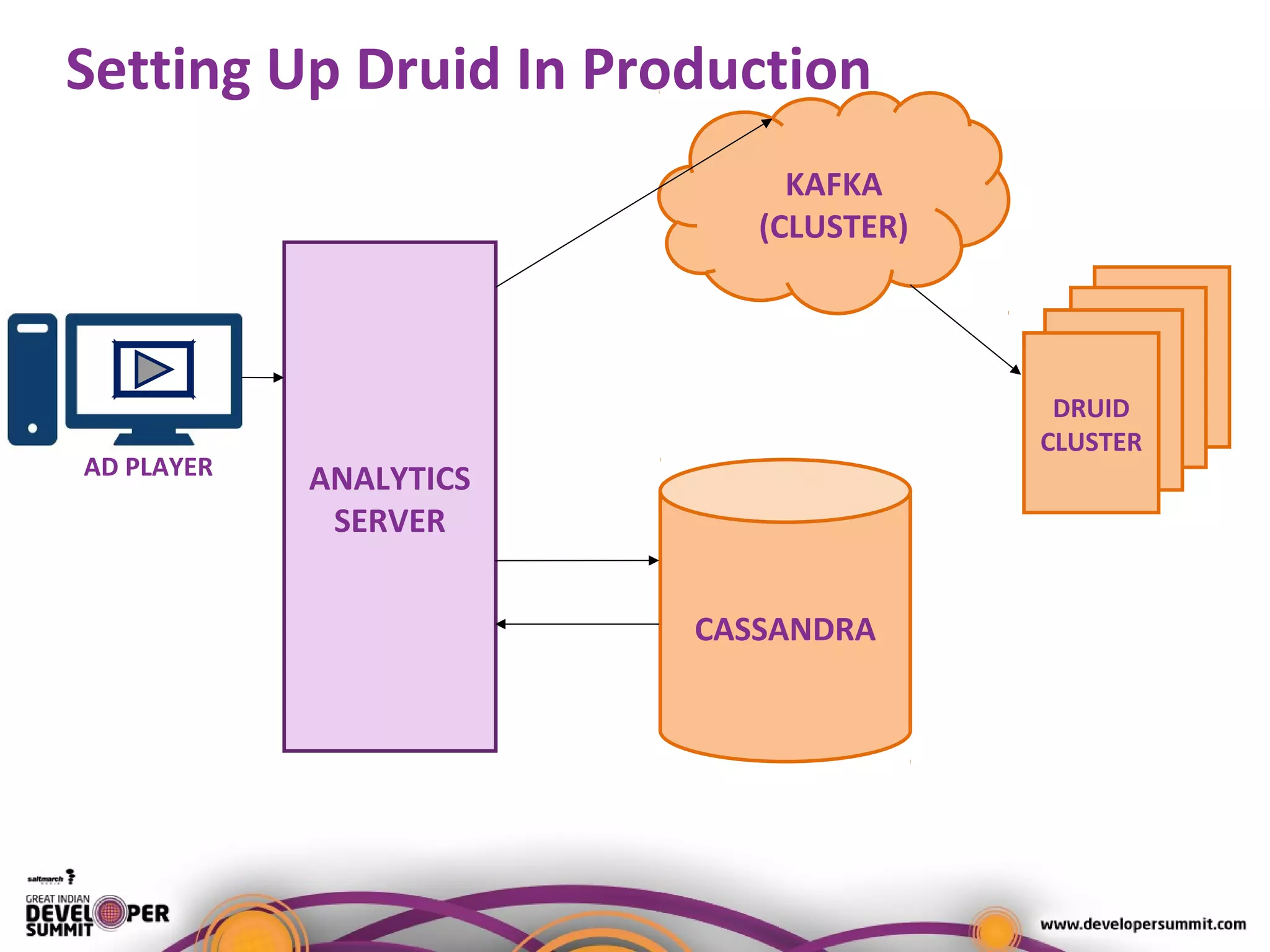

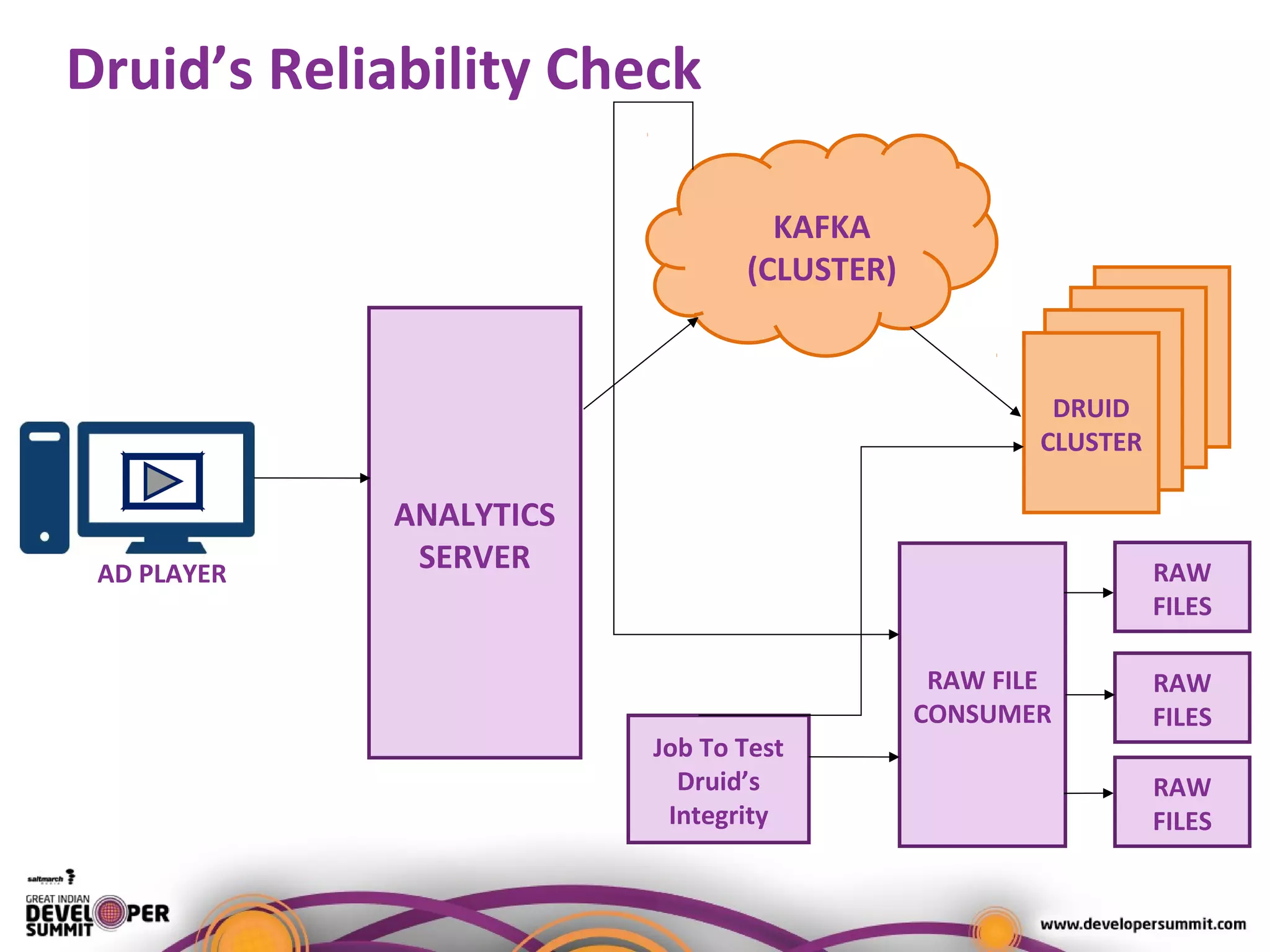

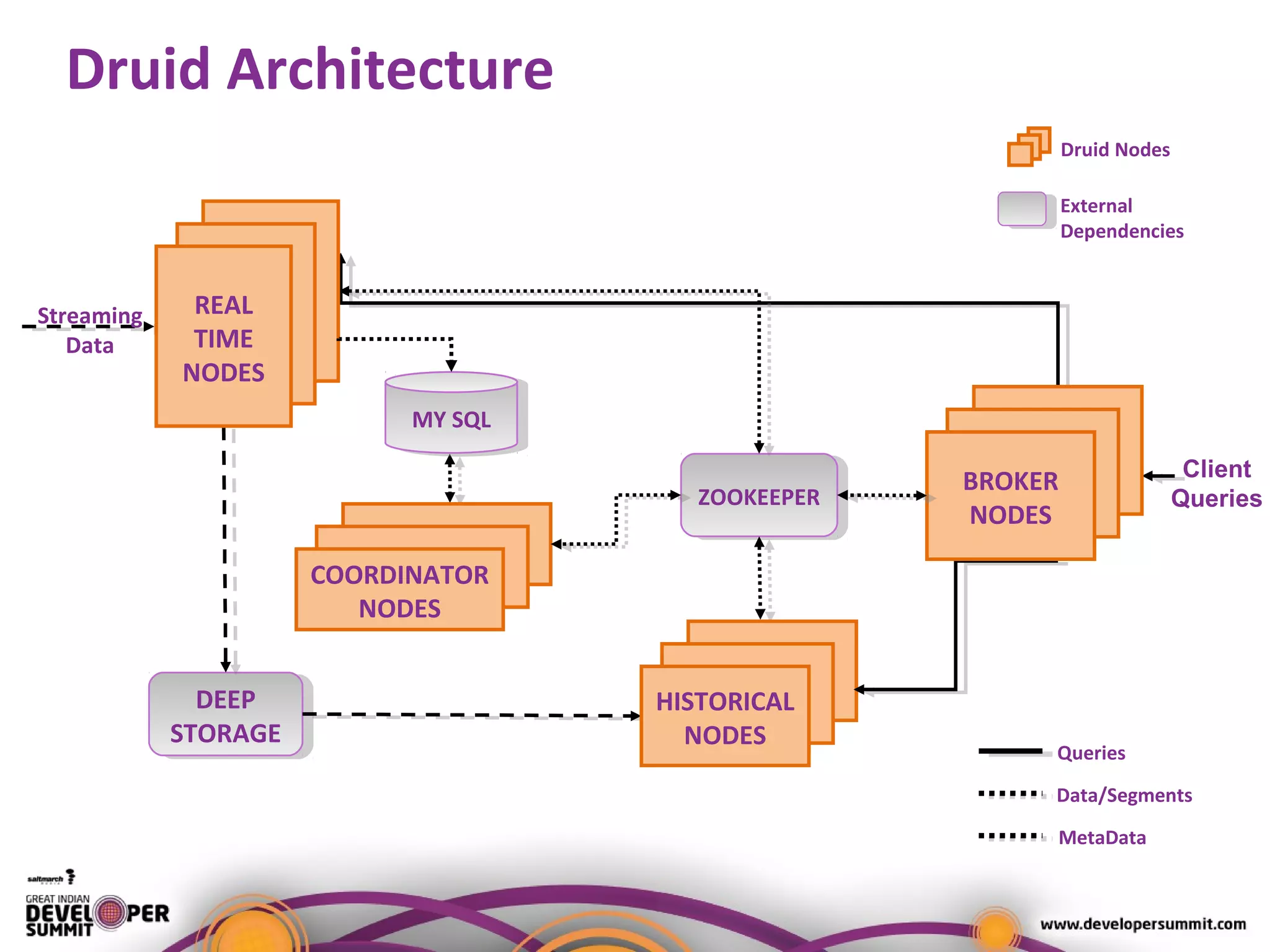

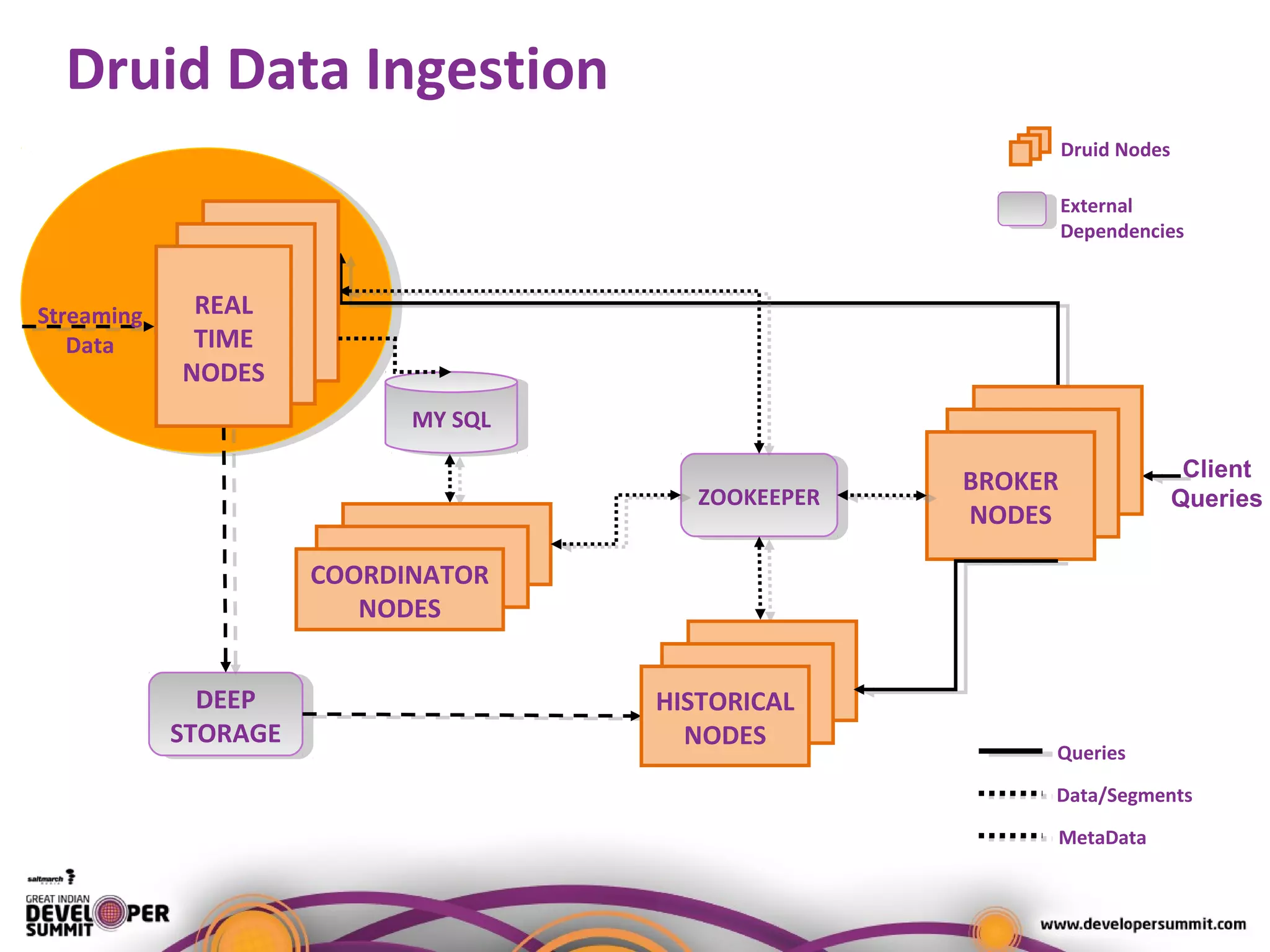

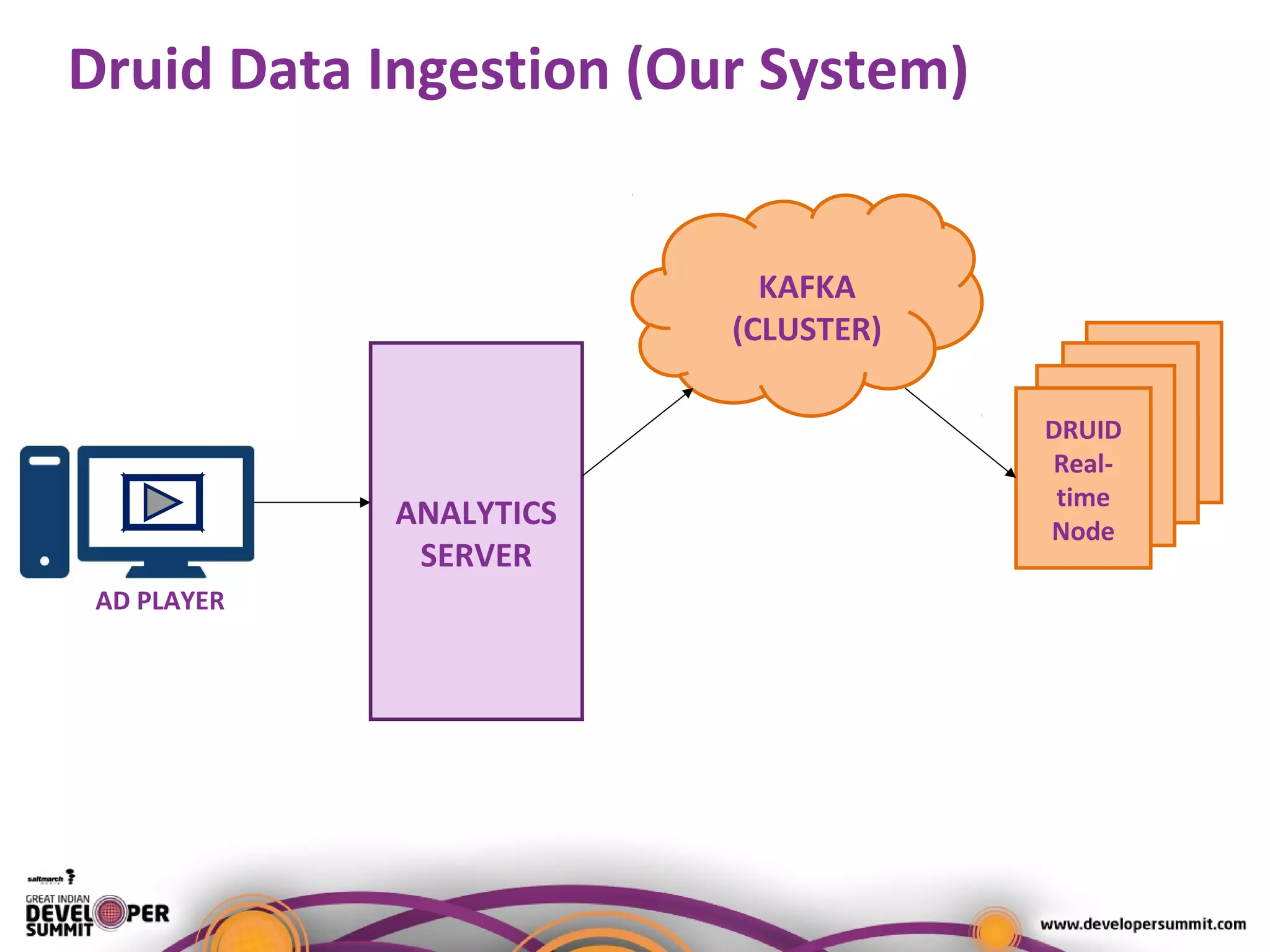

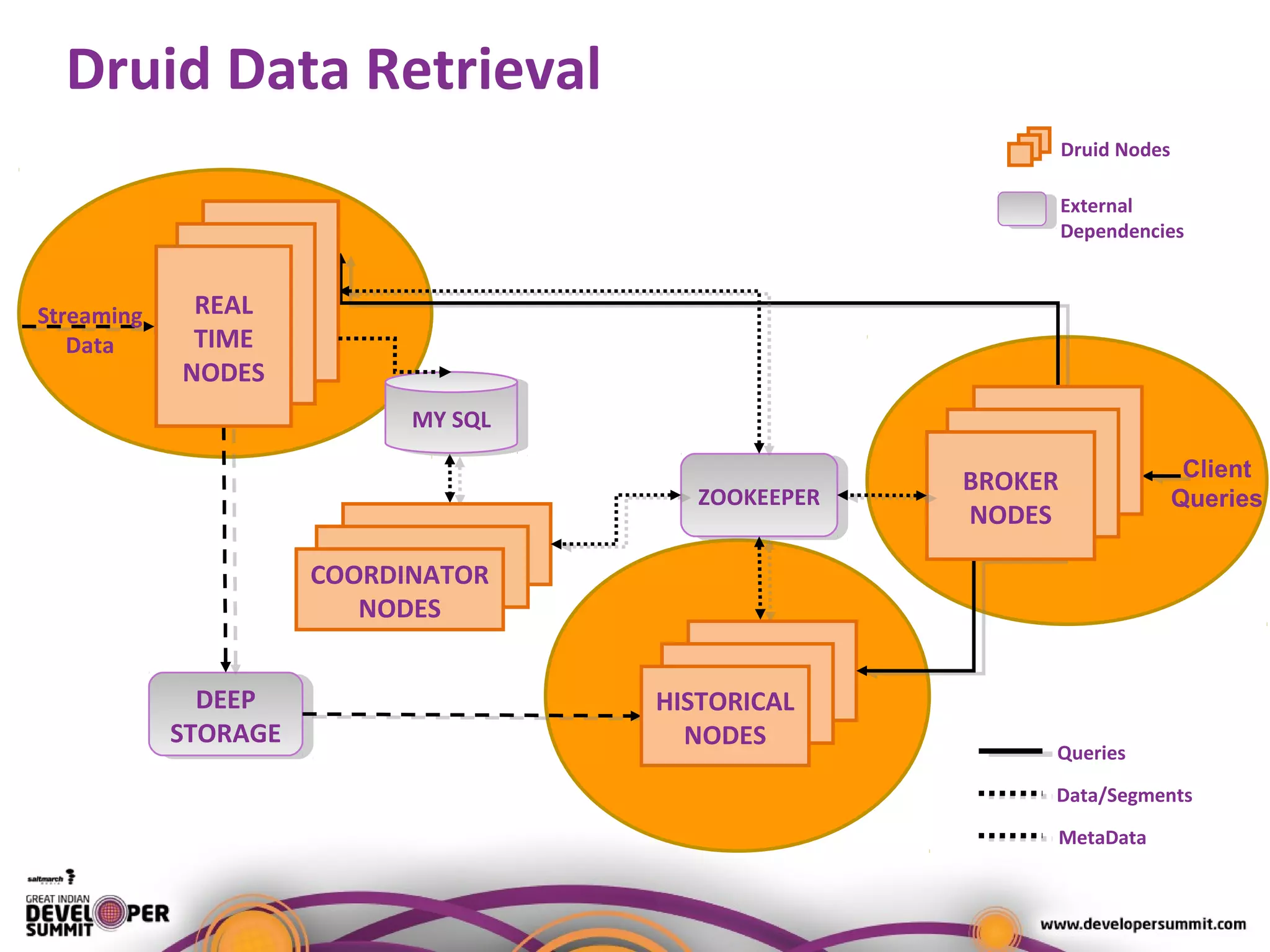

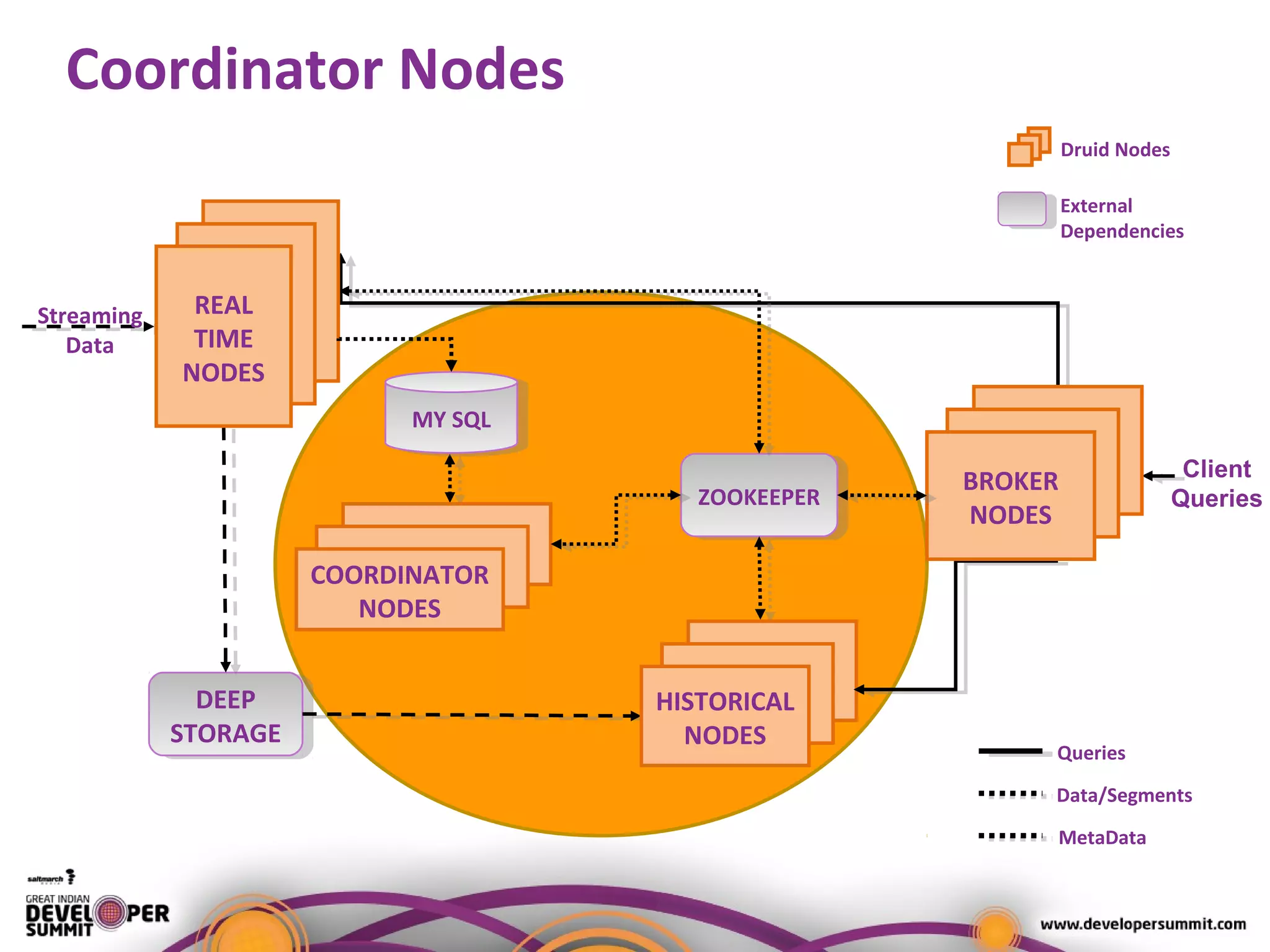

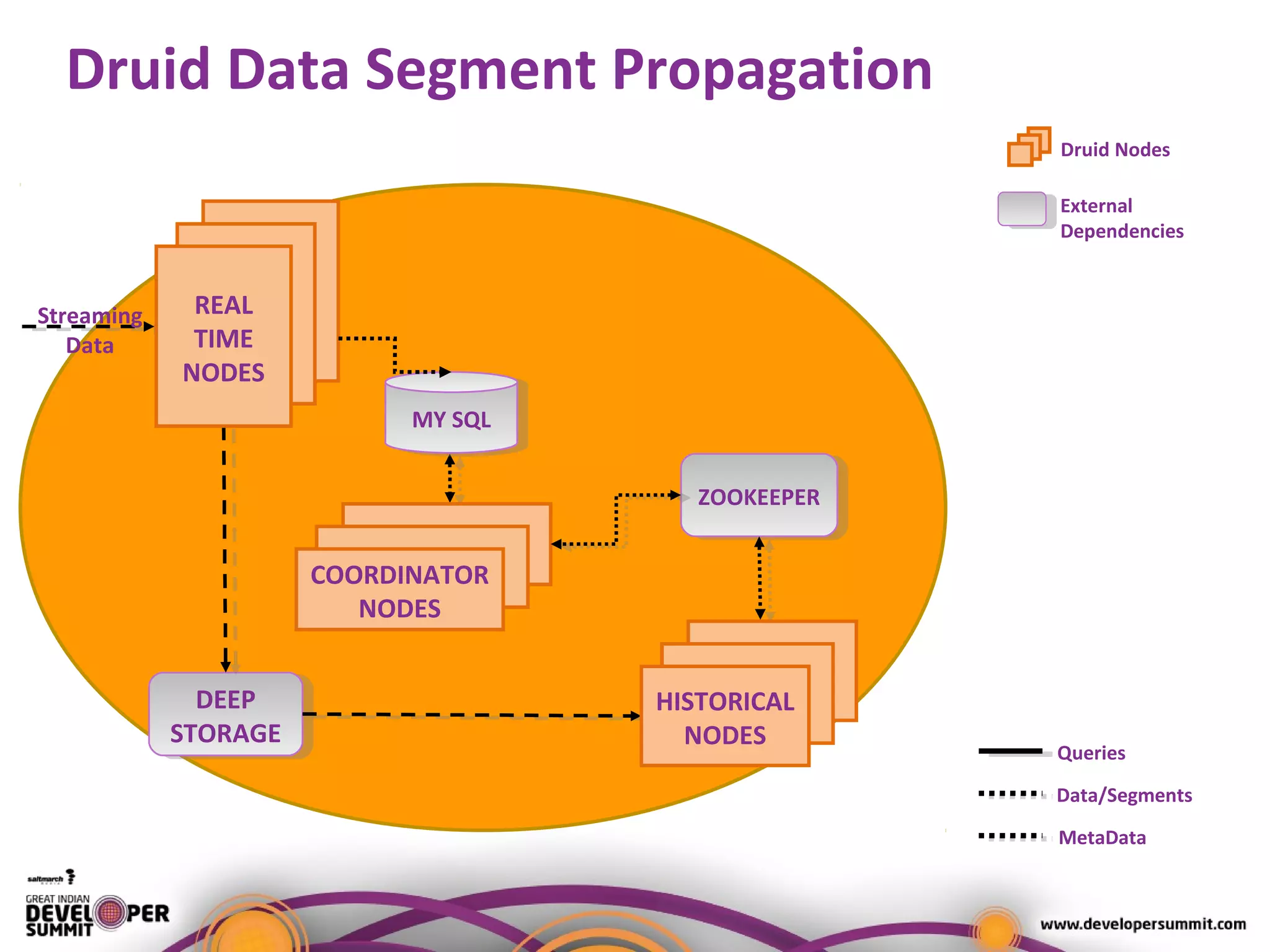

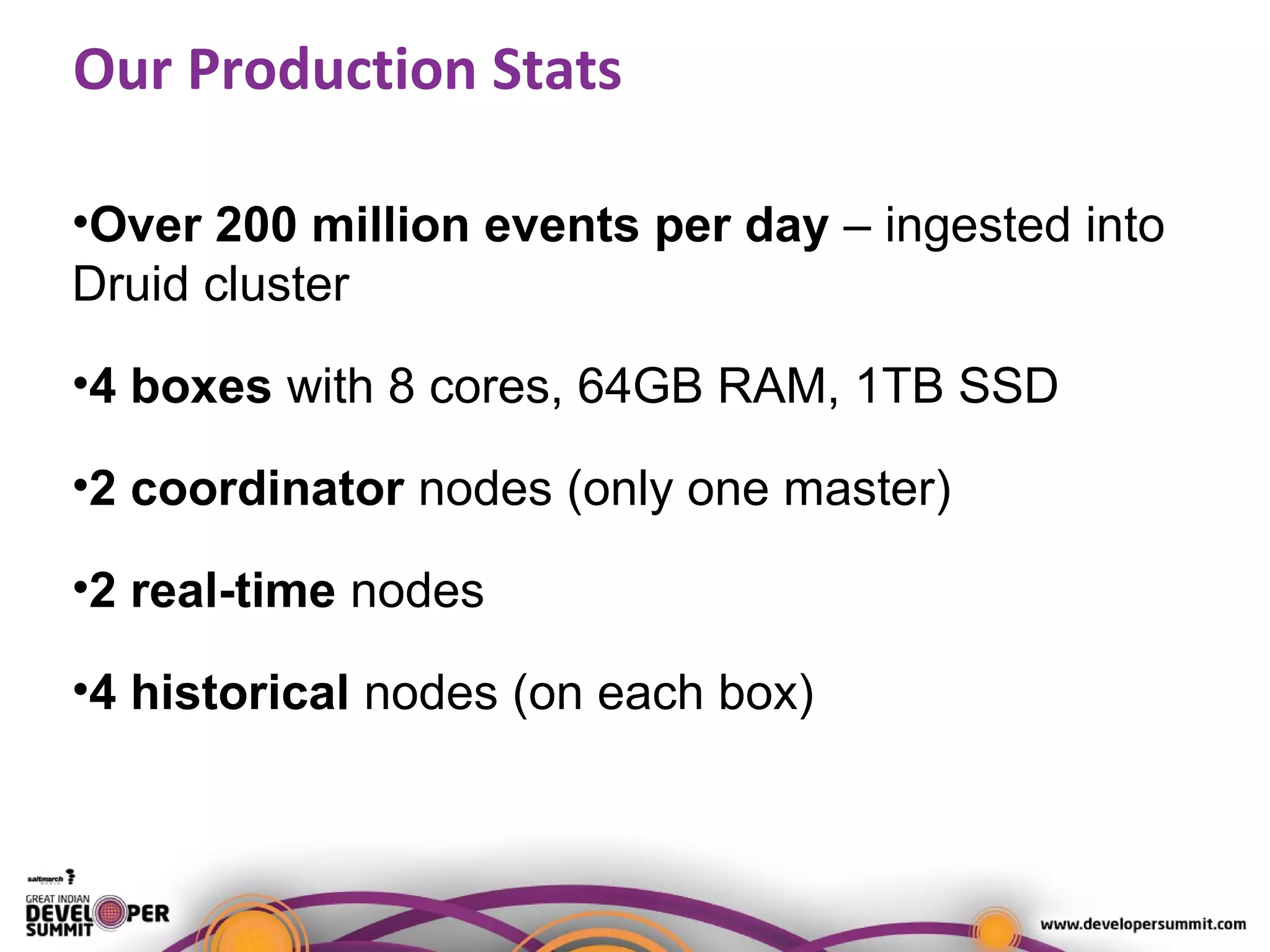

The document presents a case study by Salil Kalia, a tech lead with significant software development experience, on using Druid for real-time analytics in digital marketing. It describes the challenges the team ran into with other technologies, such as Redis and Cassandra. It then highlights Druid's strengths, including streaming data ingestion, fast aggregations, and scalability, and illustrates them with a practical demo. The case study also outlines the system architecture and shares production statistics, which show Druid processing over 200 million events per day.