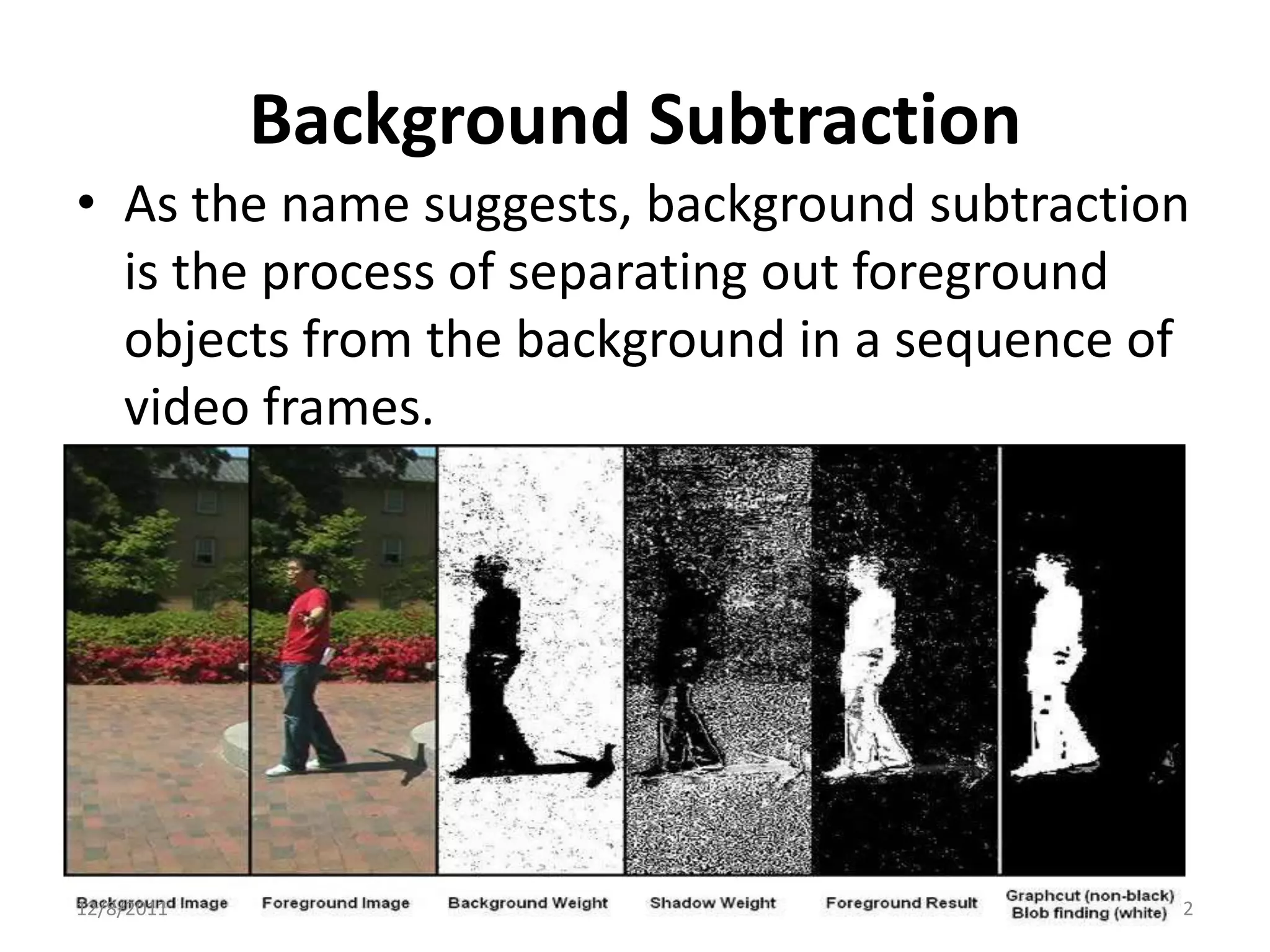

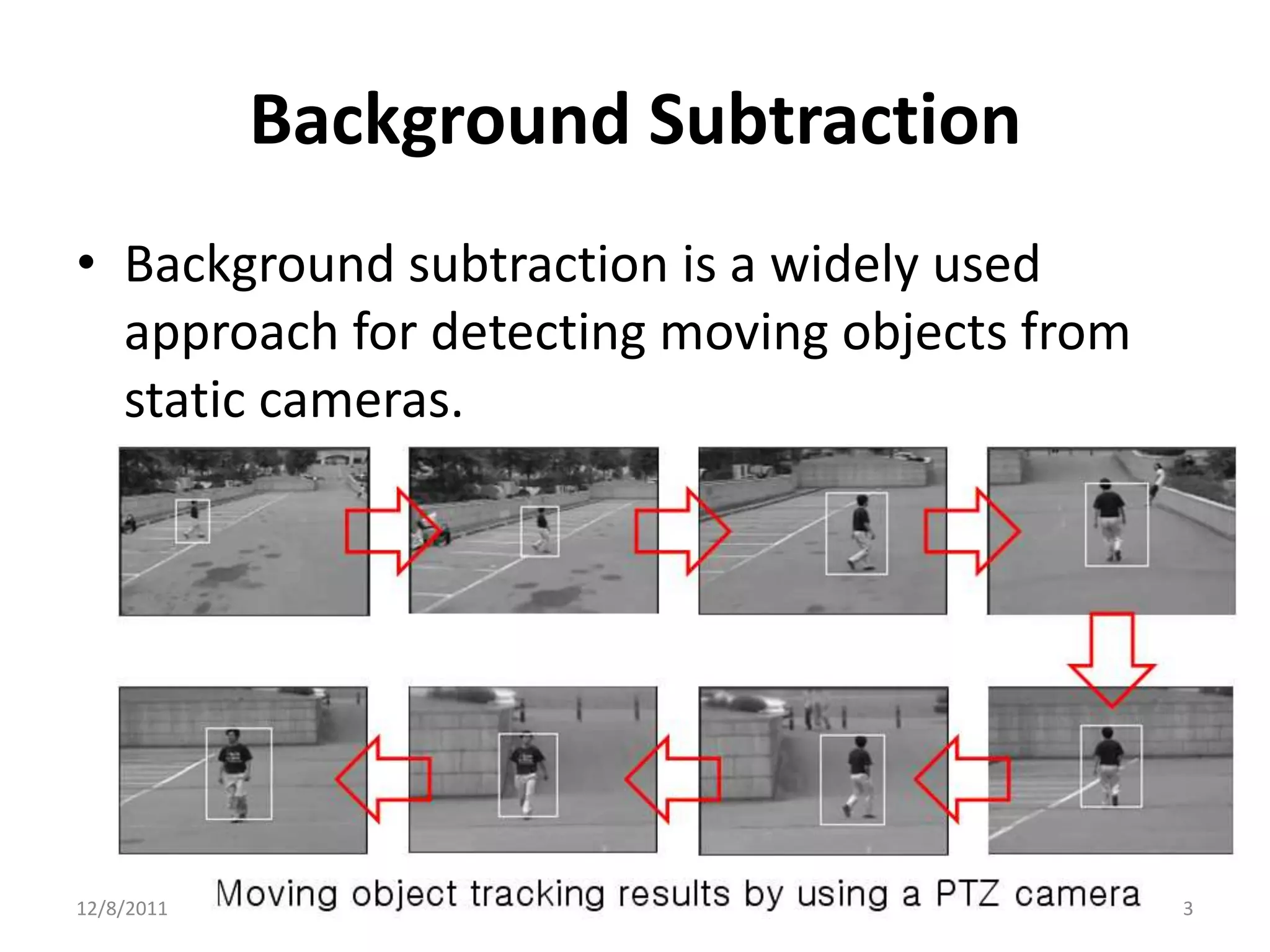

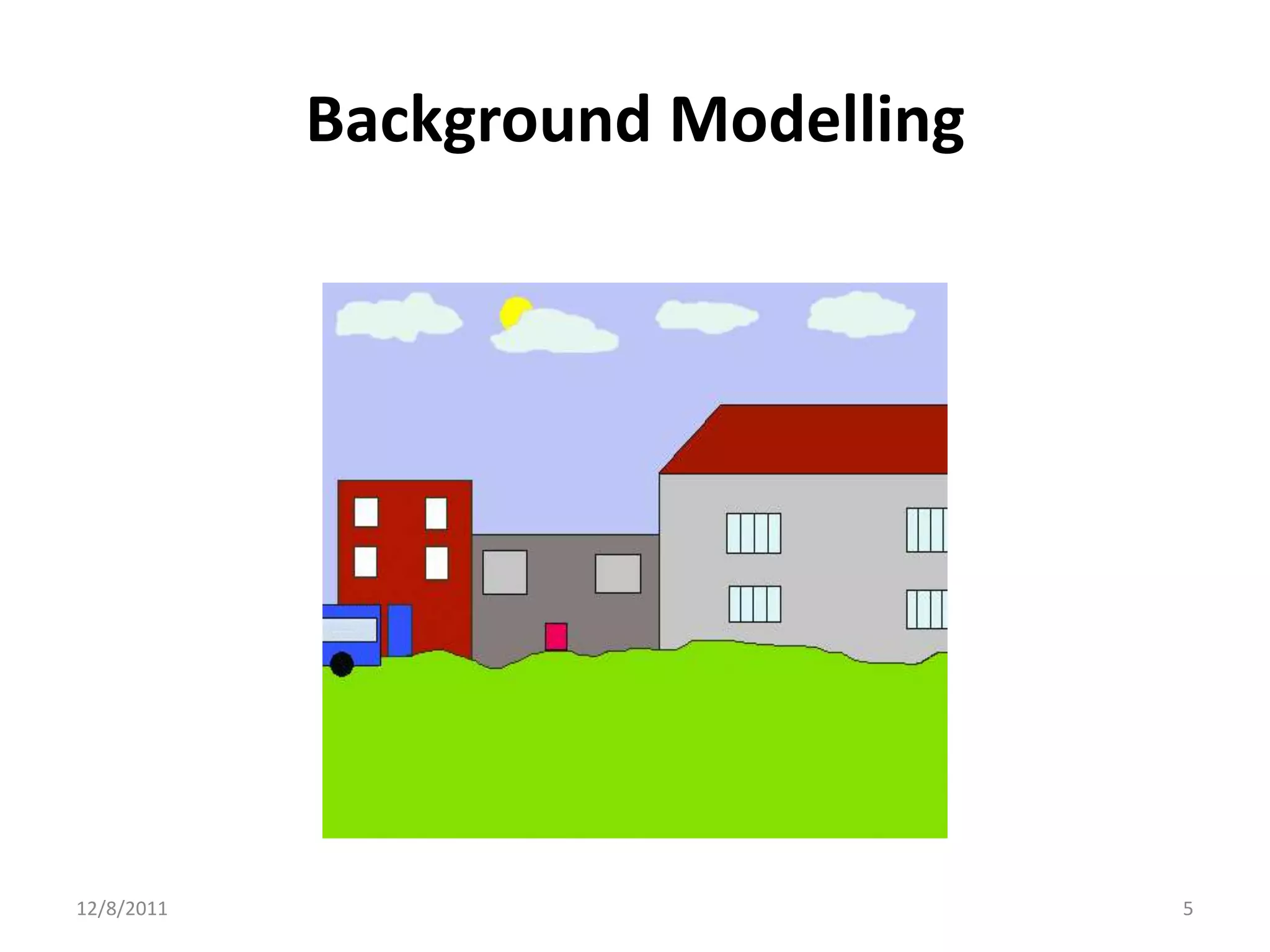

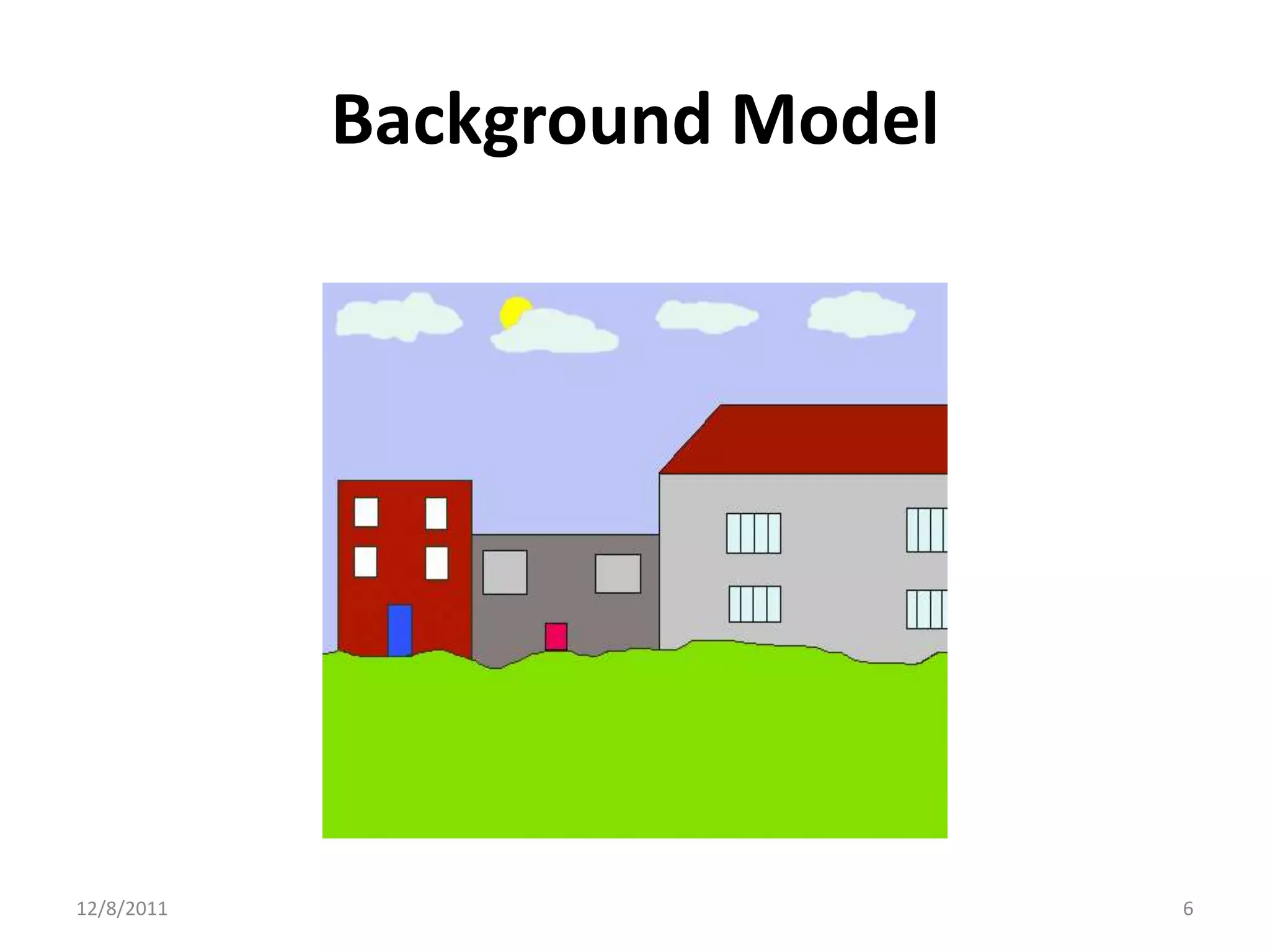

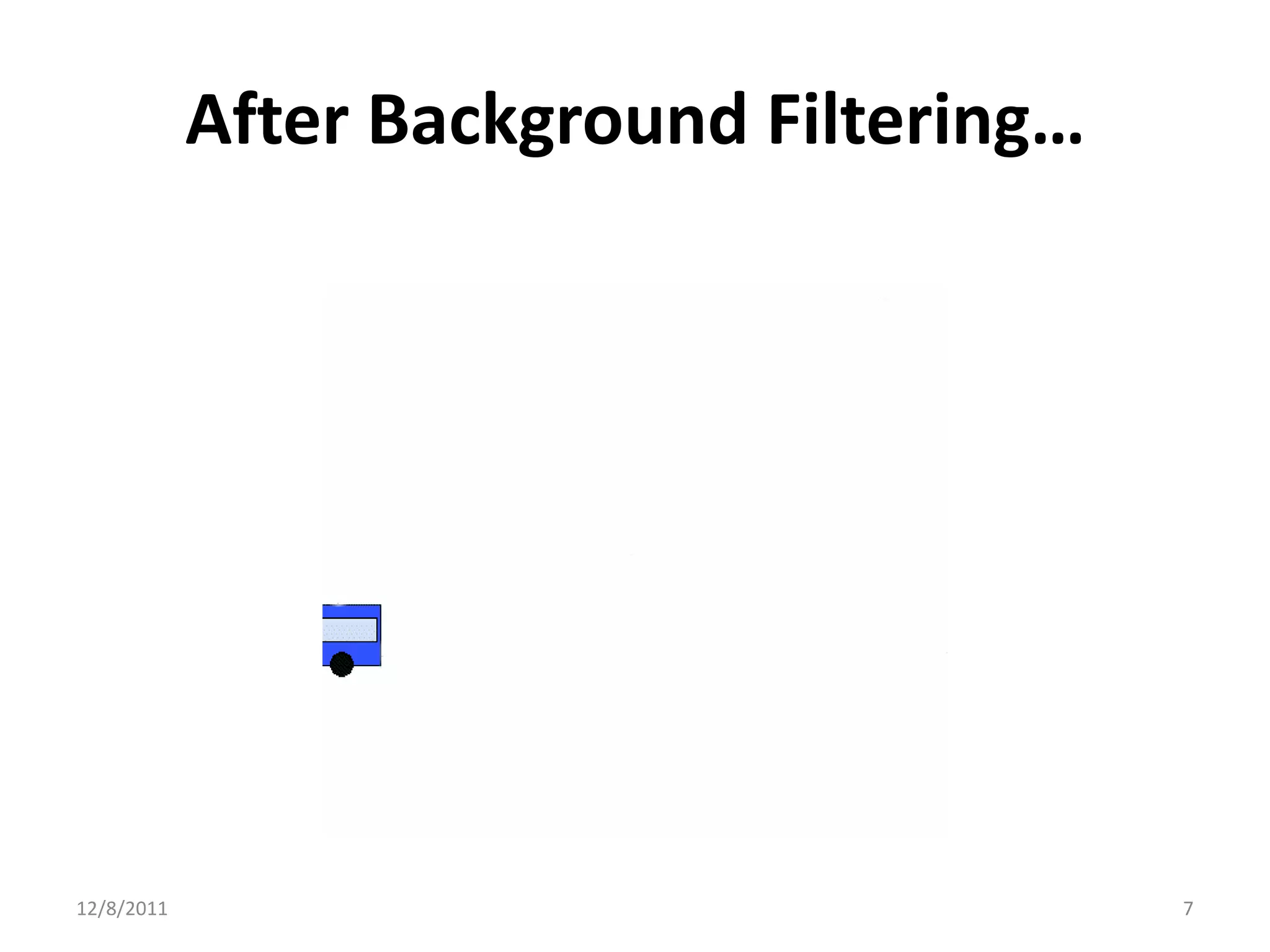

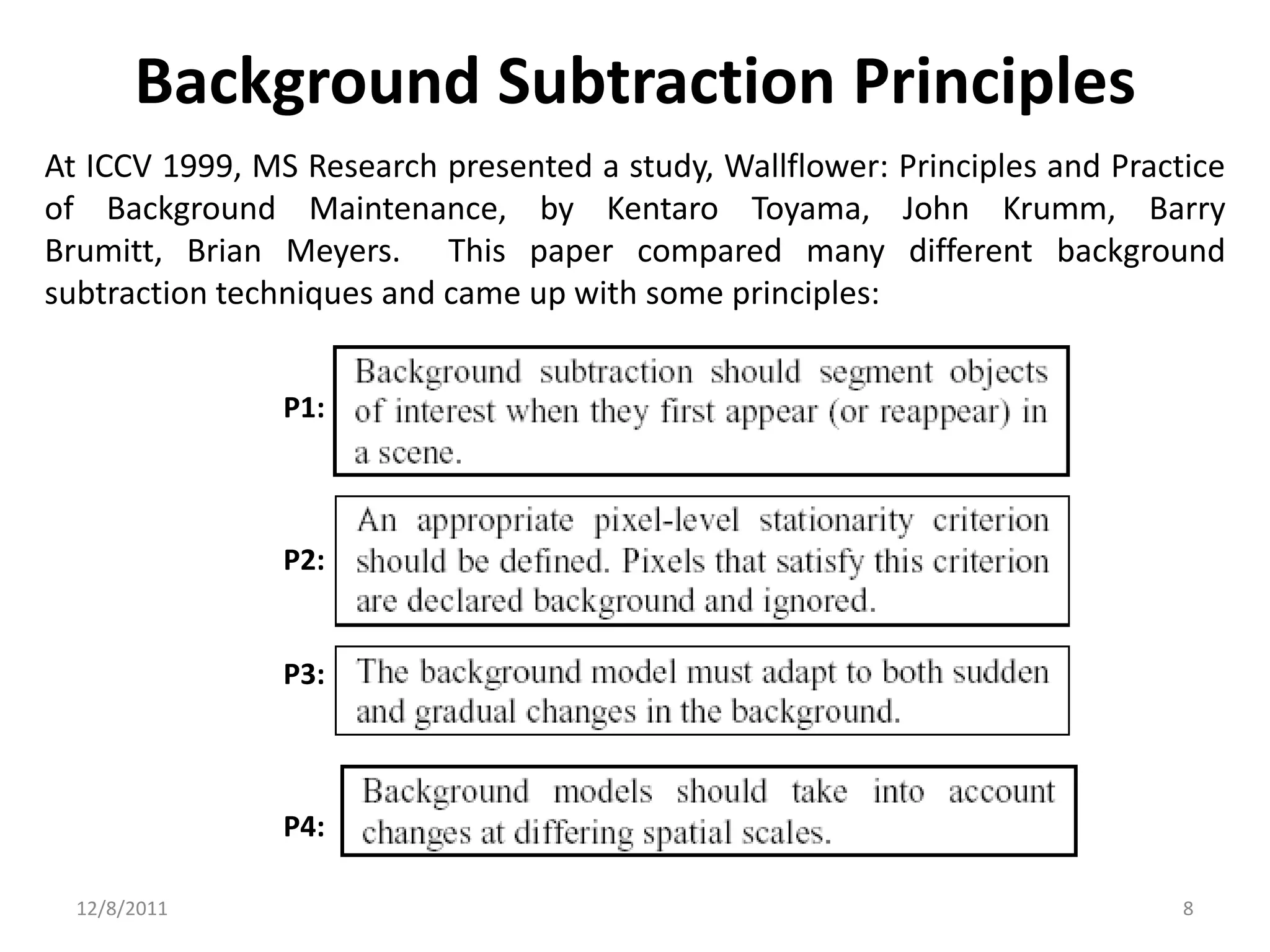

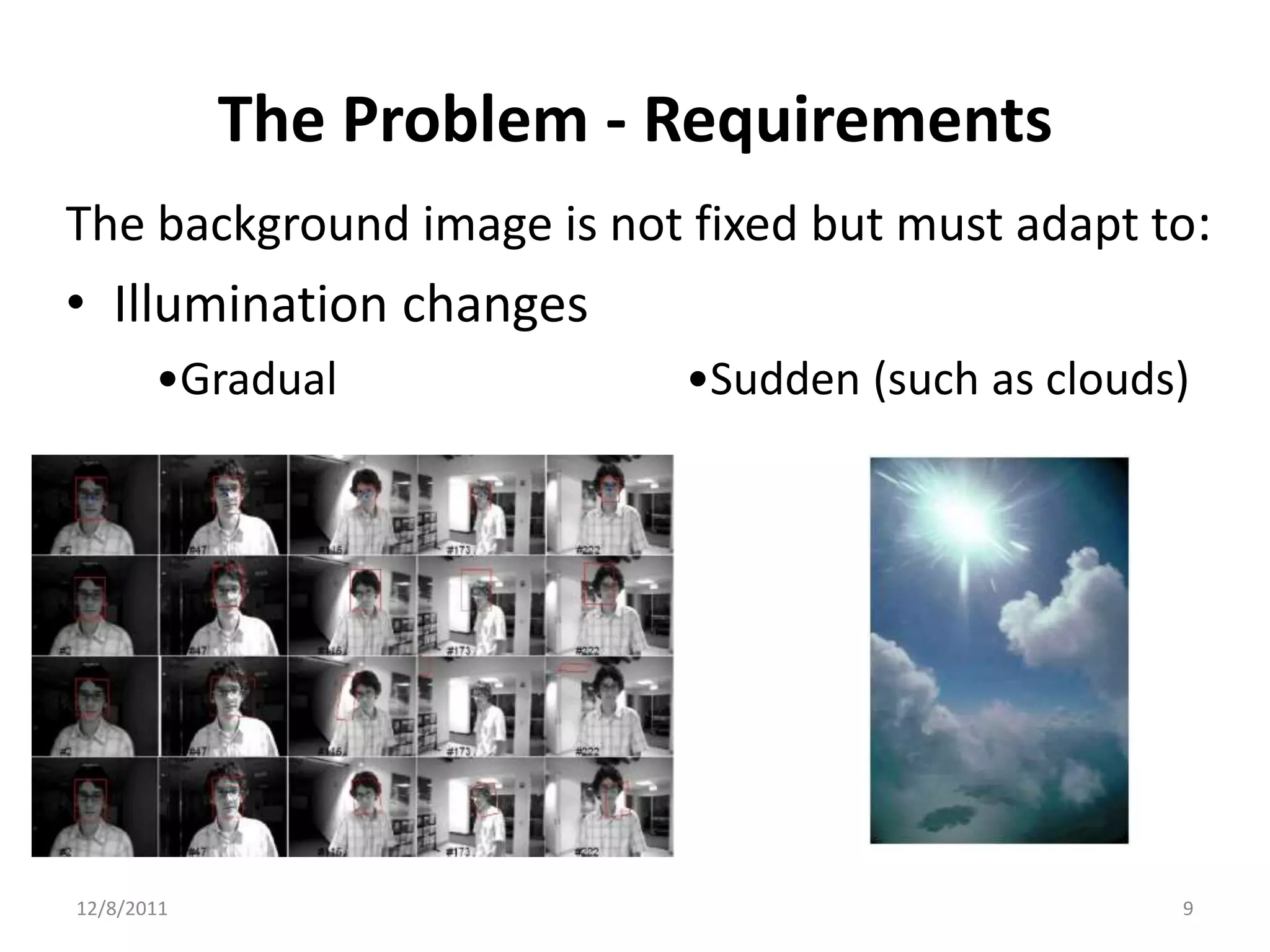

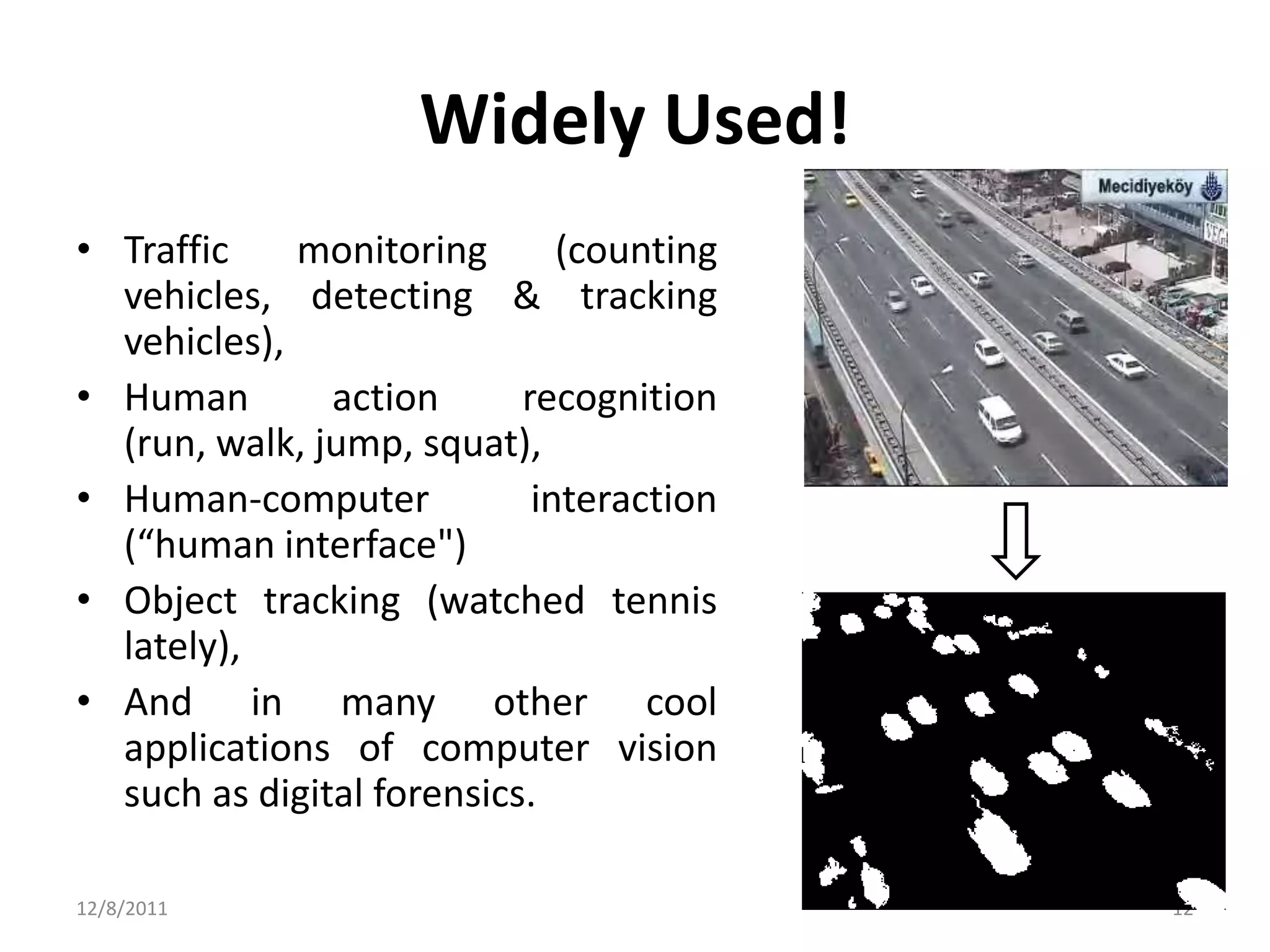

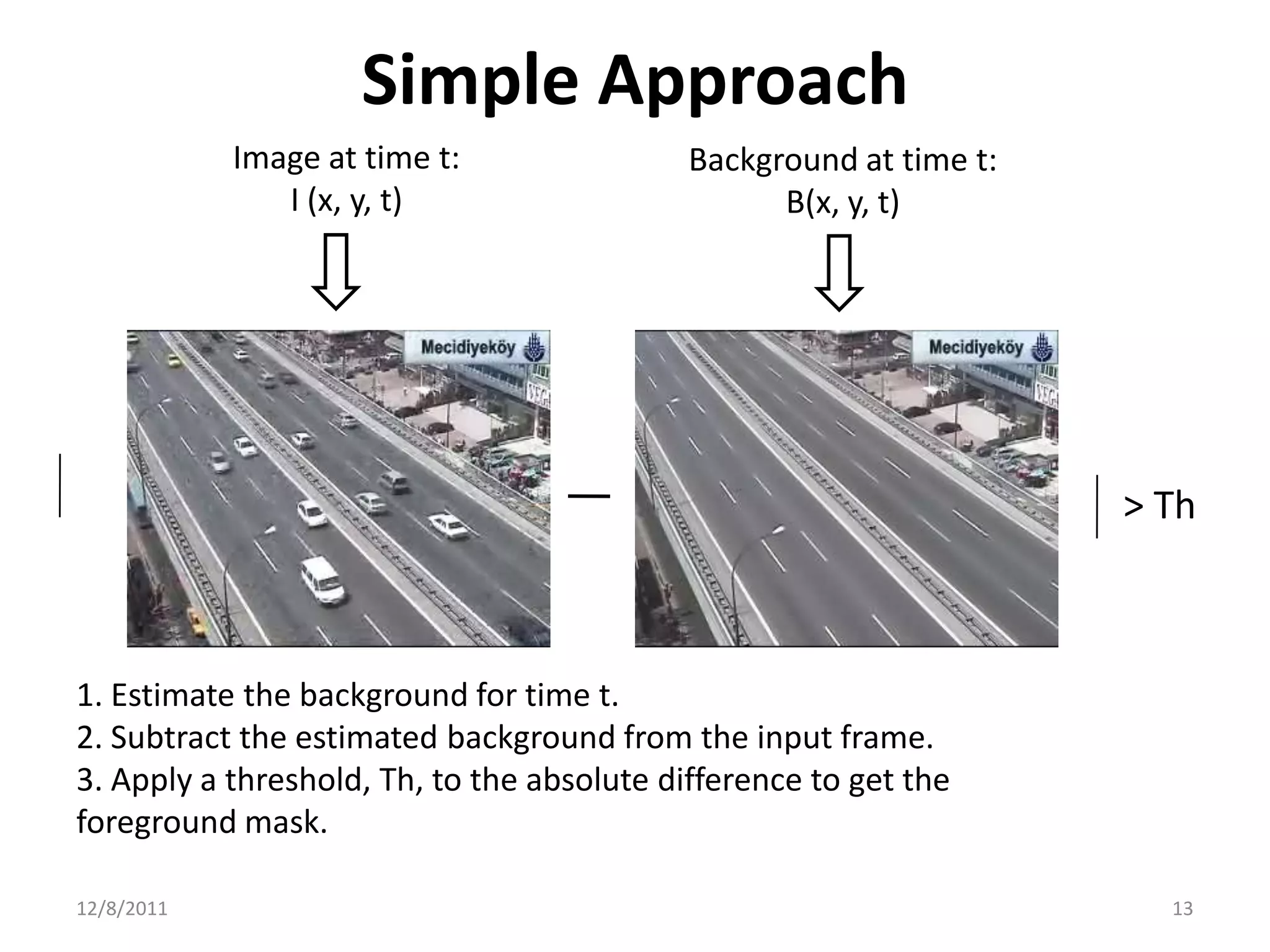

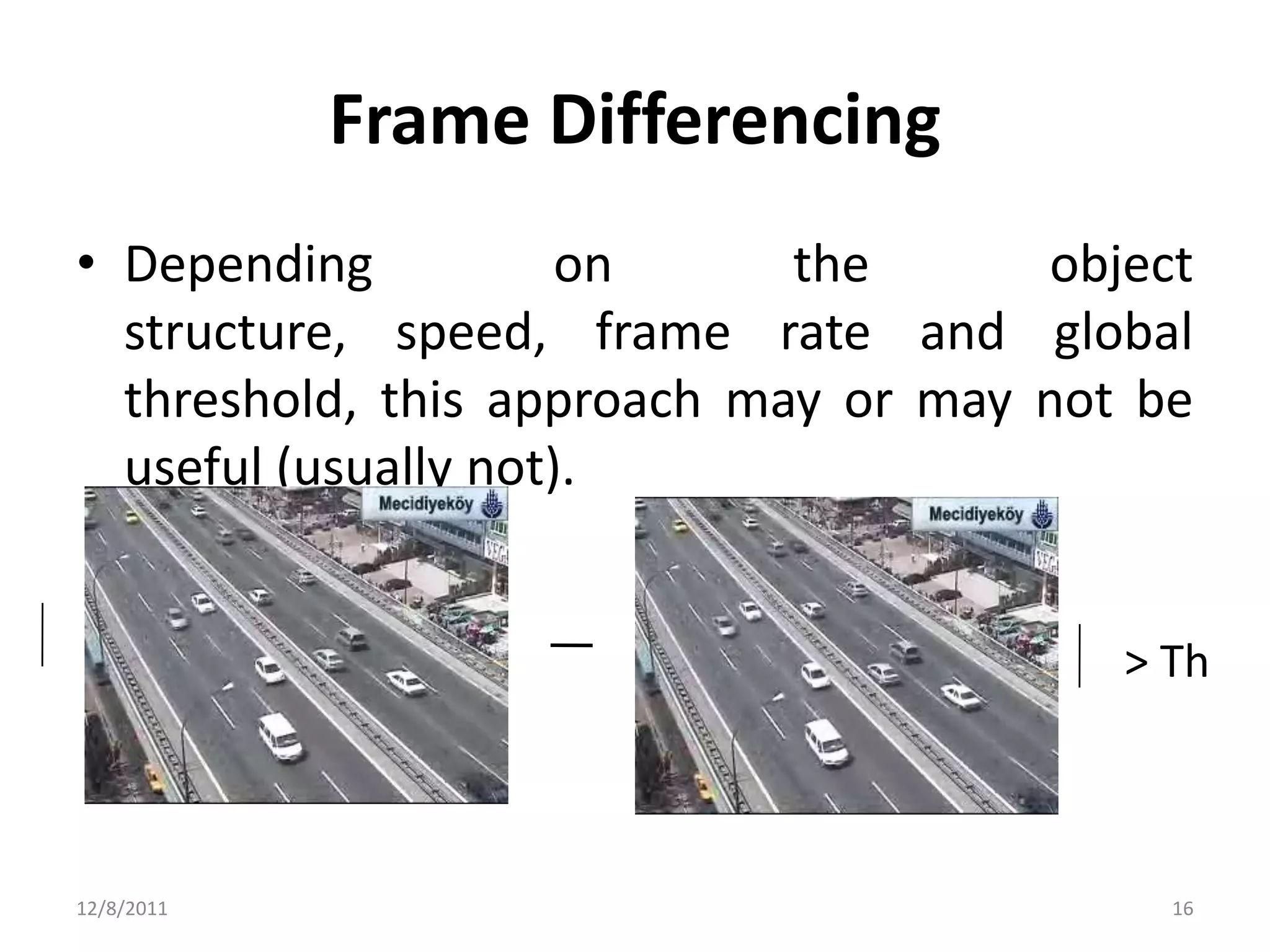

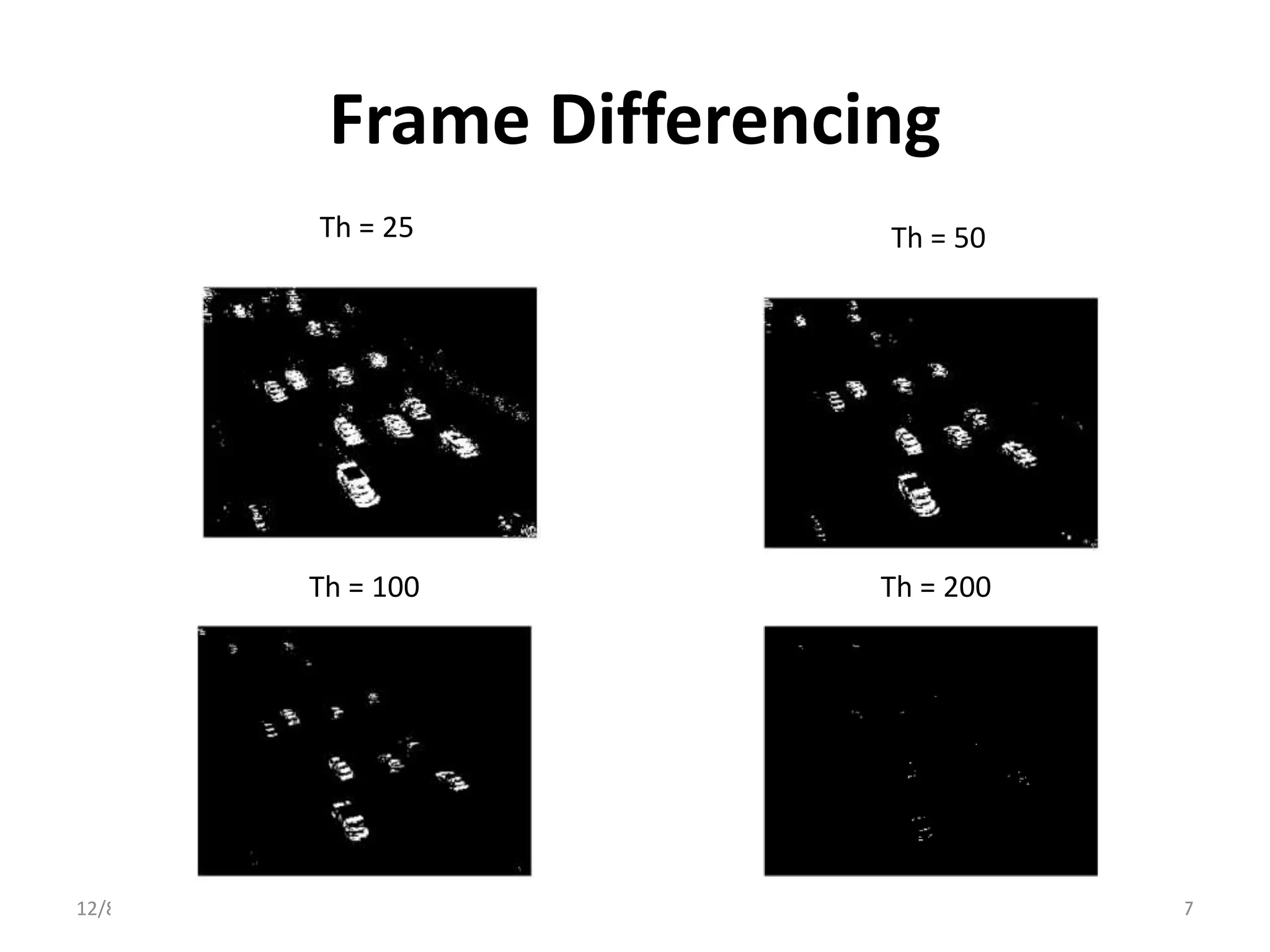

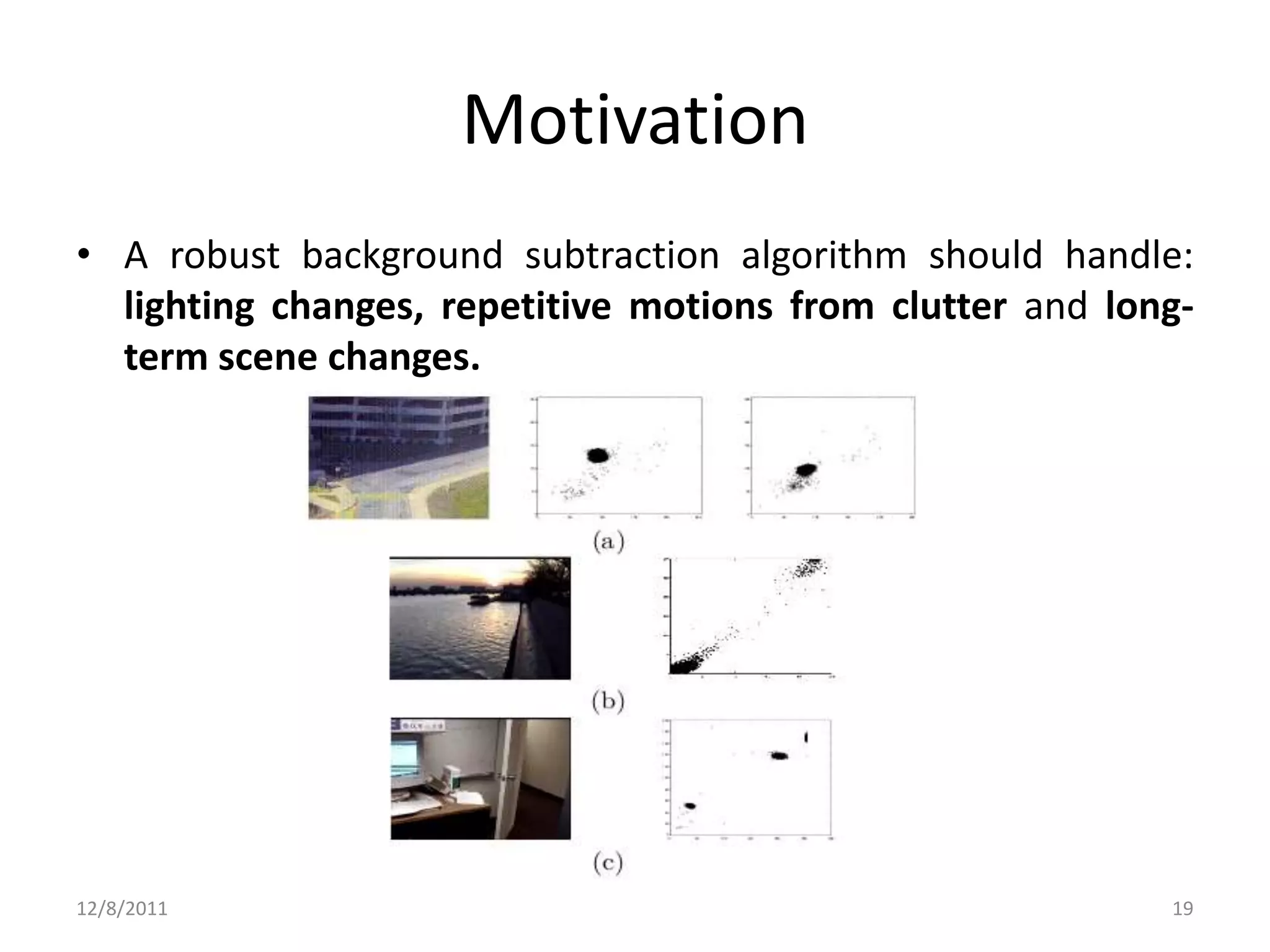

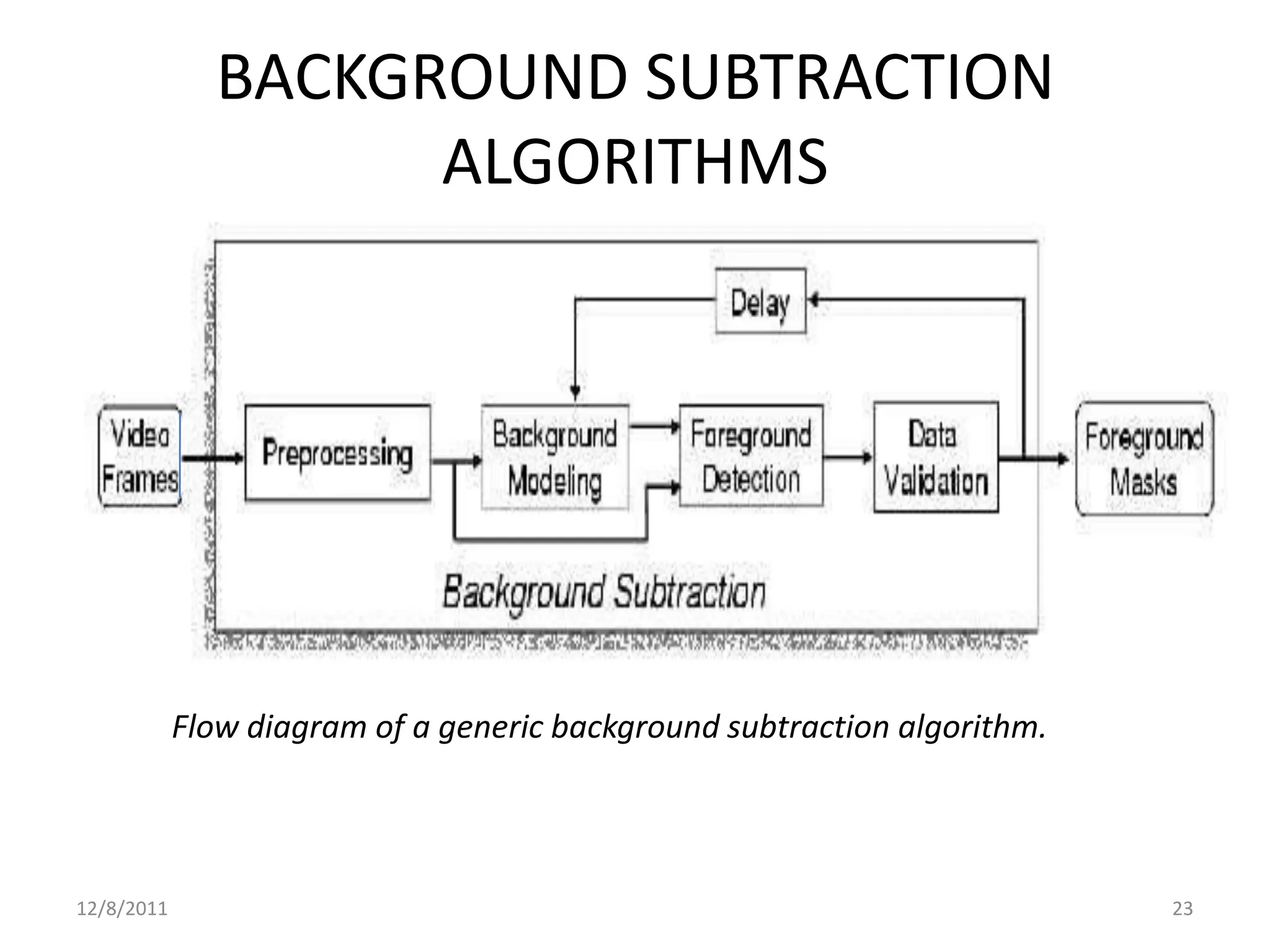

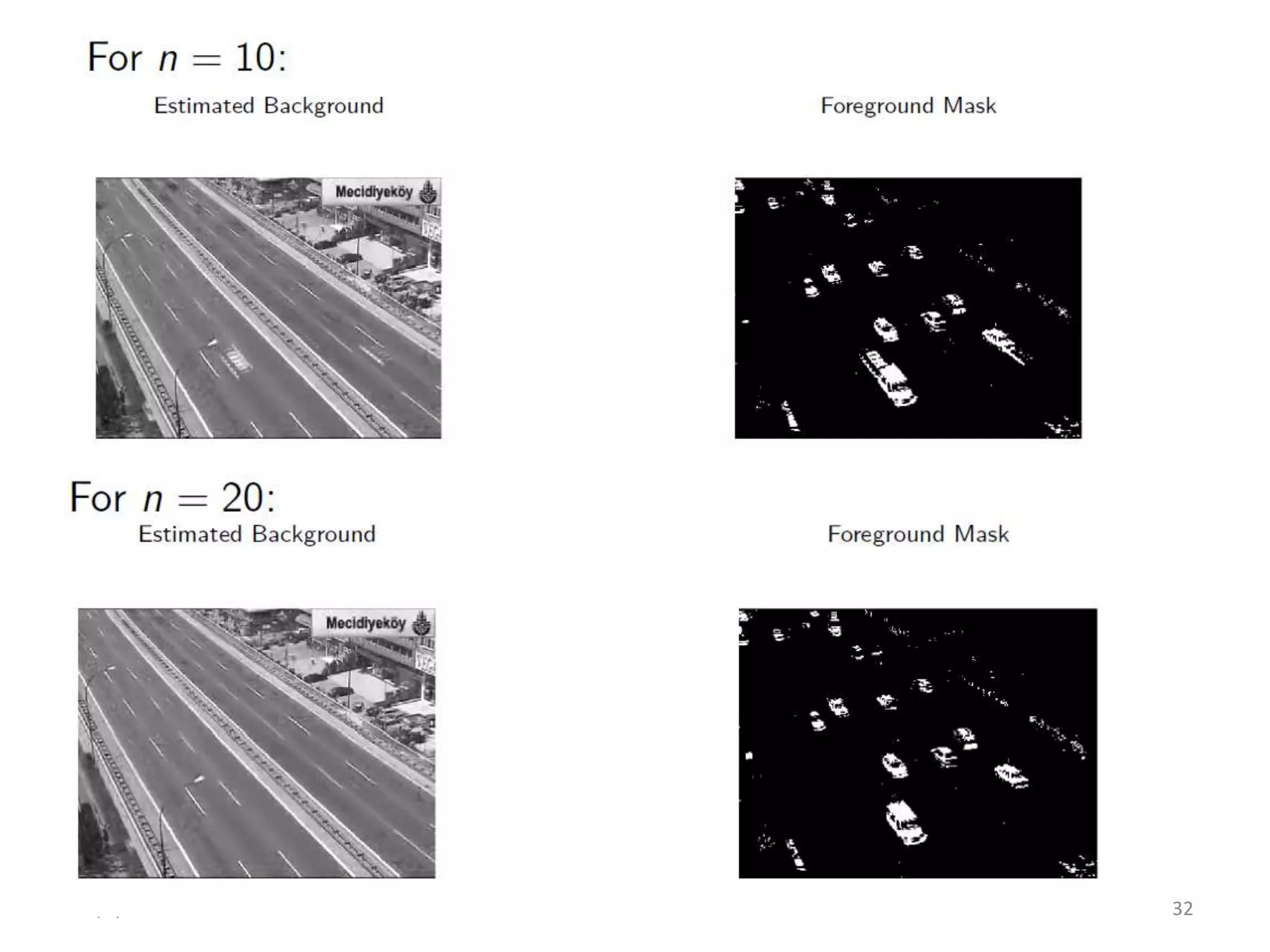

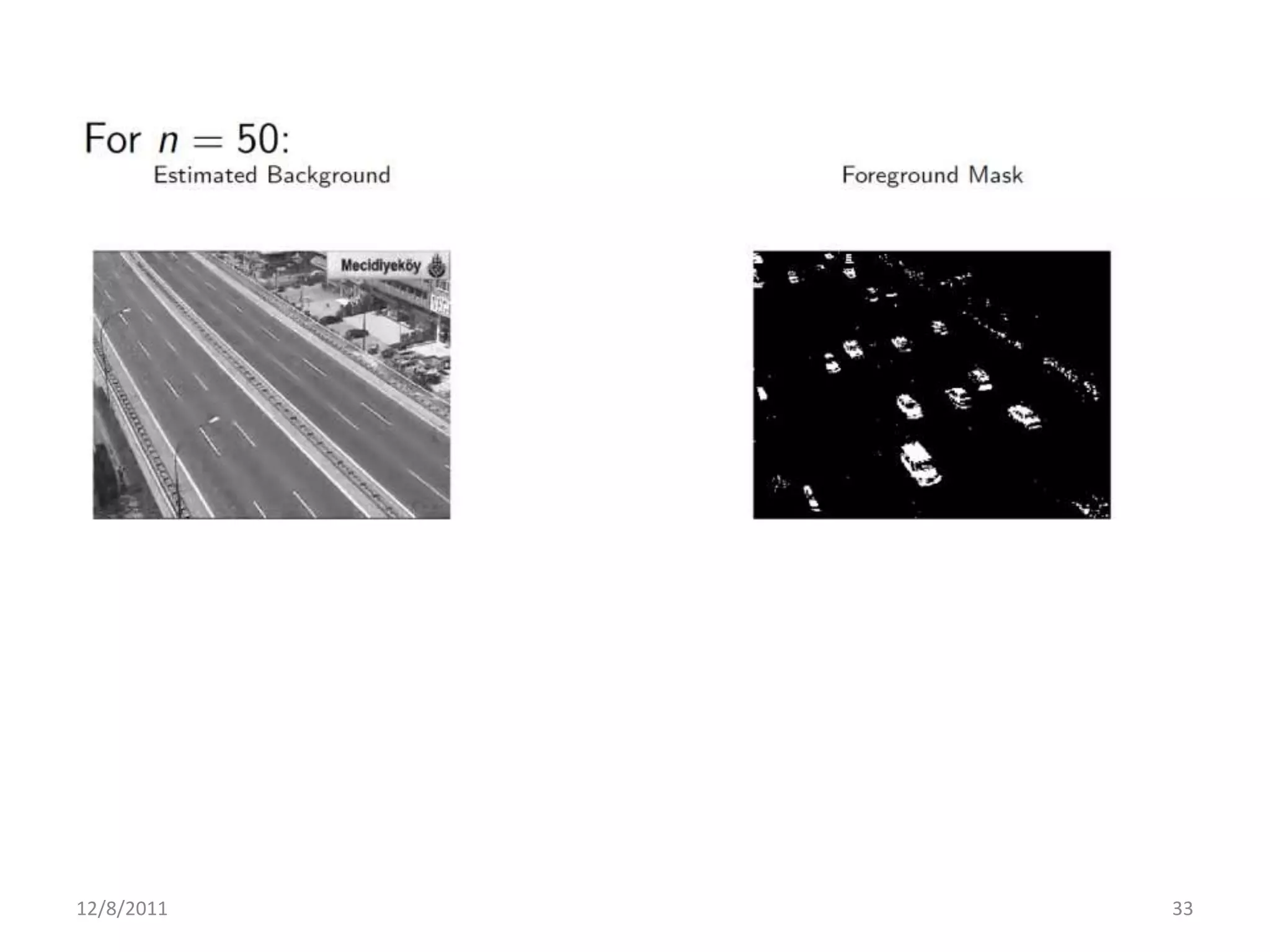

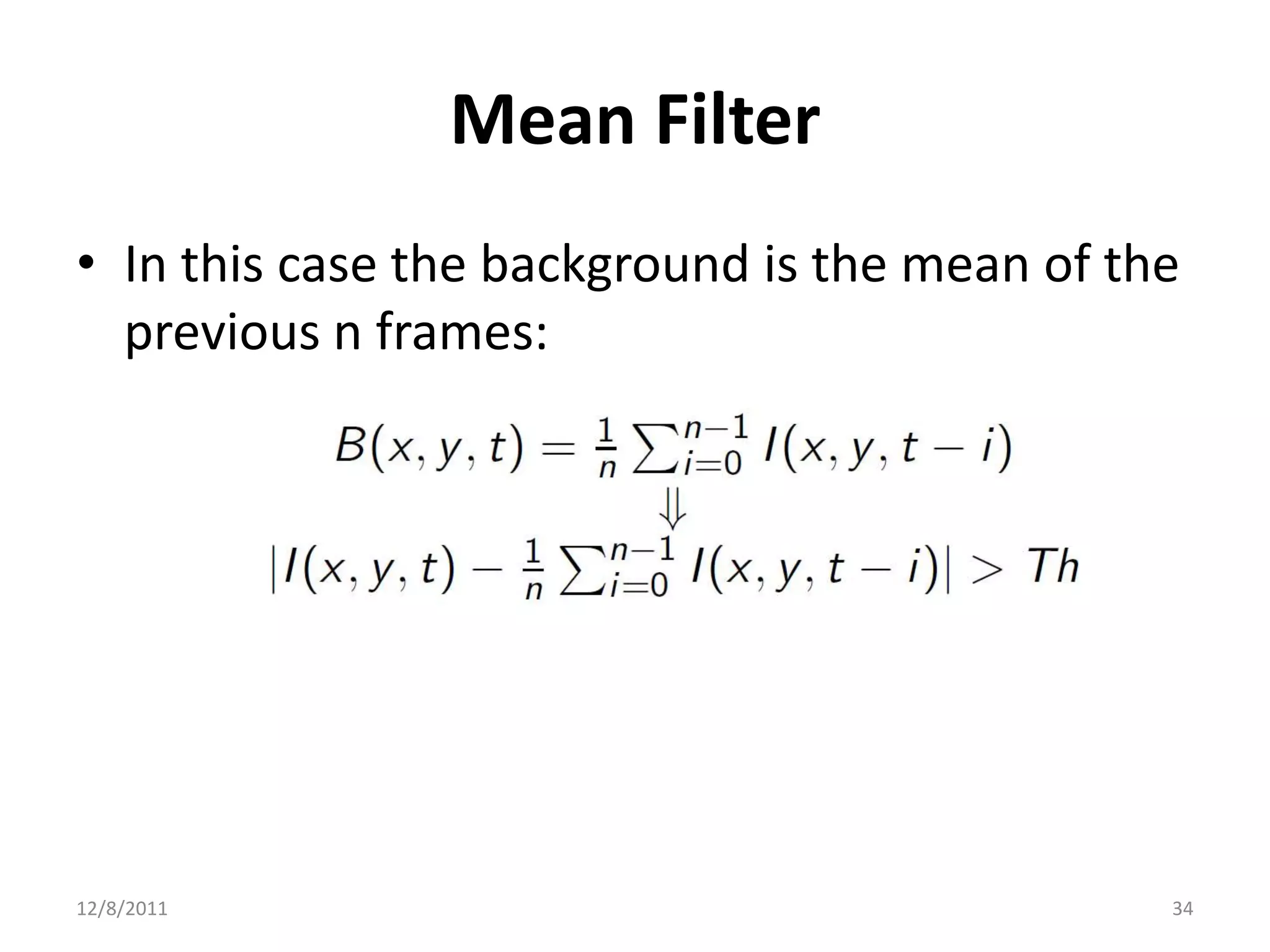

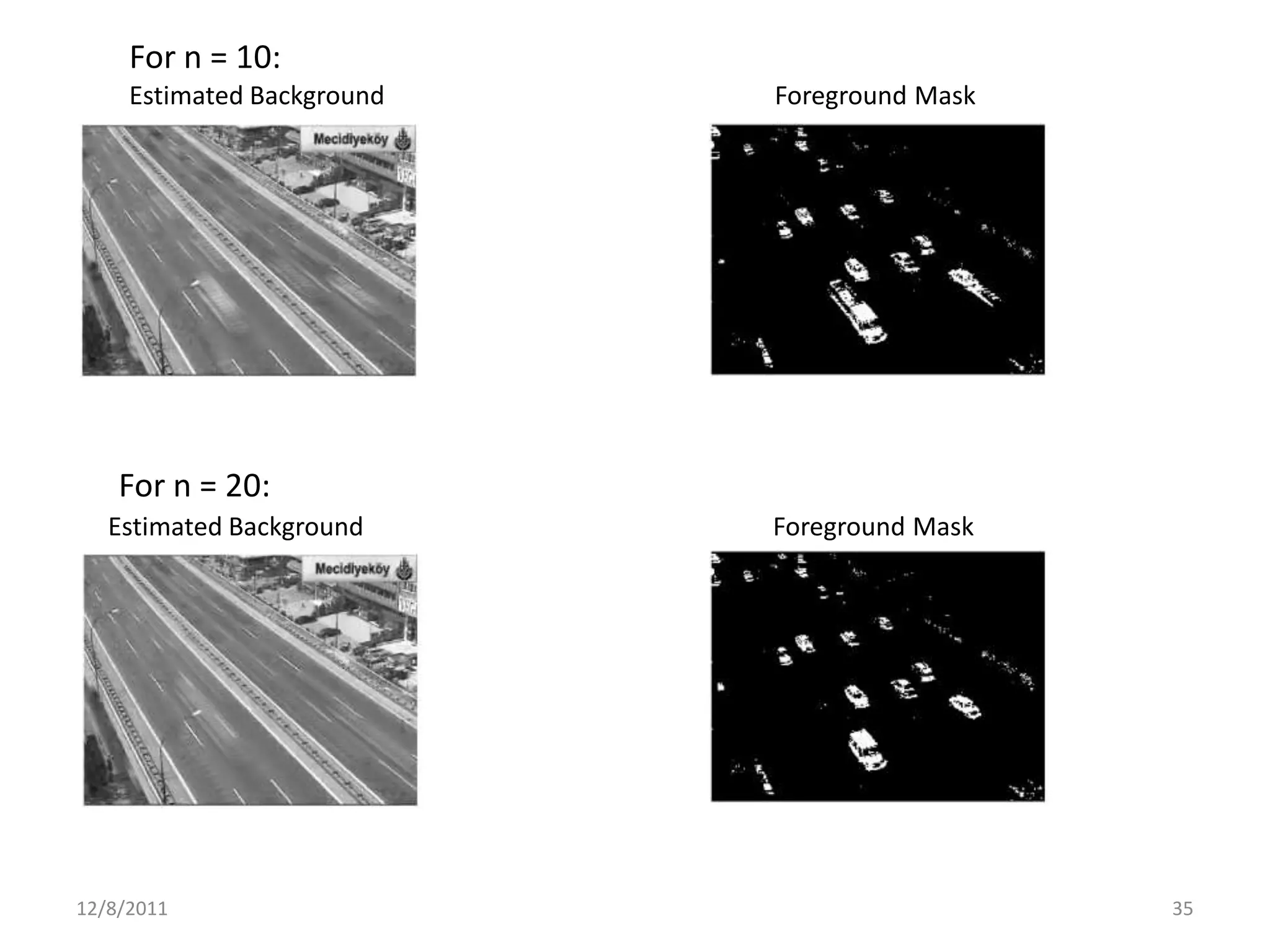

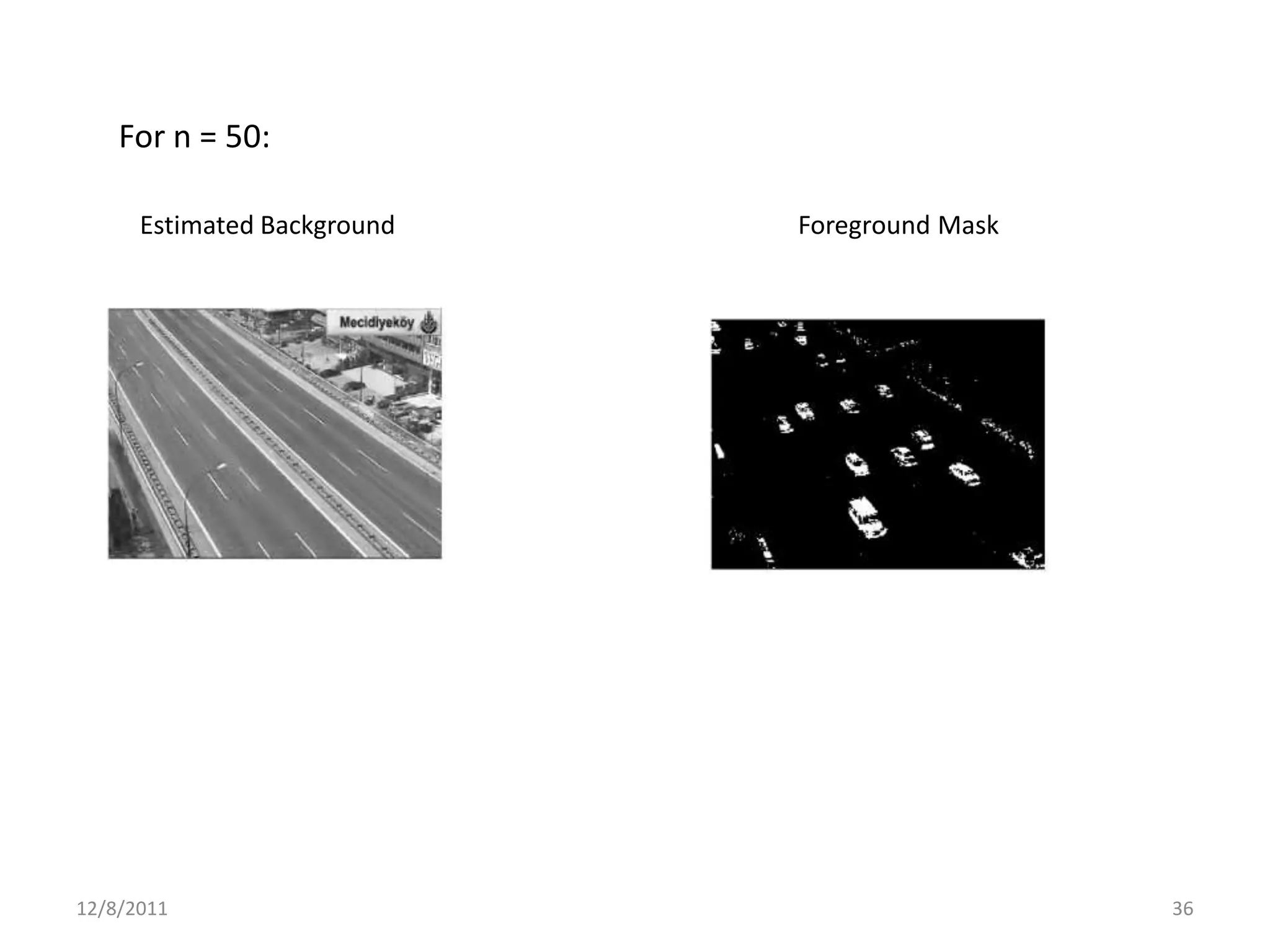

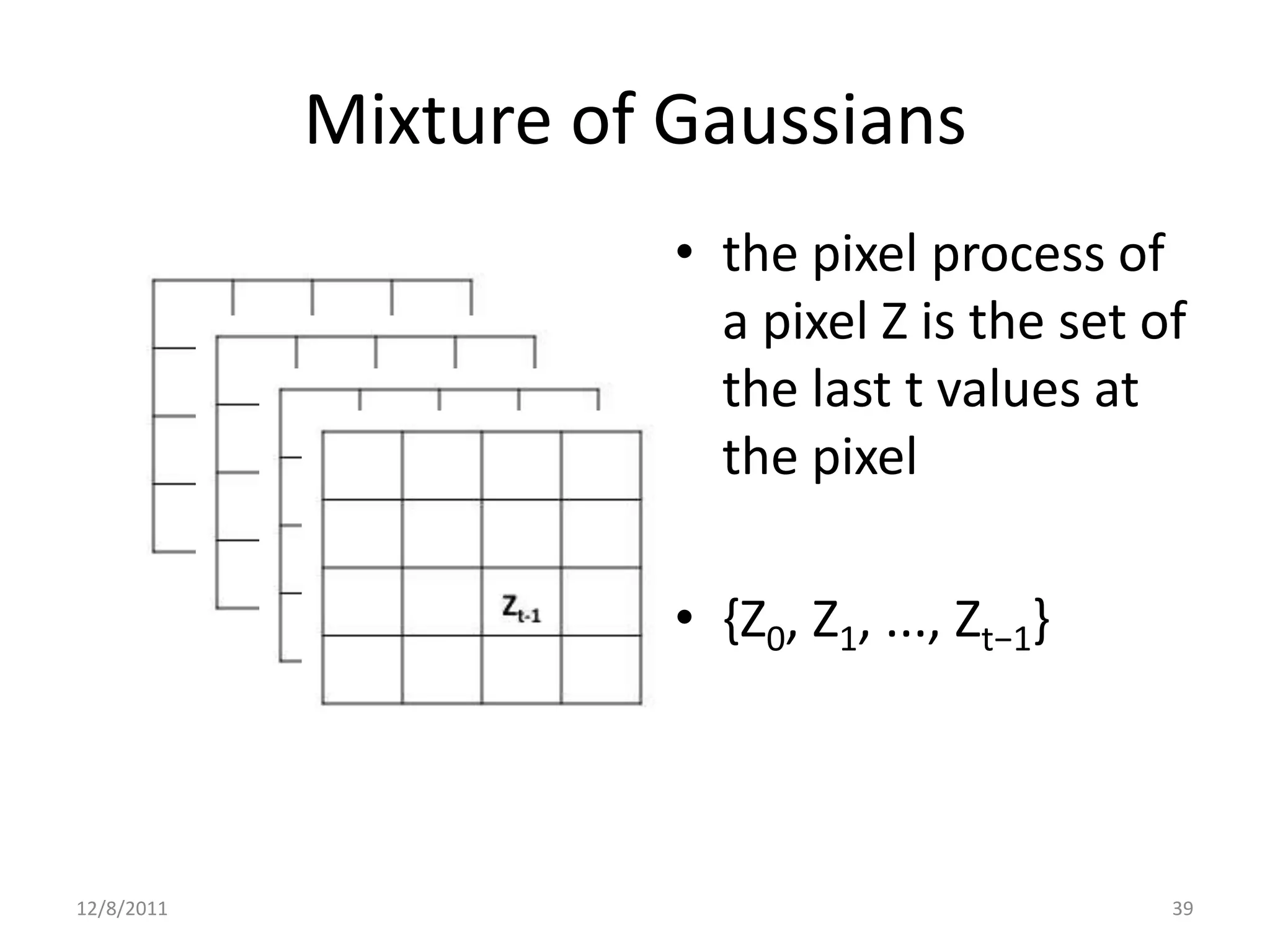

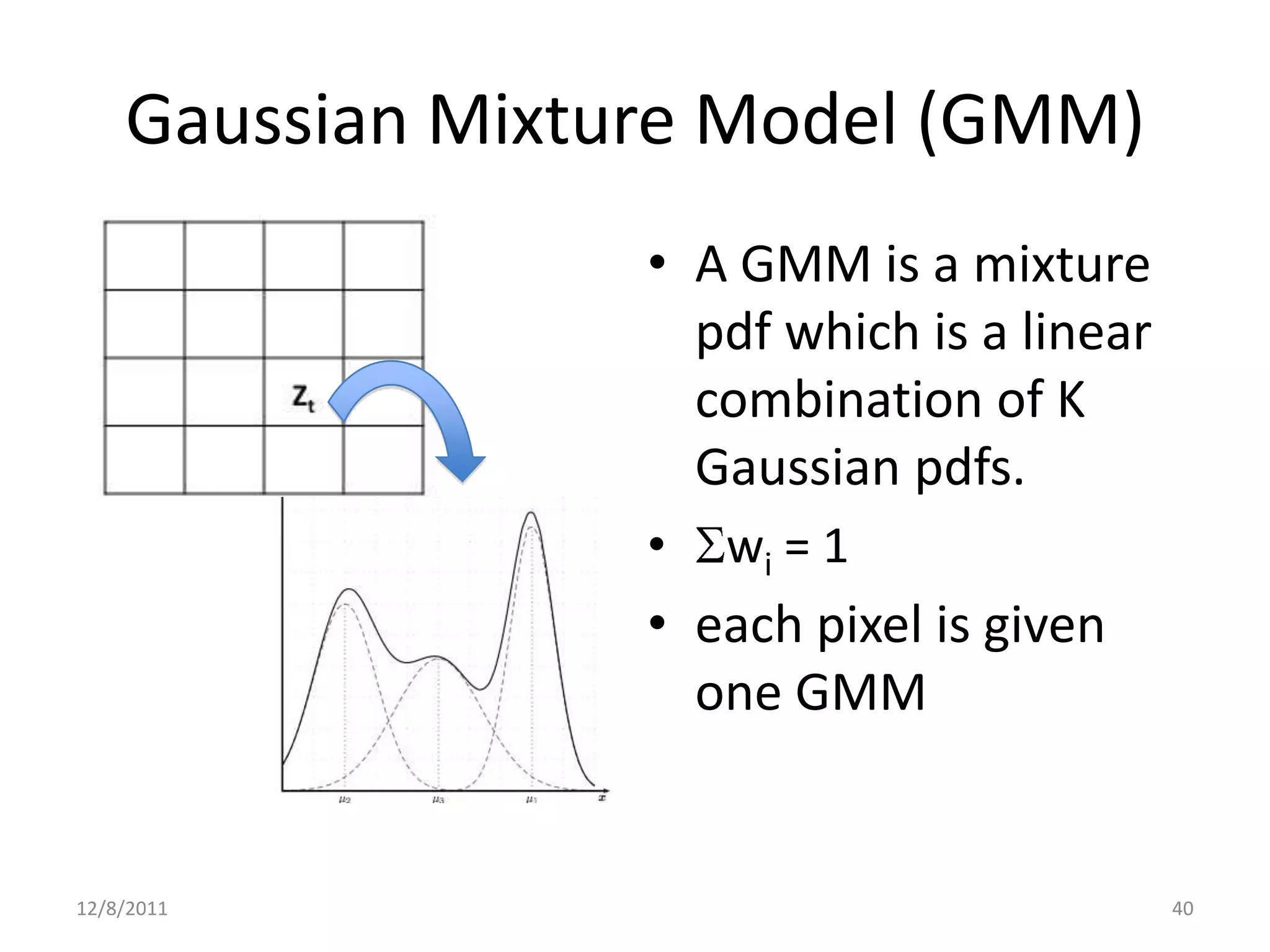

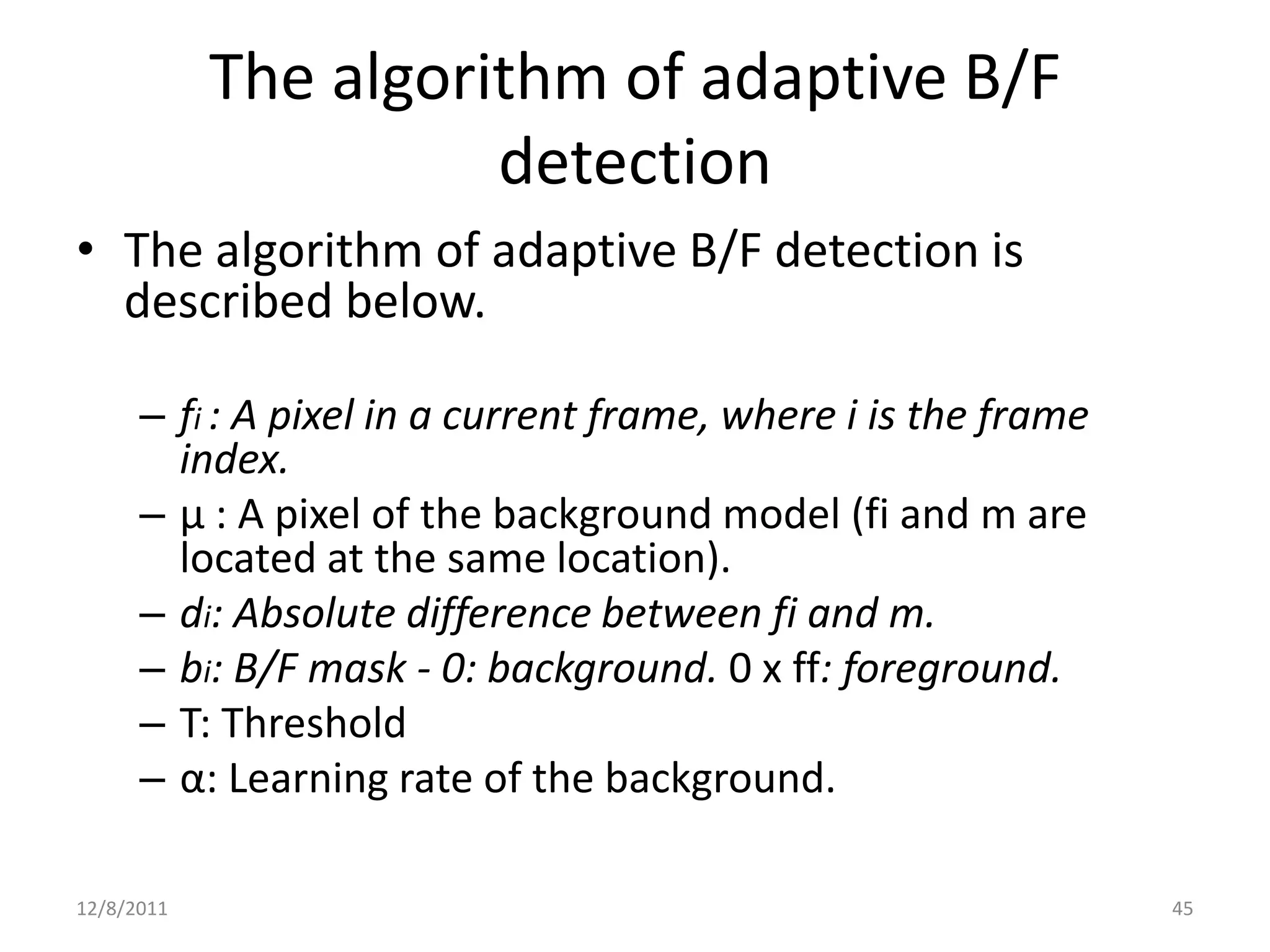

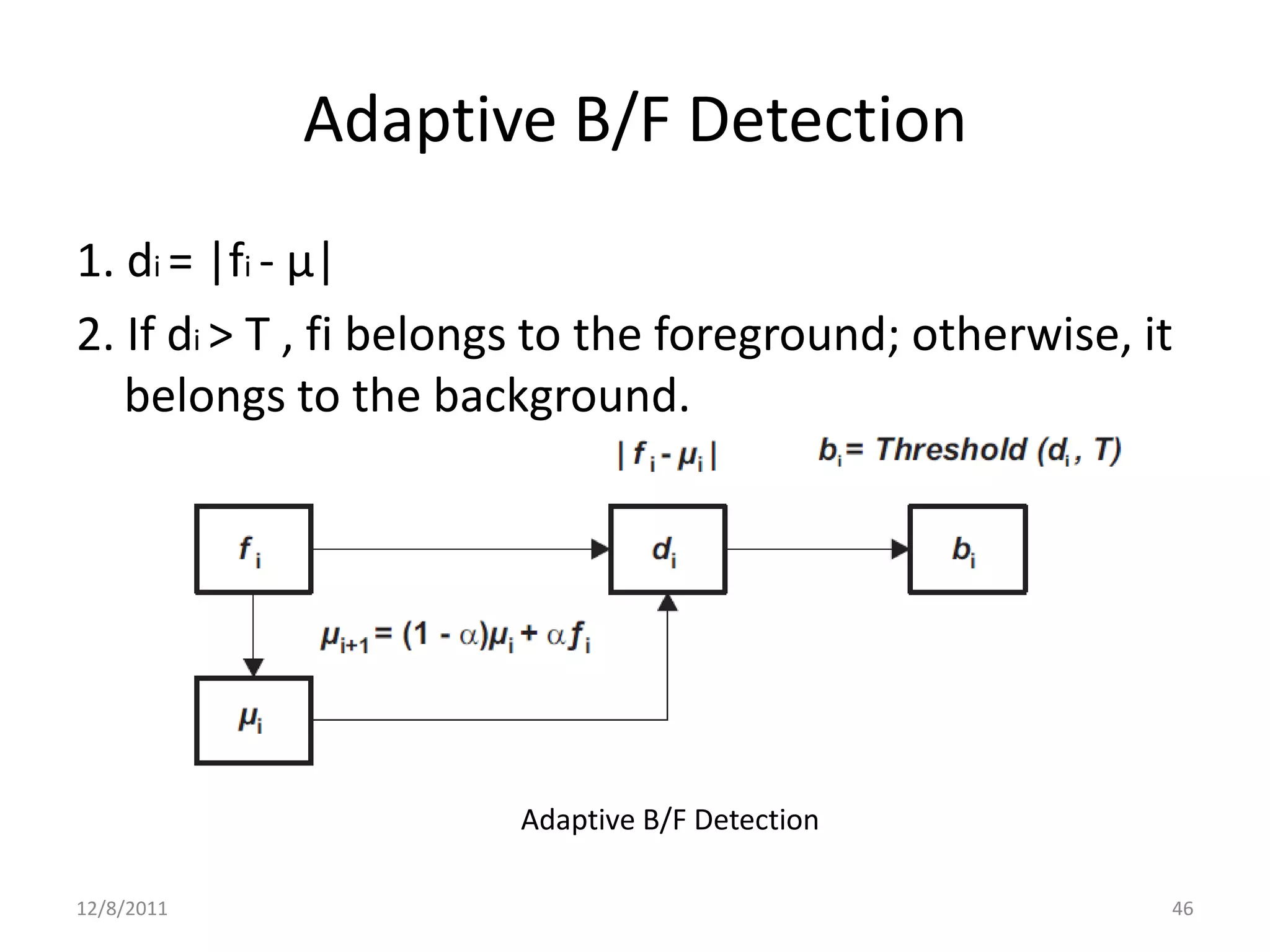

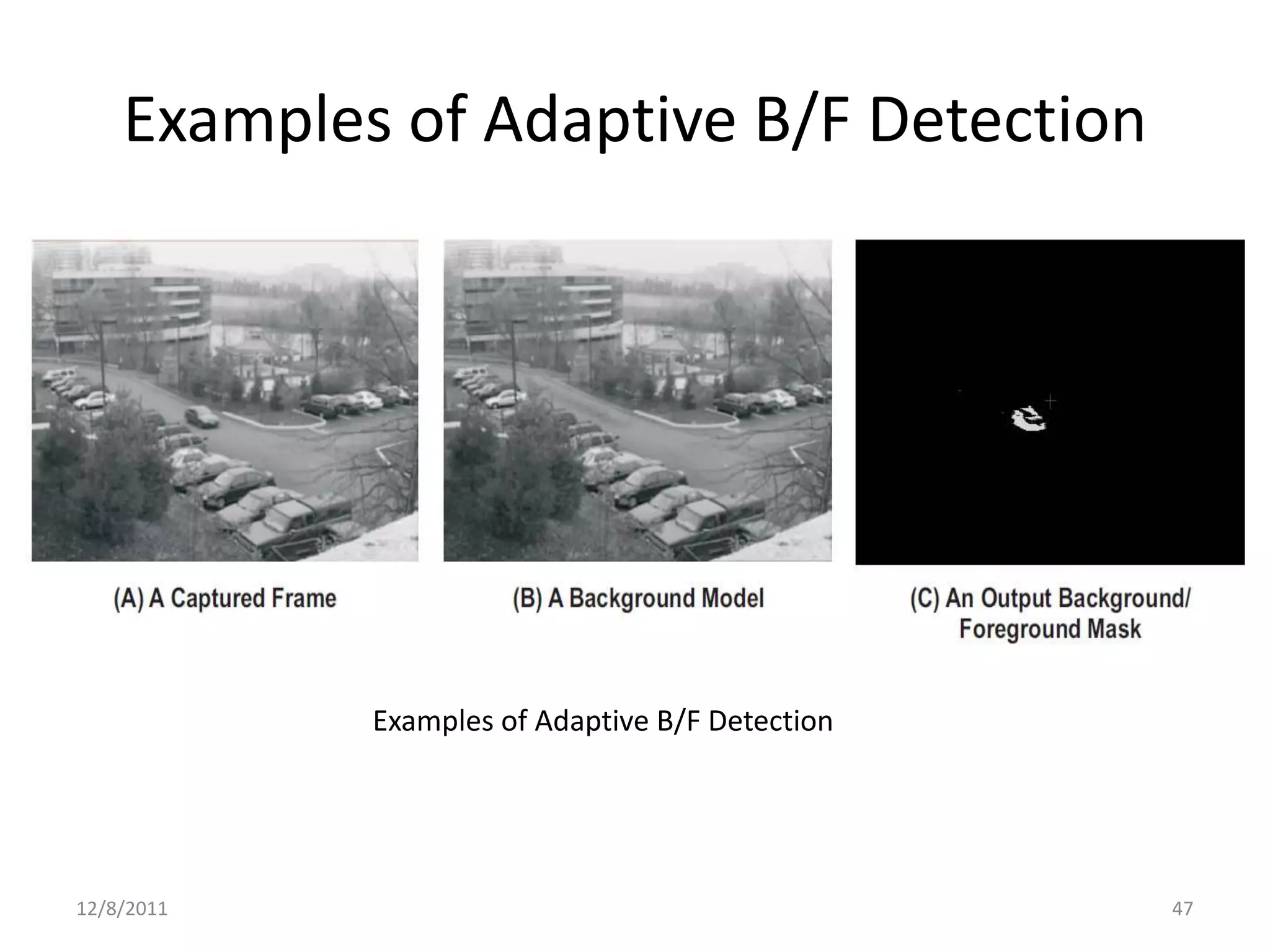

Background subtraction is a technique for separating foreground objects from the background in video frames. It compares each frame with a background model and flags pixels that differ significantly from that model as belonging to moving foreground objects. Recursive techniques such as mixtures of Gaussians model each pixel's values over time with several Gaussian distributions. They update those distributions with every new frame rather than storing a buffer of past frames, so the background model can adapt to changing lighting conditions. Adaptive background/foreground detection relies on such a continuously updated model to separate foreground objects from the background robustly while the scene changes.
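To make the recursive mixture-of-Gaussians idea concrete, here is a minimal sketch of a simplified Stauffer-Grimson-style model for grayscale frames, written in pure Python for readability. It is an illustration under stated assumptions, not the method from the slides. The class names `PixelMoG` and `MoGBackgroundSubtractor` are hypothetical, and the parameter values (3 components, learning rate 0.05, a 2.5-sigma match test, background weight ratio 0.7) are illustrative choices. The update also uses the common simplification rho = alpha instead of alpha times the Gaussian likelihood. Each pixel keeps its own small mixture. A new value either matches an existing component, which is then pulled toward it, or replaces the least probable component. The pixel counts as background only if it matched one of the high-weight, low-variance components.

```python
import math
from dataclasses import dataclass, field
from typing import List


@dataclass
class Gaussian:
    mean: float
    var: float
    weight: float


@dataclass
class PixelMoG:
    """Simplified Stauffer-Grimson mixture of Gaussians for one grayscale pixel (illustrative)."""
    k: int = 3                 # assumed: max components per pixel
    alpha: float = 0.05        # assumed: learning rate
    match_sigmas: float = 2.5  # x matches a component if |x - mean| <= match_sigmas * std
    bg_ratio: float = 0.7      # assumed: cumulative weight that counts as background
    init_var: float = 225.0    # variance of a newly created component
    init_weight: float = 0.05  # weight of a newly created component
    min_var: float = 4.0       # floor that stops variances collapsing to zero
    comps: List[Gaussian] = field(default_factory=list)

    def _rank(self, g: Gaussian) -> float:
        # High weight and low spread mean "probably background".
        return g.weight / math.sqrt(g.var)

    def update(self, x: float) -> bool:
        """Fold intensity x into the model and return True if x is foreground."""
        # Components stay sorted by rank, so the first match is the most probable one.
        matched = next((g for g in self.comps
                        if abs(x - g.mean) <= self.match_sigmas * math.sqrt(g.var)), None)
        # Decay every weight; the matched component also gains alpha.
        for g in self.comps:
            g.weight = (1 - self.alpha) * g.weight + (self.alpha if g is matched else 0.0)
        if matched is not None:
            rho = self.alpha  # simplification: rho = alpha, not alpha * N(x | mean, var)
            matched.mean = (1 - rho) * matched.mean + rho * x
            matched.var = max(self.min_var,
                              (1 - rho) * matched.var + rho * (x - matched.mean) ** 2)
        else:
            # No match: add a wide, low-weight component centred on x,
            # replacing the least probable one if the mixture is full.
            matched = Gaussian(mean=x, var=self.init_var, weight=self.init_weight)
            if len(self.comps) < self.k:
                self.comps.append(matched)
            else:
                self.comps.sort(key=self._rank)
                self.comps[0] = matched
        total = sum(g.weight for g in self.comps)
        for g in self.comps:
            g.weight /= total
        # The top-ranked components whose weights first exceed bg_ratio form the background.
        self.comps.sort(key=self._rank, reverse=True)
        cumulative = 0.0
        for g in self.comps:
            if g is matched:
                return False
            cumulative += g.weight
            if cumulative > self.bg_ratio:
                break
        return True


class MoGBackgroundSubtractor:
    """Keeps one PixelMoG per pixel and returns a 0/1 foreground mask per frame."""

    def __init__(self, width: int, height: int, **params):
        self.width = width
        self.models = [PixelMoG(**params) for _ in range(width * height)]

    def apply(self, frame: List[List[int]]) -> List[List[int]]:
        flat = [px for row in frame for px in row]
        fg = [int(m.update(float(px))) for m, px in zip(self.models, flat)]
        return [fg[i:i + self.width] for i in range(0, len(fg), self.width)]


if __name__ == "__main__":
    W, H = 4, 4
    sub = MoGBackgroundSubtractor(W, H)
    background = [[100] * W for _ in range(H)]
    for _ in range(50):
        sub.apply(background)  # learn a static scene
    frame = [row[:] for row in background]
    for r in range(2):
        for c in range(2):
            frame[r][c] = 250  # a bright object appears in the top-left corner
    for row in sub.apply(frame):
        print(row)  # 1s only in the top-left 2x2 square
```

The model keeps updating its weights and means. As a result, an object that stays still is eventually absorbed into the background; with these settings this takes roughly seven frames. That absorption is the adaptive behaviour described above, and a smaller `alpha` slows it down. For real video, a vectorised library implementation such as OpenCV's `cv2.createBackgroundSubtractorMOG2` is the more practical choice.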