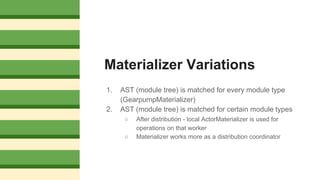

The document discusses integrating Akka streams with the Gearpump big data streaming platform. It provides background on Akka streams and Gearpump, and describes how Gearpump implements a GearpumpMaterializer to rewrite the Akka streams module tree for distributed execution across a Gearpump cluster. Key points covered include the object models of Akka streams and Gearpump, prerequisites for big data platforms, challenges integrating the two, and how the materializer handles distribution.

![Big data

platform

integration

challenges (1)

A number of

GraphStages have

completion or

cancellation

semantics. Big data

pipelines are often

infinite streams and

do not complete.

Cancel is often

viewed as a failure.

● Balance[T]

● Completion[T]

● Merge[T]

● Split[T]](https://image.slidesharecdn.com/gearpumpakka-streams-161006023349/85/Gearpump-akka-streams-16-320.jpg)
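The completion issue on this slide can be illustrated without Akka at all. The sketch below is only an analogy: it assumes Scala 2.13's `LazyList` as a stand-in for an unbounded stream, and the object and method names are hypothetical. A scan-style aggregate yields a value after every element, while a fold-style aggregate only yields once its input ends, so it has to be restricted to a bounded prefix.

```scala
object CompletionDemo {
  // An unbounded upstream, standing in for a big data source that never completes.
  val unbounded: LazyList[Int] = LazyList.from(1)

  // Scan-like behaviour: a running total is available after every element.
  def runningTotals(n: Int): List[Int] =
    unbounded.scanLeft(0)(_ + _).drop(1).take(n).toList

  // Fold-like behaviour needs the end of its input, so it is only safe on a bounded prefix.
  def totalOfFirst(n: Int): Int =
    unbounded.take(n).foldLeft(0)(_ + _)

  def main(args: Array[String]): Unit = {
    println(runningTotals(5)) // List(1, 3, 6, 10, 15)
    println(totalOfFirst(5))  // 15
  }
}
```

Calling `foldLeft` directly on `unbounded` would never return, which is the plain-Scala counterpart of a stage that waits for a completion signal that an infinite pipeline never sends.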

![Big data

platform

integration

challenges (2)

A number of

GraphStages have

specific upstream and

downstream ordering

and timing directives.

● Batch[T]

● Concat[T]

● Delay[T]

● DelayInitial[T]

● Interleave[T]](https://image.slidesharecdn.com/gearpumpakka-streams-161006023349/85/Gearpump-akka-streams-17-320.jpg)
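One way to see why an ordering directive such as Concat is awkward on infinite inputs is the small analogy below. It is a sketch under assumptions: `LazyList` (Scala 2.13) stands in for a stream, and `interleavePrefix` is a simplified alternation, not the exact behaviour of Akka's `Interleave`.

```scala
object ConcatDemo {
  val first: LazyList[Int]  = LazyList.from(1)  // never ends
  val second: LazyList[Int] = LazyList(-1, -2)  // bounded

  // Concat-like: drain `first` completely before touching `second`.
  def concatPrefix(n: Int): List[Int] =
    (first ++ second).take(n).toList

  // Simplified alternation: one element from each input in turn.
  def interleavePrefix(n: Int): List[Int] =
    first.zip(second).flatMap { case (a, b) => LazyList(a, b) }.take(n).toList

  def main(args: Array[String]): Unit = {
    println(concatPrefix(4))     // List(1, 2, 3, 4)
    println(interleavePrefix(4)) // List(1, -1, 2, -2)
  }
}
```

With an unbounded first input the concatenated result never reaches the second input, whereas alternation makes progress on both.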

![Akka-streams Graph[S, M]

● Graph is parameterized by

○ Shape

○ Materialized Value

● Graph contains a Module, which in turn contains a Shape

○ Module is where the runtime is constructed and manipulated

● Graph’s first level subtypes provide basic functionality

○ Source

○ Sink

○ Flow

○ BidiFlow

Diagram: Graph[S, M] with its subtypes Source, Sink, Flow and BidiFlow, alongside Module and Shape.](https://image.slidesharecdn.com/gearpumpakka-streams-161006023349/85/Gearpump-akka-streams-23-320.jpg)

![GraphStage[S <: Shape]

Graph

GraphStageWithMaterializedValue

GraphStage

GraphStageModule

Module](https://image.slidesharecdn.com/gearpumpakka-streams-161006023349/85/Gearpump-akka-streams-24-320.jpg)

![GraphStage[S <: Shape]

subtypes (incomplete)

↳ Balance[T]

↳ Batch[In, Out]

↳ Broadcast[T]

↳ Collect[In, Out]

↳ Concat[T]

↳ DelayInitial[T]

↳ DropWhile[T]

↳ Expand[In, Out]

↳ FlattenMerge[T, M]

↳ Fold[In, Out]

↳ FoldAsync[T]

↳ FutureSource[T]

↳ GroupBy[T, K]

↳ Grouped[T]

↳ GroupedWithin[T]

↳ Interleave[T]

↳ Intersperse[T]

↳ LimitWeighted[T]

↳ Map[In, Out]

↳ MapAsync[In, Out]

↳ Merge[T]

↳ MergePreferred[T]

↳ MergeSorted[T]

↳ OrElse[T]

↳ Partition[T]

↳ PrefixAndTail[T]

↳ Recover[T]

↳ Scan[In, Out]

↳ SimpleLinearGraph[T]

↳ Sliding[T]](https://image.slidesharecdn.com/gearpumpakka-streams-161006023349/85/Gearpump-akka-streams-25-320.jpg)

![What about Module?

● Module is a recursive structure containing a Set[Module]

● Module is a declarative data structure used as the AST

● Module is used to represent a graph of nodes and edges from the original

GraphStages

● Module contains downstream and upstream ports (edges)

● Materializers walk the module tree to create and run instances of publishers

and subscribers.

● Each publisher and subscriber is an actor (ActorGraphInterpreter)](https://image.slidesharecdn.com/gearpumpakka-streams-161006023349/85/Gearpump-akka-streams-26-320.jpg)
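The recursive structure described on this slide can be sketched with a toy model. The types below are illustrative assumptions and are not Akka's internal `Module` API; they only show how a walker can descend through nested modules to find the leaves that a materializer would turn into running publishers and subscribers.

```scala
// Toy model only: these types are hypothetical and are NOT Akka's internal Module API.
sealed trait Module {
  def name: String
  def subModules: Set[Module]
}

// A leaf stands in for a single GraphStage (for example a Map or a Merge).
final case class AtomicModule(name: String) extends Module {
  val subModules: Set[Module] = Set.empty
}

// A composite module nests other modules, which makes the structure recursive.
final case class CompositeModule(name: String, subModules: Set[Module]) extends Module

object ModuleWalk {
  // Depth-first walk that collects the leaves a materializer would have to instantiate.
  def atomics(m: Module): Set[AtomicModule] = m match {
    case a: AtomicModule        => Set(a)
    case CompositeModule(_, ms) => ms.flatMap(atomics)
  }

  def main(args: Array[String]): Unit = {
    val inner = CompositeModule(
      "fanOut",
      Set(AtomicModule("broadcast"), AtomicModule("flowA"), AtomicModule("flowB")))
    val tree = CompositeModule(
      "root",
      Set(AtomicModule("source"), inner, AtomicModule("merge"), AtomicModule("sink")))
    // prints: broadcast, flowA, flowB, merge, sink, source
    println(atomics(tree).map(_.name).toList.sorted.mkString(", "))
  }
}
```

A real materializer also has to resolve the ports between modules; this sketch leaves edges out to keep the recursion visible.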

![Gearpump Object Model

↪ Graph[Node, Edge] holds

↳ Tasks (Node)

↳ Partitioners (Edge)

↪ This is a Gearpump Graph, not to be

confused with akka-streams Graph.](https://image.slidesharecdn.com/gearpumpakka-streams-161006023349/85/Gearpump-akka-streams-27-320.jpg)

![Gearpump Graph[N<:Task, E<:Partitioner]

● Graph is parameterized by

○ Node - must be a subtype of Task

○ Edge - must be a subtype of Partitioner

Diagram: Graph[N, E] holding a List[Task] and a List[Partitioner].](https://image.slidesharecdn.com/gearpumpakka-streams-161006023349/85/Gearpump-akka-streams-28-320.jpg)
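To make the node and edge parameterization concrete, here is a minimal sketch of a graph whose nodes must be tasks and whose edges must be partitioners. Everything in it is a hypothetical stand-in: `Task`, `Partitioner`, `SimpleTask`, `ToyShuffle`, `ToyHash` and `TaskGraph` are simplified illustrations, not Gearpump's actual classes.

```scala
// Hypothetical stand-ins for illustration; Gearpump's real Task and Partitioner are richer.
trait Task { def name: String }
trait Partitioner { def name: String }

final case class SimpleTask(name: String) extends Task
case object ToyShuffle extends Partitioner { val name = "shuffle" }
case object ToyHash extends Partitioner { val name = "hash" }

// Nodes are bounded by Task and edges by Partitioner, mirroring Graph[N <: Task, E <: Partitioner].
final case class TaskGraph[N <: Task, E <: Partitioner](
    nodes: List[N],
    edges: List[(N, E, N)]) {

  // Adds both endpoints (if new) and records how data is partitioned between them.
  def addEdge(from: N, via: E, to: N): TaskGraph[N, E] =
    TaskGraph((nodes ++ List(from, to)).distinct, edges :+ ((from, via, to)))

  // All tasks that receive data directly from `n`.
  def downstreamOf(n: N): List[N] =
    edges.collect { case (`n`, _, to) => to }
}

object TaskGraphDemo {
  def main(args: Array[String]): Unit = {
    val source = SimpleTask("source")
    val mapper = SimpleTask("map")
    val sink   = SimpleTask("sink")
    val g = TaskGraph[Task, Partitioner](Nil, Nil)
      .addEdge(source, ToyShuffle, mapper)
      .addEdge(mapper, ToyHash, sink)
    println(g.nodes.map(_.name))                 // List(source, map, sink)
    println(g.downstreamOf(mapper).map(_.name))  // List(sink)
  }
}
```

Putting the partitioner on the edge is the design point: how elements are routed between two tasks is a property of the connection, not of either task.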

![GraphTask

subtypes (incomplete)

↳ BalanceTask

↳ BatchTask[In, Out]

↳ BroadcastTask[T]

↳ CollectTask[In, Out]

↳ ConcatTask

↳ DelayInitialTask[T]

↳ DropWhileTask[T]

↳ ExpandTask[In, Out]

↳ FlattenMerge[T, M]

↳ FoldTask[In, Out]

↳ FutureSourceTask[T]

↳ GroupByTask[T, K]

↳ GroupedTask[T]

↳ GroupedWithinTask[T]

↳ InterleaveTask[T]

↳ IntersperseTask[T]

↳ LimitWeightedTask[T]

↳ MapTask[In, Out]

↳ MapAsyncTask[In, Out]

↳ MergeTask[T]

↳ OrElseTask[T]

↳ PartitionTask[T]

↳ PrefixAndTailTask[T]

↳ RecoverTask[T]

↳ ScanTask[In, Out]

↳ SlidingTask[T]](https://image.slidesharecdn.com/gearpumpakka-streams-161006023349/85/Gearpump-akka-streams-30-320.jpg)
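The two subtype lists suggest a stage-to-task correspondence by name. The sketch below is a hypothetical lookup built from a few pairs that appear on both slides; it is not how the GearpumpMaterializer is implemented, and because both lists are marked incomplete, a missing entry here does not mean the stage is unsupported.

```scala
object StageToTask {
  // A handful of pairs copied from the two slide lists; the table is deliberately partial.
  private val known: Map[String, String] = Map(
    "Broadcast" -> "BroadcastTask",
    "Map"       -> "MapTask",
    "Merge"     -> "MergeTask",
    "Fold"      -> "FoldTask",
    "Scan"      -> "ScanTask"
  )

  // Some(task) when the stage has a counterpart in the table, None otherwise.
  def taskFor(stage: String): Option[String] = known.get(stage)

  // Splits a pipeline into rewritten task names and stage names with no table entry.
  def rewrite(stages: List[String]): (List[String], List[String]) = {
    val (ok, missing) = stages.partition(known.contains)
    (ok.map(known), missing)
  }

  def main(args: Array[String]): Unit = {
    // prints: (List(BroadcastTask, MapTask, MapTask, MergeTask),List(MergeSorted))
    println(rewrite(List("Broadcast", "Map", "Map", "Merge", "MergeSorted")))
  }
}
```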

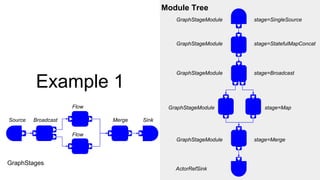

![Example 1

Source

Broadcast

Flow

Merge

Sink

implicit val materializer = ActorMaterializer()

val sinkActor = system.actorOf(Props(new SinkActor()))

val source = Source((1 to 5))

val sink = Sink.actorRef(sinkActor, "COMPLETE")

val flowA: Flow[Int, Int, NotUsed] = Flow[Int].map {

x => println(s"processing broadcasted element : $x in flowA"); x

}

val flowB: Flow[Int, Int, NotUsed] = Flow[Int].map {

x => println(s"processing broadcasted element : $x in flowB"); x

}

val graph = RunnableGraph.fromGraph(GraphDSL.create() {

implicit b =>

val broadcast = b.add(Broadcast[Int](2))

val merge = b.add(Merge[Int](2))

source ~> broadcast

broadcast ~> flowA ~> merge

broadcast ~> flowB ~> merge

merge ~> sink

ClosedShape

})

graph.run()](https://image.slidesharecdn.com/gearpumpakka-streams-161006023349/85/Gearpump-akka-streams-32-320.jpg)

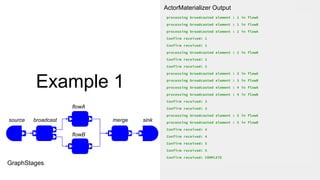

![Example 1

implicit val materializer = ActorMaterializer()

val sinkActor = system.actorOf(Props(new SinkActor()))

val source = Source((1 to 5))

val sink = Sink.actorRef(sinkActor, "COMPLETE")

val flowA: Flow[Int, Int, NotUsed] = Flow[Int].map {

x => println(s"processing broadcasted element : $x in flowA"); x

}

val flowB: Flow[Int, Int, NotUsed] = Flow[Int].map {

x => println(s"processing broadcasted element : $x in flowB"); x

}

val graph = RunnableGraph.fromGraph(GraphDSL.create() {

implicit b =>

val broadcast = b.add(Broadcast[Int](2))

val merge = b.add(Merge[Int](2))

source ~> broadcast

broadcast ~> flowA ~> merge

broadcast ~> flowB ~> merge

merge ~> sink

ClosedShape

})

graph.run()

Source Broadcast

Flow

Flow

Merge

GraphStages

Sink

class SinkActor extends Actor {

def receive: Receive = {

case any: Any =>

println(s"Confirm received: $any")

}

}](https://image.slidesharecdn.com/gearpumpakka-streams-161006023349/85/Gearpump-akka-streams-33-320.jpg)

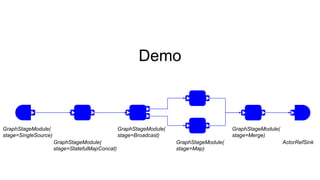

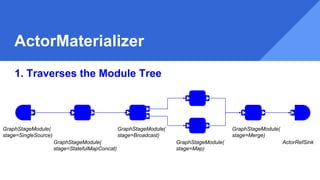

![Example 1

implicit val materializer = ActorMaterializer()

val sinkActor = system.actorOf(Props(new SinkActor()))

val source = Source((1 to 5))

val sink = Sink.actorRef(sinkActor, "COMPLETE")

val flowA: Flow[Int, Int, NotUsed] = Flow[Int].map {

x => println(s"processing broadcasted element : $x in flowA"); x

}

val flowB: Flow[Int, Int, NotUsed] = Flow[Int].map {

x => println(s"processing broadcasted element : $x in flowB"); x

}

val graph = RunnableGraph.fromGraph(GraphDSL.create() {

implicit b =>

val broadcast = b.add(Broadcast[Int](2))

val merge = b.add(Merge[Int](2))

source ~> broadcast

broadcast ~> flowA ~> merge

broadcast ~> flowB ~> merge

merge ~> sink

ClosedShape

})

graph.run()

source broadcast

flowA

flowB

merge

GraphStages

sink](https://image.slidesharecdn.com/gearpumpakka-streams-161006023349/85/Gearpump-akka-streams-35-320.jpg)

![Example 1

implicit val materializer = GearpumpMaterializer()

val sinkActor = system.actorOf(Props(new SinkActor()))

val source = Source((1 to 5))

val sink = Sink.actorRef(sinkActor, "COMPLETE")

val flowA: Flow[Int, Int, NotUsed] = Flow[Int].map {

x => println(s"processing broadcasted element : $x in flowA"); x

}

val flowB: Flow[Int, Int, NotUsed] = Flow[Int].map {

x => println(s"processing broadcasted element : $x in flowB"); x

}

val graph = RunnableGraph.fromGraph(GraphDSL.create() {

implicit b =>

val broadcast = b.add(Broadcast[Int](2))

val merge = b.add(Merge[Int](2))

source ~> broadcast

broadcast ~> flowA ~> merge

broadcast ~> flowB ~> merge

merge ~> sink

ClosedShape

})

graph.run()

source broadcast

flowA

flowB

merge

GraphStages

sink](https://image.slidesharecdn.com/gearpumpakka-streams-161006023349/85/Gearpump-akka-streams-37-320.jpg)

![Example 2

No local graph.

More typical of distributed apps.

implicit val materializer = GearpumpMaterializer()

val sink = GearSink.to(new LoggerSink[String])

val sourceData = new CollectionDataSource(

List("red hat", "yellow sweater", "blue jack", "red apple",

"green plant", "blue sky"))

val source = GearSource.from[String](sourceData)

source.filter(_.startsWith("red")).map("I want to order item: " + _)

.runWith(sink)](https://image.slidesharecdn.com/gearpumpakka-streams-161006023349/85/Gearpump-akka-streams-49-320.jpg)

)

val C = builder.add(Merge[Int](2))

val D = builder.add(Flow[Int].map(_ + 1))

val E = builder.add(Balance[Int](2))

val F = builder.add(Merge[Int](2))

val G = builder.add(Sink.foreach(println)).in

C <~ F

A ~> B ~> C ~> F

B ~> D ~> E ~> F

E ~> G

ClosedShape

}).run()](https://image.slidesharecdn.com/gearpumpakka-streams-161006023349/85/Gearpump-akka-streams-50-320.jpg)