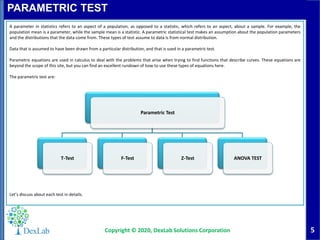

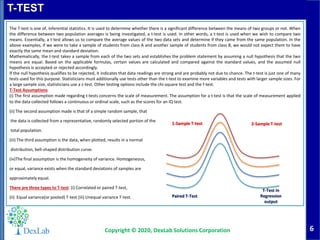

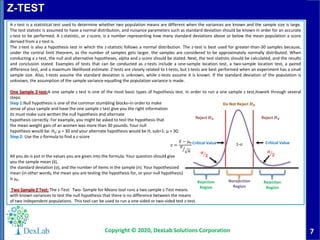

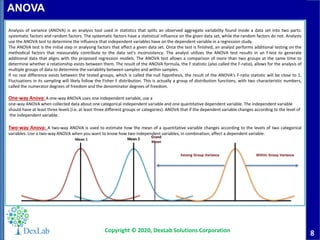

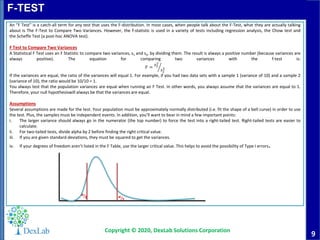

The document provides an overview of parametric hypothesis tests, including the t-test, z-test, ANOVA, and F-test, with an emphasis on their assumptions and their applications in statistical analysis. It contrasts parametric tests, which assume the data follow a specified distribution (typically the normal distribution) and are commonly used with larger samples, with non-parametric tests, which make no such distributional assumptions. The conclusion highlights the advantages of parametric tests, namely ease of calculation and greater precision when their assumptions hold, as well as their limitations, particularly for small sample sizes.
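To make the tests named above concrete, here is a minimal sketch of how their test statistics are computed, using only the Python standard library. The function names (`z_test`, `pooled_t_statistic`, `variance_ratio_f`, `one_way_anova_f`) are hypothetical and not taken from the source. The sketch assumes a known population standard deviation for the z-test and equal population variances for the pooled t-test. It returns a p-value only for the z-test, because the standard library has no t or F distribution; in practice, `scipy.stats.ttest_ind` and `scipy.stats.f_oneway` supply full tests with p-values.

```python
import math
from statistics import NormalDist, mean, variance


def z_test(sample, mu0, sigma):
    """One-sample z-test; assumes the population SD `sigma` is known."""
    n = len(sample)
    z = (mean(sample) - mu0) / (sigma / math.sqrt(n))
    # Two-sided p-value from the standard normal distribution.
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p


def pooled_t_statistic(a, b):
    """Student's two-sample t statistic; assumes equal population variances."""
    n1, n2 = len(a), len(b)
    # Pooled sample variance, weighted by each group's degrees of freedom.
    sp2 = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / (n1 + n2 - 2)
    t = (mean(a) - mean(b)) / math.sqrt(sp2 * (1 / n1 + 1 / n2))
    return t, n1 + n2 - 2  # statistic and degrees of freedom


def variance_ratio_f(a, b):
    """F statistic for comparing two variances: ratio of sample variances."""
    return variance(a) / variance(b), len(a) - 1, len(b) - 1


def one_way_anova_f(*groups):
    """One-way ANOVA F statistic: between-group over within-group mean square."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand = mean([x for g in groups for x in g])
    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups)
    ss_within = sum(sum((x - mean(g)) ** 2 for x in g) for g in groups)
    df_between, df_within = k - 1, n_total - k
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within


if __name__ == "__main__":
    a = [1, 2, 3, 4, 5]
    b = [2, 3, 4, 5, 6]
    print(z_test(a, mu0=2, sigma=math.sqrt(5)))
    print(pooled_t_statistic(a, b))
    print(variance_ratio_f(a, [2, 4, 6, 8, 10]))
    print(one_way_anova_f([1, 2, 3], [4, 5, 6], [7, 8, 9]))
```

With two groups, the one-way ANOVA F statistic equals the square of the pooled t statistic, which is one reason ANOVA is often described as a generalization of the two-sample t-test to more than two groups.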