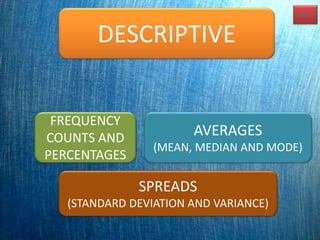

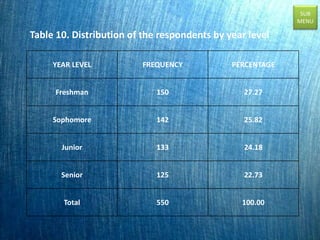

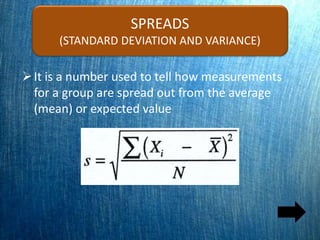

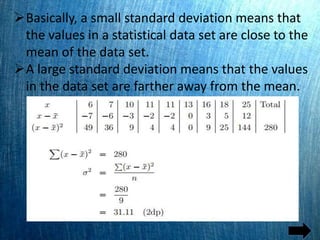

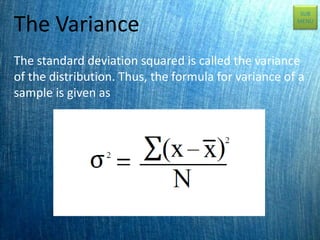

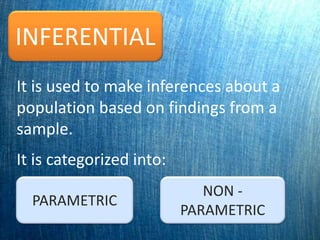

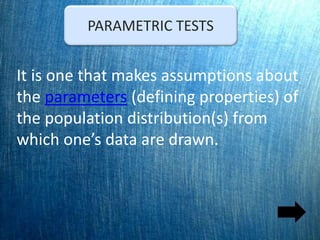

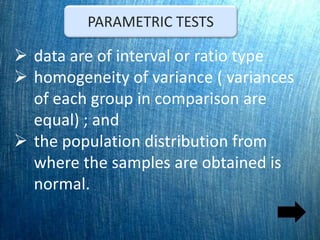

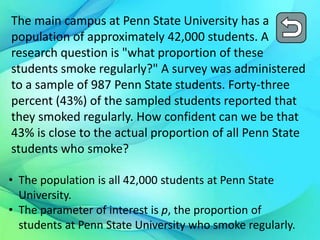

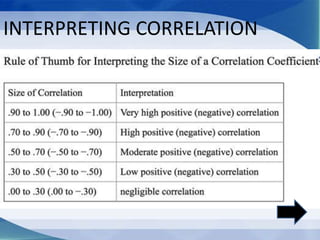

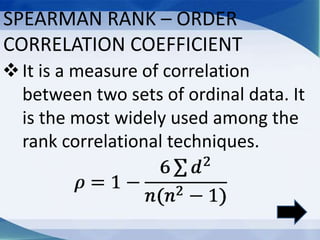

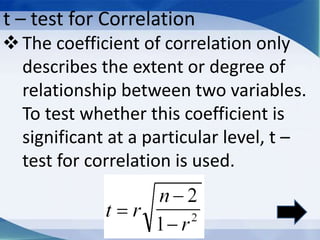

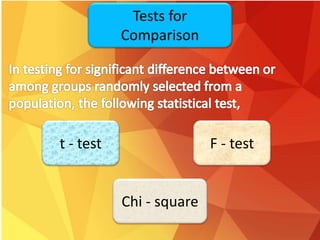

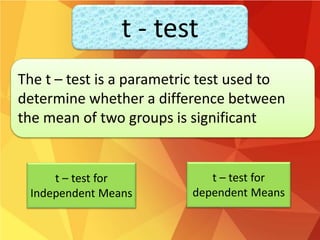

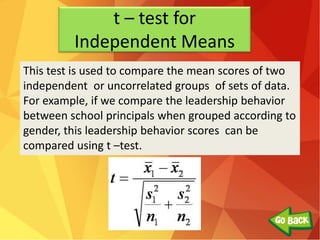

This document discusses various statistical concepts and techniques for data processing and analysis. It covers levels of measurement, descriptive statistics like frequency counts and percentages, averages, spreads, and inferential statistics including parametric tests like z-tests, t-tests, F-tests and non-parametric tests like chi-square. Correlation techniques such as Pearson product-moment correlation coefficient and Spearman rank-order correlation coefficient are also summarized. Common statistical tests for comparison including t-tests, F-tests, ANOVA, ANCOVA and chi-square are briefly explained.