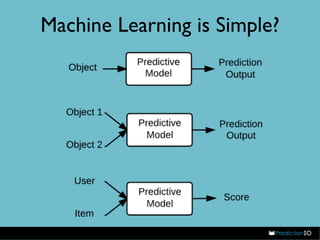

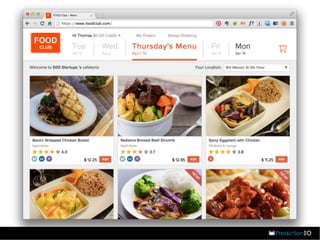

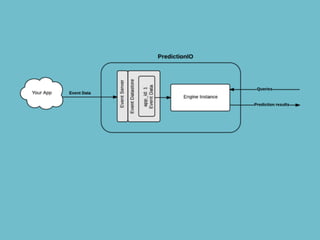

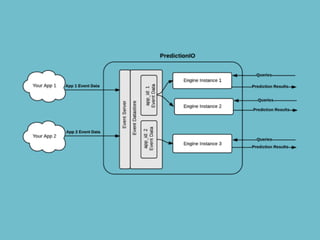

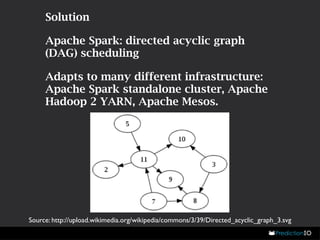

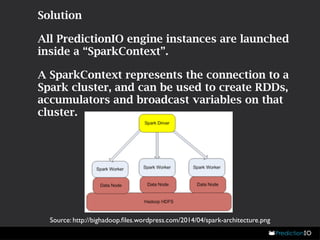

Simon Chan, CEO of PredictionIO, presented the company's open-source machine learning server. PredictionIO has over 5,000 developers and powers over 200 applications. It lets users collect data, train models, and retrieve predictions. Two key challenges were coordinating workflows on distributed clusters and retrieving models held in distributed memory, both of which PredictionIO addresses through Apache Spark.

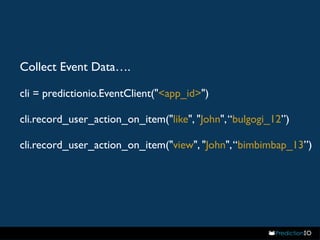

![Configure the Engine Instance settings
in params/datasource.json
{
"appId": <app_id>,
"actions": [
"view", "like", ...
], ...
}](https://image.slidesharecdn.com/2c2predictionio-deview-140929192352-phpapp01/85/2C2-PredictionIO-29-320.jpg)
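
The slide above shows only two fields of `params/datasource.json`, and elides the rest. As a minimal sketch of how such a file could be generated and sanity-checked before training, the snippet below builds the same shape with the standard library. The helper name `build_datasource_params` and the app ID `1` are hypothetical placeholders, and only the two actions visible on the slide are used; any further fields the engine expects are not covered here.

```python
import json


def build_datasource_params(app_id, actions):
    """Return a dict shaped like the datasource.json fragment on the slide.

    Hypothetical helper for illustration; not part of PredictionIO.
    """
    # bool is a subclass of int in Python, so reject it explicitly.
    if not isinstance(app_id, int) or isinstance(app_id, bool):
        raise TypeError("app_id must be an integer")
    if not actions:
        raise ValueError("at least one action is required")
    return {"appId": app_id, "actions": list(actions)}


# Assumed values: app ID 1 is a placeholder; "view" and "like" come from the slide.
params = build_datasource_params(1, ["view", "like"])
datasource_json = json.dumps(params, indent=2)
print(datasource_json)
```

Writing `datasource_json` to `params/datasource.json` would reproduce the visible part of the slide's configuration; validating the types up front gives an early error instead of a failure at training time.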

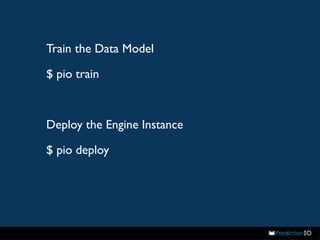

![Retrieve Prediction Results
from predictionio import EngineClient
client = EngineClient(url="http://localhost:8000")
prediction = client.send_query({"uid": "John", "n": 3})
print prediction
Output
{u'items': [{u'272': 9.929327011108398}, {u'313': 9.92607593536377}, {u'347': 9.92170524597168}]}](https://image.slidesharecdn.com/2c2predictionio-deview-140929192352-phpapp01/85/2C2-PredictionIO-31-320.jpg)