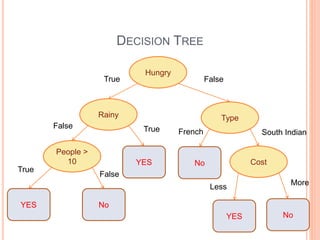

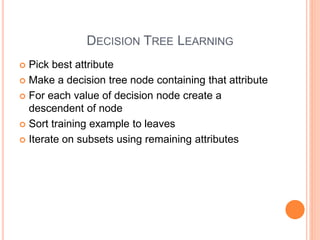

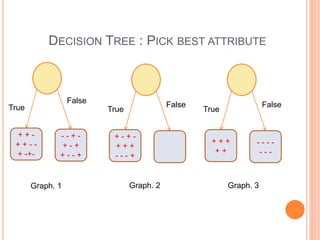

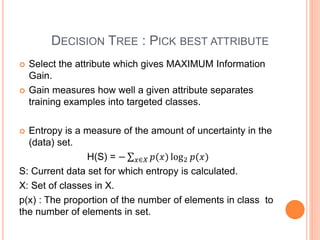

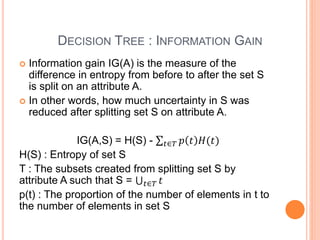

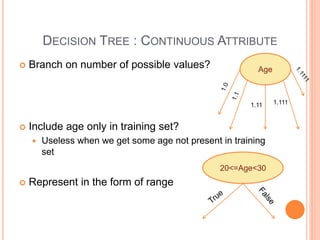

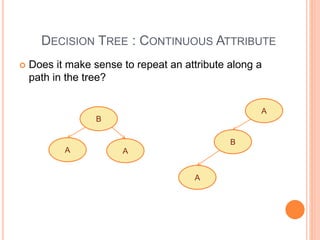

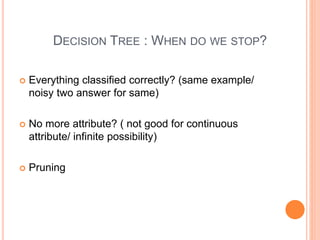

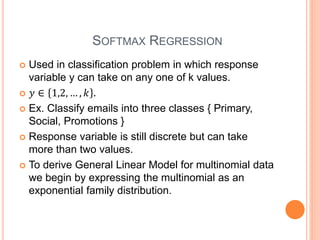

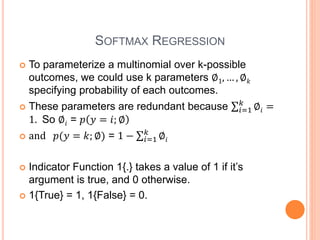

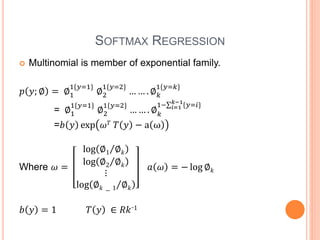

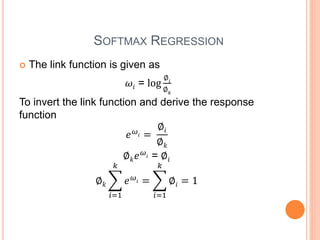

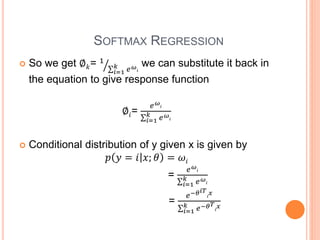

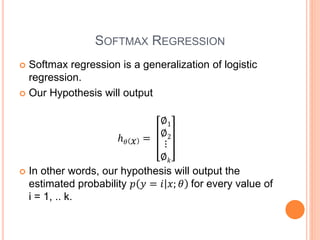

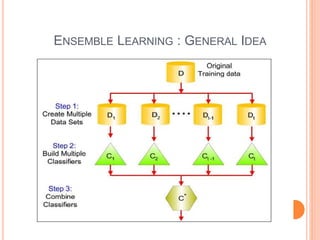

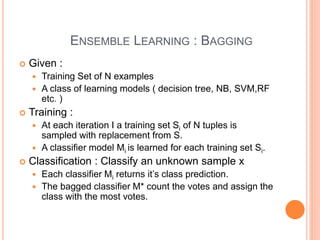

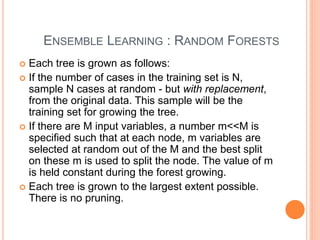

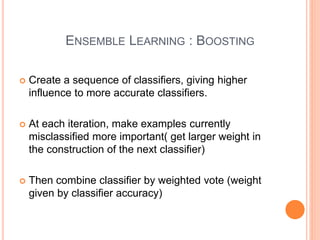

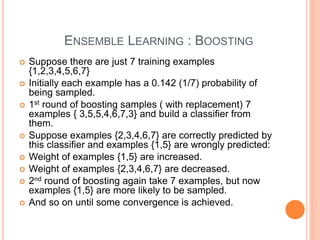

This document covers decision trees, softmax regression, and ensemble methods in machine learning. It explains how decision trees use information gain to choose the attribute on which to split each node. Softmax regression is presented as a generalization of logistic regression to multi-class classification. Ensemble methods such as bagging, random forests, and boosting are covered as techniques that improve predictive performance by combining multiple models.
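
As a rough illustration of two of the ideas summarized above, the following sketch computes entropy-based information gain for a candidate split and evaluates a softmax over class scores. It is a minimal sketch and not taken from the slides: the function names (`entropy`, `information_gain`, `softmax`), the toy weather-style rows, and the use of base-2 entropy are all assumptions made for illustration.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    counts = Counter(labels).values()
    return -sum((n / total) * math.log2(n / total) for n in counts)


def information_gain(rows, labels, attribute):
    """Entropy reduction obtained by splitting the rows on one attribute."""
    # Group the labels by the value each row takes for the attribute.
    groups = {}
    for row, label in zip(rows, labels):
        groups.setdefault(row[attribute], []).append(label)
    # Weighted average entropy of the child nodes after the split.
    remainder = sum(len(g) / len(labels) * entropy(g) for g in groups.values())
    return entropy(labels) - remainder


def softmax(scores):
    """Turn a list of real-valued class scores into probabilities."""
    # Subtracting the maximum avoids overflow and leaves the result unchanged.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical toy data: "outlook" separates the classes, "windy" does not.
rows = [
    {"outlook": "sunny", "windy": False},
    {"outlook": "sunny", "windy": True},
    {"outlook": "rainy", "windy": False},
    {"outlook": "rainy", "windy": True},
]
labels = ["no", "no", "yes", "yes"]

gain_outlook = information_gain(rows, labels, "outlook")
gain_windy = information_gain(rows, labels, "windy")
probs = softmax([2.0, 1.0, 0.1])
```

On this toy data a tree learner that picks the split with the highest gain would choose `outlook` over `windy`. With exactly two classes and scores `[z, 0]`, the first softmax output equals the logistic sigmoid of `z`, which is the sense in which softmax regression generalizes logistic regression.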