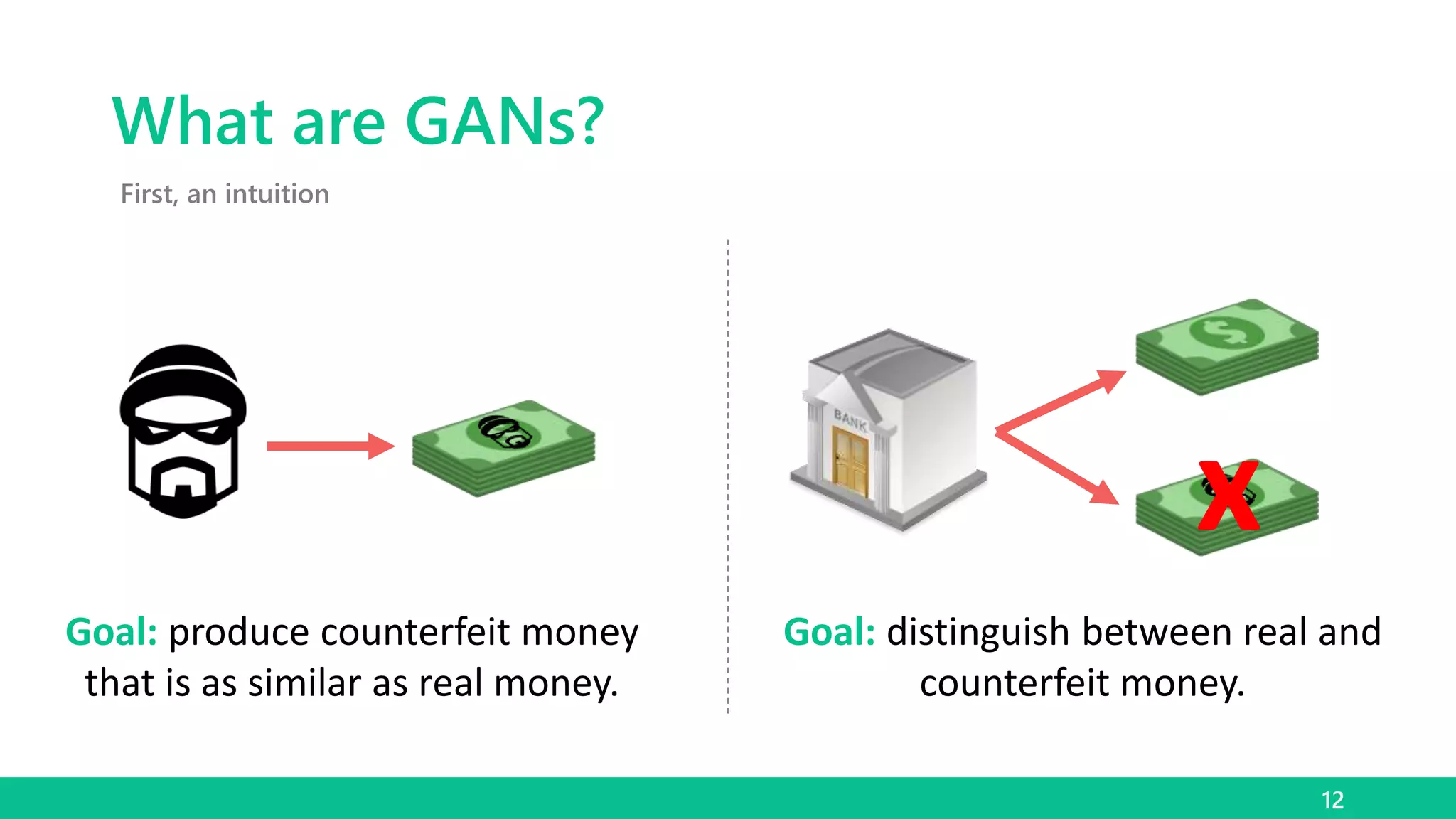

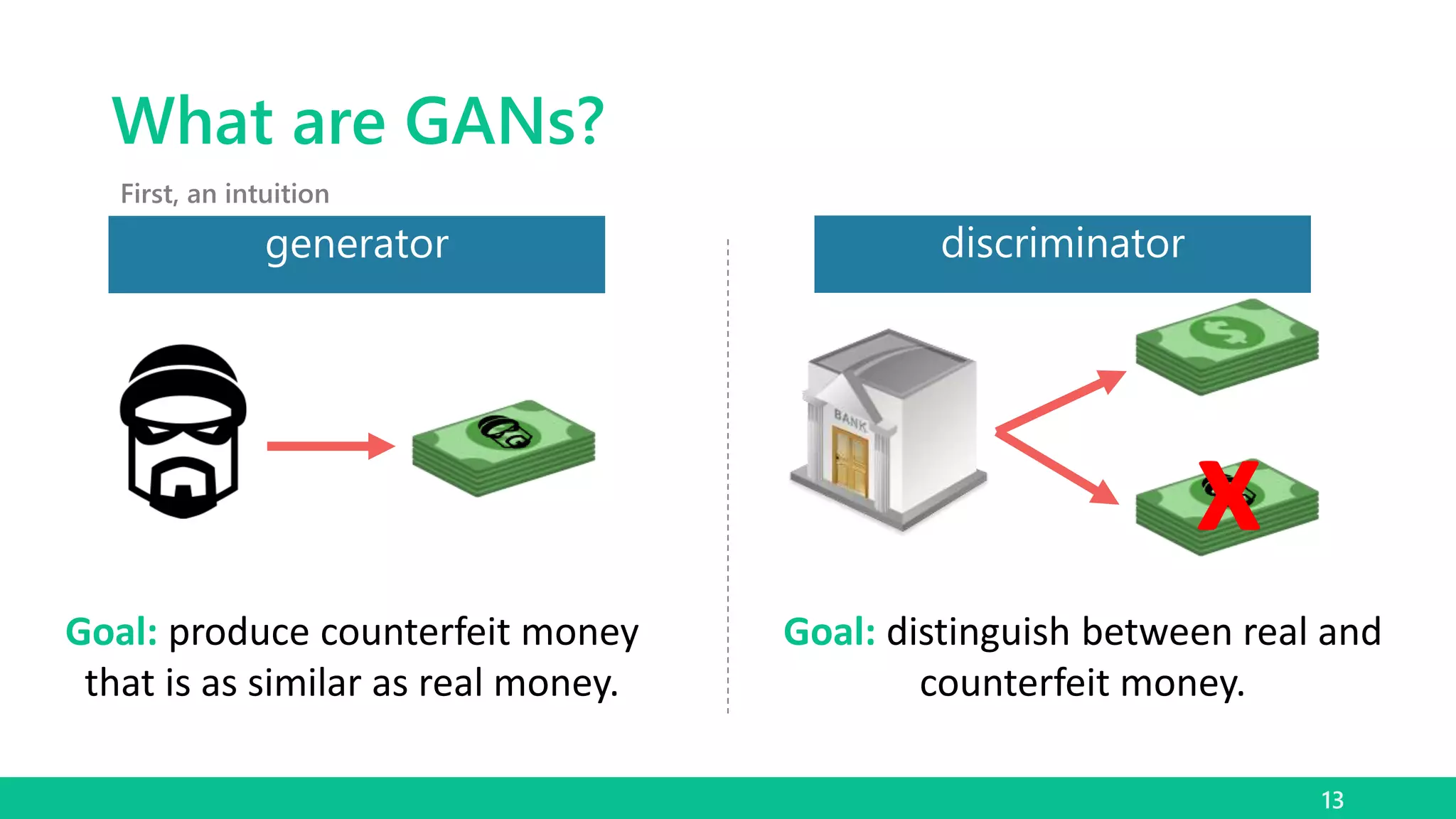

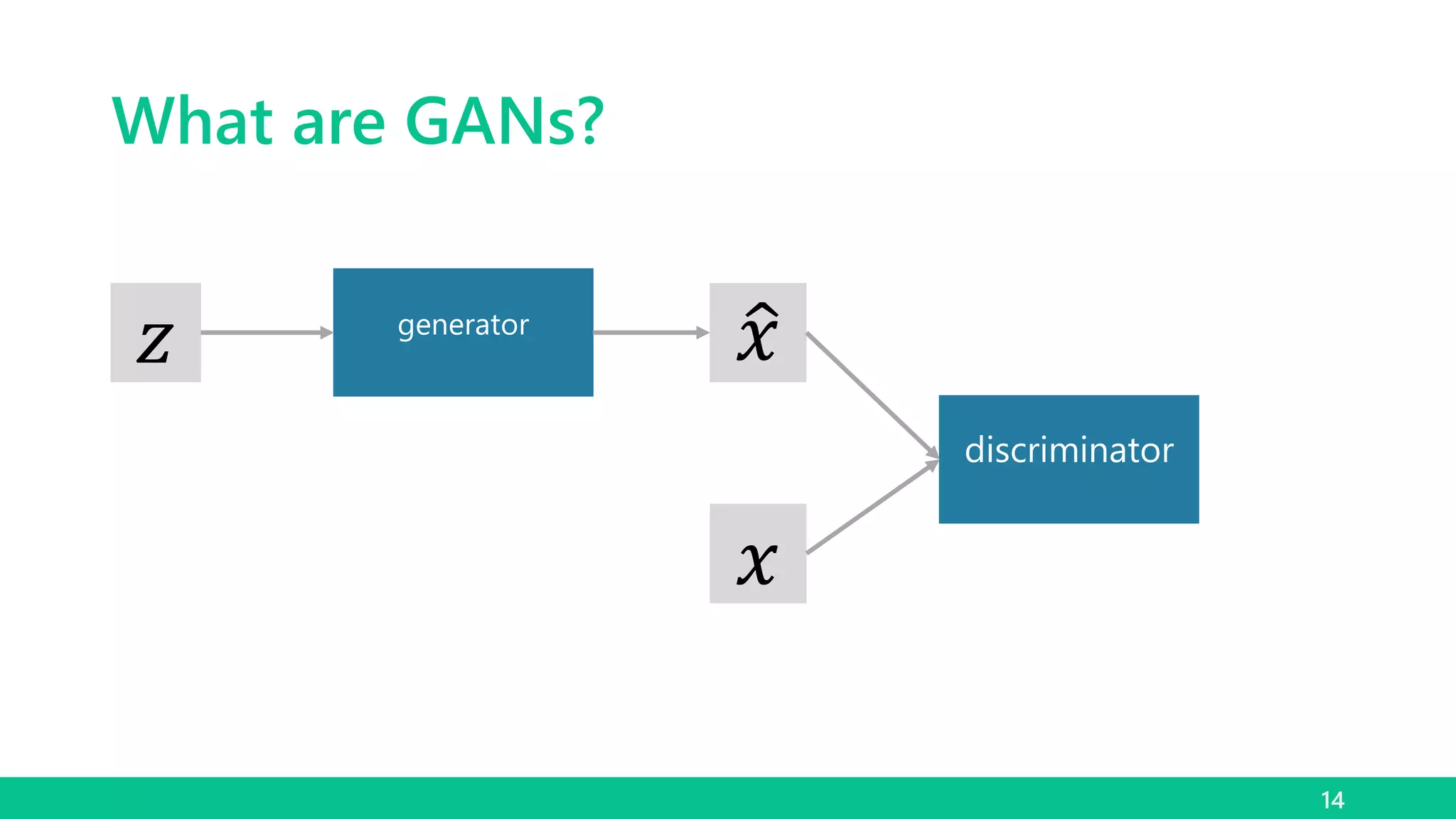

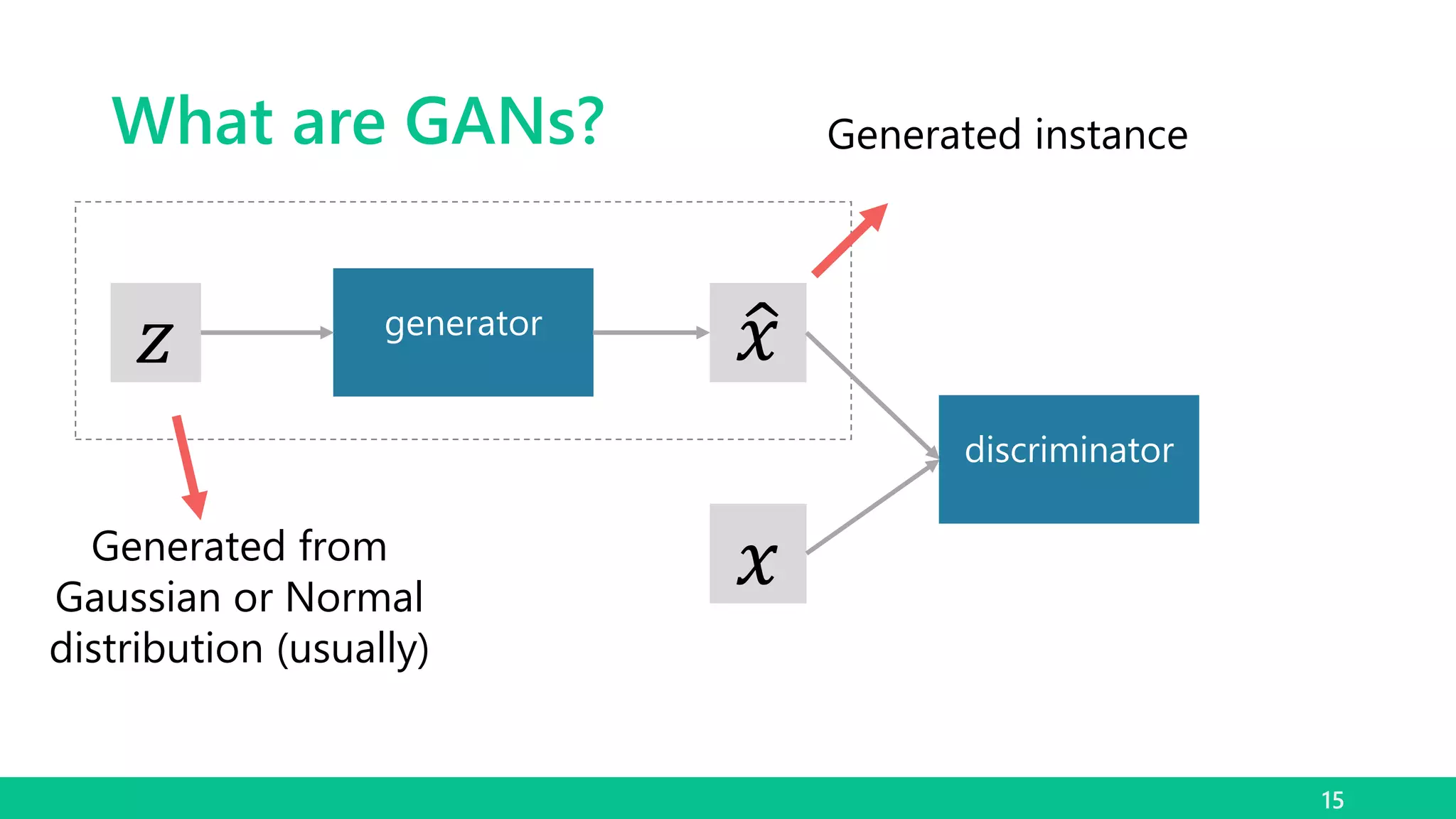

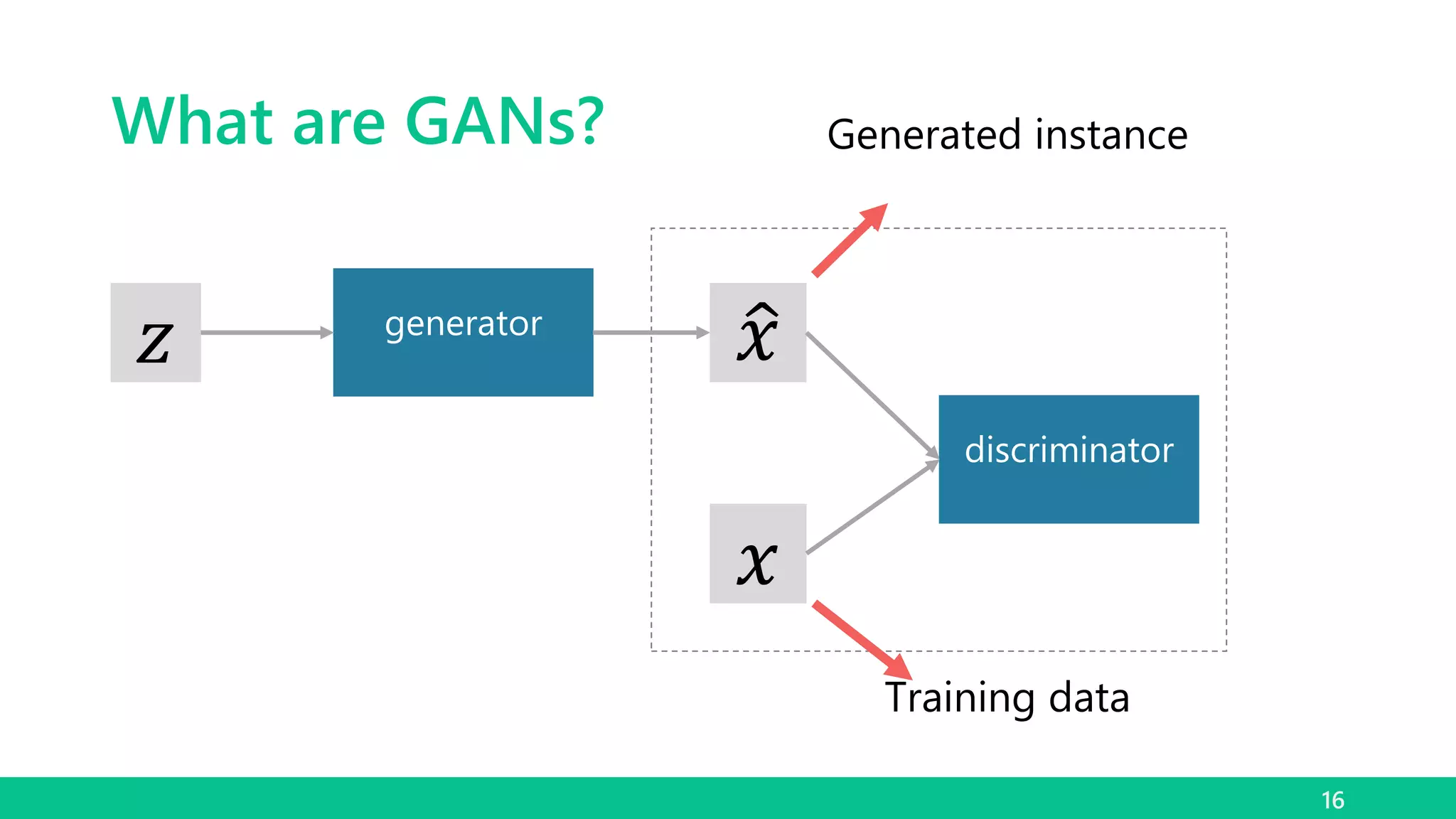

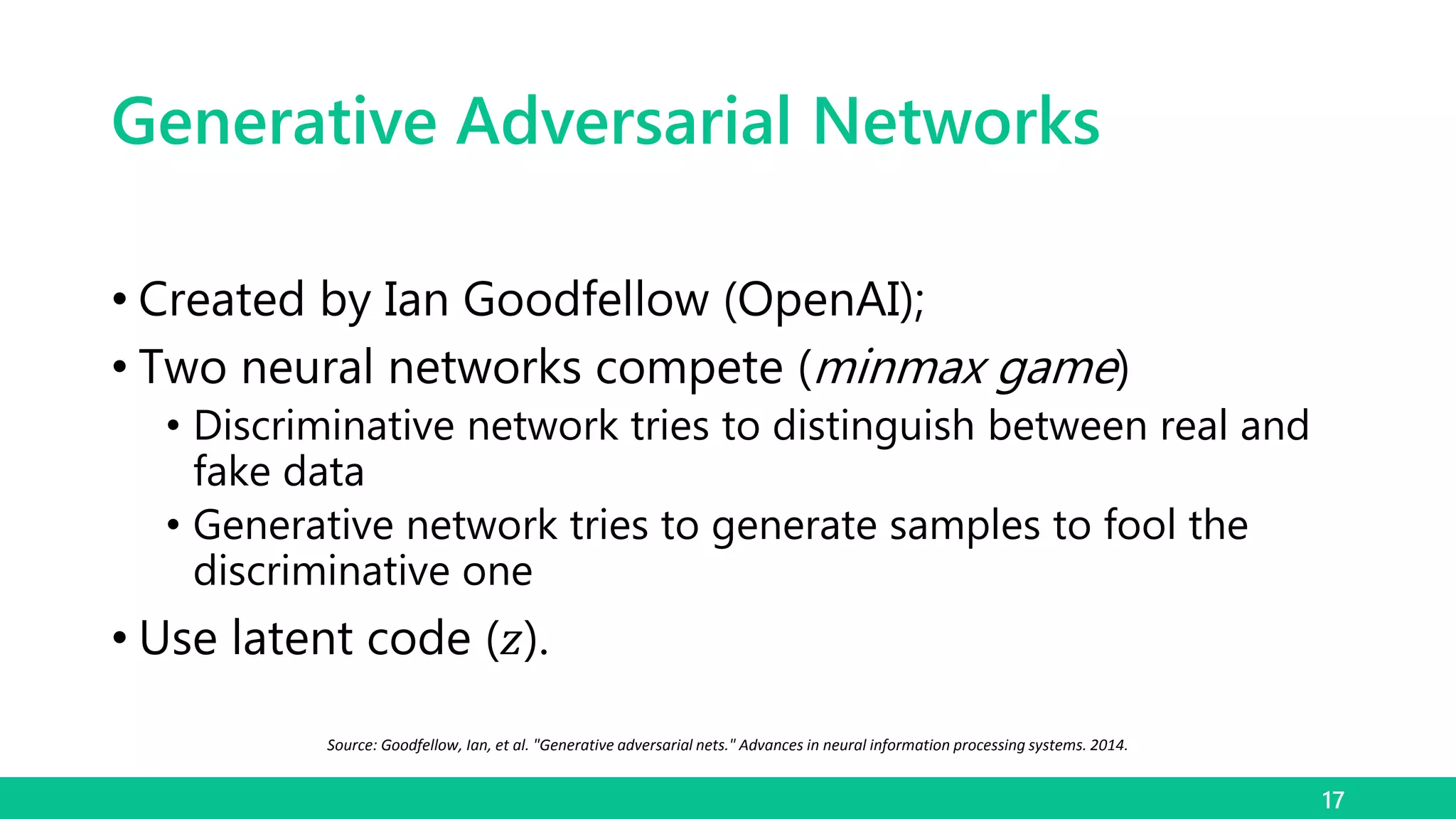

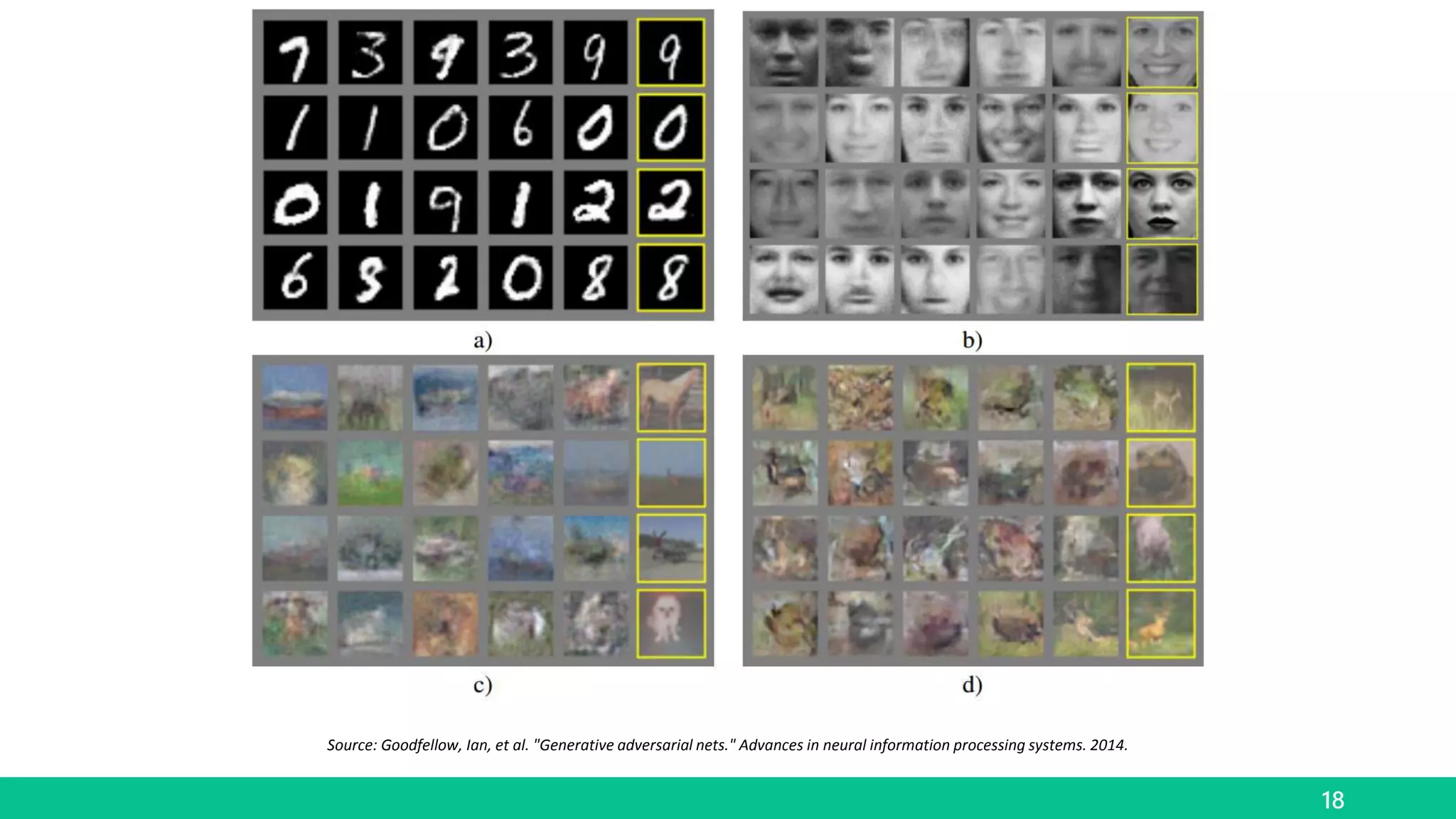

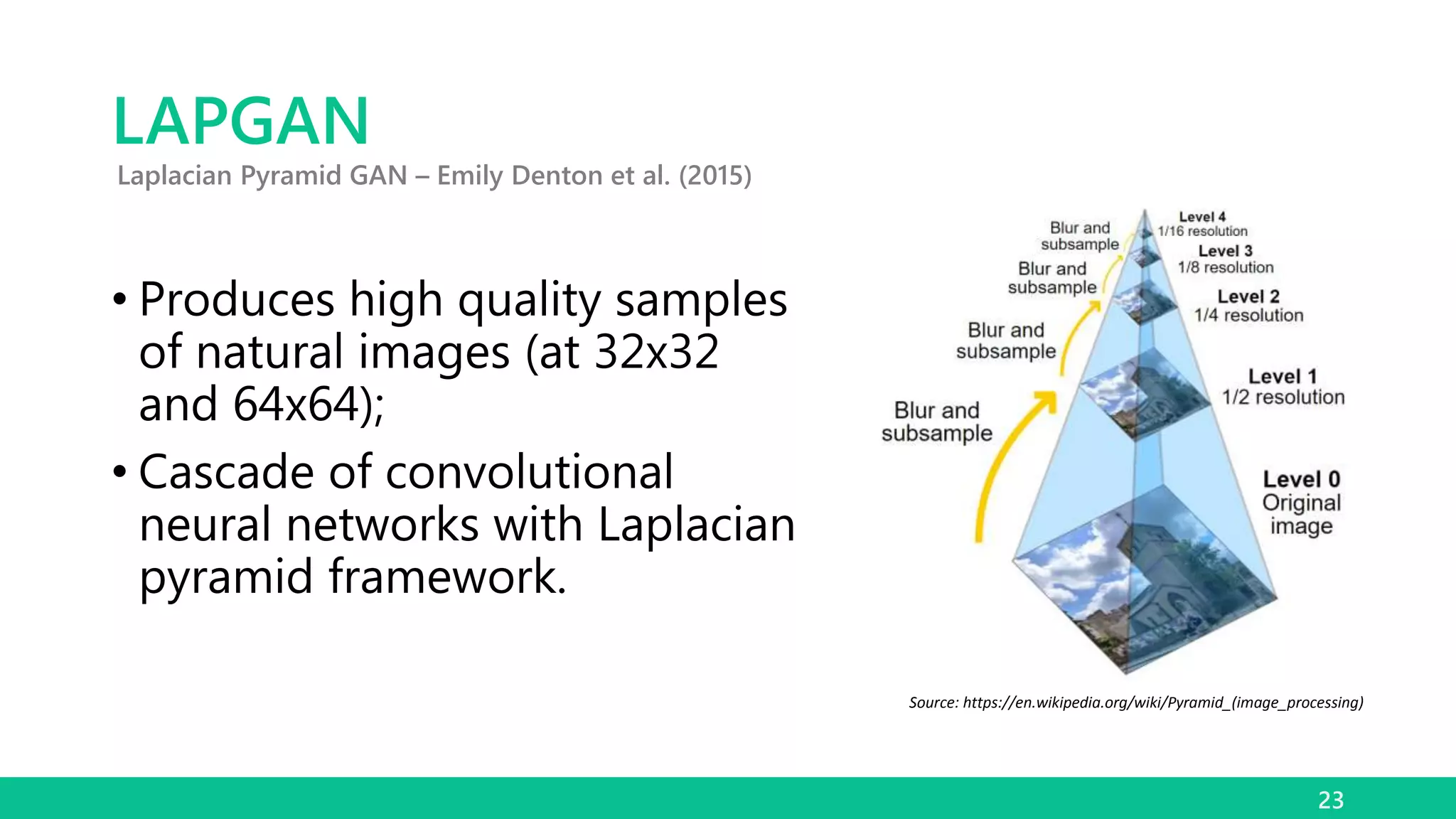

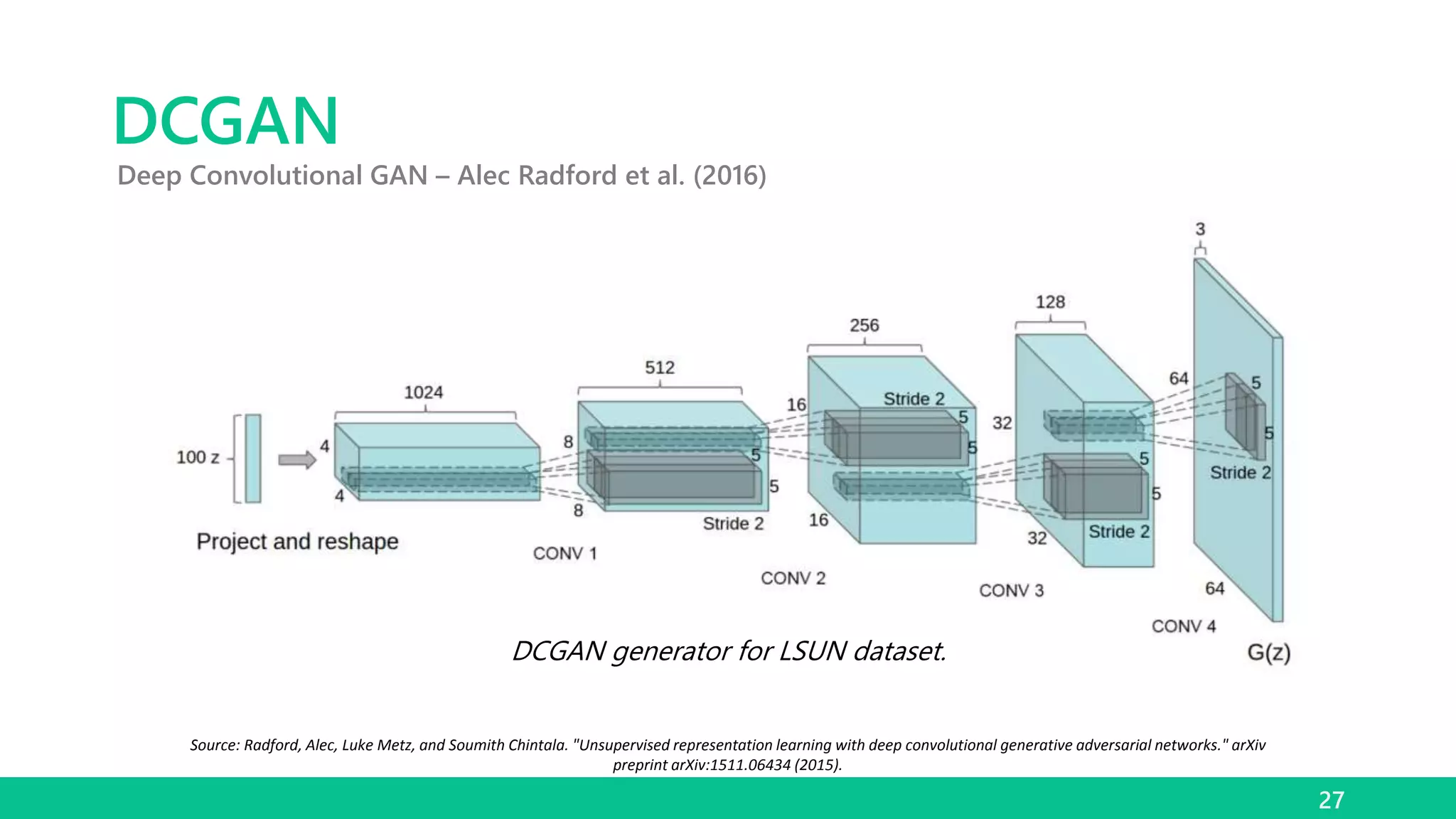

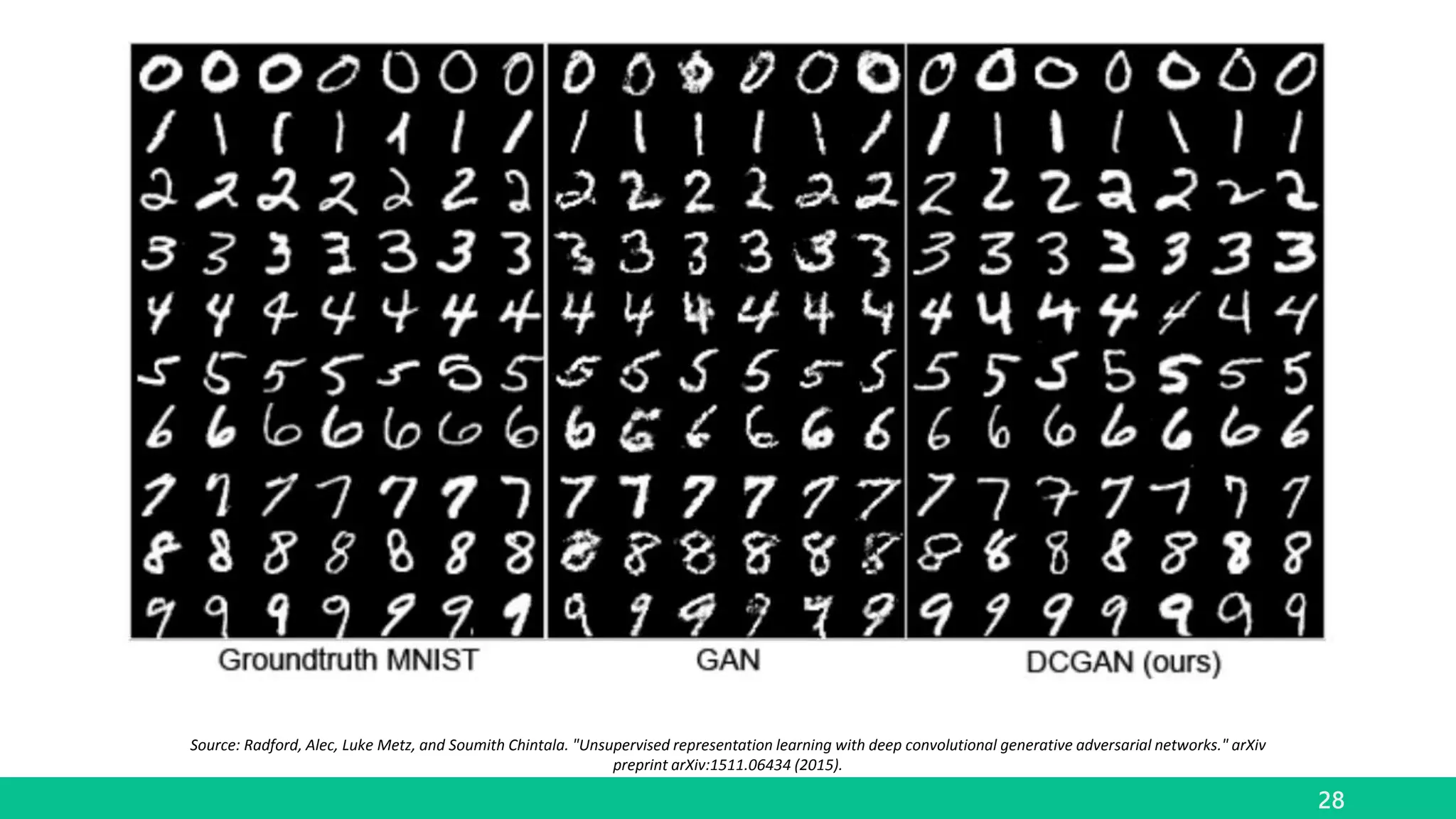

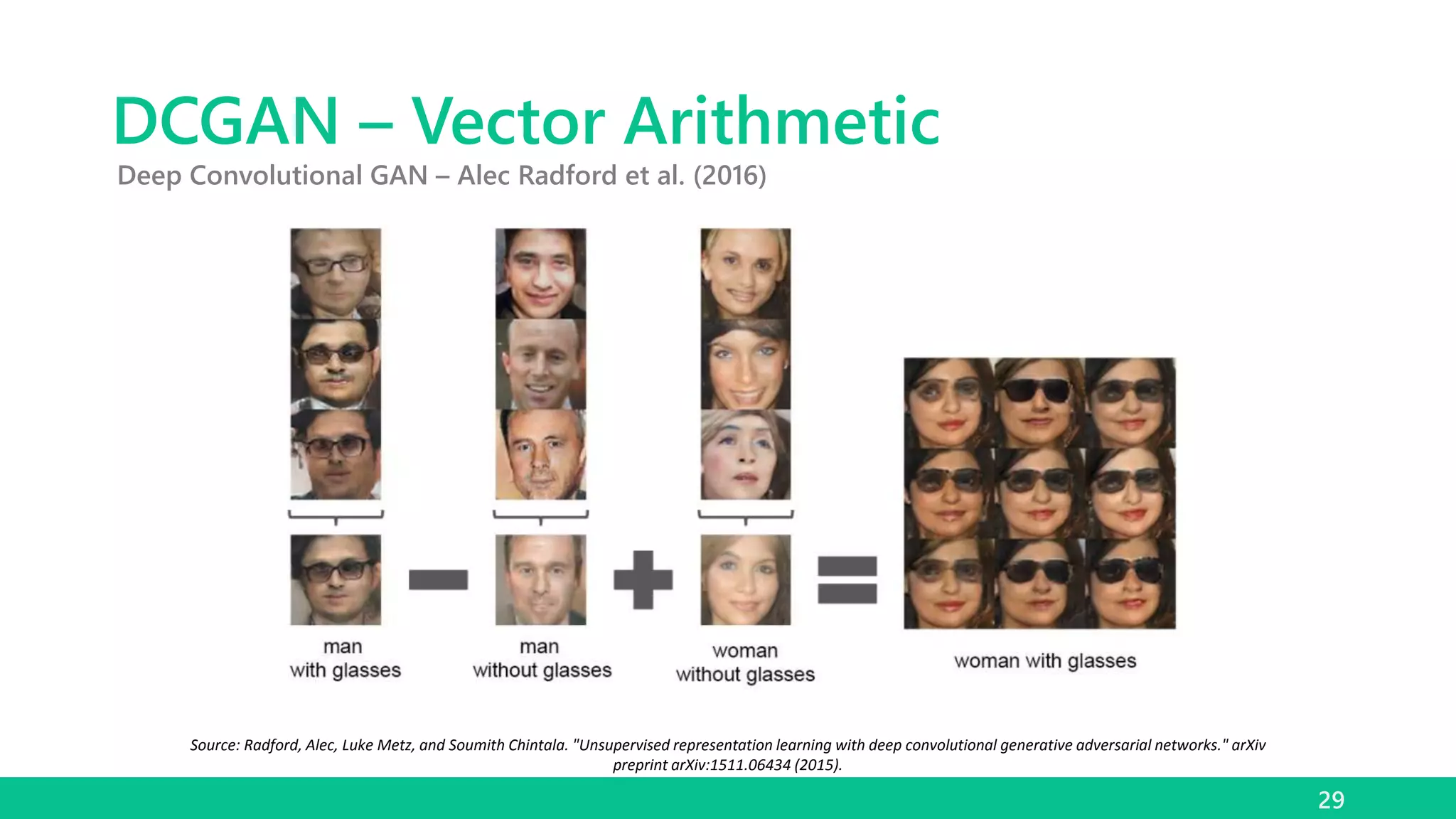

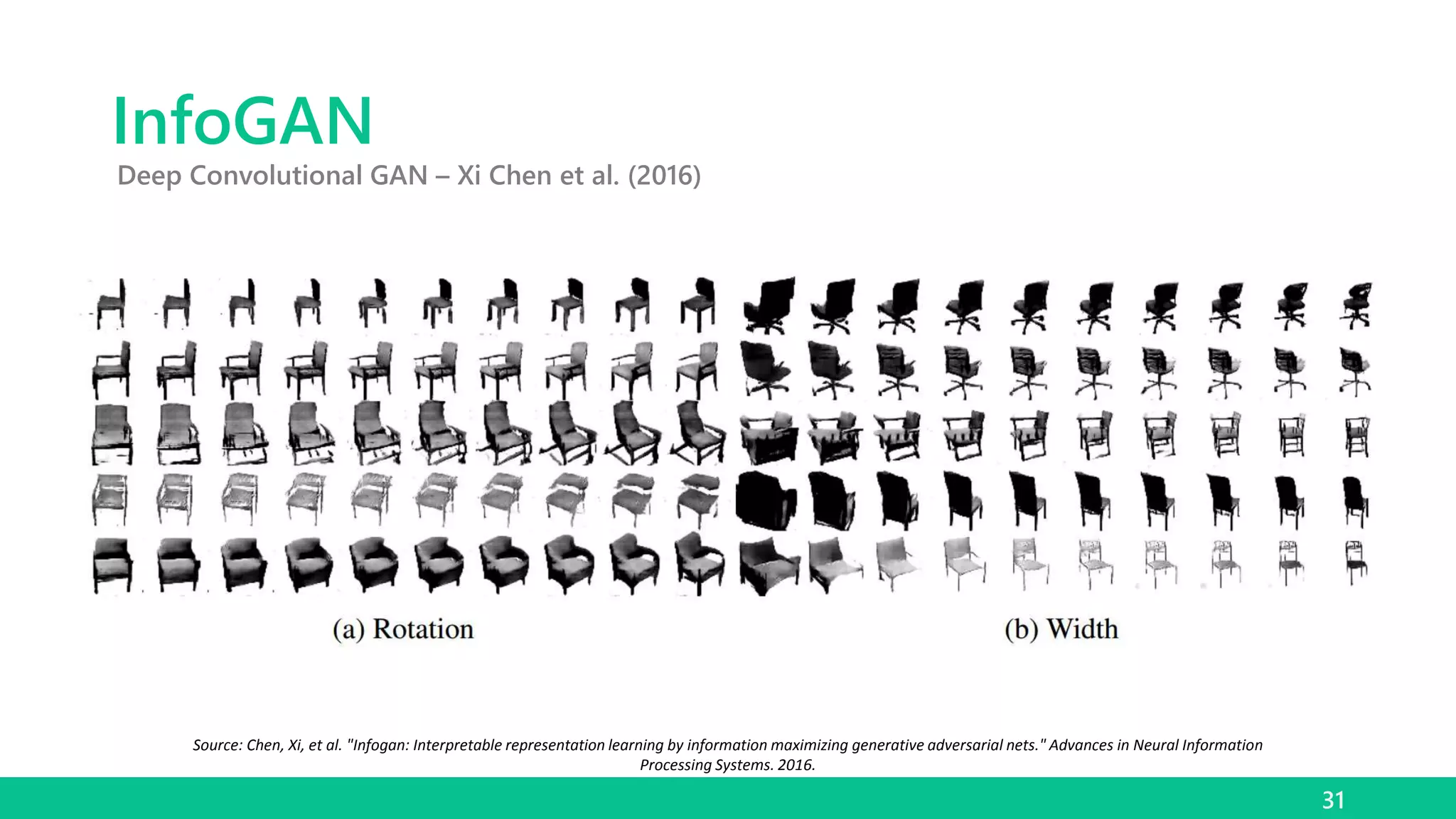

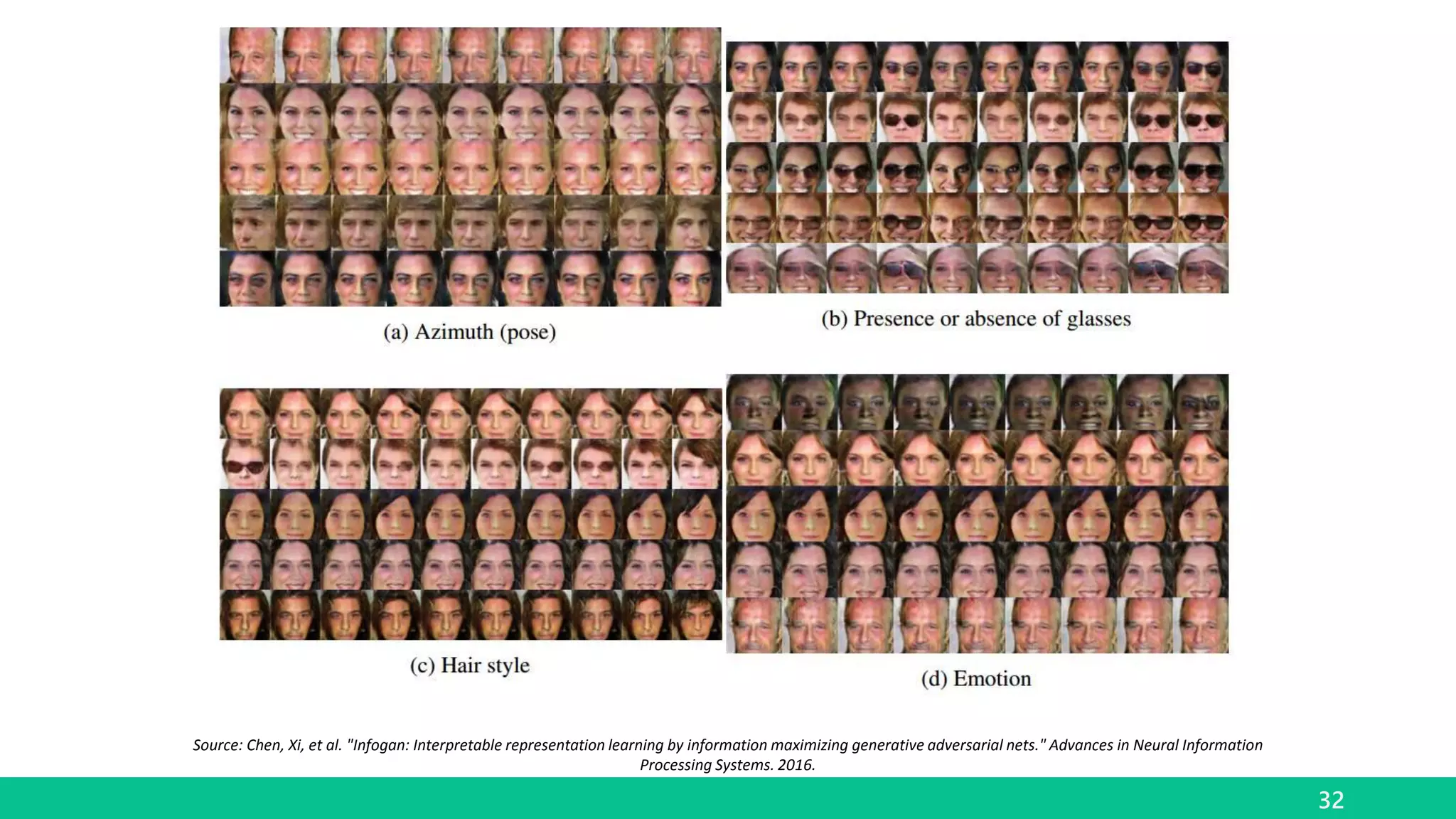

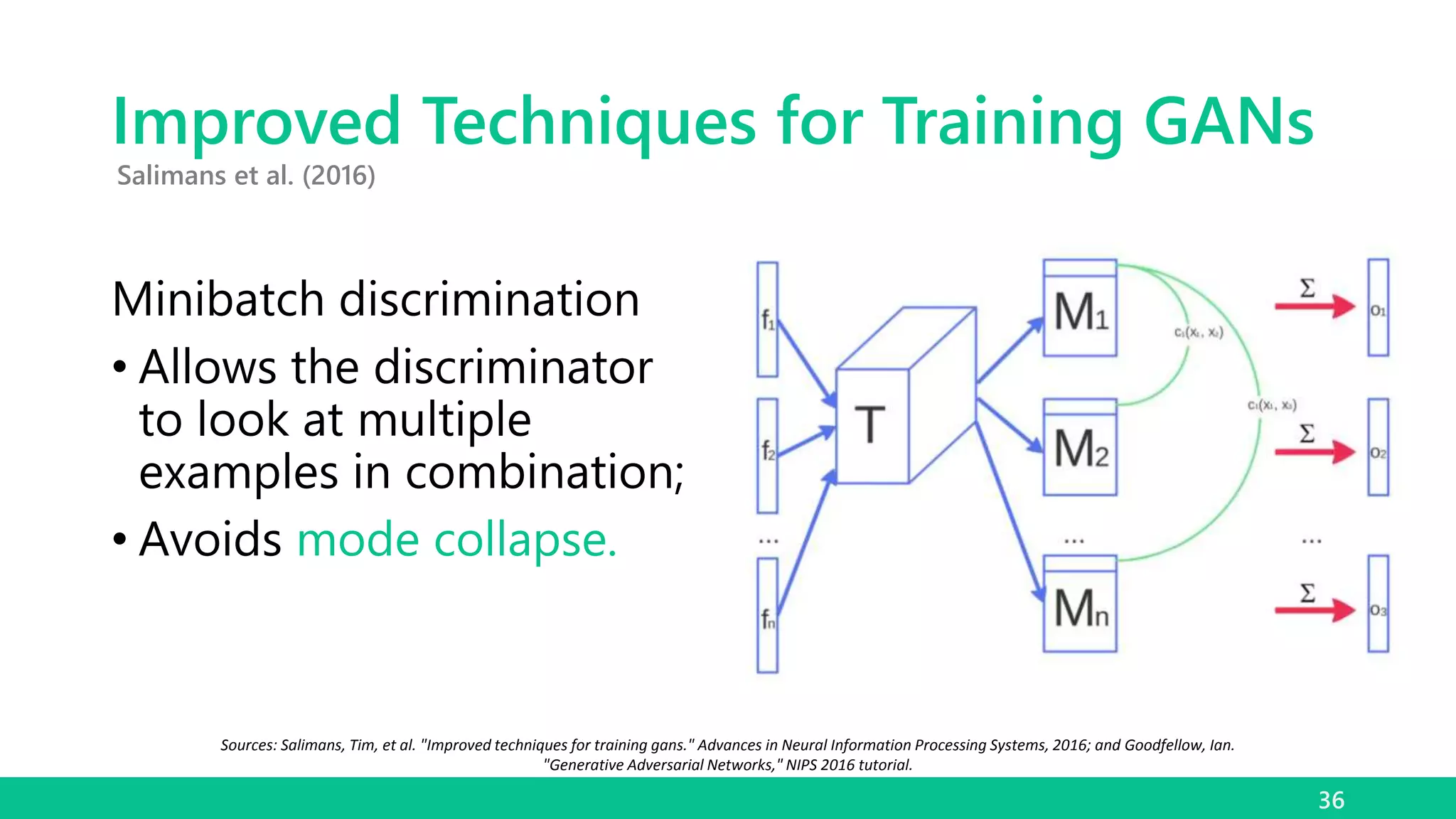

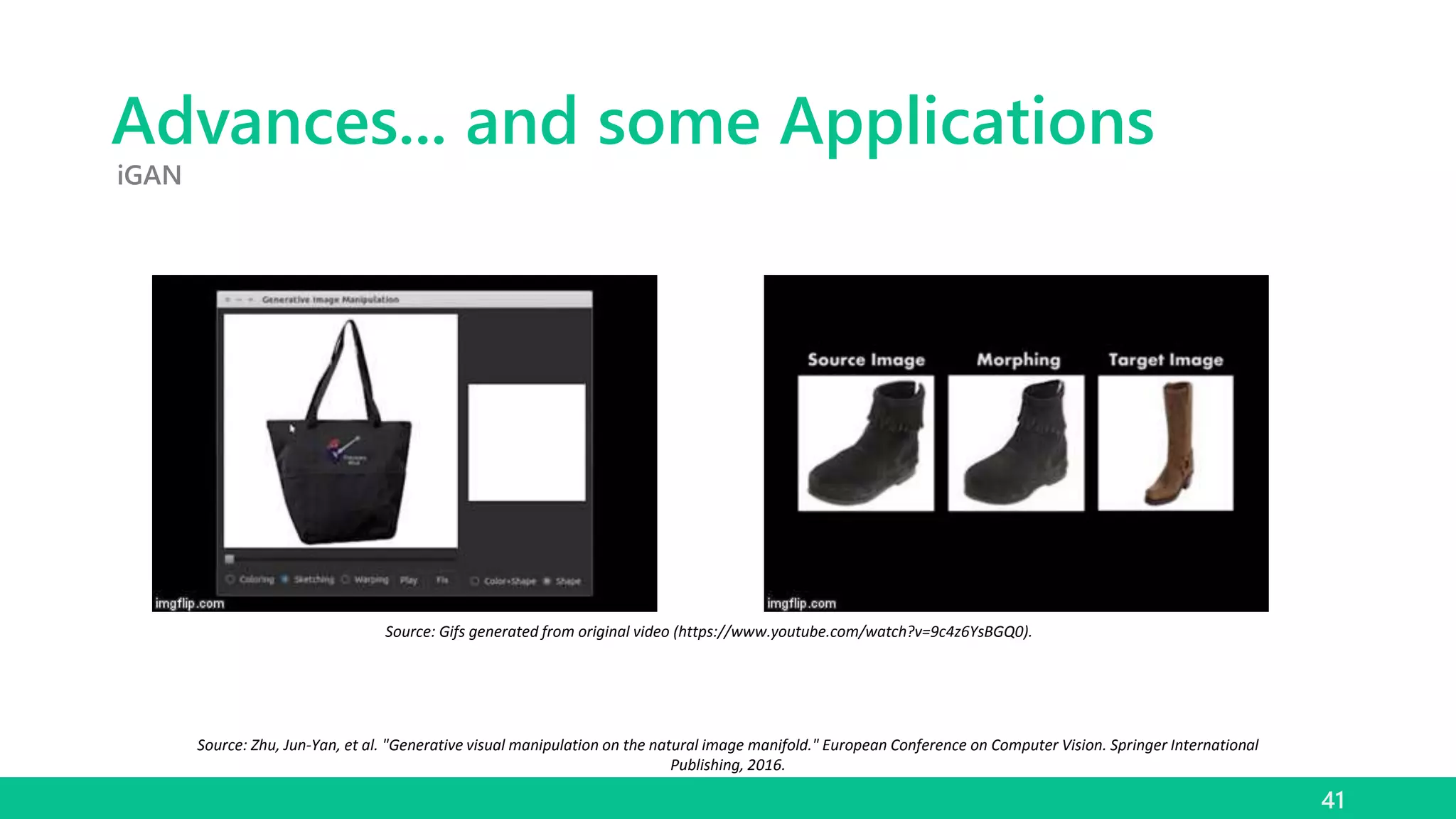

This document gives an introductory overview of Generative Adversarial Networks (GANs) and their significance in machine learning. It explains how GANs work: a generator produces synthetic samples while a discriminator learns to tell them apart from real data, and the two are trained against each other. It highlights challenges such as mode collapse, where the generator produces only a narrow range of outputs, and the complexity of training. It also discusses advances in GAN technology and a range of applications, emphasizing ongoing research and the potential for future developments.
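
The generator and discriminator roles summarized above can be illustrated with a minimal sketch of the two loss terms. It makes several assumptions: the discriminator outputs a probability that a sample is real, the generator uses the commonly used non-saturating loss (one of several variants, not necessarily the one the slides present), and the function names `discriminator_loss` and `generator_loss` are hypothetical, chosen only for illustration.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy minimized by the discriminator.

    d_real: discriminator outputs D(x) on real samples, each in (0, 1).
    d_fake: discriminator outputs D(G(z)) on generated samples, each in (0, 1).
    """
    # Reward high probabilities on real samples.
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    # Reward low probabilities on generated samples.
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss (an assumed variant): -mean(log D(G(z)))."""
    # The generator improves when the discriminator rates its samples as real.
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


if __name__ == "__main__":
    # Illustrative discriminator outputs, not data from the source document.
    confident = discriminator_loss(d_real=[0.9, 0.8], d_fake=[0.1, 0.2])
    unsure = discriminator_loss(d_real=[0.5, 0.5], d_fake=[0.5, 0.5])
    print(confident < unsure)
    print(generator_loss([0.9]) < generator_loss([0.1]))
```

In an actual training loop these two losses are minimized in alternation, each with respect to its own network's parameters. That opposing pressure is what makes training delicate and can lead to failure modes such as mode collapse.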