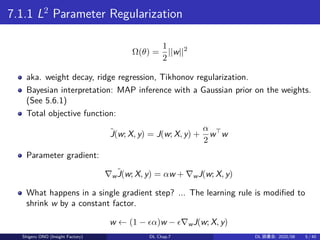

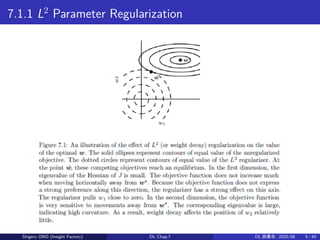

Regularization is used in deep learning to reduce generalization error by modifying the learning algorithm. Common regularization techniques for deep neural networks include:

1) Parameter norm penalties like L2 and L1 regularization that penalize the weights of a network. This encourages simpler models that generalize better.

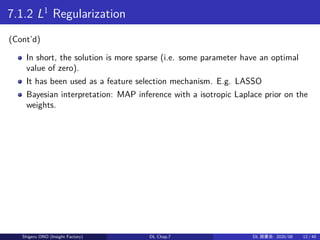

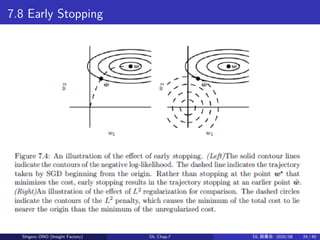

2) Early stopping which obtains the model parameters at the point of lowest validation error during training, rather than at the end of training.

3) Data augmentation which creates additional fake training data through techniques like translation to improve robustness.

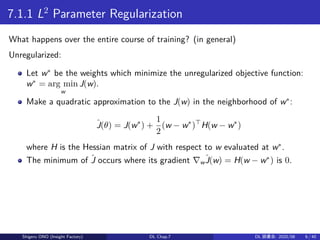

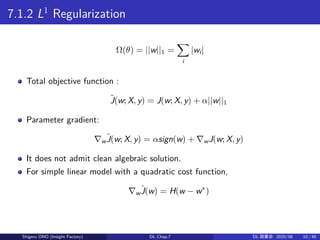

![7.1.2 L1

Regularization

(Cont’d)

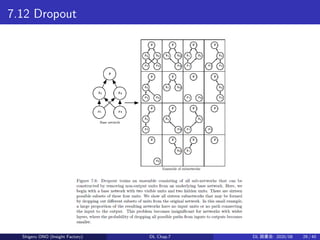

Assume the Hessian is diagonal, H = diag([H11, . . . , Hnn]) (i.e. no correlation

between the input features)

Then we have a quadratic approximation of the cost function:

ˆJ(w; X, y) = J(w∗

; X, y) +

∑

i

(

1

2

Hii(wi − w∗

i )2

+ α|wi|

)

The solution is:

wi = sign(w∗

i ) max

(

|w∗

i | −

α

Hii

, 0

)

Consider the situation where w∗

i > 0 for all i. Then

When w∗

i ≤ α

Hii

, the optimal value is wi = 0.

When w∗

i > α

Hii

, the optimal value is just shifted by a distance α

Hii

.

Shigeru ONO (Insight Factory) DL Chap.7 DL 読書会: 2020/08 11 / 40](https://image.slidesharecdn.com/chap7-200806012107/85/Goodfellow-Bengio-Couville-2016-Deep-Learning-Chap-7-11-320.jpg)

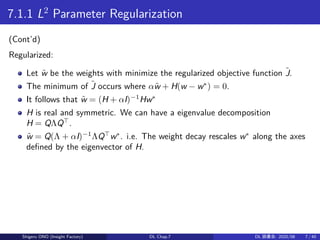

![7.5 Noise Robustness

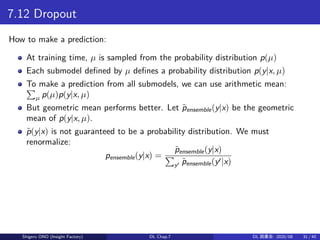

Idea: Add noise to the weights.

It can be interpreted as a stochastic implementation of Bayesian inference

over the weight.

Noise reflect our uncertainty on the model weights.

It can also be interpreted as equivalent to a more traditional form of

regularization.

Consider we wish to train a function ˆy(x) using the least-square cost function

J = Ep(x,y)[(ˆy(x) − y)2

]

Assume that we also include a random perturbation ϵw ∼ N(ϵ; 0, ηI) of the

network weights.

The objective function becomes ˜JW = Ep(x,y,ϵW)[(ˆyeW (x) − y)2

]

For small η, it is equivalent to J with a regularization term

ηEp(x,y)[||∇Wˆy(x)||2

].

It push the model into regions where the model is relatively insensitive to small

variations in the weights, finding points that are not merely minima, but

minima surrounded by flat regions.

Shigeru ONO (Insight Factory) DL Chap.7 DL 読書会: 2020/08 17 / 40](https://image.slidesharecdn.com/chap7-200806012107/85/Goodfellow-Bengio-Couville-2016-Deep-Learning-Chap-7-17-320.jpg)

![7.8 Early Stopping

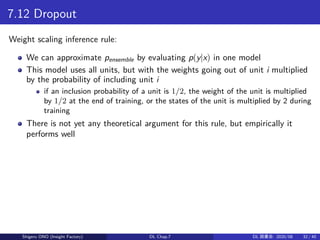

How early stopping acts as regularizer:

Restricting both the number of iterations and the learning rate limit the

volume of parameter space reachable from the initial parameter value.

In a simple linear model with a quadratic error function and simple gradient

decent, early stopping is equivalent to L2

regularization. [...skipped...]

Shigeru ONO (Insight Factory) DL Chap.7 DL 読書会: 2020/08 23 / 40](https://image.slidesharecdn.com/chap7-200806012107/85/Goodfellow-Bengio-Couville-2016-Deep-Learning-Chap-7-23-320.jpg)

![7.14 Tangent Distance, Tangent Prop and Manifold

Tangent Classifier

Tangent prop algorithm:

[...skipped...]

double backprop:

[...skipped...]

Shigeru ONO (Insight Factory) DL Chap.7 DL 読書会: 2020/08 40 / 40](https://image.slidesharecdn.com/chap7-200806012107/85/Goodfellow-Bengio-Couville-2016-Deep-Learning-Chap-7-40-320.jpg)