The deck (Will Critchlow's "Technical SEO" talk from SearchLove Boston 2013) provides technical SEO recommendations, including:

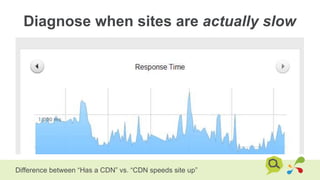

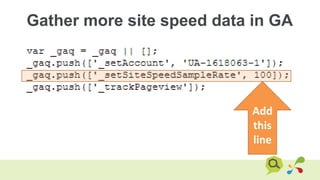

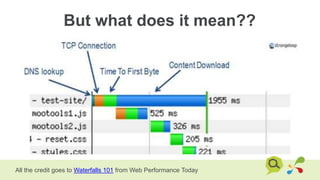

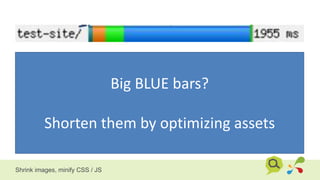

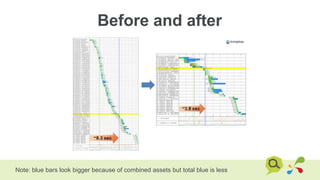

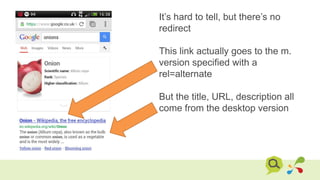

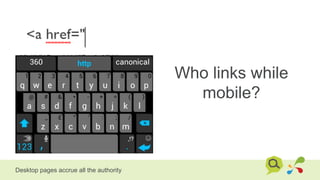

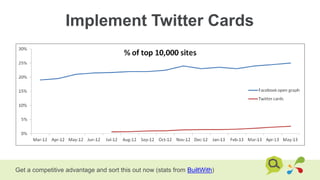

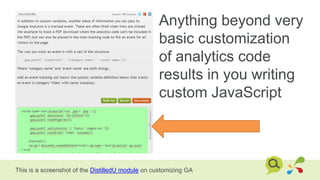

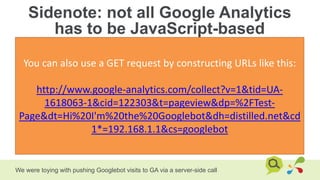

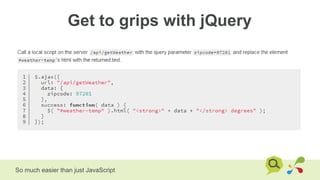

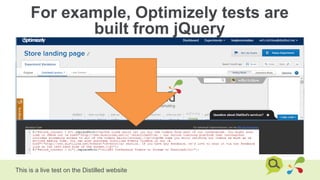

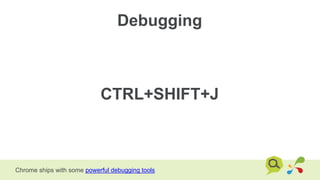

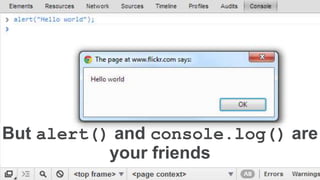

1. Removing URL parameters, implementing rel="alternate" annotations for separate mobile sites, and using hreflang tags to group the international versions of a site. It also recommends diagnosing the actual causes of slow site speed, implementing Twitter Cards, and becoming familiar with JavaScript, jQuery, and debugging tools.
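As a minimal sketch of the parameter-removal idea, the Python function below normalizes a URL by dropping parameters that do not change page content and sorting the ones that remain. The ignored parameters (`utm_*`, `sessionid`, `sid`, `ref`) are a hypothetical example, not a list from the talk; which parameters are safe to drop depends entirely on the site.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters assumed not to change page content
# (tracking and session IDs); adjust for your own site.
IGNORED_PARAMS = {"sessionid", "sid", "ref"}
IGNORED_PREFIXES = ("utm_",)


def strip_url_params(url: str) -> str:
    """Return the URL with non-content parameters removed.

    Remaining parameters are sorted so that equivalent URLs collapse
    to a single string. The fragment is dropped.
    """
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
        and not key.lower().startswith(IGNORED_PREFIXES)
    ]
    kept.sort()
    # An empty query string means urlunsplit emits no trailing "?".
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))
```

The normalized URL would typically become the `rel="canonical"` target or the destination of a 301 redirect; dropping the fragment is harmless because browsers do not send it to the server.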

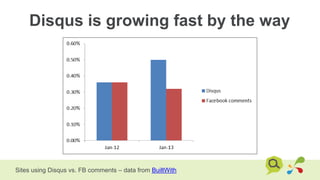

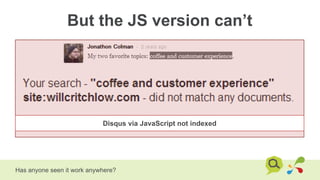

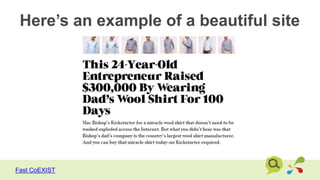

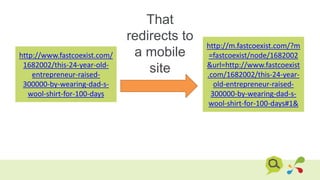

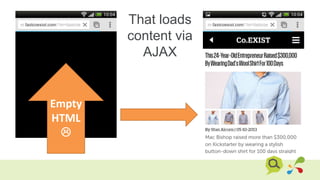

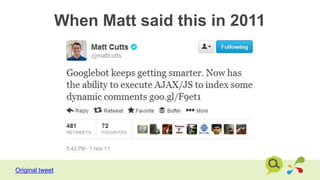
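The hreflang, mobile rel="alternate" and Twitter Card recommendations in item 1 all come down to tags in the page `<head>`. The sketch below generates them in Python, assuming the separate-mobile-URL setup Google documents: a `rel="alternate"` link with a media query on the desktop page, and a `rel="canonical"` link back to it on the mobile page. The `640px` breakpoint, the function names and the example.com URLs are illustrative assumptions.

```python
from html import escape


def hreflang_links(versions: dict[str, str]) -> list[str]:
    """Build the hreflang cluster that every page in the group should carry.

    `versions` maps a language(-region) code such as "en-gb" to that version's URL.
    """
    return [
        f'<link rel="alternate" hreflang="{escape(lang)}" href="{escape(url)}">'
        for lang, url in sorted(versions.items())
    ]


def mobile_annotations(desktop_url: str, mobile_url: str) -> dict[str, str]:
    """Bidirectional annotations for a separate mobile (m-dot) site."""
    return {
        # Goes in the <head> of the desktop page.
        "desktop": (
            '<link rel="alternate" media="only screen and (max-width: 640px)" '
            f'href="{escape(mobile_url)}">'
        ),
        # Goes in the <head> of the mobile page.
        "mobile": f'<link rel="canonical" href="{escape(desktop_url)}">',
    }


def twitter_card_tags(card: str, title: str, description: str) -> list[str]:
    """Minimal Twitter Card meta tags; "summary" is one valid card type."""
    fields = {
        "twitter:card": card,
        "twitter:title": title,
        "twitter:description": description,
    }
    # escape() turns &, <, >, and quotes into entities so content stays well-formed.
    return [f'<meta name="{name}" content="{escape(value)}">' for name, value in fields.items()]
```

Every URL in an hreflang group should list the full cluster, including itself, which is why `hreflang_links` returns one list meant to be reused on each page in the group.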

2. Auditing AJAX implementations to ensure all content and links are crawlable without JavaScript. It notes reports that Google can now index some JavaScript-rendered content, such as Facebook comments.

3. Being prescriptive when making recommendations but, when auditing, weighing the cost of changes if content is already being indexed: fix only what's necessary rather than pursuing technical purity for its own sake.
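For the AJAX audit in item 2, one rough check is to compare the links present in the server-delivered HTML (before any JavaScript runs) against the links you expect a crawler to find. This Python sketch uses only the standard-library `HTMLParser`; the function name and the idea of supplying an `expected` set (for example, built from a sitemap) are assumptions for illustration.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collects href values from <a> tags in raw (un-rendered) HTML."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: set[str] = set()

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names for us.
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.add(value)


def links_missing_without_js(raw_html: str, expected: set[str]) -> set[str]:
    """Return expected links that are absent from the server-delivered HTML.

    Anything returned here only appears after JavaScript runs, so a crawler
    that does not execute scripts would not discover it.
    """
    collector = LinkCollector()
    collector.feed(raw_html)
    collector.close()
    return expected - collector.hrefs
```

To audit a live page, the raw HTML could be fetched with `urllib.request.urlopen(url).read().decode()`, which does not execute scripts. Only `<a href>` links are counted, so navigation wired up purely through `onclick` handlers will show up as missing, which is the kind of issue this audit is meant to surface.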

![Set alerts for changes to robots.txt. I use Server Density [disclaimer: we’re investors] – see how here](https://image.slidesharecdn.com/willcritchlow-technicalseo-130521103051-phpapp01/85/SearchLove-Boston-2013_Will-Critchlow_Technical-SEO-28-320.jpg)
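The slide above monitors robots.txt with Server Density. As a self-hosted alternative (not Server Density's own mechanism), a scheduled Python script can hash robots.txt on each run and flag any change. The function names, the SHA-256 digest and the practice of persisting the previous digest between runs are assumptions of this sketch; how the alert is delivered is left open.

```python
import hashlib
import urllib.request
from typing import Optional


def fetch_robots(site: str) -> bytes:
    """Download robots.txt from the site root, e.g. site="https://example.com"."""
    with urllib.request.urlopen(site.rstrip("/") + "/robots.txt", timeout=10) as resp:
        return resp.read()


def check_robots(content: bytes, previous_digest: Optional[str]) -> tuple[bool, str]:
    """Compare robots.txt content against the digest from the previous run.

    Returns (changed, digest); persist the digest for the next run.
    The first run (no previous digest) is not treated as a change.
    """
    digest = hashlib.sha256(content).hexdigest()
    changed = previous_digest is not None and digest != previous_digest
    return changed, digest
```

Run it from cron or any scheduler, store the returned digest, and raise an alert when `changed` is true. Comparing digests keeps the stored state tiny but cannot show what changed; storing the previous file instead would allow a diff in the alert.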

![TechCrunch admits that using Facebook comments drove away most of their commenters (techdirt [original TC article]). We have seen a few missteps from FB on the comment front](https://image.slidesharecdn.com/willcritchlow-technicalseo-130521103051-phpapp01/85/SearchLove-Boston-2013_Will-Critchlow_Technical-SEO-90-320.jpg)

![This gives Google …the ability to read comments in AJAX or JavaScript, such as Facebook comments or Disqus comments (SearchEngineLand [emphasis mine]). Much of the coverage was similar to this](https://image.slidesharecdn.com/willcritchlow-technicalseo-130521103051-phpapp01/85/SearchLove-Boston-2013_Will-Critchlow_Technical-SEO-92-320.jpg)