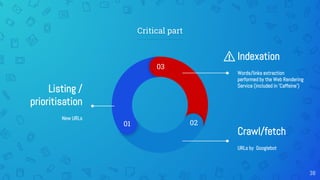

This document discusses options for migrating a website from traditional server-side rendering to a single-page application (SPA) framework such as Angular without sacrificing SEO. The main options covered are:

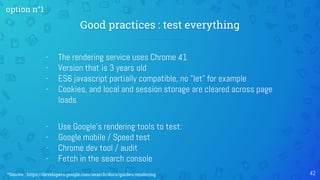

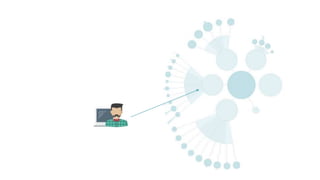

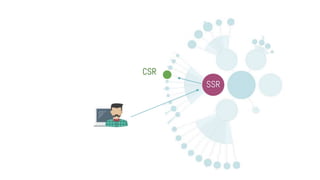

1. Making no architecture changes and optimizing for client-side rendering.

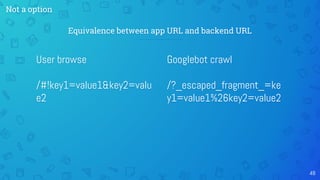

2. Using the AJAX crawling scheme (the `#!` / `_escaped_fragment_` specification) with AngularJS, which Google has since deprecated.

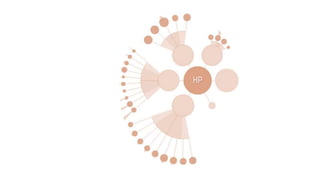

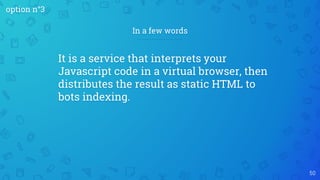

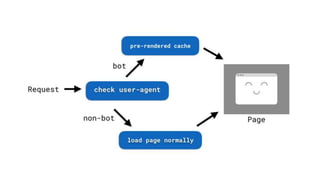

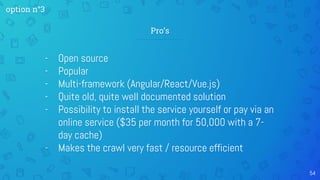

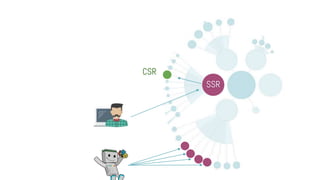

3. Using a service like Prerender.io that renders the JavaScript in a virtual browser to provide static HTML snapshots for crawlers.

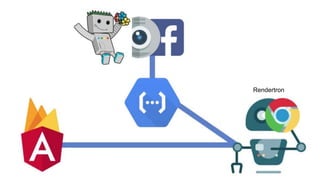

4. Using Rendertron, a newer alternative to Prerender that performs the same rendering but delivers snapshots on demand.

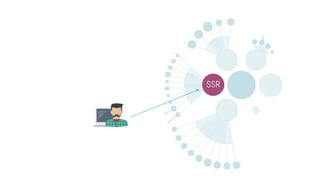

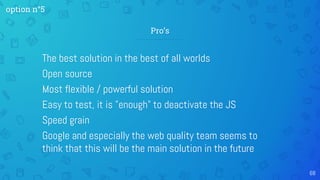

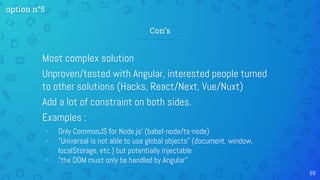

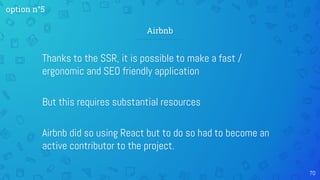

5. Implementing server-side rendering with Angular.
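
Options 3 and 4 both rely on the same routing decision: detect a crawler from its `User-Agent` header and send it a rendered HTML snapshot, while regular visitors get the normal client-side app. The sketch below illustrates that decision only. The bot pattern list, the function names, and the `/render/<encoded URL>` path shape are assumptions for illustration, not the exact API of Prerender.io or Rendertron, so check the service's documentation before relying on them.

```typescript
// Hypothetical, non-exhaustive list of crawler User-Agent substrings.
const BOT_PATTERNS: RegExp =
  /googlebot|bingbot|yandex|baiduspider|twitterbot|facebookexternalhit|linkedinbot/i;

// Returns true when the User-Agent header looks like a known crawler.
function isCrawler(userAgent: string | undefined): boolean {
  return userAgent !== undefined && BOT_PATTERNS.test(userAgent);
}

// Builds the snapshot URL for a page.
// `rendererBase` is the assumed address of a rendering service; the
// `/render/` path segment is an assumption about how that service is mounted.
function snapshotUrl(rendererBase: string, pageUrl: string): string {
  const base = rendererBase.replace(/\/+$/, ""); // drop trailing slashes
  return `${base}/render/${encodeURIComponent(pageUrl)}`;
}

// Crawlers are pointed at the rendered snapshot; everyone else gets the SPA URL.
function resolveTarget(
  userAgent: string | undefined,
  pageUrl: string,
  rendererBase: string
): string {
  return isCrawler(userAgent) ? snapshotUrl(rendererBase, pageUrl) : pageUrl;
}
```

In a real deployment this check would typically live in server middleware or a reverse proxy, which fetches the snapshot and returns its HTML to the crawler instead of redirecting.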