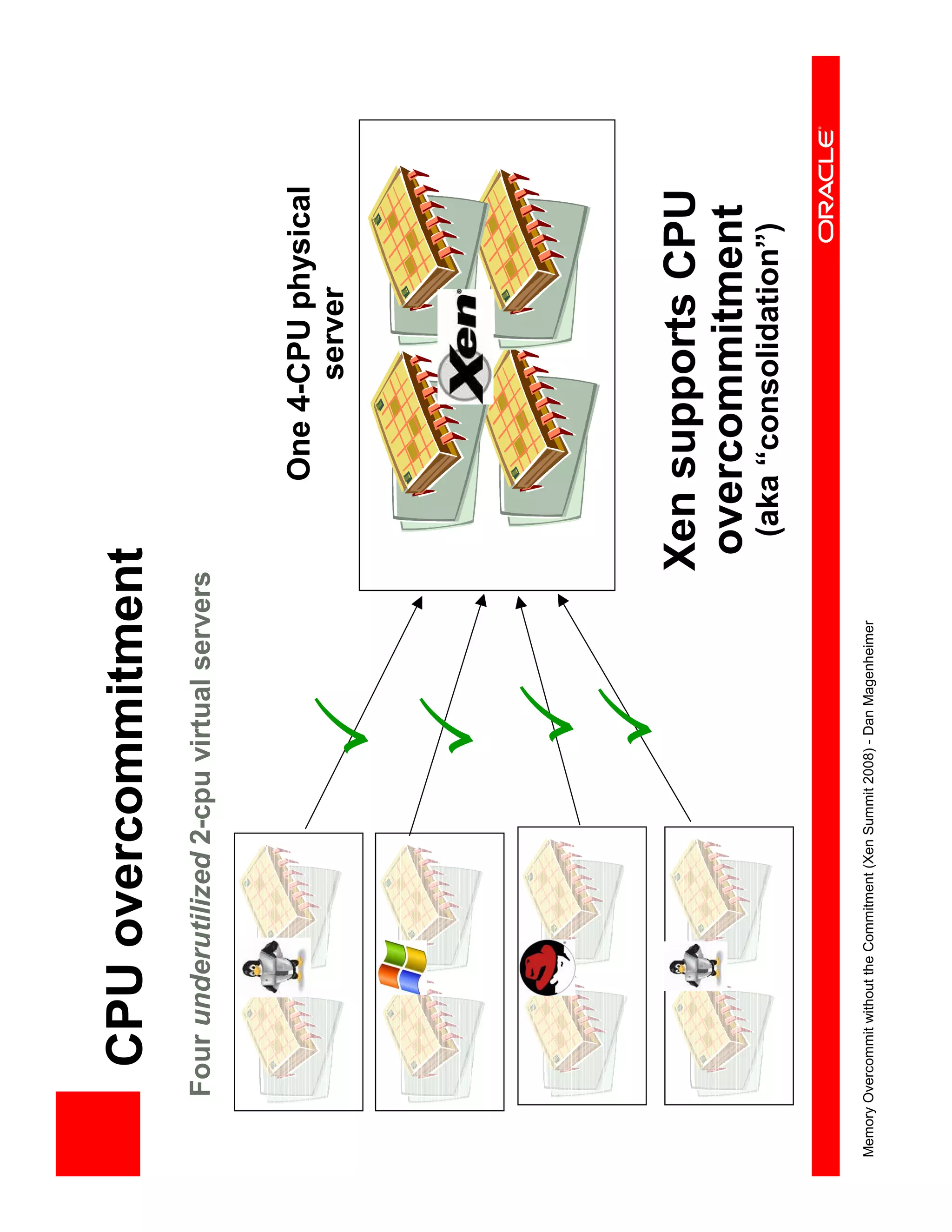

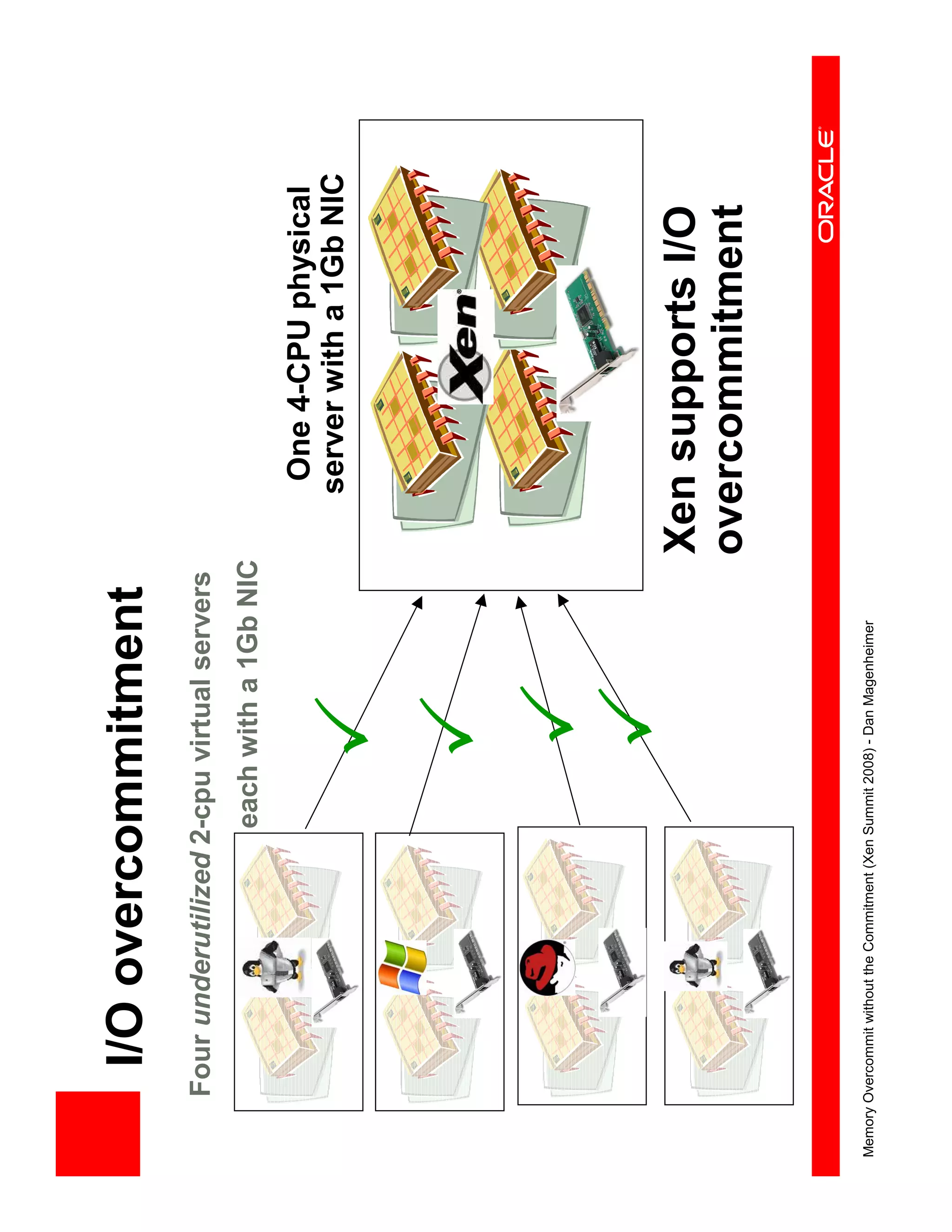

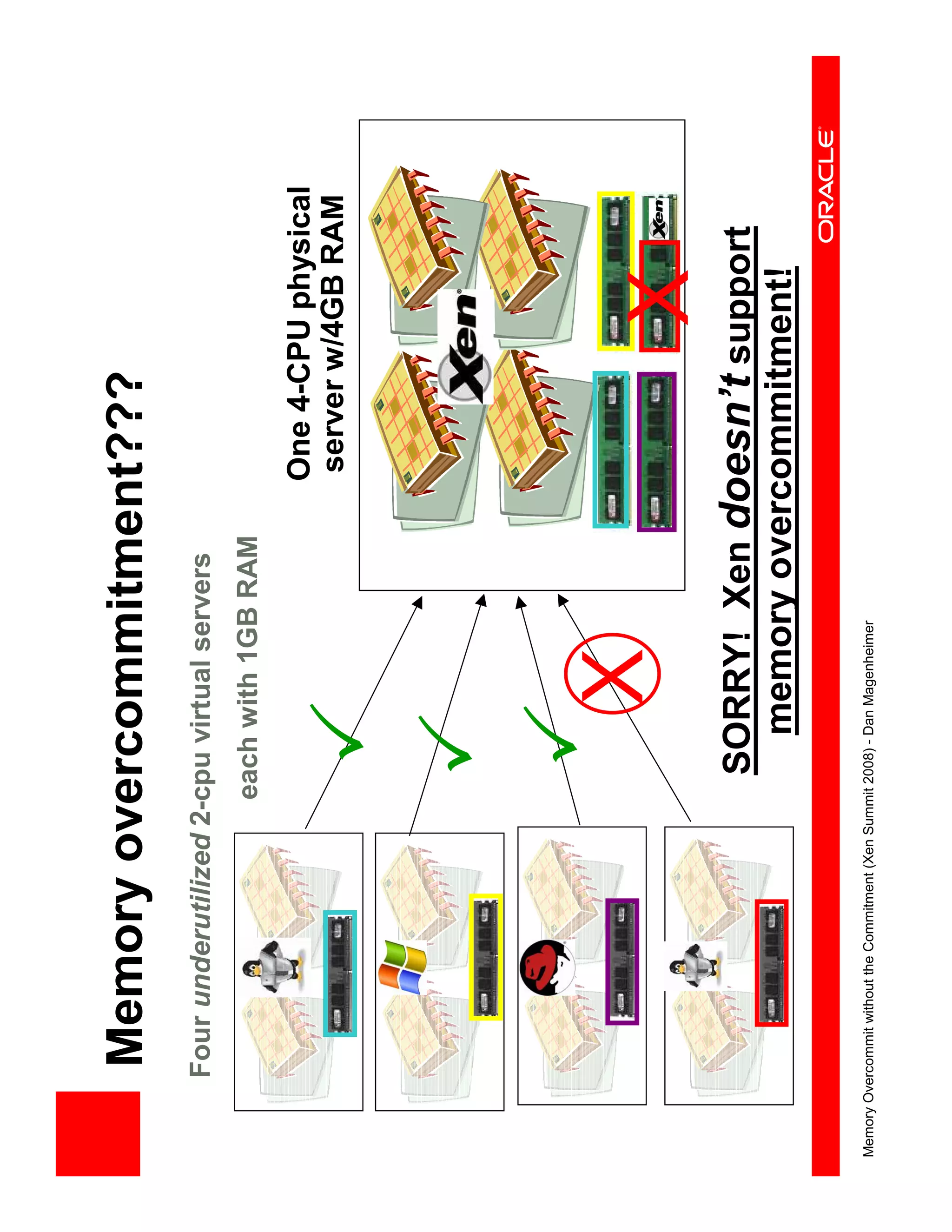

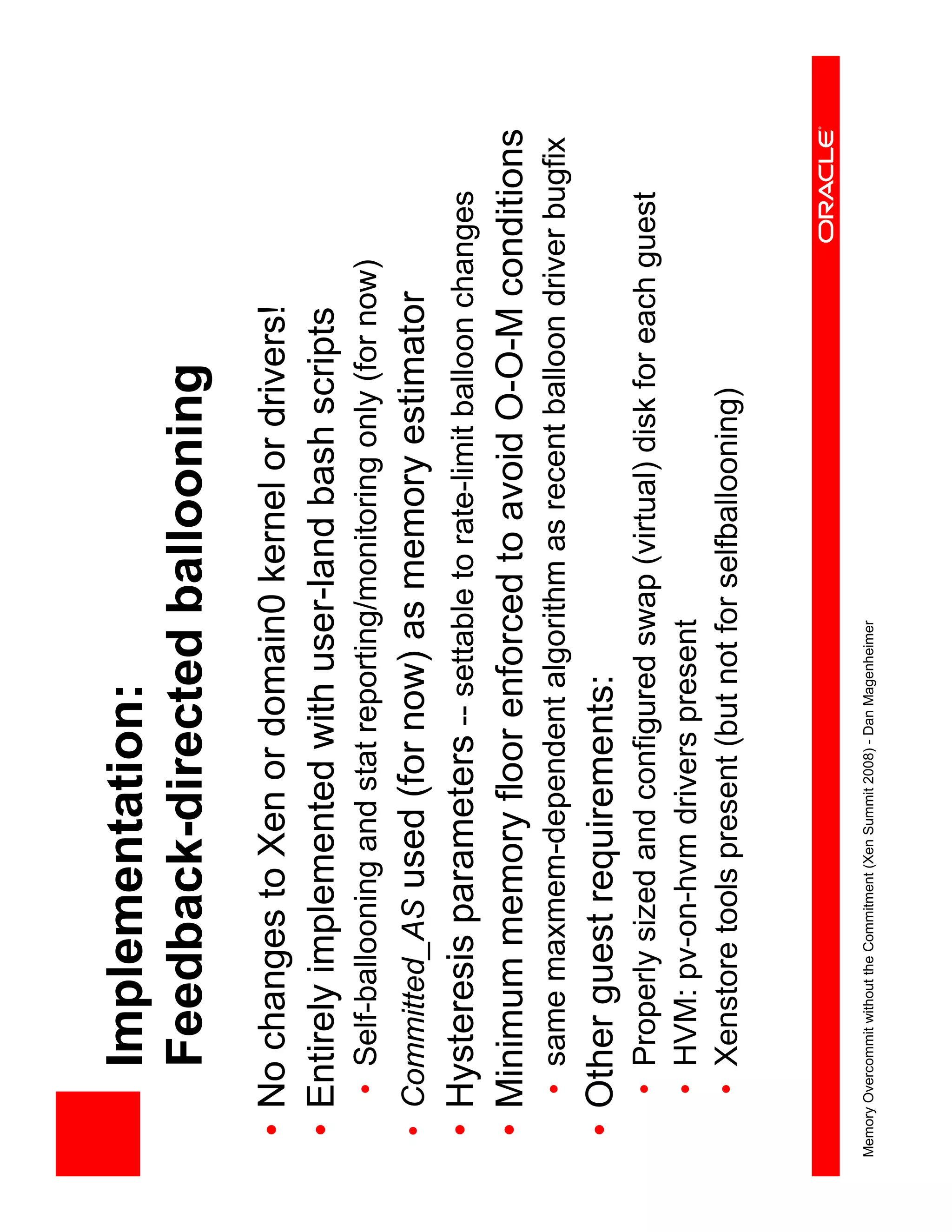

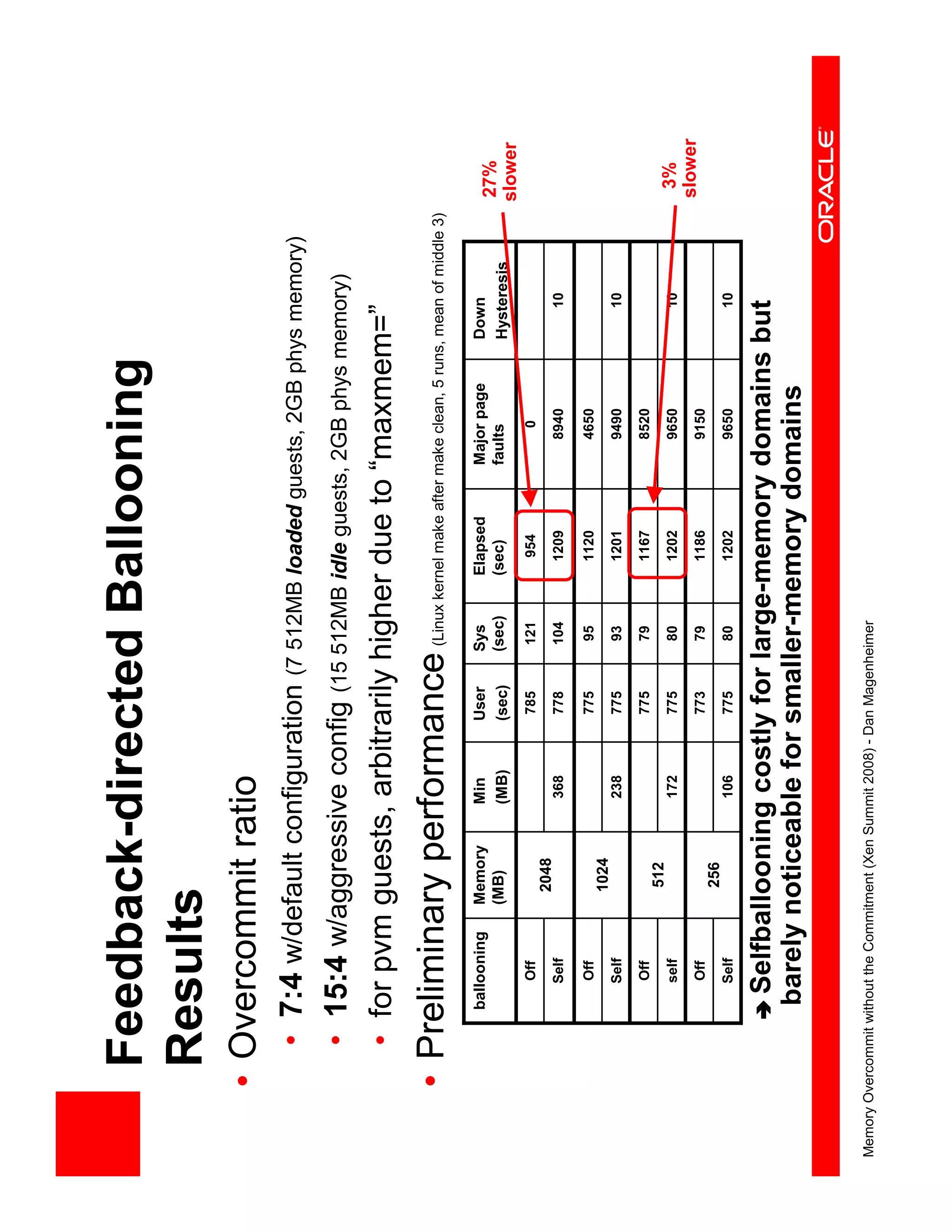

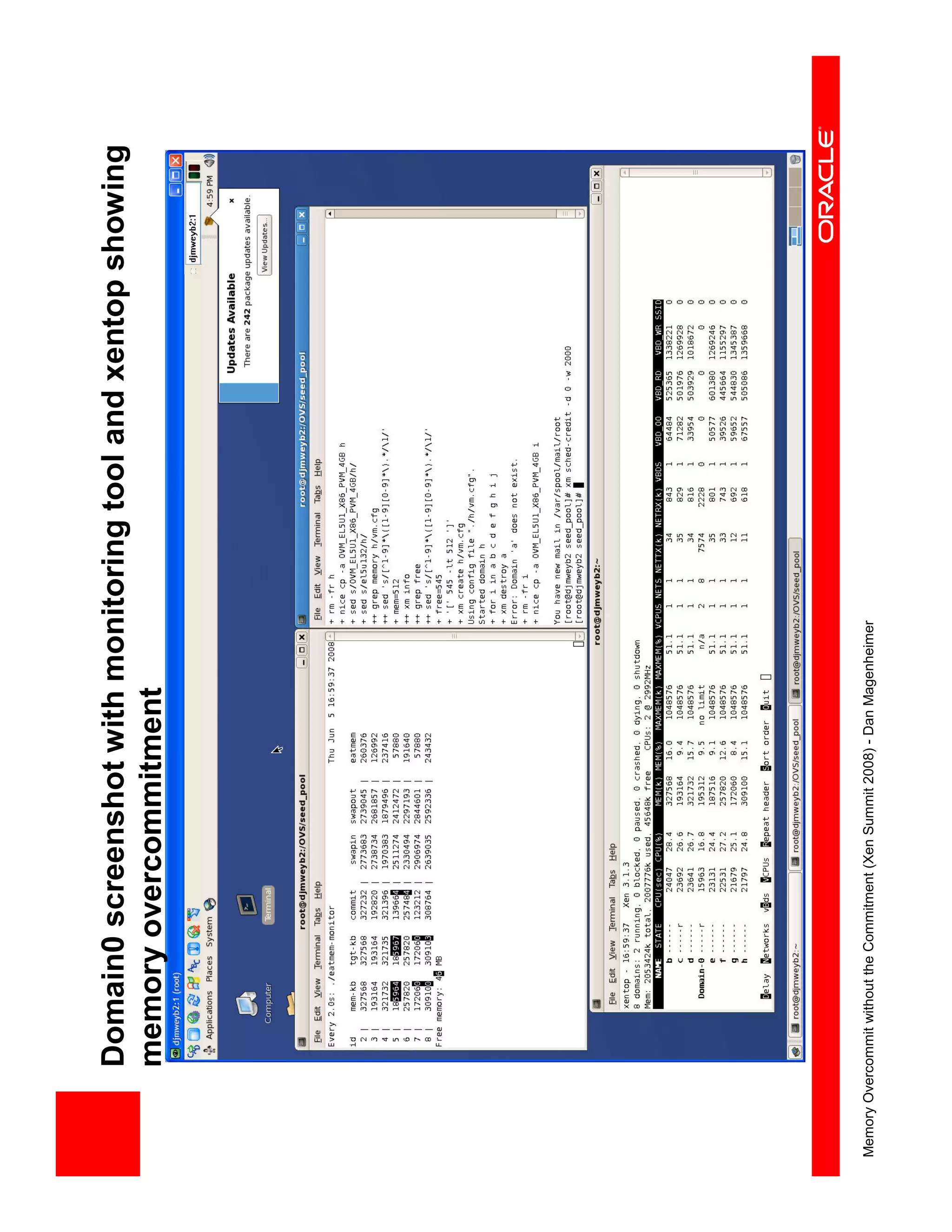

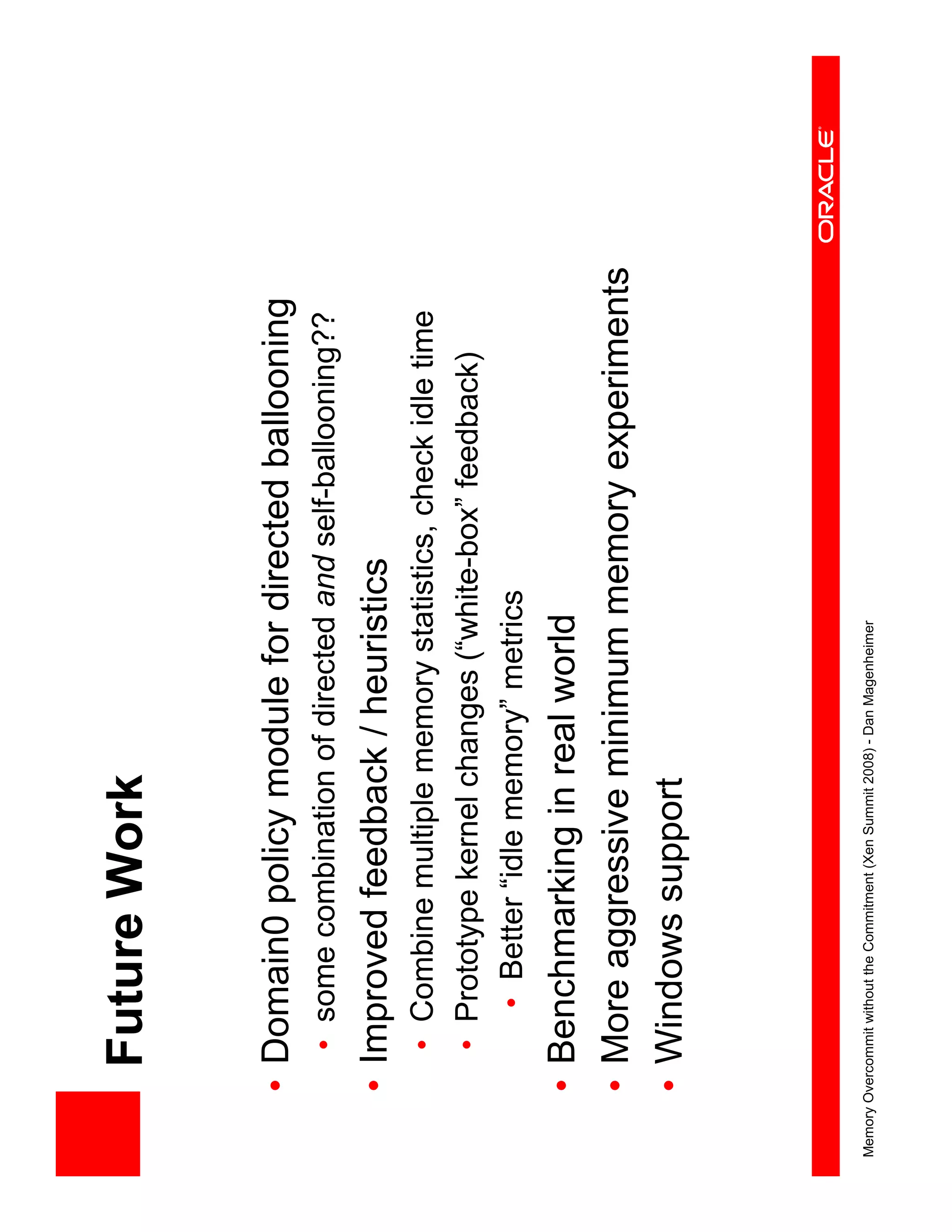

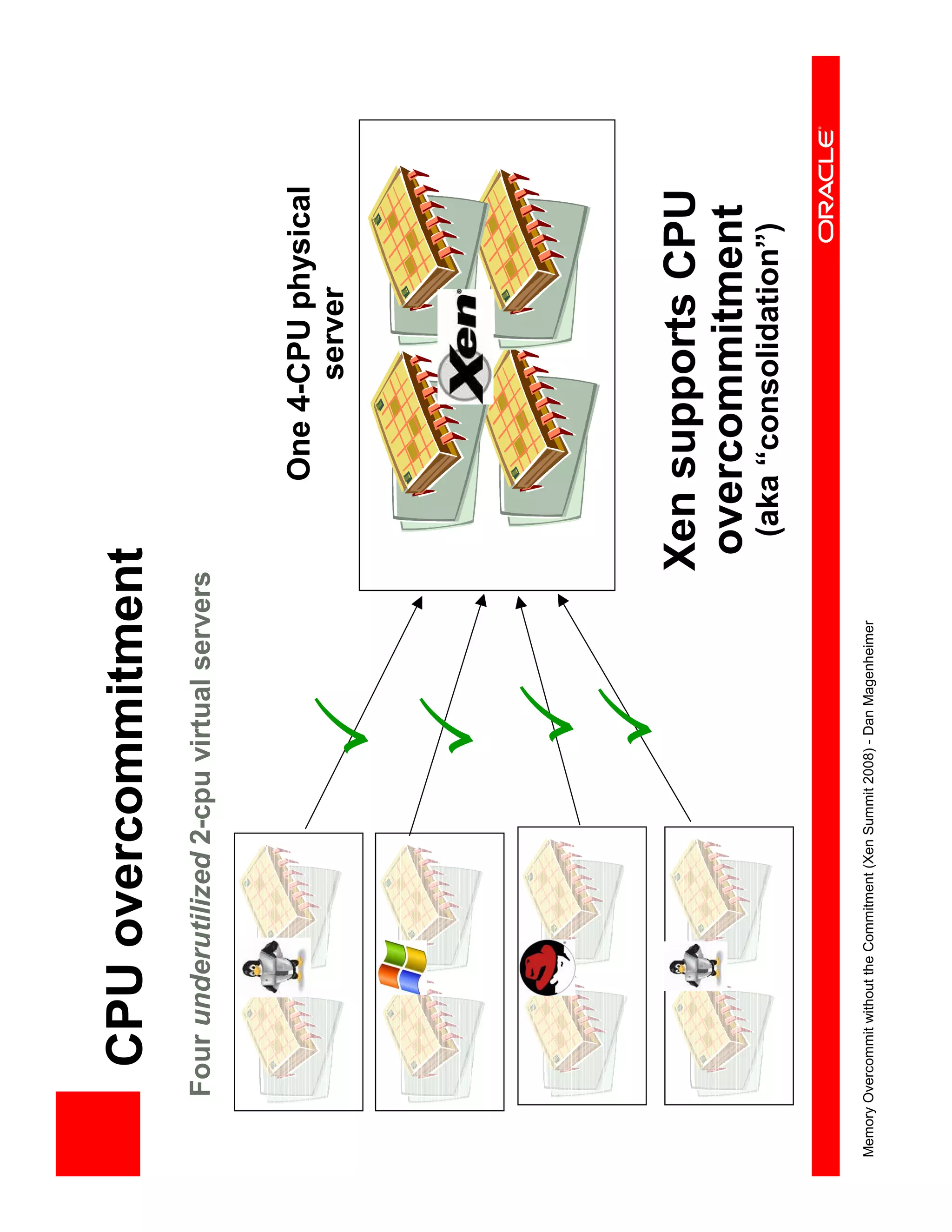

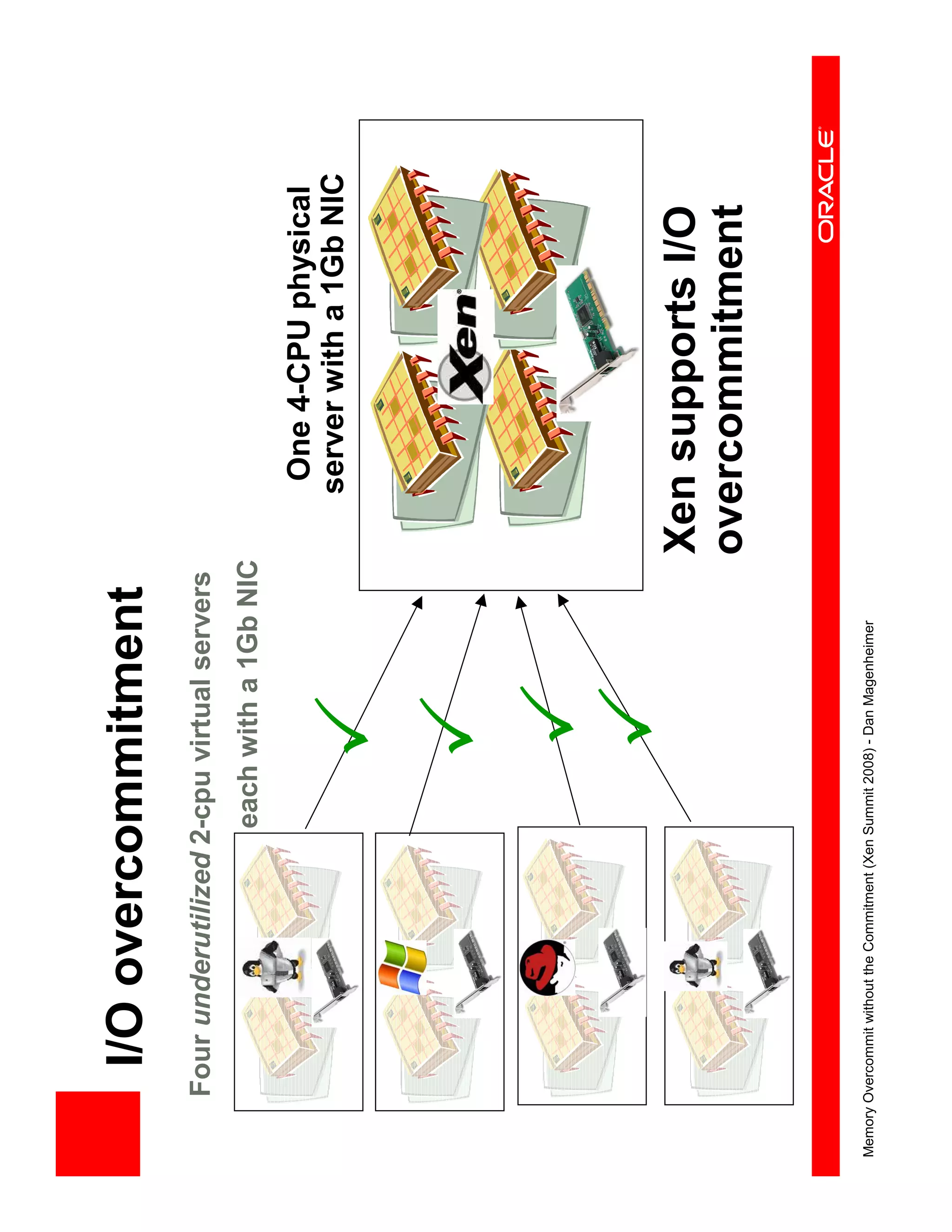

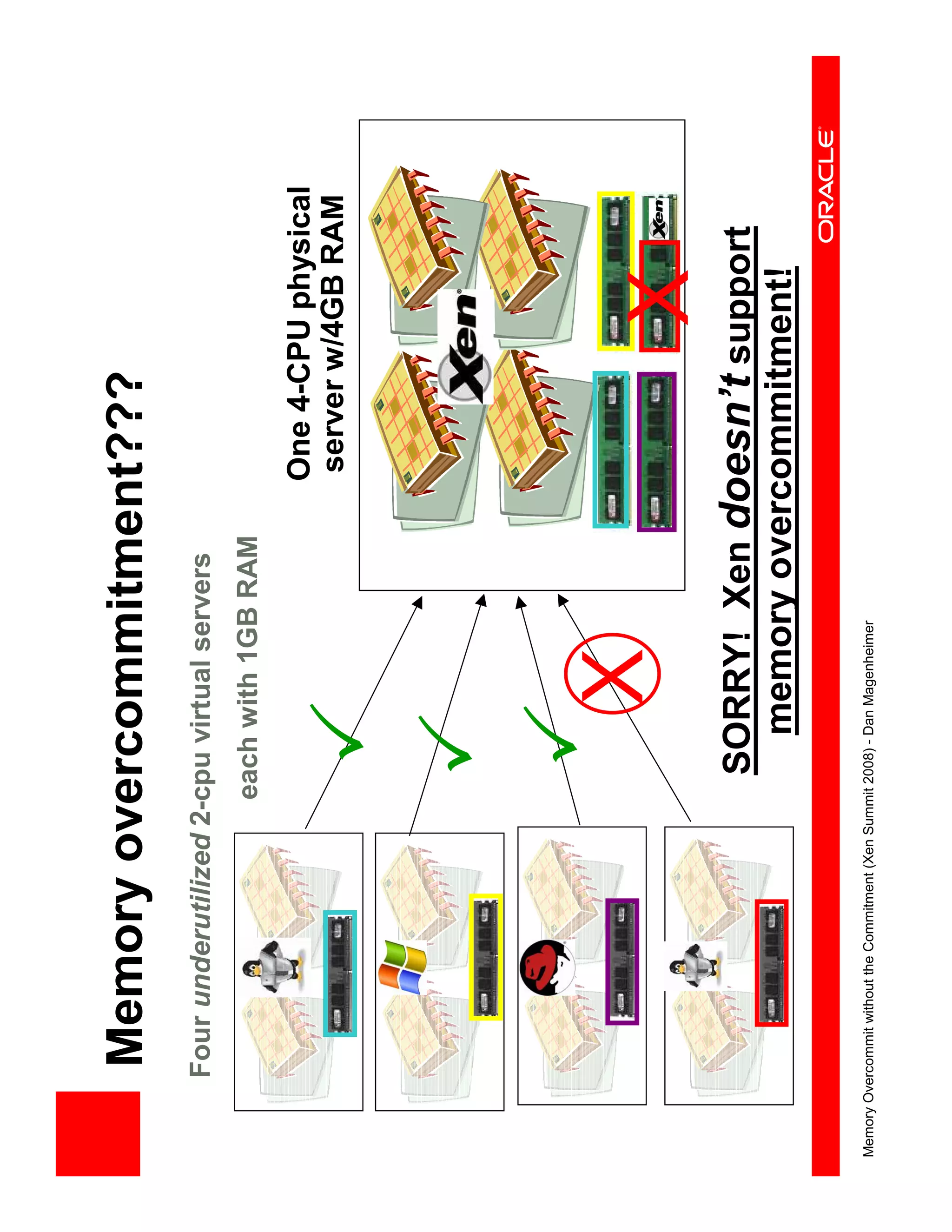

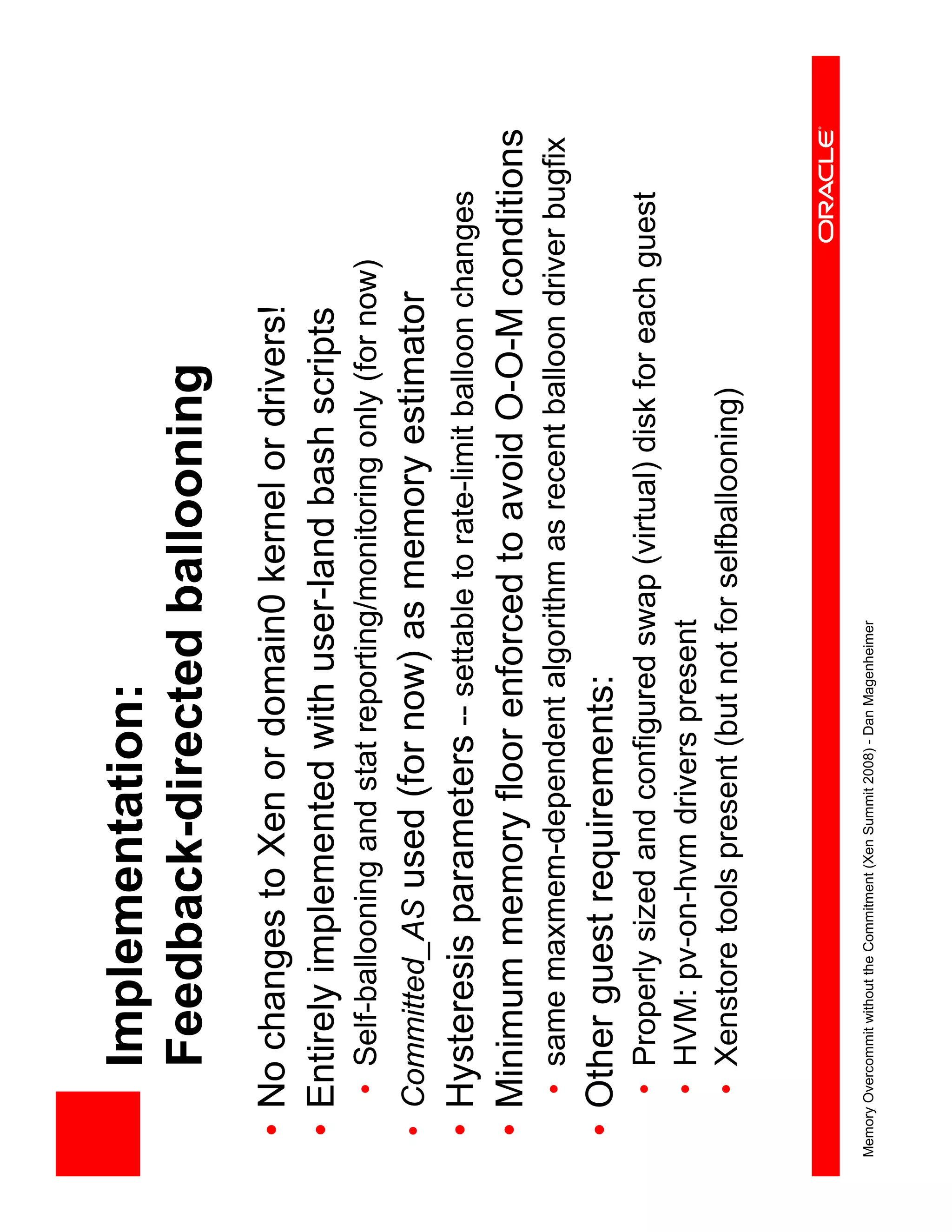

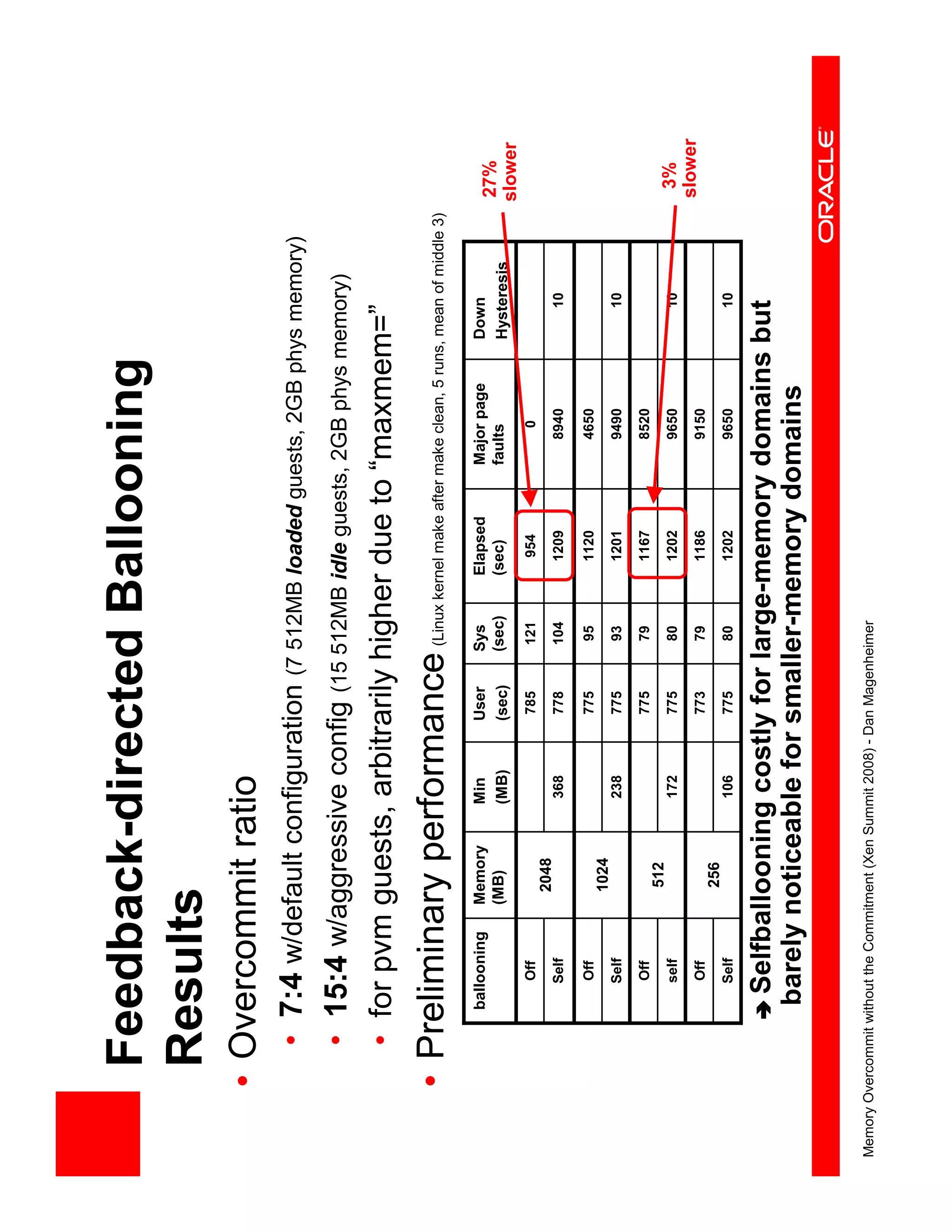

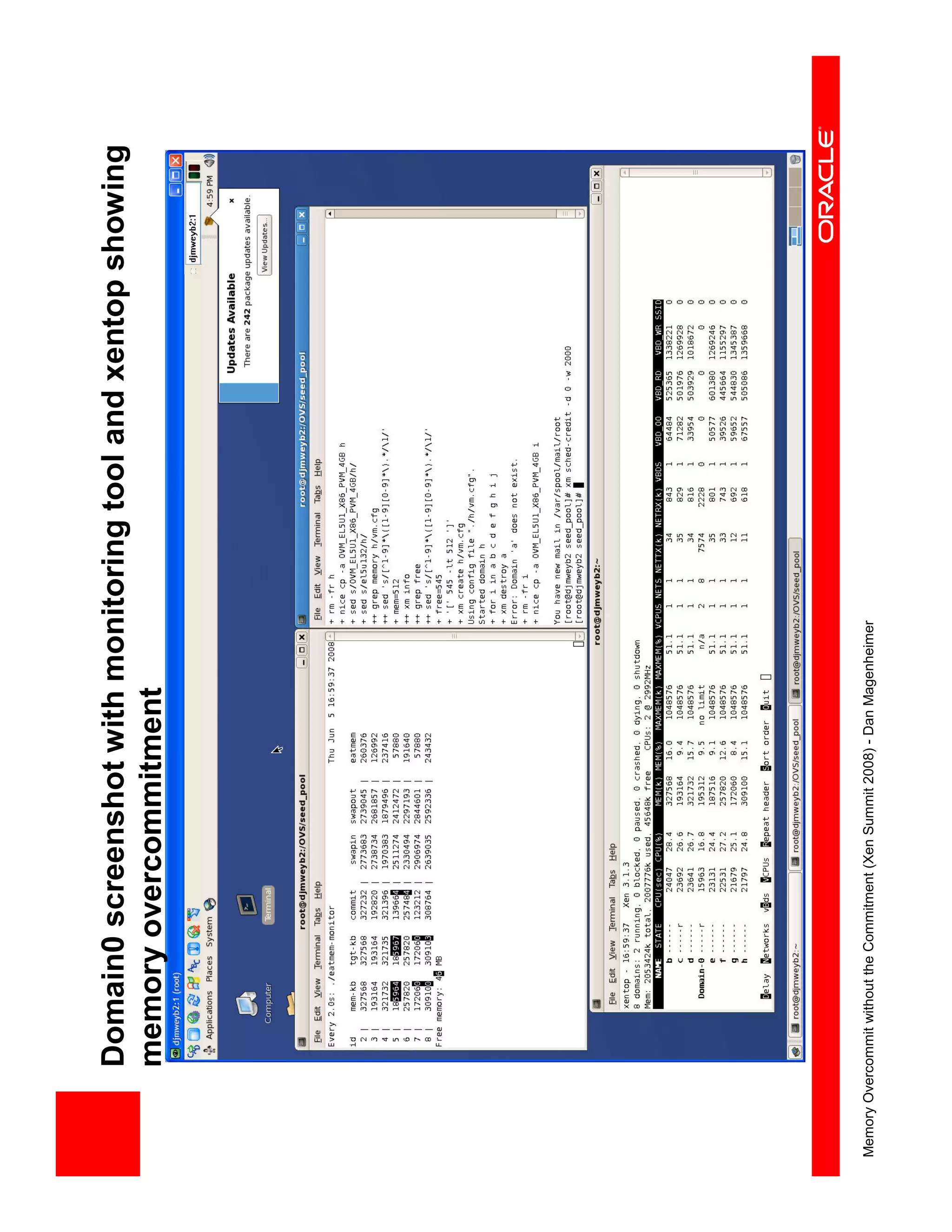

This document summarizes a presentation on memory overcommitment in virtualization, given by Dan Magenheimer at the 2008 Xen Summit. It discusses why Xen did not support memory overcommitment at the time, while other virtualization platforms such as VMware did. It then explores possible techniques for implementing memory overcommitment in Xen, including ballooning, page sharing, and demand paging. The goal is more efficient memory utilization and higher server consolidation ratios.