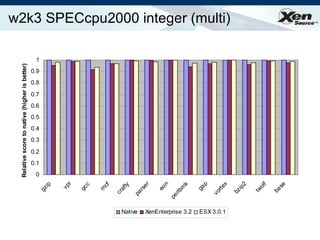

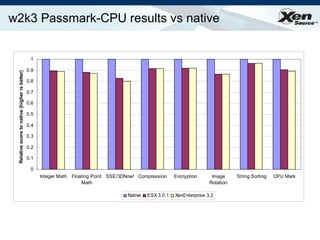

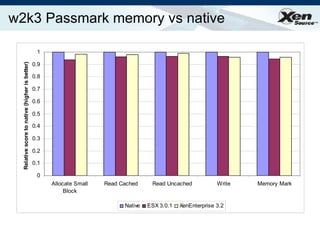

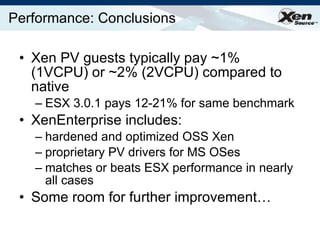

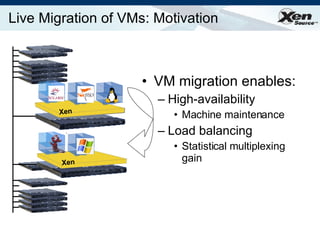

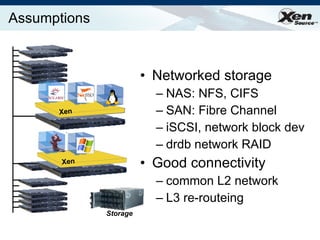

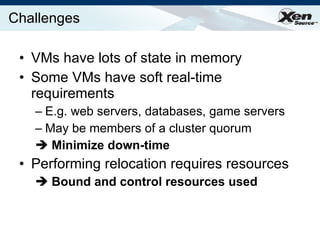

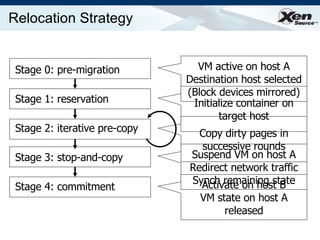

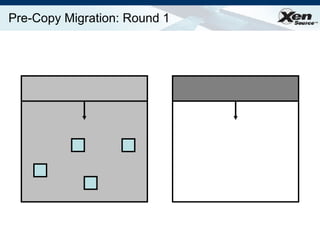

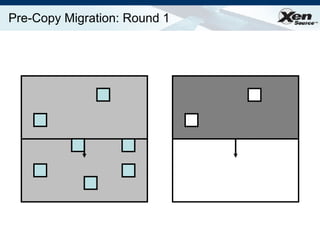

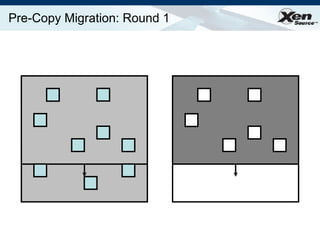

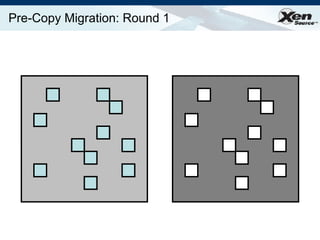

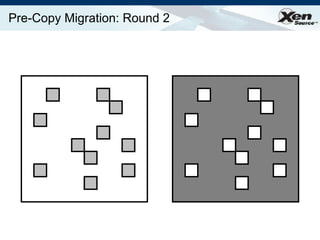

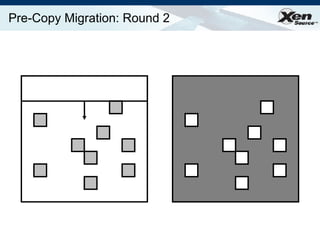

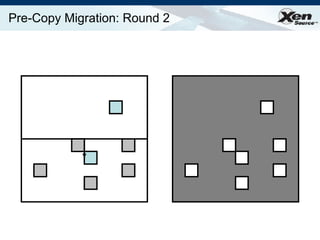

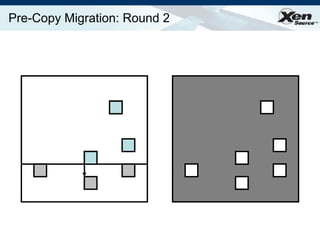

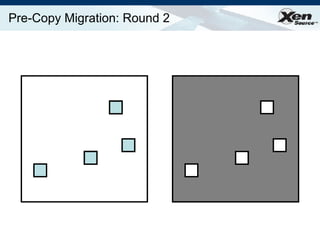

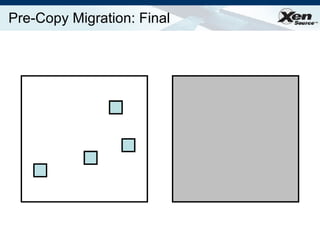

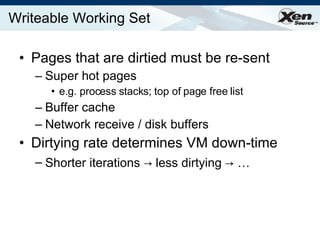

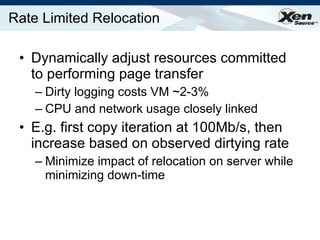

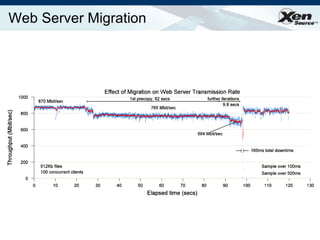

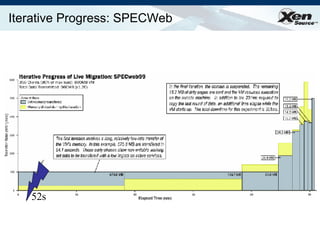

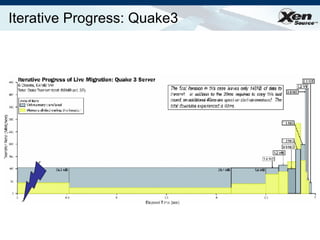

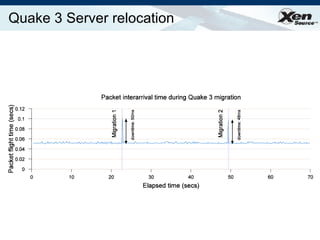

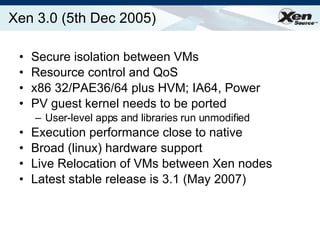

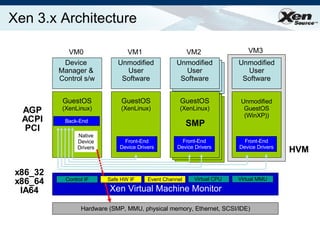

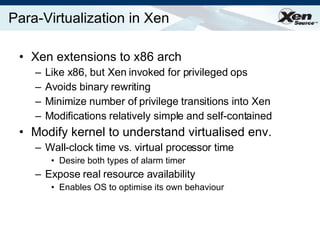

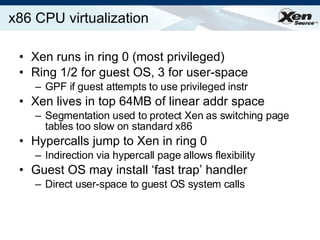

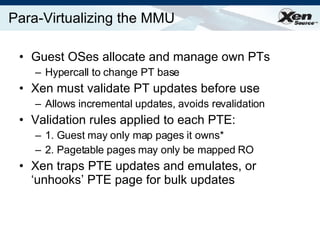

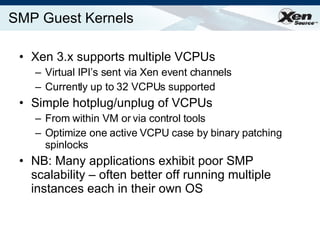

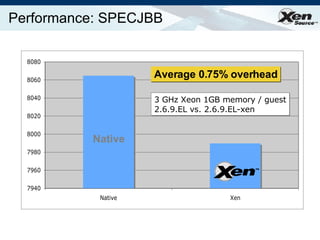

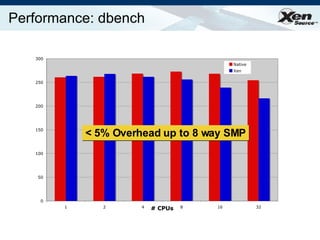

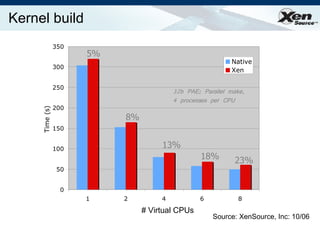

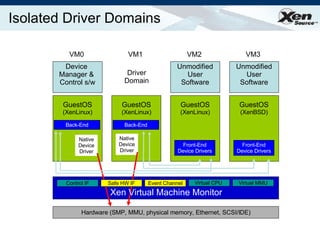

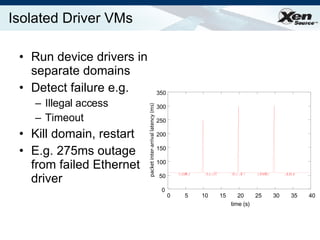

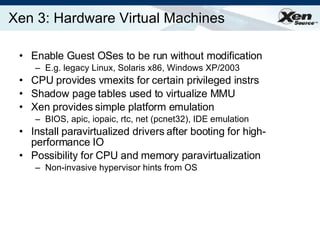

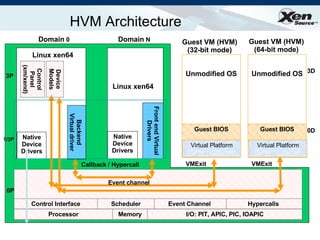

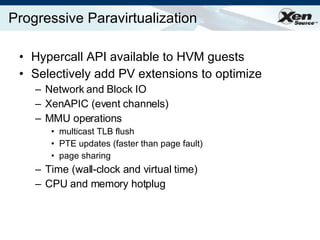

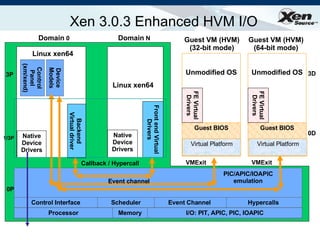

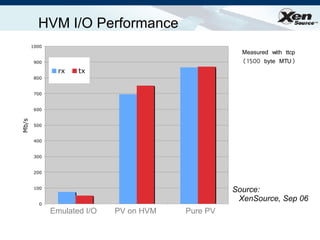

The document summarizes virtualization with the Xen hypervisor. It discusses how Xen allows multiple operating systems to run on a single machine with low overhead through para-virtualization. It describes Xen's architecture, performance benefits, and how it enables hardware virtualization, virtual appliances, and live migration of virtual machines between physical servers.

![HW Virtualization Summary CPU virtualization available today lets Xen support legacy/proprietary OSes Additional platform protection imminent protect Xen from IO devices full IOMMU extensions coming soon MMU virtualization also coming soon: avoid the need for s/w shadow page tables should improve performance and reduce complexity Device virtualization arriving from various folks: networking already here (ethernet, infiniband) [remote] storage in the works (NPIV, VSAN) graphics and other devices sure to follow…](https://image.slidesharecdn.com/xeneuropar07-1226021954364054-8/85/Xen-Euro-Par07-29-320.jpg)