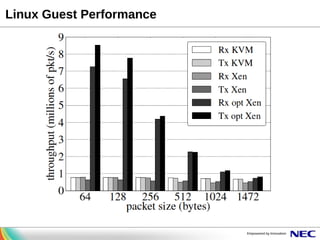

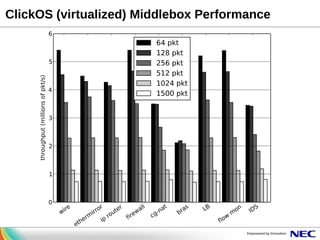

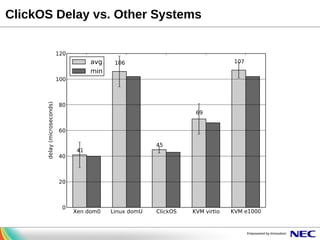

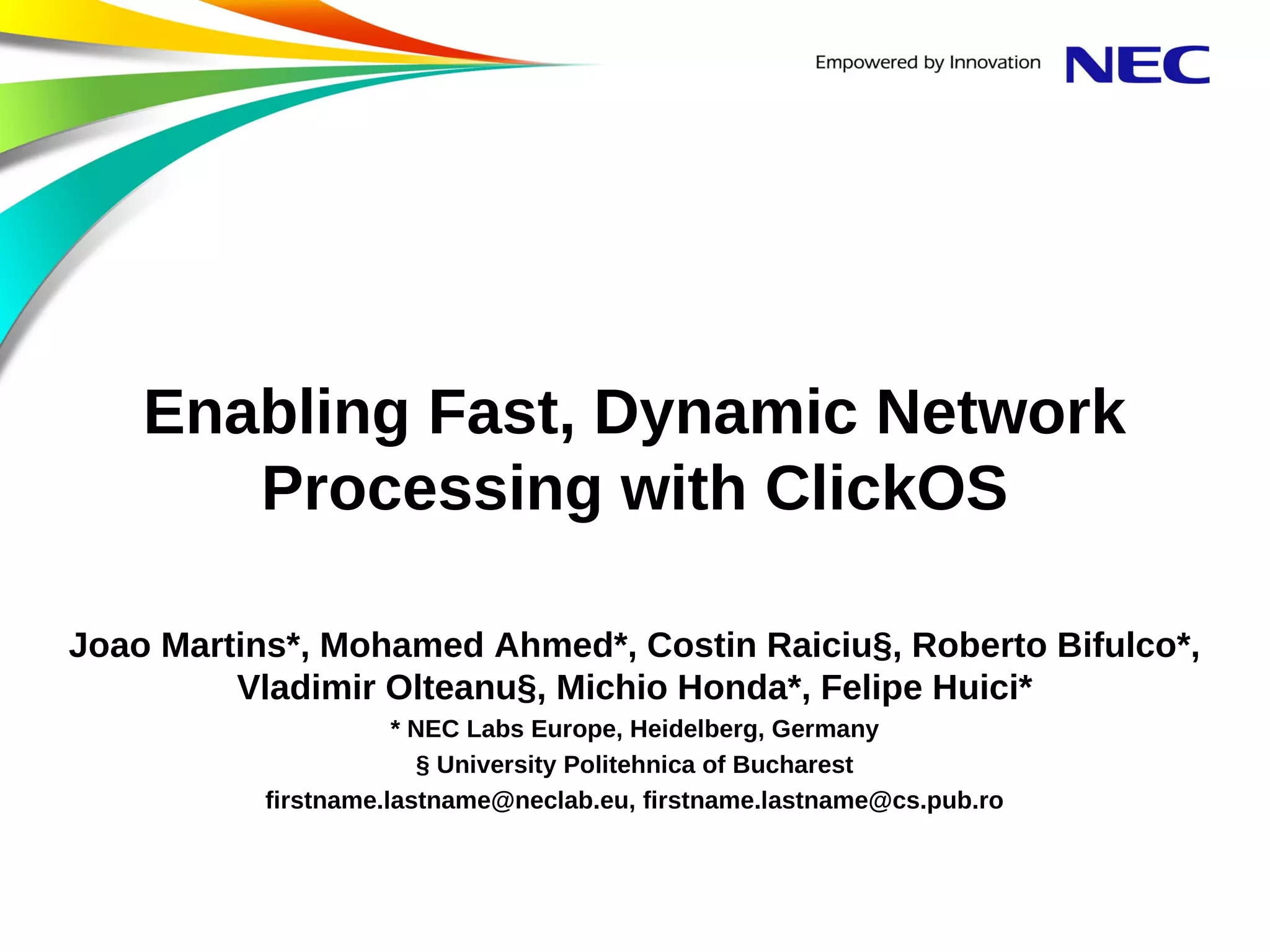

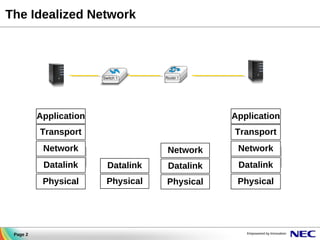

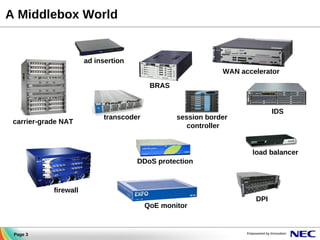

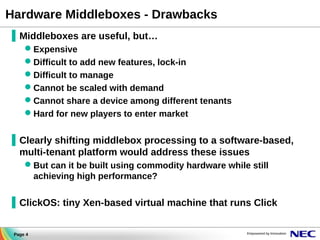

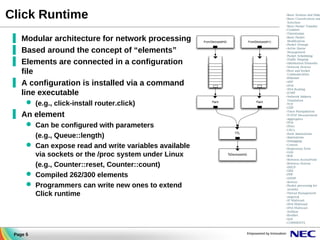

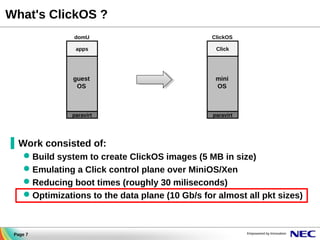

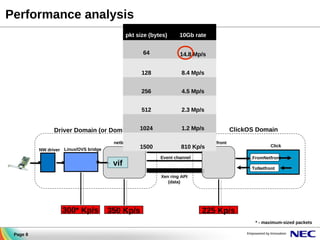

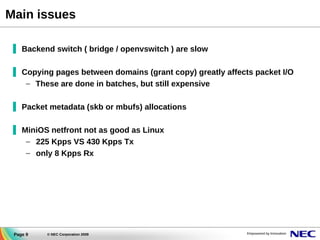

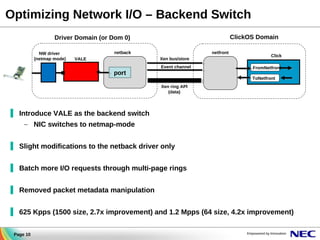

This presentation introduces ClickOS, a lightweight Xen-based virtual machine for efficient network processing that aims to overcome the limitations of traditional middleboxes. It covers ClickOS's modular (Click-based) architecture, its fast boot times and its high throughput, as well as how it addresses scalability and management issues. Future work aims to further improve performance and to support more densely consolidated virtual machine deployments.

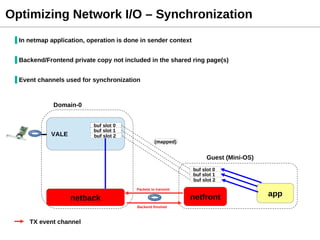

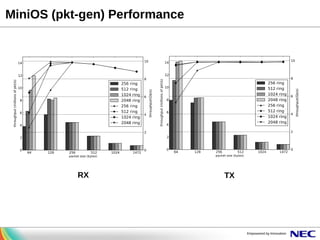

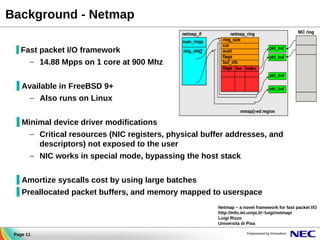

![A simple (Click-based) firewall example](https://image.slidesharecdn.com/clickosxendevsummit2013-131104104253-phpapp01/85/XPDS13-Enabling-Fast-Dynamic-Network-Processing-with-ClickOS-Joao-Martins-NEC-6-320.jpg)

    in :: FromNetFront(DEVMAC 00:11:22:33:44:55, BURST 1024);
    out :: ToNetFront(DEVMAC 00:11:22:33:44:55, BURST 1);
    filter :: IPFilter(
        allow src host 10.0.0.1 && dst host 10.1.0.1 && udp,
        1 all);
    in -> CheckIPHeader(14) -> filter;
    filter[0] -> Print("allow") -> out;
    filter[1] -> Print("drop") -> Discard();

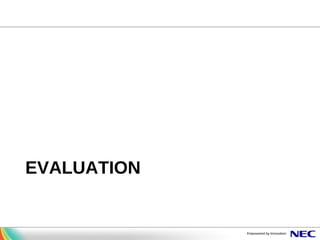

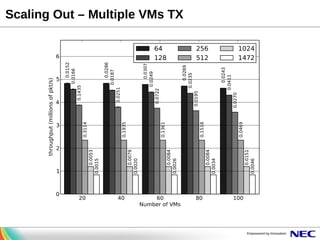

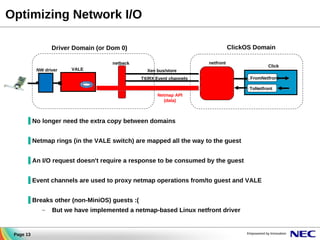

![Optimizing Network I/O – Initialization and Memory usage](https://image.slidesharecdn.com/clickosxendevsummit2013-131104104253-phpapp01/85/XPDS13-Enabling-Fast-Dynamic-Network-Processing-with-ClickOS-Joao-Martins-NEC-14-320.jpg)

Memory usage:

- Netmap buffers are contiguous pages in guest memory.
- Buffers are 2 KB in size, so each page fits two buffers.
- The ring fits in one page for 64 and 128 slots; 256 or more slots need two or more pages.

| Slots (per ring) | Memory (KB, per ring) | Grants (per ring) |
|---:|---:|---:|
| 64 | 135 | 33 |
| 128 | 266 | 65 |
| 256 | 528 | 130 |
| 512 | 1056 | 259 |
| 1024 | 2117 | 516 |
| 2048 | 4231 | 1033 |

Initialization involves the ClickOS guest (Mini-OS, running the app over the netmap API and netfront) and the driver domain (netback, the VALE switch and its netmap buffer pool):

1. Open the netmap device.
2. Register a VALE port.
3. Grant the ring and buffer pages.
4. Read the ring grant references from the xenstore, and the buffer references from the mapped ring slots.