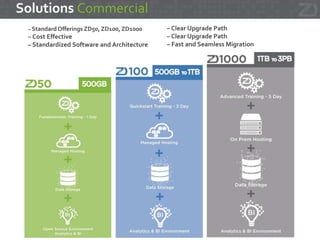

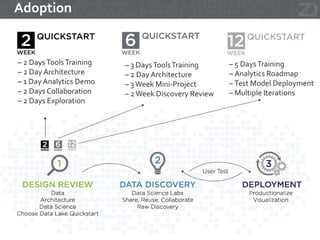

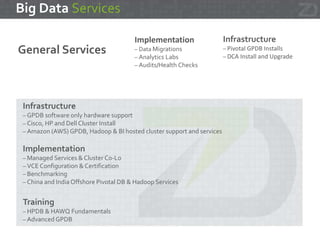

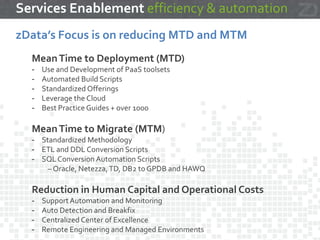

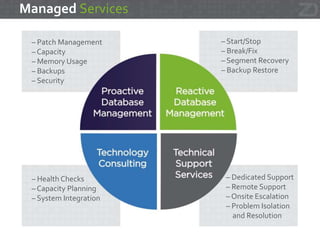

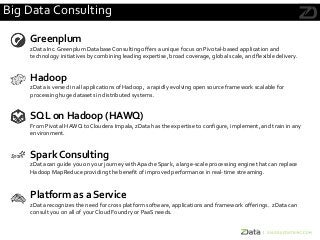

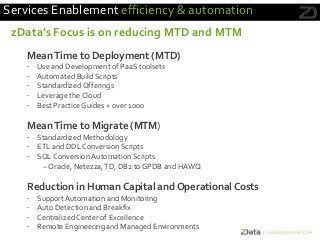

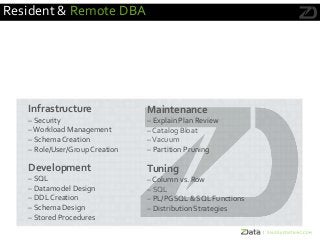

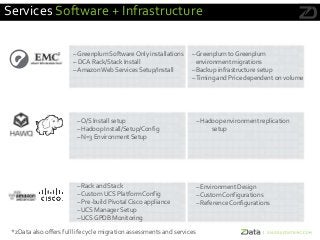

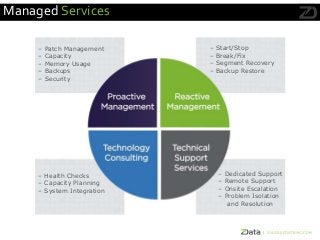

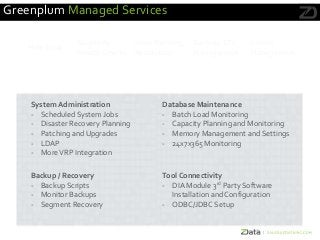

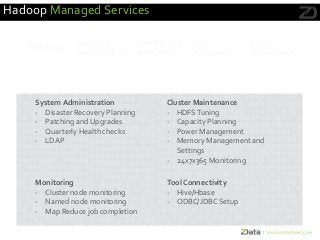

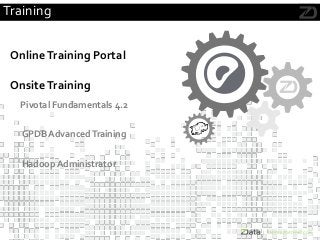

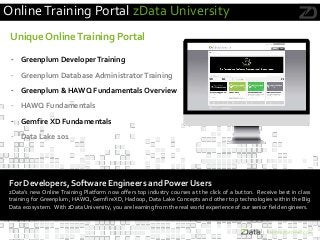

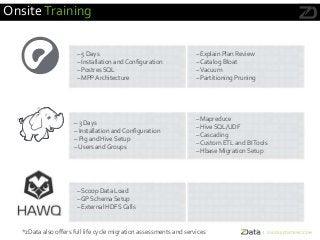

Zdata Inc. offers comprehensive enterprise big data solutions as a preferred partner for major tech companies, specializing in software, hardware, and services procurement. Its services include online and onsite training, pilot programs for big data solutions, and extensive consulting on platforms such as Greenplum and Hadoop, with a focus on enterprise data lake adoption. Zdata also provides managed services, migration expertise, and a unique online training portal to help clients get the most from their big data capabilities.