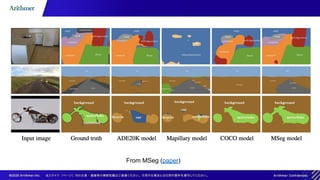

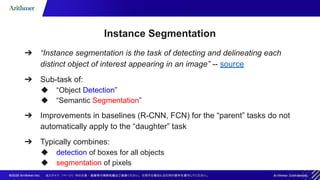

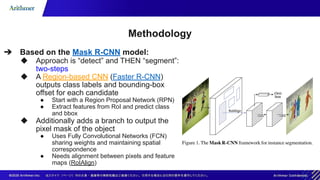

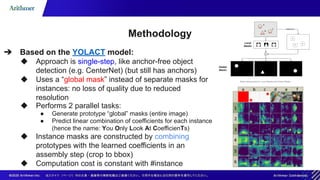

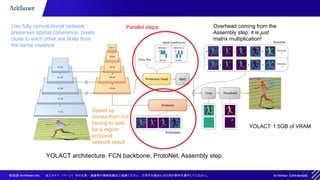

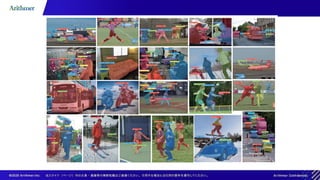

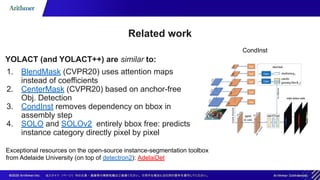

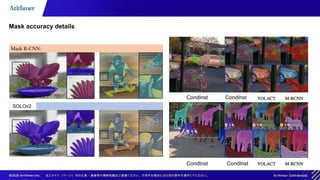

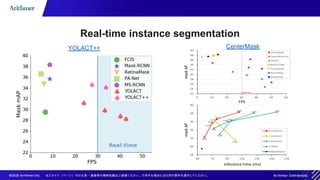

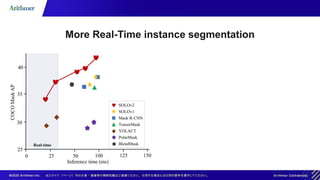

The document outlines methods for real-time instance segmentation, focusing on the Mask R-CNN and YOLACT models for detecting and delineating individual objects in images. It contrasts the two-stage approach of Mask R-CNN, which first proposes candidate regions and then predicts a mask for each one, with the single-stage approach of YOLACT, which predicts masks in a single pass, and discusses the trade-offs of each in speed and efficiency. It also references related work and resources for implementing instance segmentation with open-source toolkits.
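
To make the single-pass idea concrete, here is a minimal sketch of the mask assembly step described in the YOLACT paper: the network predicts a small set of image-wide prototype masks plus one coefficient vector per detected instance, and each instance mask is a sigmoid of the linear combination of the prototypes. The function name `assemble_masks`, the toy 2x2 prototypes, and the 0.5 threshold are illustrative assumptions, not part of any toolkit's API. Real implementations use tensor libraries and also crop each mask to its predicted box, which is omitted here.

```python
import math


def sigmoid(x):
    """Logistic function mapping a real value to (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def assemble_masks(prototypes, coefficients, threshold=0.5):
    """Combine prototype masks into per-instance binary masks.

    prototypes:   k maps, each H x W (nested lists of floats).
    coefficients: n instances, each a list of k floats.
    Returns n binary masks, each H x W (nested lists of 0/1).
    Hypothetical helper for illustration only.
    """
    k = len(prototypes)
    height = len(prototypes[0])
    width = len(prototypes[0][0])
    masks = []
    for coeffs in coefficients:
        if len(coeffs) != k:
            raise ValueError("each instance needs one coefficient per prototype")
        mask = [
            [
                # Linear combination of prototypes at this pixel, then sigmoid,
                # then a threshold to get a binary decision.
                int(sigmoid(sum(coeffs[i] * prototypes[i][y][x] for i in range(k))) > threshold)
                for x in range(width)
            ]
            for y in range(height)
        ]
        masks.append(mask)
    return masks


# Toy input: prototype 0 responds to the left column, prototype 1 to the right.
prototypes = [
    [[1.0, 0.0], [1.0, 0.0]],
    [[0.0, 1.0], [0.0, 1.0]],
]
# Instance 0 favors prototype 0 and suppresses prototype 1; instance 1 does the opposite.
coefficients = [
    [4.0, -4.0],
    [-4.0, 4.0],
]
masks = assemble_masks(prototypes, coefficients)
print(masks[0])  # [[1, 0], [1, 0]]
print(masks[1])  # [[0, 1], [0, 1]]
```

Because the prototypes are computed once per image and each instance only adds a short coefficient vector, the per-instance cost is a small linear combination. Mask R-CNN, by contrast, runs its mask head separately on the features of each proposed region.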