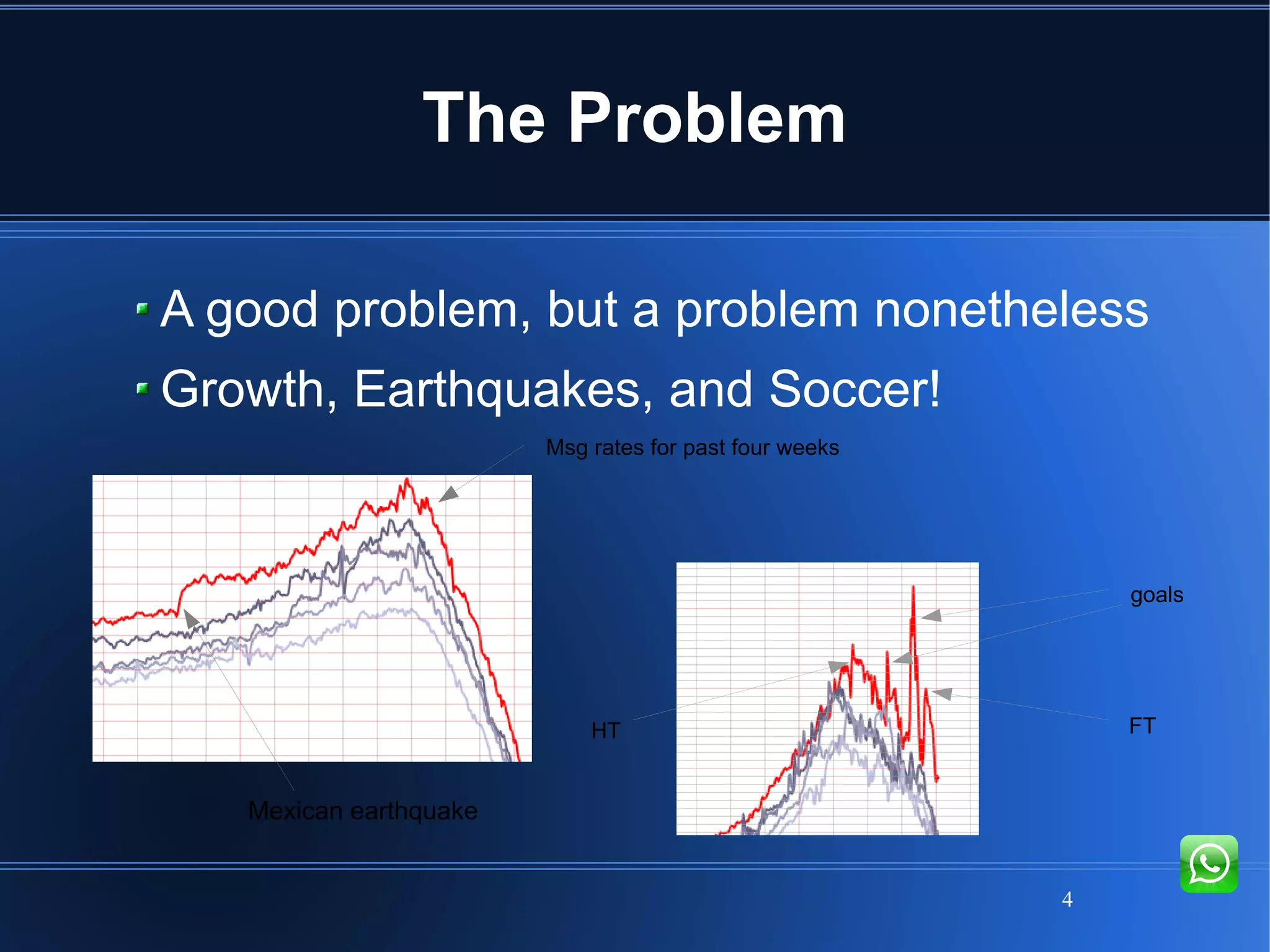

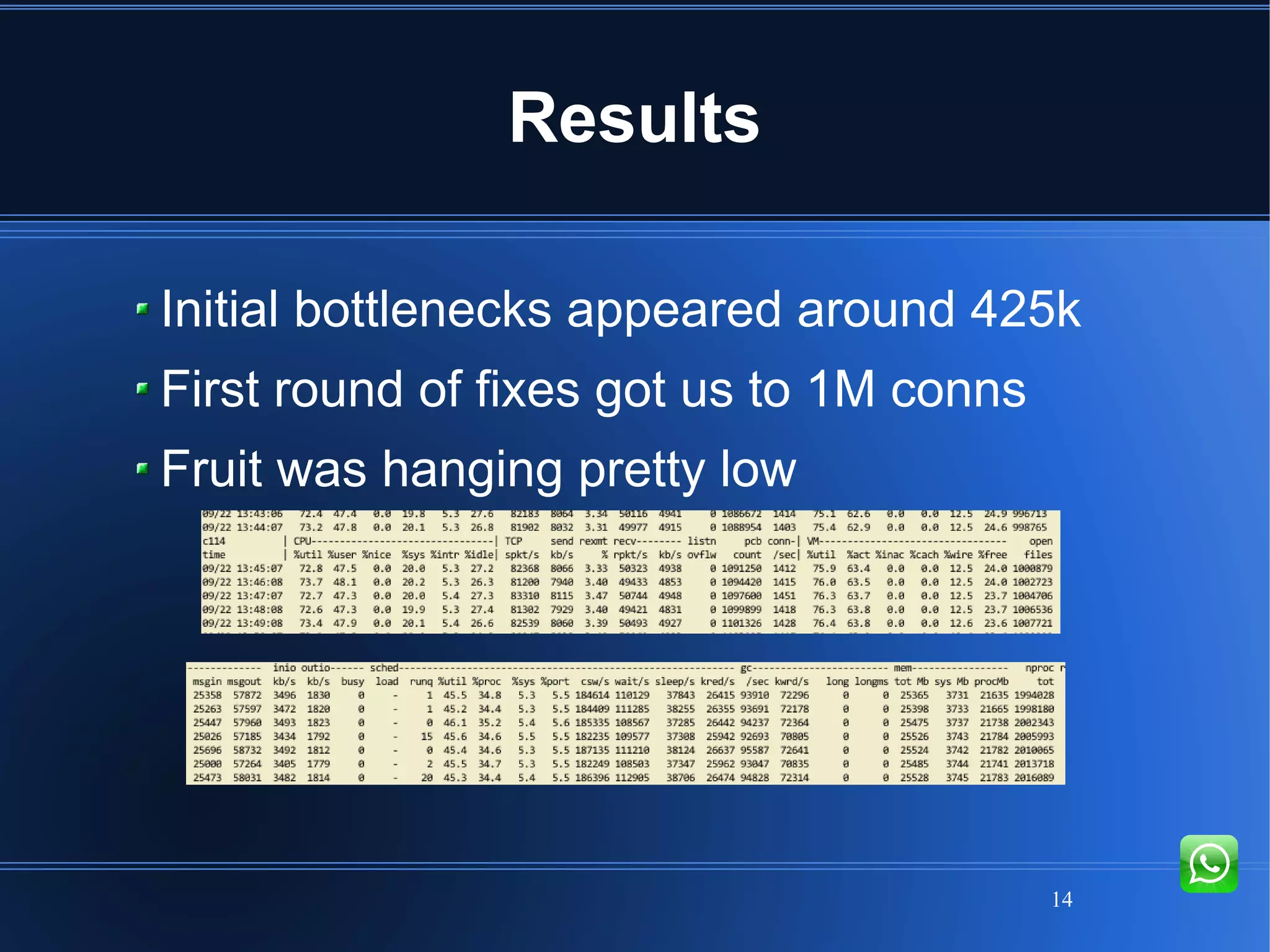

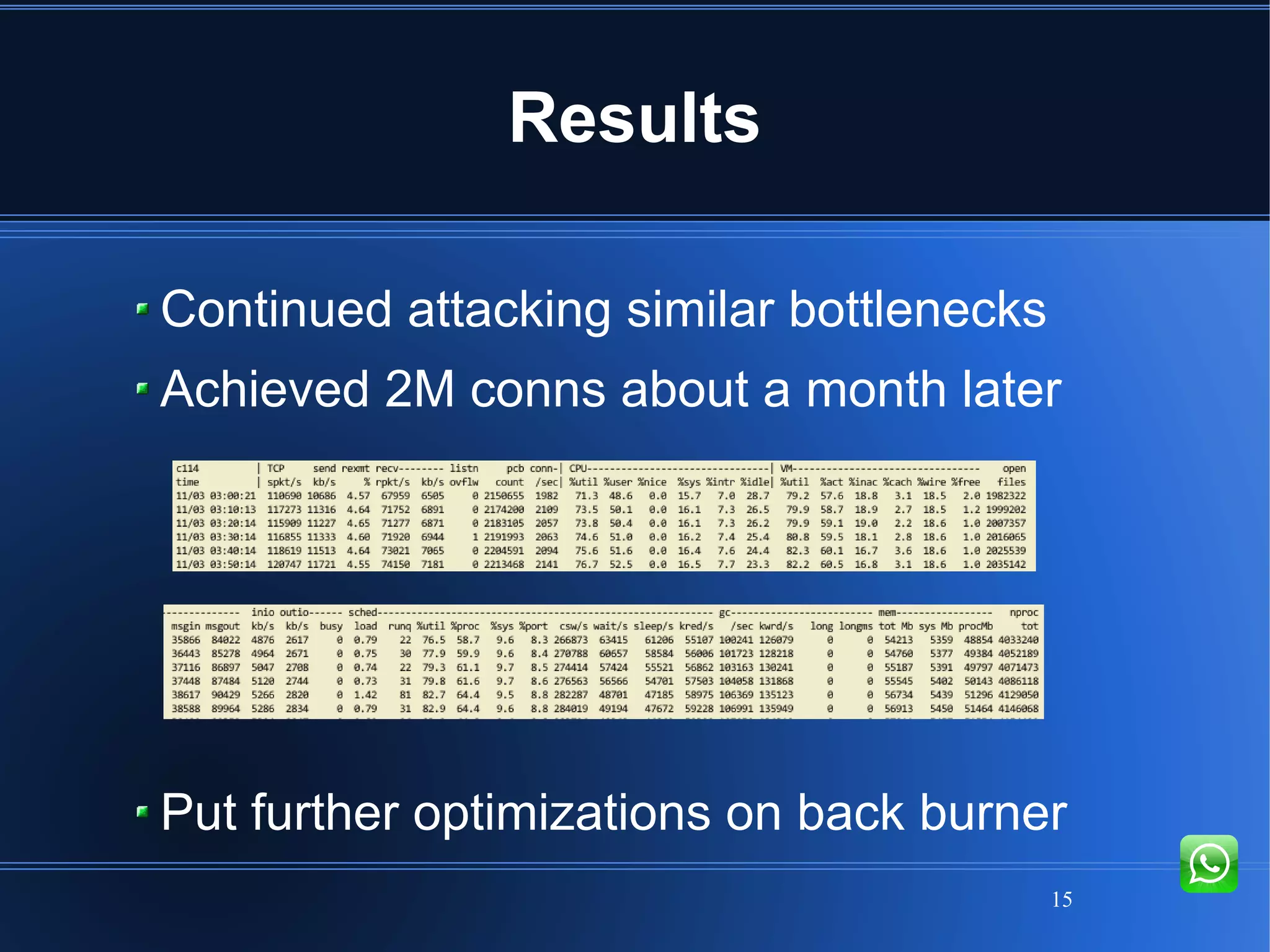

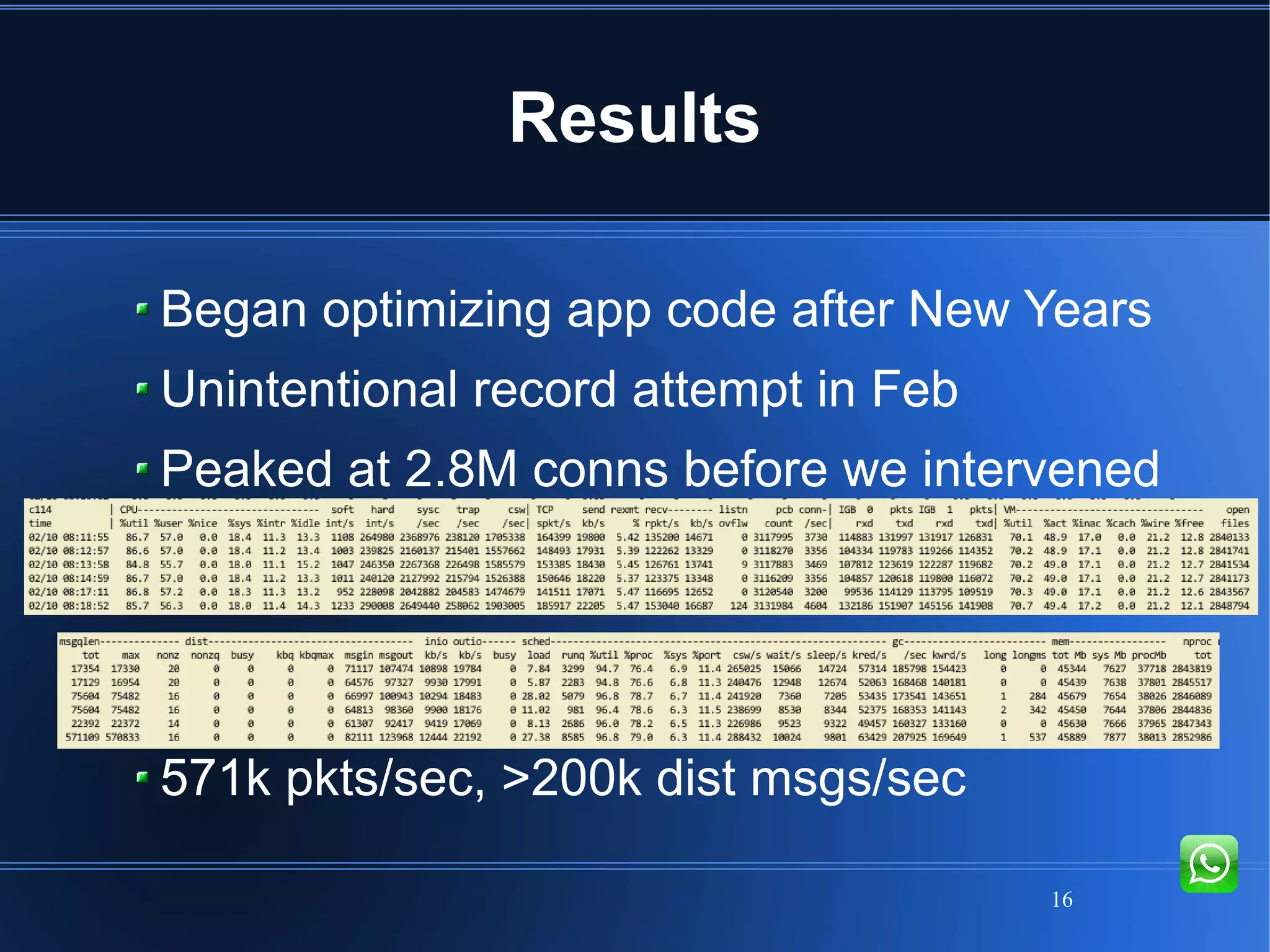

The document presents the strategies WhatsApp used to scale to millions of simultaneous connections with Erlang and FreeBSD. Early challenges included handling server load and connection bottlenecks, which the team addressed through performance optimizations and tools. The system ultimately became more scalable, reaching 2.8 million connections at peak usage.

![Slide 30: Specific Scalability Fixes](https://image.slidesharecdn.com/efsf2012-whatsapp-scaling-160126184450/75/Whatsapp-s-Architecture-30-2048.jpg)

Specific Scalability Fixes

Operability fixes:

- Added `[prepend]` option to `erlang:send`
- Added `process_flag(flush_message_queue)`