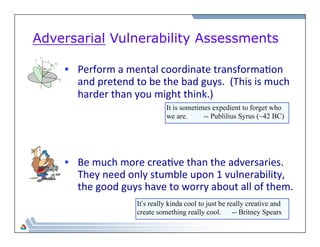

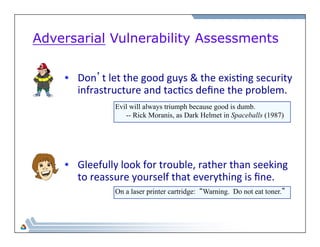

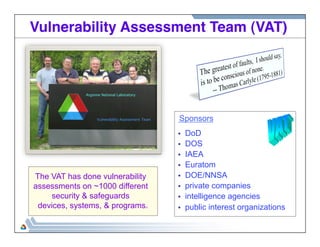

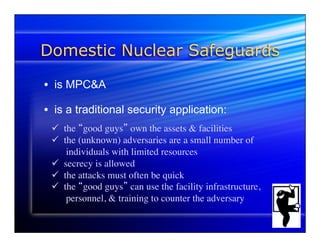

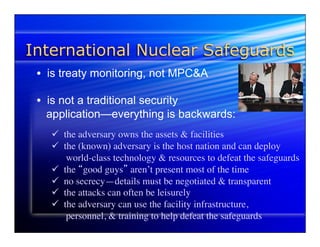

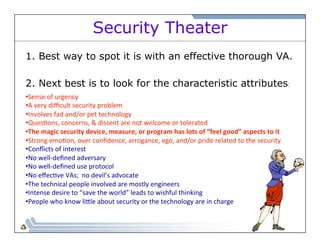

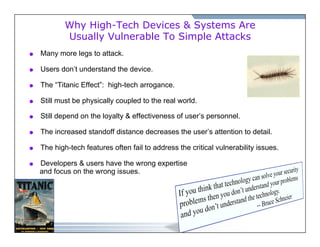

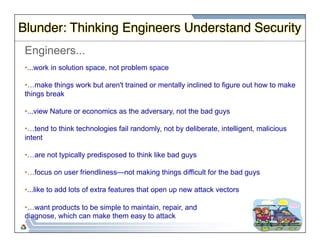

This document discusses vulnerability assessments of security and safeguards devices and programs, and notes that such assessments have been conducted on more than 1,000 different systems and devices. It outlines key differences between domestic and international nuclear safeguards programs and observes that similar hardware and strategies are sometimes incorrectly used for both. It also covers several common problems with international safeguards programs and lists attributes of "security theater".

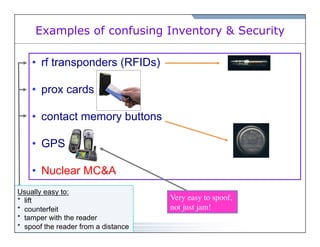

![(Incidentally, Prox Cards are RFIDs!) [But then most (all?) access control and biometric devices are easy to defeat.]](https://image.slidesharecdn.com/johnstonngsitalk2011-141006172113-conversion-gate02/85/Vulnerability-Assessment-Physical-Security-and-Nuclear-Safeguards-24-320.jpg)