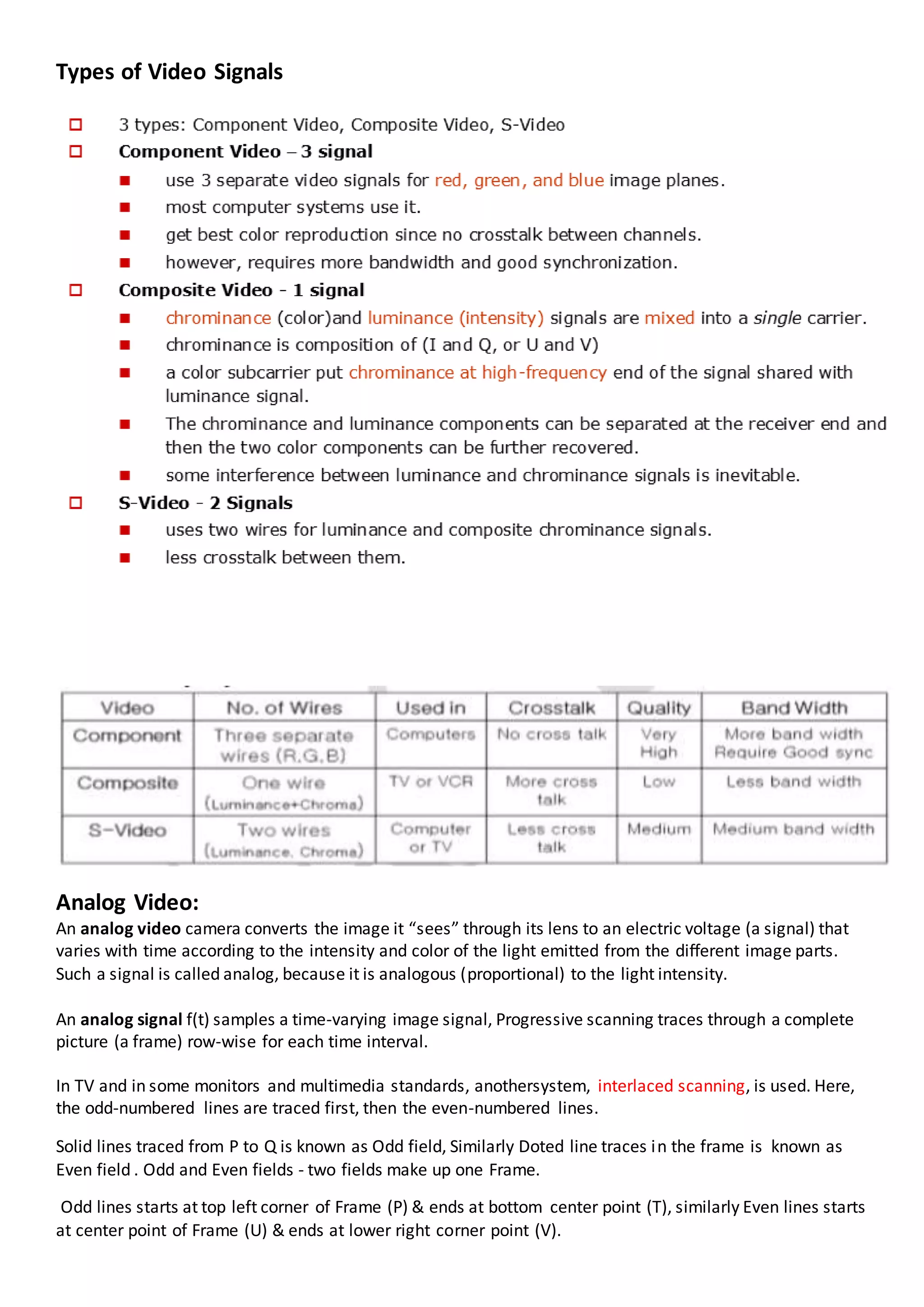

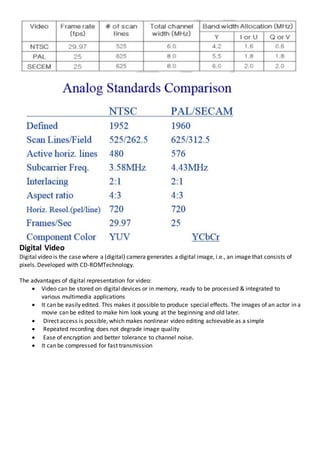

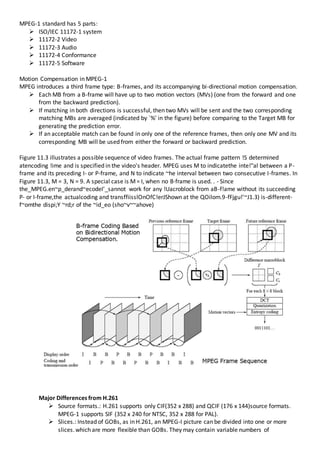

An analog video camera converts light intensity into an electrical signal that varies continuously over time. The main analog video standards are NTSC, PAL, and SECAM. Digital video instead represents each frame as a grid of pixels. To lower the data rate, digital video commonly applies chroma subsampling, which stores color information at a lower resolution than brightness; the usual schemes are 4:4:4 (no subsampling), 4:2:2, and 4:2:0. Popular digital video compression standards include MPEG-1, MPEG-2, H.261, and H.263. These combine intra-coded and inter-coded frames with motion compensation to exploit the temporal redundancy between successive frames and so reduce the bit rate.
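
To make the chroma subsampling schemes concrete, here is a minimal Python sketch that counts the samples stored per frame under each scheme and downsamples one chroma plane for 4:2:0. The function names (`samples_per_frame`, `subsample_420`) are hypothetical, the 352x288 frame size is only an example, and averaging each 2x2 block is one simple assumption about how the reduced chroma values are derived; real encoders may filter and position samples differently.

```python
# Horizontal and vertical chroma subsampling factors for each scheme.
# 4:4:4 keeps full chroma resolution, 4:2:2 halves it horizontally,
# and 4:2:0 halves it both horizontally and vertically.
CHROMA_FACTORS = {
    "4:4:4": (1, 1),
    "4:2:2": (2, 1),
    "4:2:0": (2, 2),
}


def samples_per_frame(width, height, scheme):
    """Return the total number of Y, Cb, and Cr samples in one frame."""
    fx, fy = CHROMA_FACTORS[scheme]
    luma = width * height
    chroma_plane = (width // fx) * (height // fy)
    return luma + 2 * chroma_plane  # one luma plane plus two chroma planes


def subsample_420(plane):
    """Downsample one chroma plane (a list of rows) by averaging 2x2 blocks.

    Assumes the plane has even width and height (illustrative simplification).
    """
    out = []
    for y in range(0, len(plane), 2):
        row = []
        for x in range(0, len(plane[0]), 2):
            total = (plane[y][x] + plane[y][x + 1]
                     + plane[y + 1][x] + plane[y + 1][x + 1])
            row.append(total // 4)  # integer mean of the four samples
        out.append(row)
    return out


if __name__ == "__main__":
    for scheme in CHROMA_FACTORS:
        print(scheme, samples_per_frame(352, 288, scheme))
```

Under these assumptions, 4:2:2 stores two thirds of the samples of 4:4:4 and 4:2:0 stores half, before any further compression is applied.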