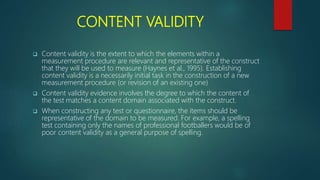

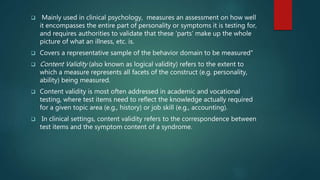

The document discusses the main types of validity in psychometrics and research. It defines validity as the degree to which a test measures what it claims to measure. The types covered are content validity, criterion-related validity (in its concurrent and predictive forms), construct validity, and face validity. Content validity is how well a test's items represent the full domain the test is intended to measure. Criterion-related validity is how closely test scores agree with an external outcome, the criterion. In concurrent validity the criterion is measured at about the same time as the test; in predictive validity it is measured later. Construct validity is whether a test actually measures the theoretical construct it is built on. Face validity is whether a test appears, on superficial inspection by test-takers or other non-experts, to measure what it claims to measure.
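Criterion-related validity is commonly summarized as a validity coefficient: the Pearson correlation between test scores and criterion scores for the same people. Here is a minimal sketch in plain Python. The function name `validity_coefficient` and the score data are hypothetical and serve only as illustration. They are not taken from the document.

```python
import math


def validity_coefficient(test_scores, criterion_scores):
    """Pearson correlation between test scores and an external criterion.

    Hypothetical helper: in criterion-related validation this correlation
    is what is usually reported as the validity coefficient.
    """
    if len(test_scores) != len(criterion_scores):
        raise ValueError("score lists must have the same length")
    n = len(test_scores)
    if n < 2:
        raise ValueError("need at least two paired observations")

    mean_x = sum(test_scores) / n
    mean_y = sum(criterion_scores) / n

    # Sum of cross-products of deviations (numerator of Pearson's r).
    cov = sum((x - mean_x) * (y - mean_y)
              for x, y in zip(test_scores, criterion_scores))
    # Sums of squared deviations for each variable.
    var_x = sum((x - mean_x) ** 2 for x in test_scores)
    var_y = sum((y - mean_y) ** 2 for y in criterion_scores)
    if var_x == 0 or var_y == 0:
        raise ValueError("scores must vary to compute a correlation")

    return cov / math.sqrt(var_x * var_y)


# Hypothetical data: entrance-test scores and first-year GPA for the same
# six students. Because GPA is measured later, this illustrates predictive validity.
test = [52, 61, 47, 70, 58, 66]
gpa = [2.8, 3.1, 2.5, 3.6, 3.0, 3.3]

r = validity_coefficient(test, gpa)
print(f"validity coefficient r = {r:.2f}")
```

The same calculation applies to concurrent validity. Only the timing of the criterion measurement differs. The six made-up observations serve only to show the arithmetic. An actual validation study needs a realistic sample and a criterion that is itself reliable and relevant.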