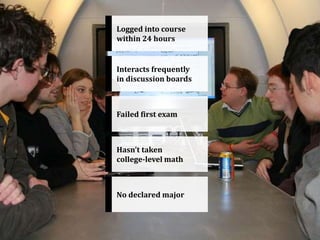

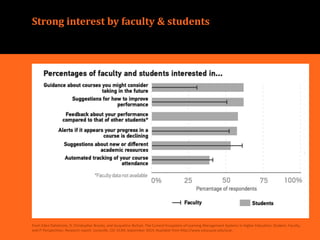

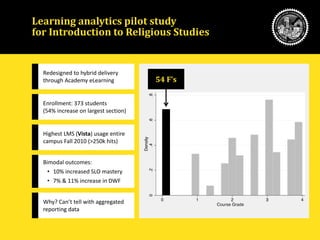

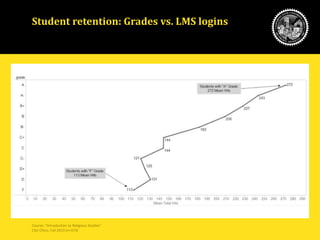

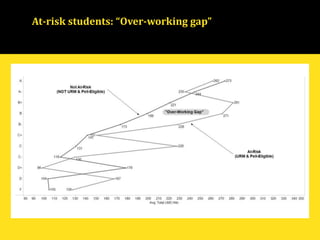

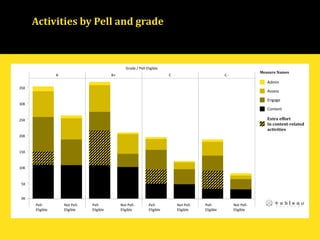

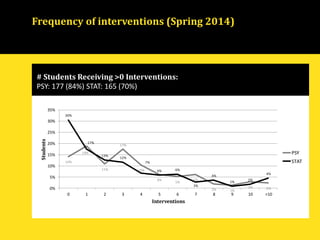

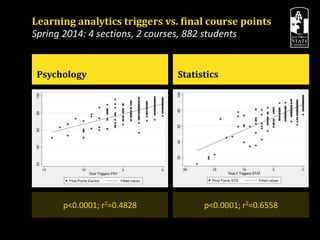

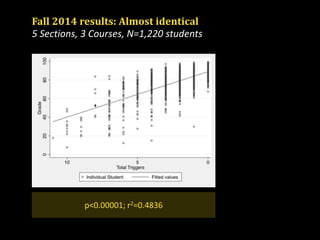

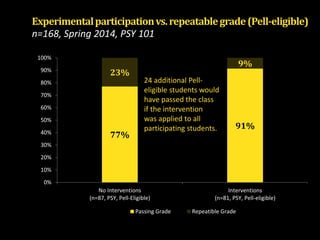

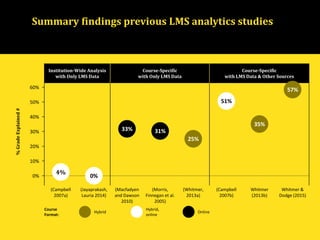

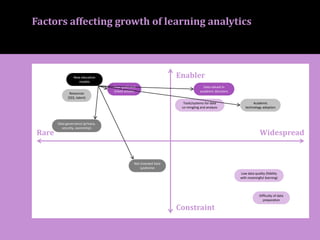

The document discusses how learning analytics can be used in higher education to enhance student learning and improve academic technology. It outlines key research findings, including the effectiveness of learning analytics triggers and interventions, and emphasizes using existing data to inform educational practice. The author also calls for a proactive approach to leading advances in learning analytics within the academic community.