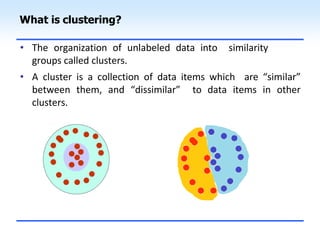

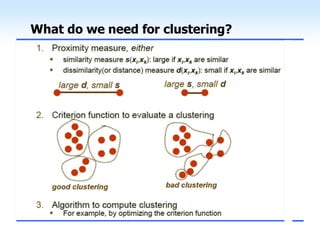

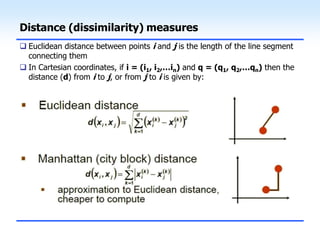

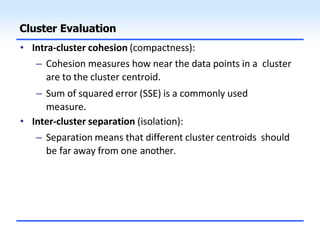

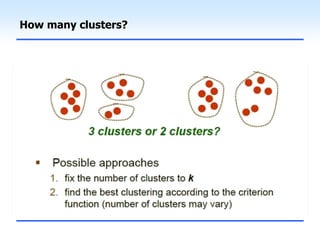

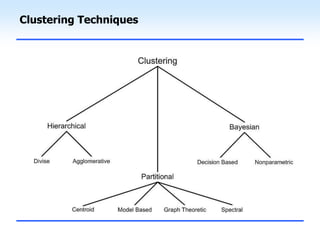

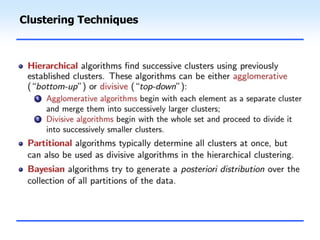

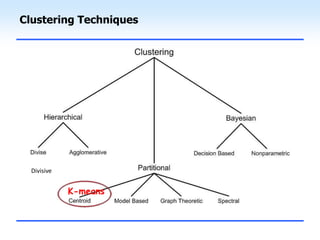

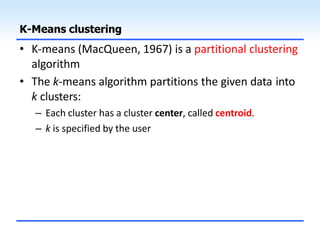

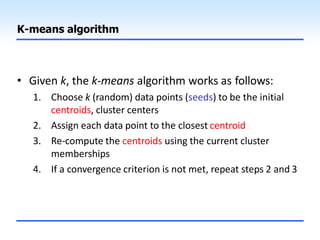

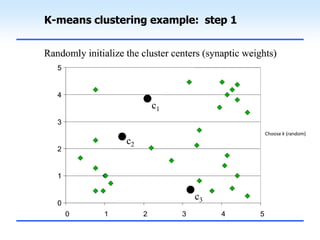

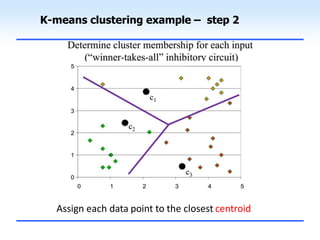

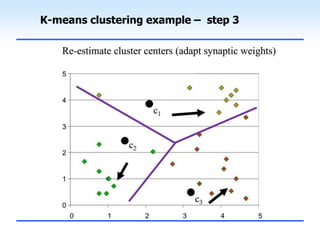

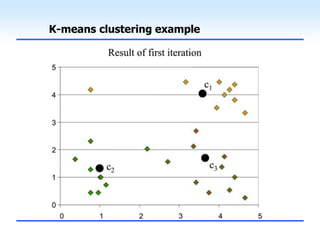

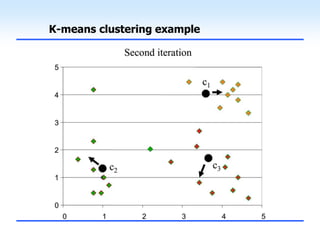

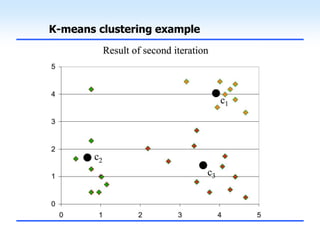

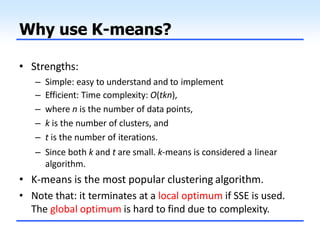

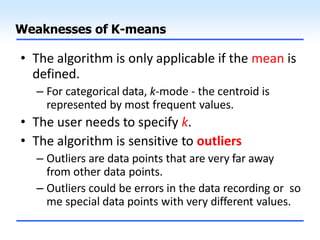

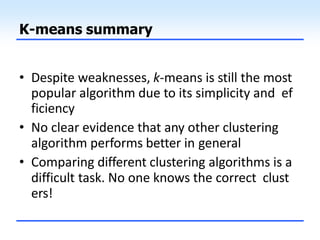

This document discusses unsupervised learning and clustering. It defines unsupervised learning as modeling the underlying structure or distribution of input data that has no corresponding output variables. Clustering is described as organizing unlabeled data into groups of similar items, called clusters. The document focuses on k-means clustering, a method that partitions data into k clusters by assigning each point to its nearest cluster center and minimizing the sum of squared distances between points and their assigned centers. It details the k-means algorithm, walks through examples of its steps, and summarizes the strengths and weaknesses of the method.
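
The k-means procedure summarized above can be sketched in a few lines of plain Python. This is a minimal illustration of the standard assign-then-update loop (often called Lloyd's algorithm), not necessarily the exact formulation used in the slides. The function names (`k_means`, `squared_distance`, `mean_point`), the `max_iters` and `seed` parameters, the choice to initialize centers by sampling k input points, and the sample data are all assumptions made for this example.

```python
import random


def squared_distance(p, q):
    """Squared Euclidean distance between two points of equal dimension."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def mean_point(points):
    """Coordinate-wise mean of a non-empty list of points."""
    n = len(points)
    return tuple(sum(coords) / n for coords in zip(*points))


def k_means(points, k, max_iters=100, seed=0):
    """Partition `points` into k clusters; returns (centers, assignments).

    Assumption: initial centers are k distinct input points chosen at random.
    """
    rng = random.Random(seed)
    centers = rng.sample(points, k)
    assignments = None

    for _ in range(max_iters):
        # Assignment step: attach each point to its nearest center.
        new_assignments = [
            min(range(k), key=lambda j: squared_distance(p, centers[j]))
            for p in points
        ]
        # Stop once no point changes cluster.
        if new_assignments == assignments:
            break
        assignments = new_assignments

        # Update step: move each center to the mean of its members.
        for j in range(k):
            members = [p for p, a in zip(points, assignments) if a == j]
            if members:  # keep the old center if a cluster ends up empty
                centers[j] = mean_point(members)

    return centers, assignments


# Hypothetical sample data: two visibly separated groups of 2-D points.
sample = [(1.0, 1.0), (1.5, 2.0), (1.0, 0.5),
          (8.0, 8.0), (9.0, 9.5), (8.5, 9.0)]
centers, labels = k_means(sample, k=2)
print(centers, labels)
```

The result depends on the initial centers, which is one of the commonly cited weaknesses of k-means. On less cleanly separated data, different seeds can yield different partitions, so running the sketch with several seeds and keeping the result with the smallest total squared distance is a common workaround.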