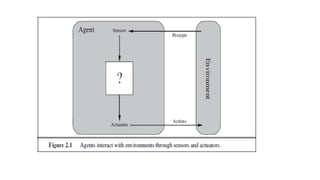

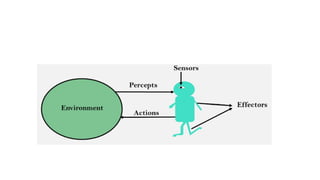

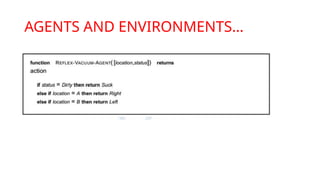

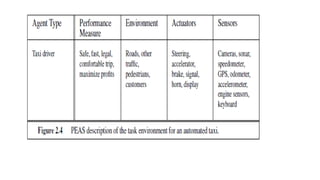

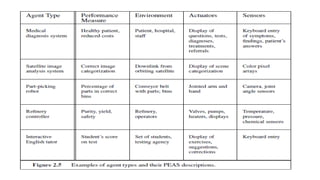

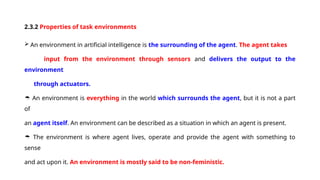

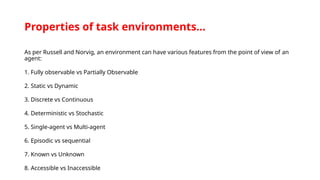

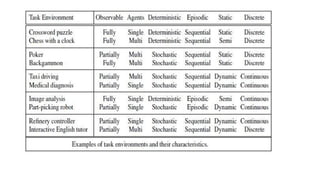

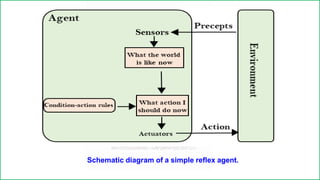

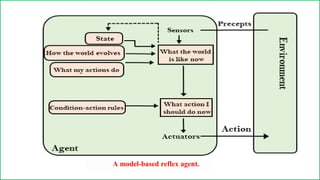

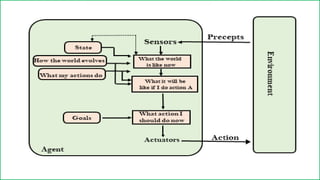

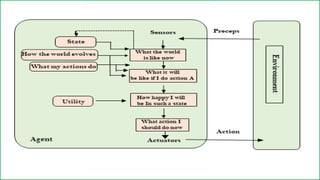

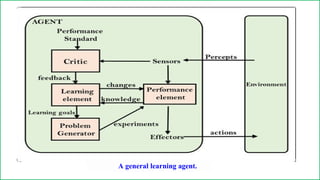

The document discusses intelligent agents, defining them as entities that perceive their environment through sensors and act upon it through actuators. It describes the different kinds of agents and the environments they operate in, and covers rationality, learning, and autonomy, emphasizing how agents can adapt and improve their performance over time. It also categorizes agents by intelligence and capability, ranging from simple reflex agents to learning agents, and outlines the importance of task environments in agent design.
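
To make the perceive-then-act cycle concrete, here is a minimal sketch of a simple reflex agent, the least capable category mentioned above. The two-square vacuum world, the names `reflex_vacuum_agent` and `run`, and the action labels are illustrative assumptions and are not taken from the slides.

```python
from typing import Dict, Tuple

# A percept is what the sensors report: (current square, its status).
# The two squares "A" and "B" are a hypothetical toy environment.
Percept = Tuple[str, str]


def reflex_vacuum_agent(percept: Percept) -> str:
    """Map the current percept directly to an action (no memory, no learning)."""
    location, status = percept
    if status == "Dirty":
        return "Suck"
    # The square is clean, so move to the other one.
    return "Right" if location == "A" else "Left"


def run(world: Dict[str, str], location: str, steps: int) -> int:
    """Run the agent for a fixed number of steps; return how many squares it cleaned."""
    cleaned = 0
    for _ in range(steps):
        percept = (location, world[location])  # sensing
        action = reflex_vacuum_agent(percept)  # deciding
        # Acting: the environment changes in response to the action.
        if action == "Suck":
            world[location] = "Clean"
            cleaned += 1
        elif action == "Right":
            location = "B"
        else:
            location = "A"
    return cleaned


if __name__ == "__main__":
    world = {"A": "Dirty", "B": "Dirty"}
    print(run(world, "A", steps=4), world)
```

The number of squares cleaned serves as a crude performance measure here. A learning agent would differ by adjusting its percept-to-action mapping based on feedback about that measure, rather than keeping it fixed as this sketch does.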