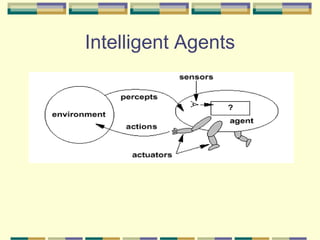

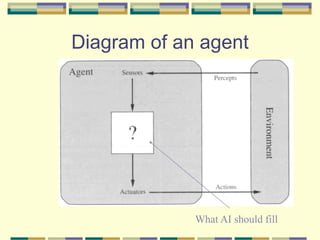

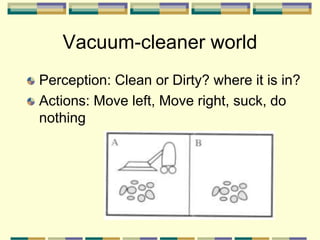

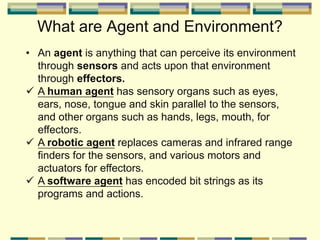

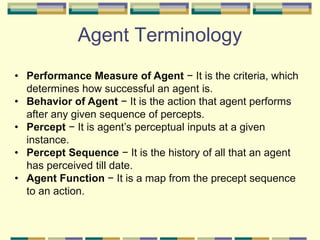

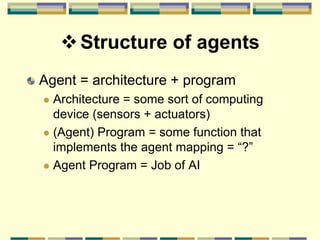

The document discusses the main types of intelligent agents in artificial intelligence. It defines an agent as anything that perceives its environment through sensors and acts upon that environment through effectors. It then describes the following types of agent programs:

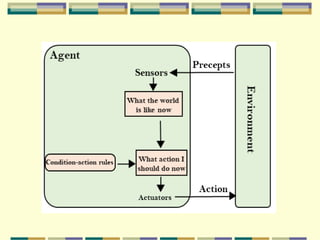

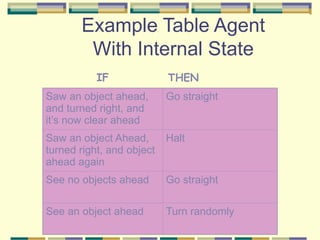

1) Simple reflex agents that take actions based solely on the current percept, ignoring the percept history.
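As a rough illustration of a simple reflex agent, the sketch below assumes a hypothetical two-square vacuum world in which a percept is a `(location, status)` pair. The square names, action names, and the function name are assumptions made for this example and do not come from the document.

```python
# Hypothetical two-square vacuum world: a percept is (location, status).
def simple_reflex_vacuum_agent(percept):
    """Pick an action from the current percept only; no history is kept."""
    location, status = percept
    if status == "Dirty":
        return "Suck"
    # The square is clean, so move to the other square.
    if location == "A":
        return "Right"
    return "Left"


print(simple_reflex_vacuum_agent(("A", "Dirty")))  # Suck
print(simple_reflex_vacuum_agent(("A", "Clean")))  # Right
```

Because the function has no memory, it keeps moving back and forth even after both squares are clean.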

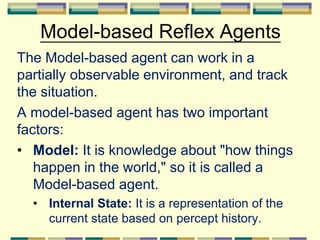

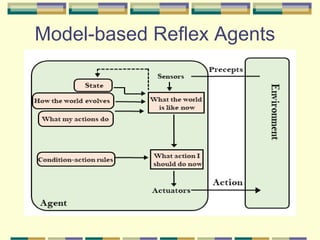

2) Model-based reflex agents that maintain an internal state based on past percepts, allowing them to operate in partially observable environments.
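One way to picture a model-based reflex agent is a minimal sketch in the same hypothetical two-square vacuum world, where the agent can only sense the square it is standing on. The class name, the `model` dictionary, and the assumption that sucking always succeeds are illustrative choices, not details from the document.

```python
class ModelBasedVacuumAgent:
    """Reflex agent that remembers what it has learned about each square."""

    def __init__(self):
        # Internal state: last known status per square (None = never observed).
        self.model = {"A": None, "B": None}

    def act(self, percept):
        location, status = percept
        # After this step the current square is clean either way: it was
        # already clean, or we suck now (assumed to always succeed).
        self.model[location] = "Clean"
        if status == "Dirty":
            return "Suck"
        # Stop once every square is known to be clean.
        if all(s == "Clean" for s in self.model.values()):
            return "NoOp"
        return "Right" if location == "A" else "Left"


agent = ModelBasedVacuumAgent()
for percept in [("A", "Dirty"), ("A", "Clean"), ("B", "Clean")]:
    print(agent.act(percept))
```

The stored state lets the agent stop once both squares are known to be clean, even though it only ever observes one square at a time.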

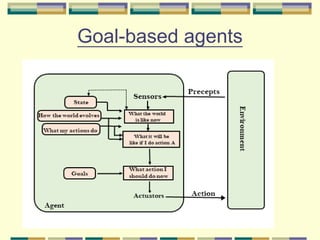

3) Goal-based agents that choose actions to achieve goals, using search and planning to consider possibly long sequences of actions.
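The planning step of a goal-based agent can be sketched as a search over action sequences. The example below uses breadth-first search on a small, made-up state graph. The states, actions, and the `plan` function are hypothetical, and breadth-first search is only one of many planning methods such an agent might use.

```python
from collections import deque

# Hypothetical state graph: each state maps to {action: next_state}.
TRANSITIONS = {
    "start": {"go_left": "hall", "go_right": "dead_end"},
    "hall": {"open_door": "goal"},
    "dead_end": {},
    "goal": {},
}


def plan(start, goal, transitions):
    """Breadth-first search for a shortest action sequence from start to goal."""
    frontier = deque([(start, [])])
    visited = {start}
    while frontier:
        state, actions = frontier.popleft()
        if state == goal:
            return actions
        for action, next_state in transitions[state].items():
            if next_state not in visited:
                visited.add(next_state)
                frontier.append((next_state, actions + [action]))
    return None  # the goal cannot be reached


print(plan("start", "goal", TRANSITIONS))  # ['go_left', 'open_door']
```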

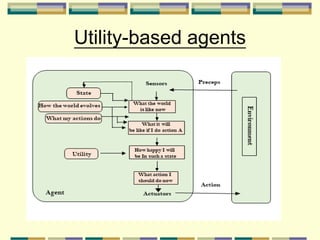

4) Utility-based agents that act not just to achieve goals but to maximize utility, a measure of how desirable each outcome is.
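To show how utility can separate two actions that both reach the goal, here is a minimal sketch that picks the action with the highest expected utility. The outcome table, its probabilities and utility values, and the function names are invented for illustration only.

```python
# Hypothetical outcome model: action -> list of (probability, utility) pairs.
OUTCOMES = {
    "fast_route": [(0.7, 10.0), (0.3, -5.0)],
    "safe_route": [(1.0, 6.0)],
}


def expected_utility(outcomes):
    """Probability-weighted average utility of one action's outcomes."""
    return sum(p * u for p, u in outcomes)


def choose_action(outcome_model):
    """Return the action whose expected utility is highest."""
    return max(outcome_model, key=lambda a: expected_utility(outcome_model[a]))


print(choose_action(OUTCOMES))  # safe_route
```

Here `fast_route` has an expected utility of 0.7 × 10 + 0.3 × (−5) = 5.5, which is lower than the 6.0 of `safe_route`, so the agent prefers the safe route.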

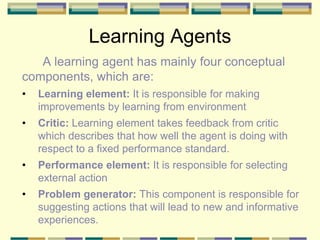

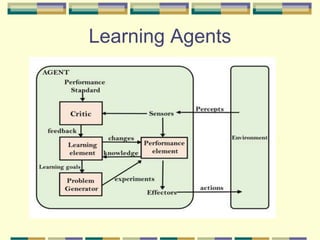

5) Learning agents that can