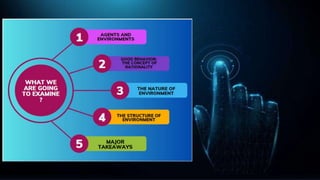

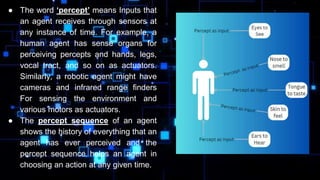

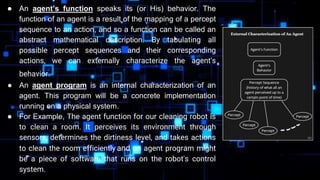

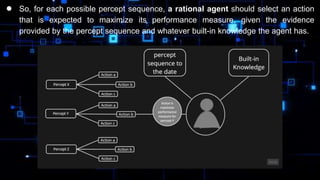

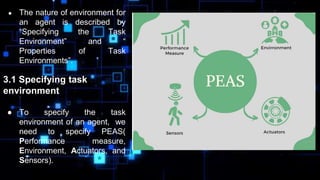

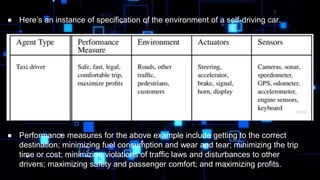

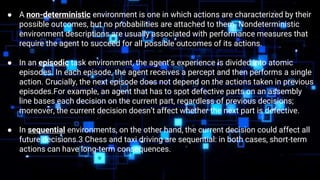

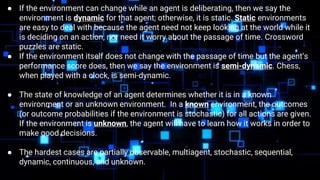

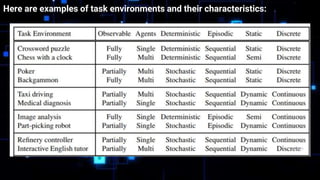

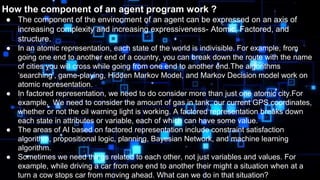

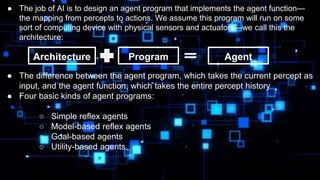

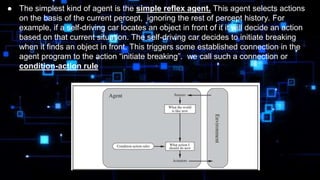

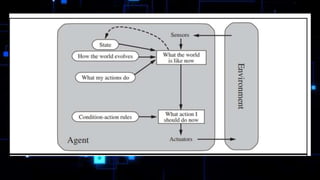

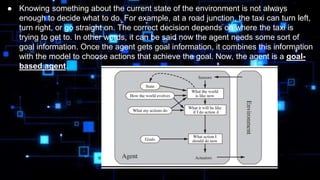

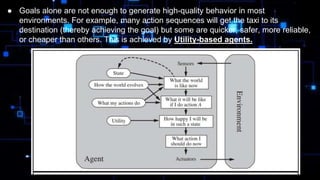

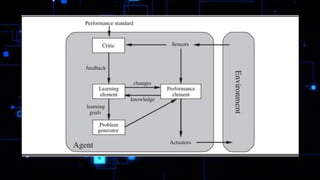

The document discusses the principles of intelligent agents in artificial intelligence, emphasizing how agents perceive their environment through sensors and act through actuators. It highlights the importance of rationality, performance measures, and the nature of task environments, detailing various agent types including simple reflex, model-based, goal-based, and utility-based agents. Additionally, it underscores the significance of learning and adapting based on prior knowledge and percept sequences to enhance an agent's autonomy and performance.
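As a rough illustration of the sensor, actuator, and performance-measure ideas summarized above, the following minimal sketch implements a simple reflex agent in a two-square vacuum world. The world, the names `VacuumWorld`, `simple_reflex_agent`, and `run`, and the scoring rule (one point per clean square per time step) are all assumptions chosen for illustration; they are not taken from the slides.

```python
from dataclasses import dataclass, field


@dataclass
class VacuumWorld:
    """Hypothetical task environment: squares "A" and "B", each Dirty or Clean."""
    status: dict = field(default_factory=lambda: {"A": "Dirty", "B": "Dirty"})
    location: str = "A"
    score: int = 0  # assumed performance measure: +1 per clean square per step

    def percept(self):
        # Sensor: the agent sees only its own location and that square's status.
        return self.location, self.status[self.location]

    def execute(self, action):
        # Actuator: apply the chosen action, then update the performance score.
        if action == "Suck":
            self.status[self.location] = "Clean"
        elif action == "Right":
            self.location = "B"
        elif action == "Left":
            self.location = "A"
        self.score += sum(s == "Clean" for s in self.status.values())


def simple_reflex_agent(percept):
    """Choose an action from the current percept only (no memory, no model)."""
    location, status = percept
    if status == "Dirty":
        return "Suck"
    return "Right" if location == "A" else "Left"


def run(world, agent, steps):
    """Run the perceive-act loop for a fixed number of steps; return the actions."""
    actions = []
    for _ in range(steps):
        action = agent(world.percept())
        world.execute(action)
        actions.append(action)
    return actions
```

Calling `run(VacuumWorld(), simple_reflex_agent, 4)` yields the actions `Suck`, `Right`, `Suck`, `Left`. Because this agent ignores its percept history, it keeps shuttling between the two squares even after both are clean. A model-based, goal-based, or utility-based agent would need extra internal state or an explicit objective to avoid that.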