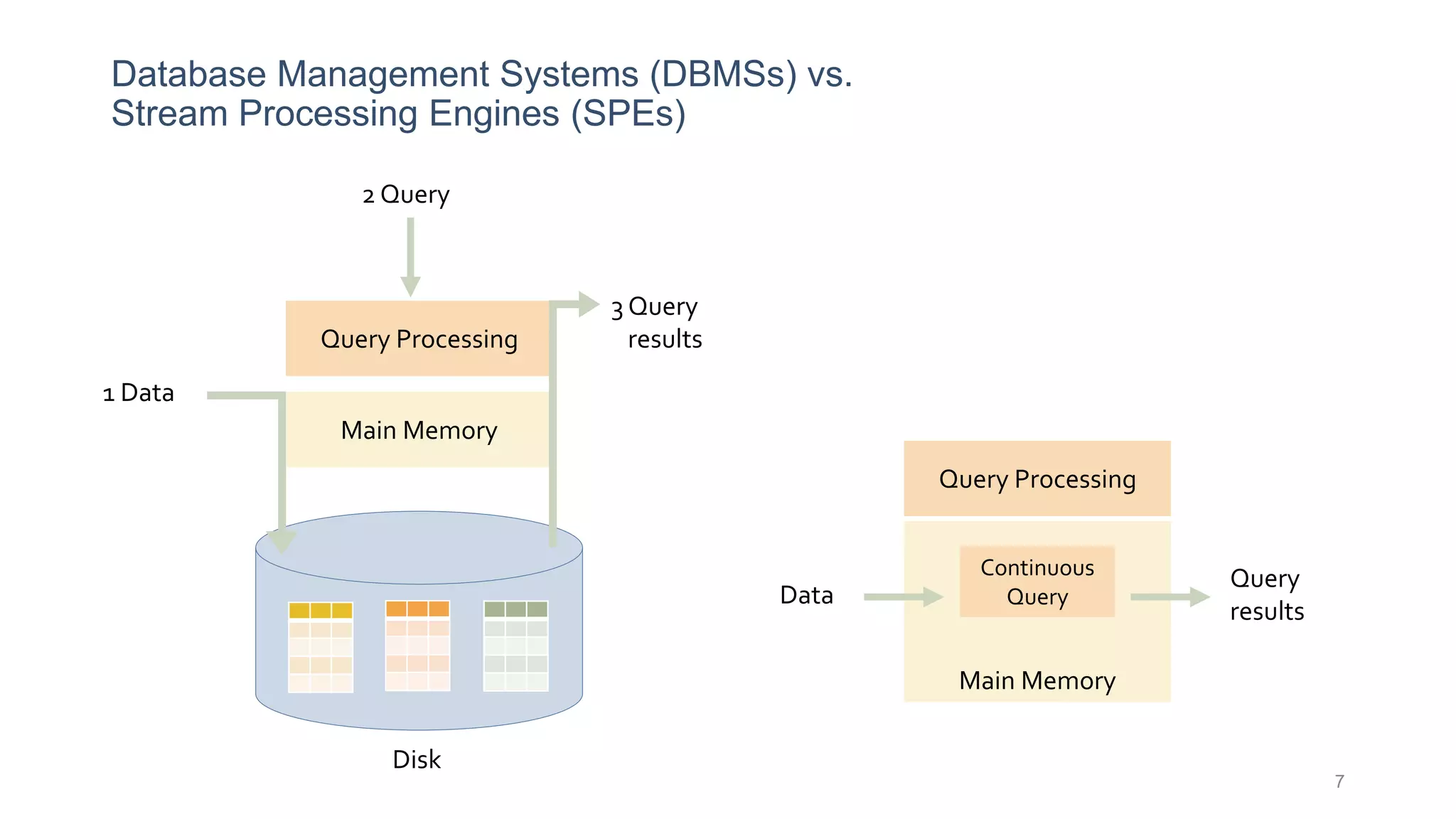

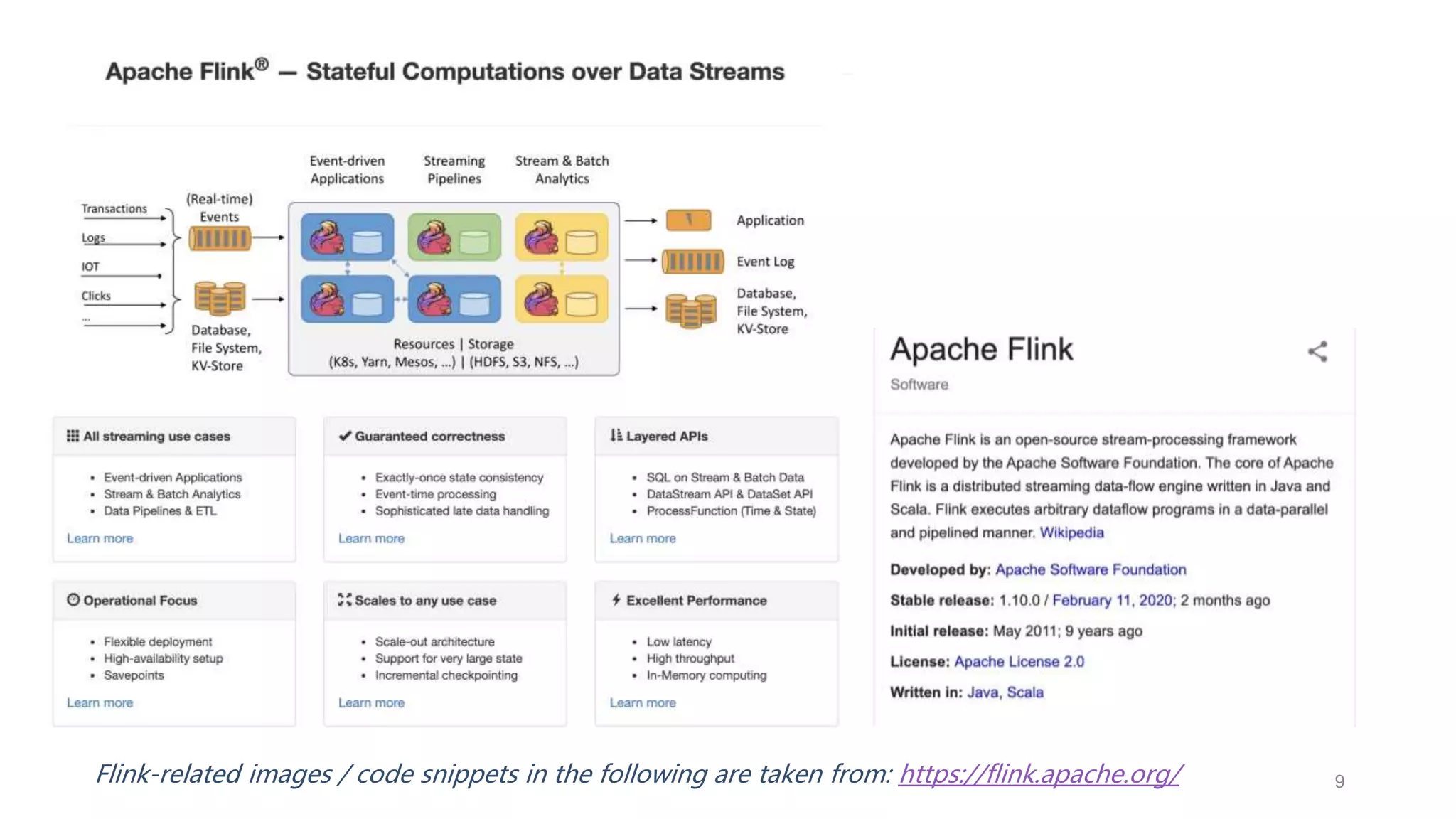

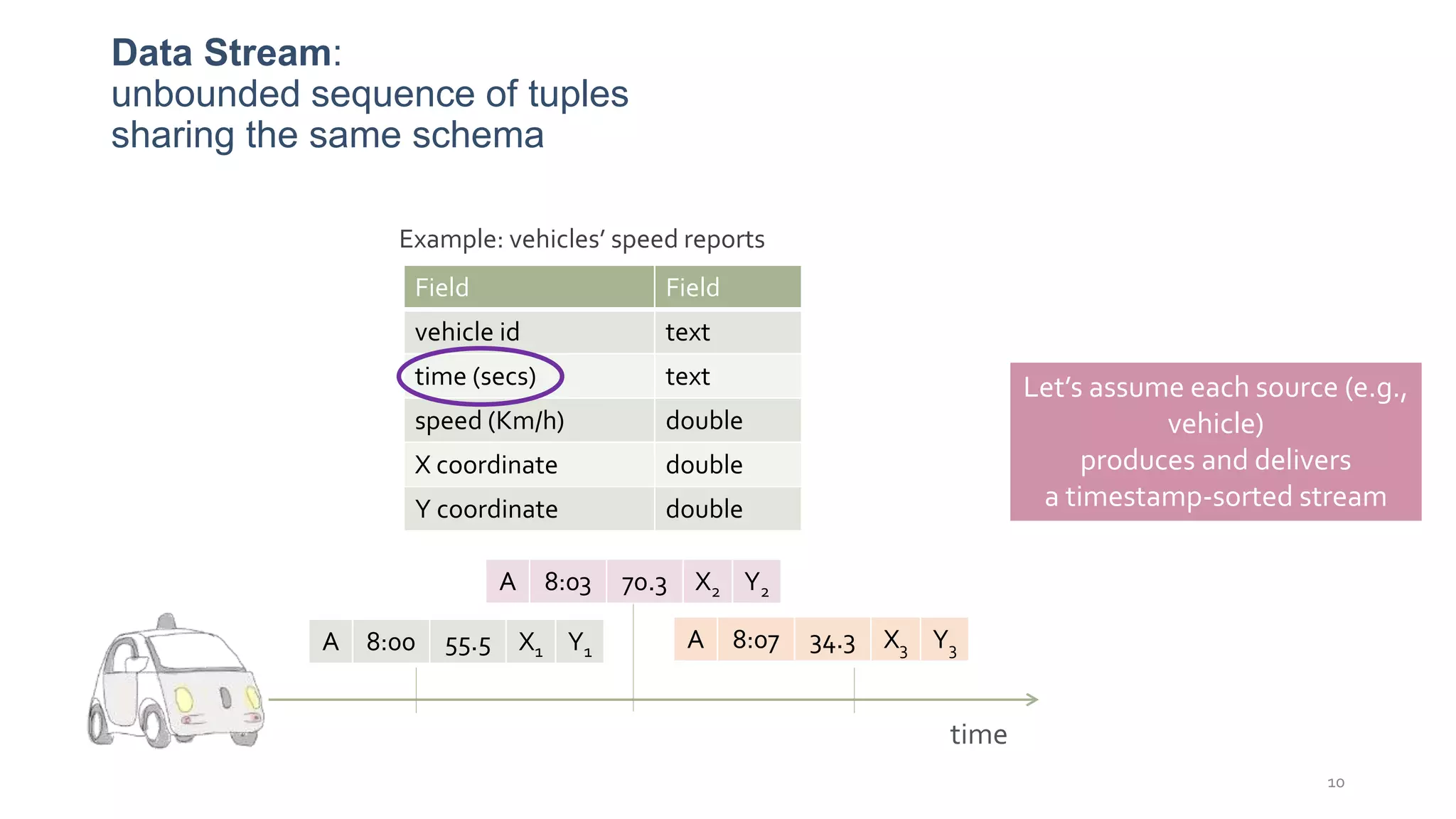

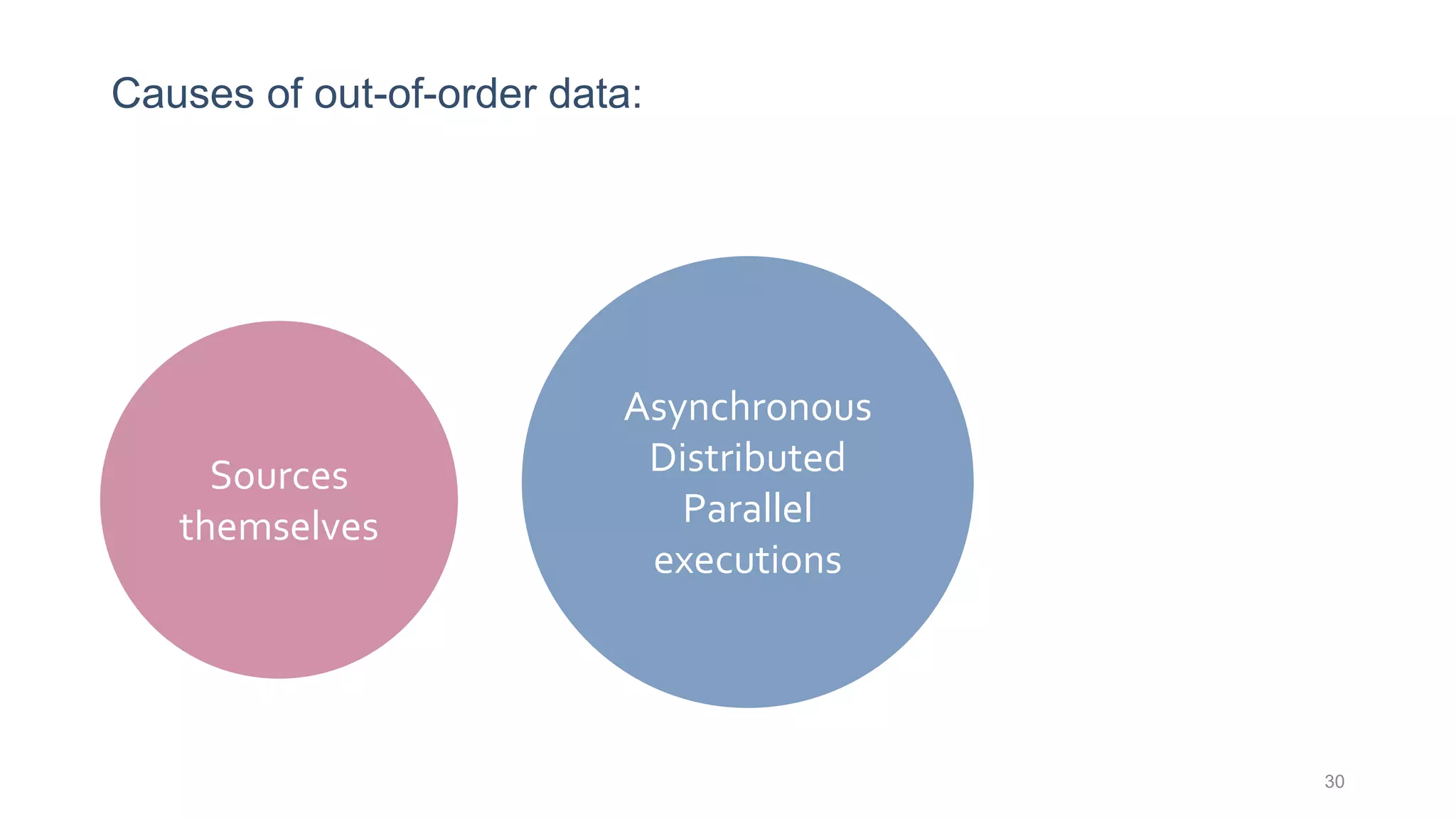

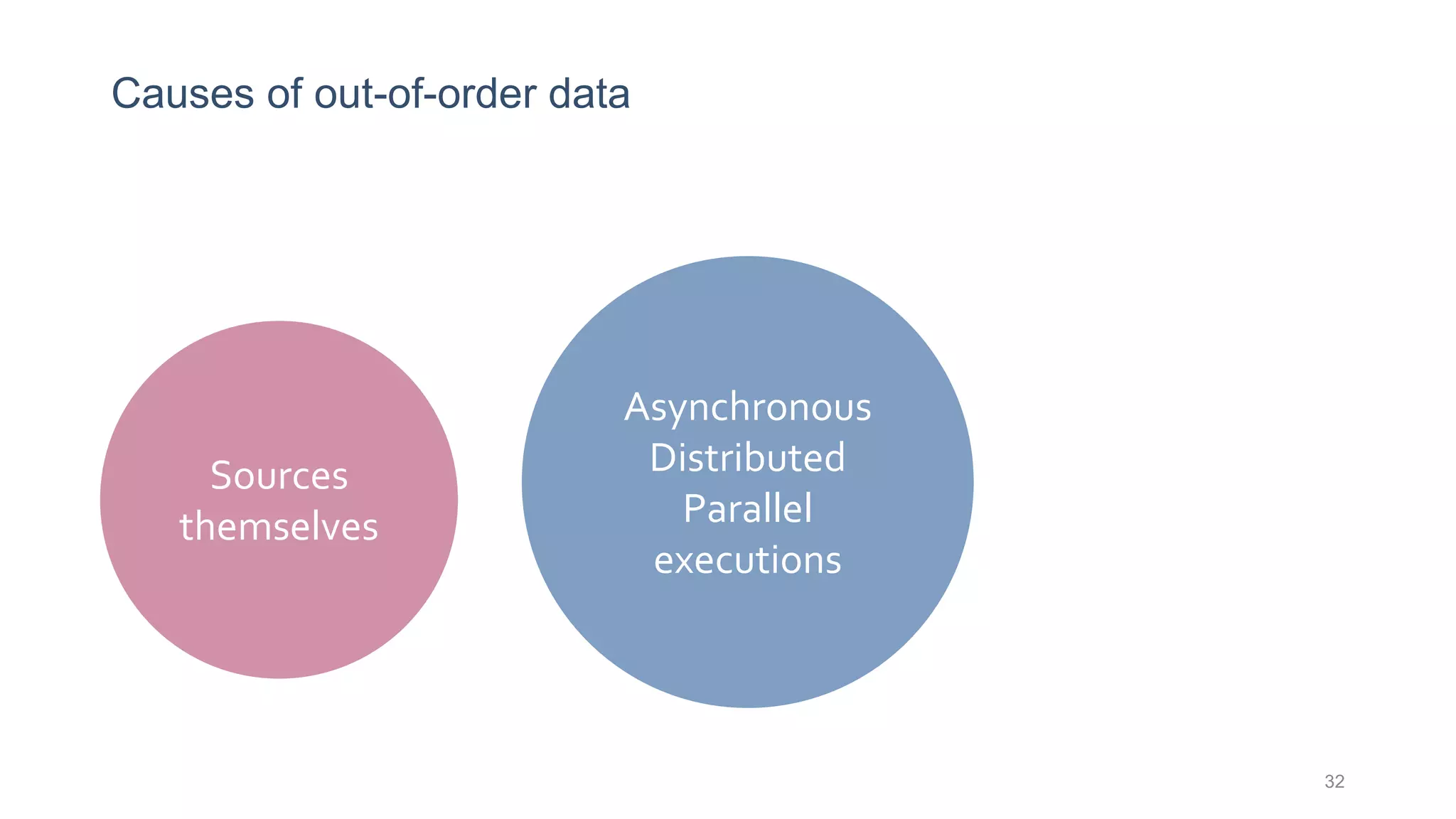

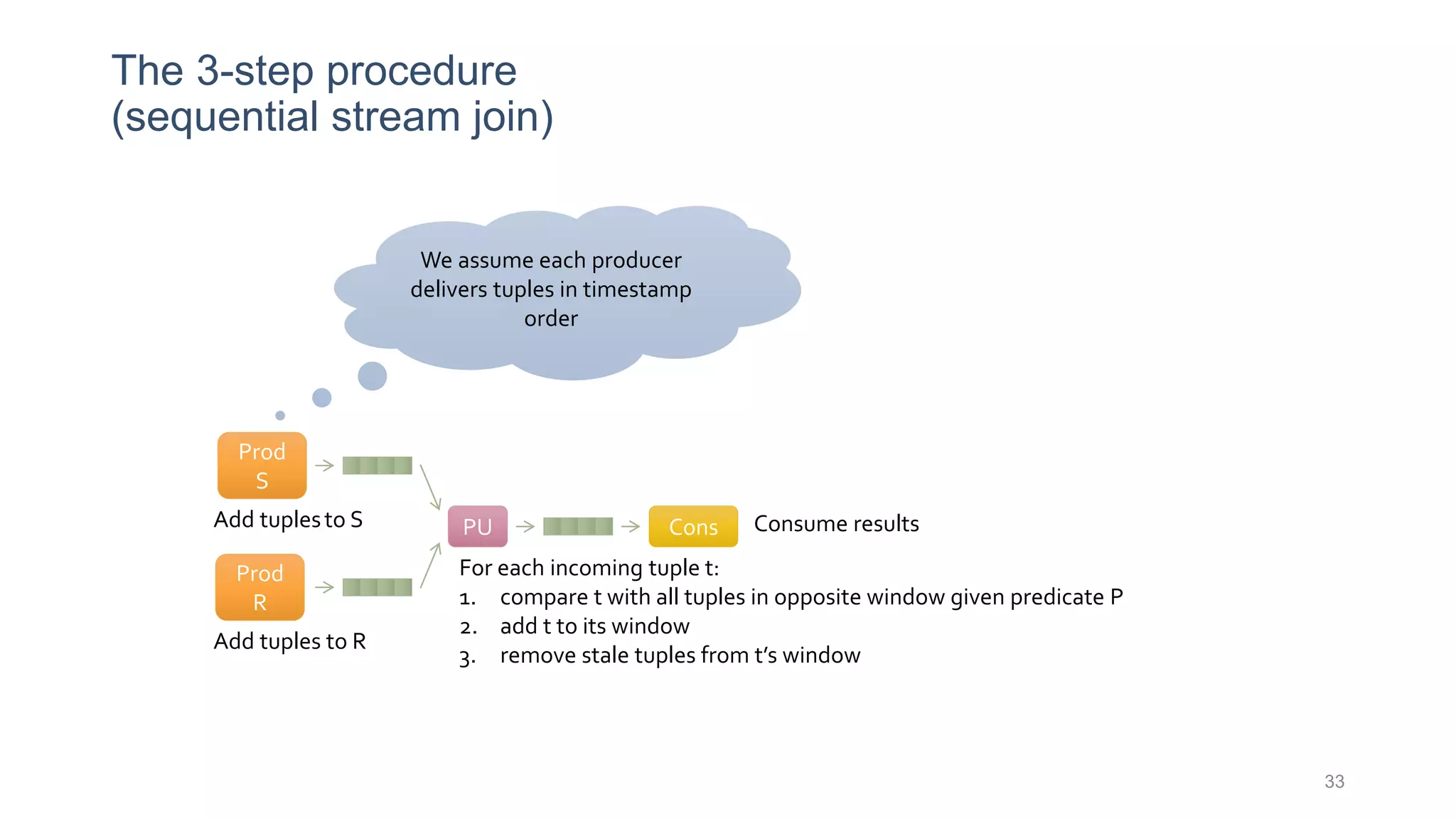

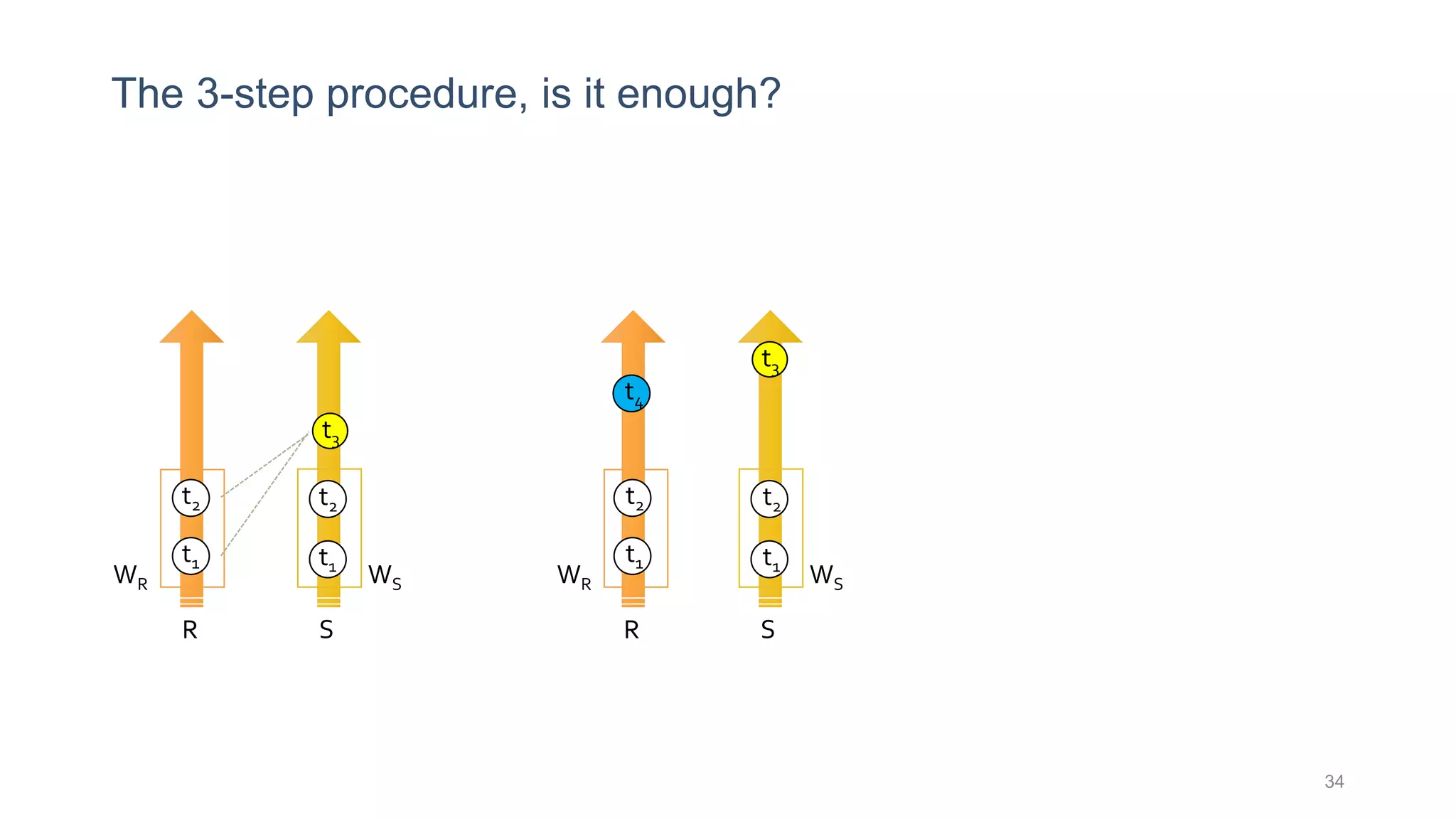

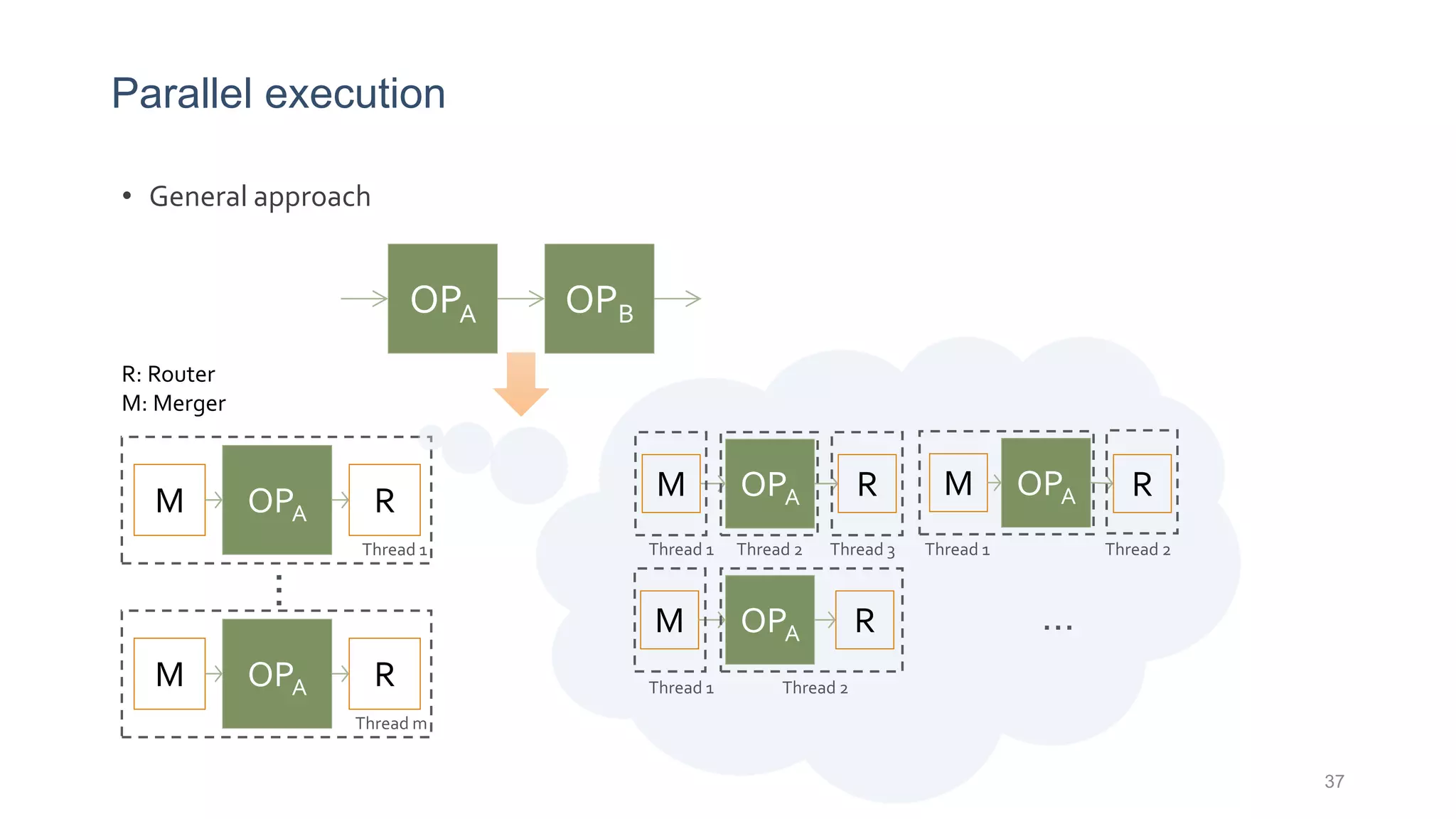

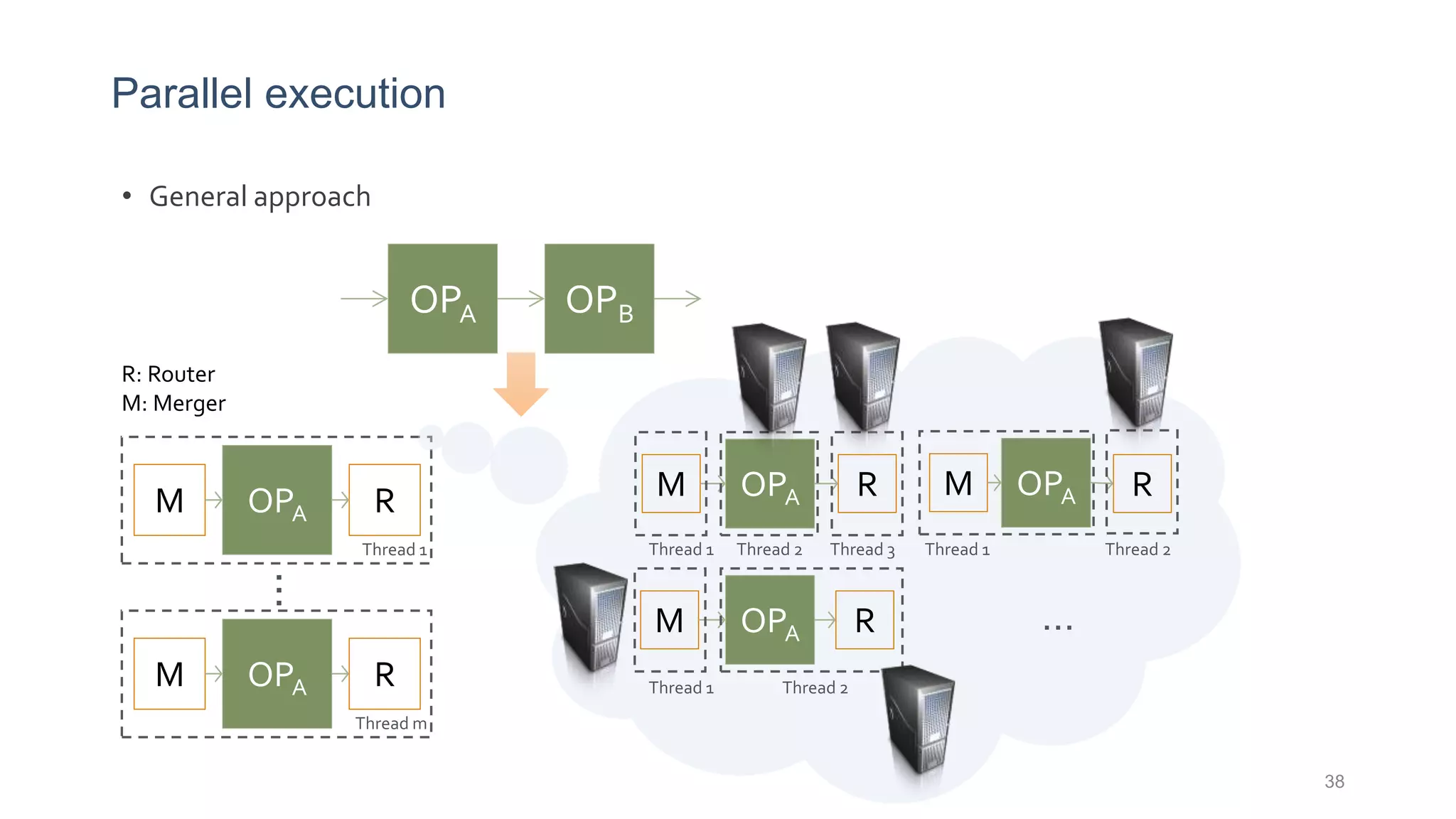

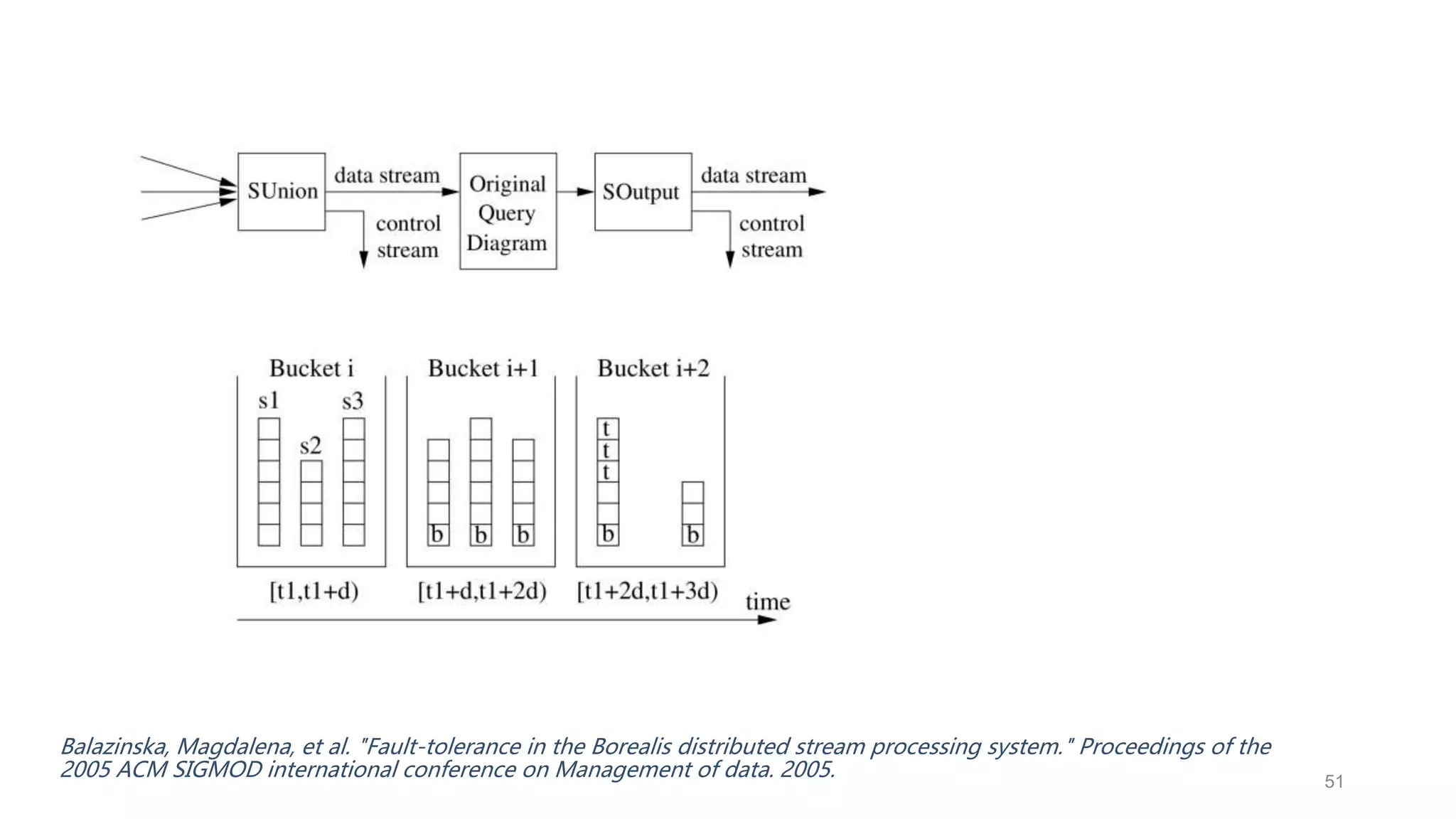

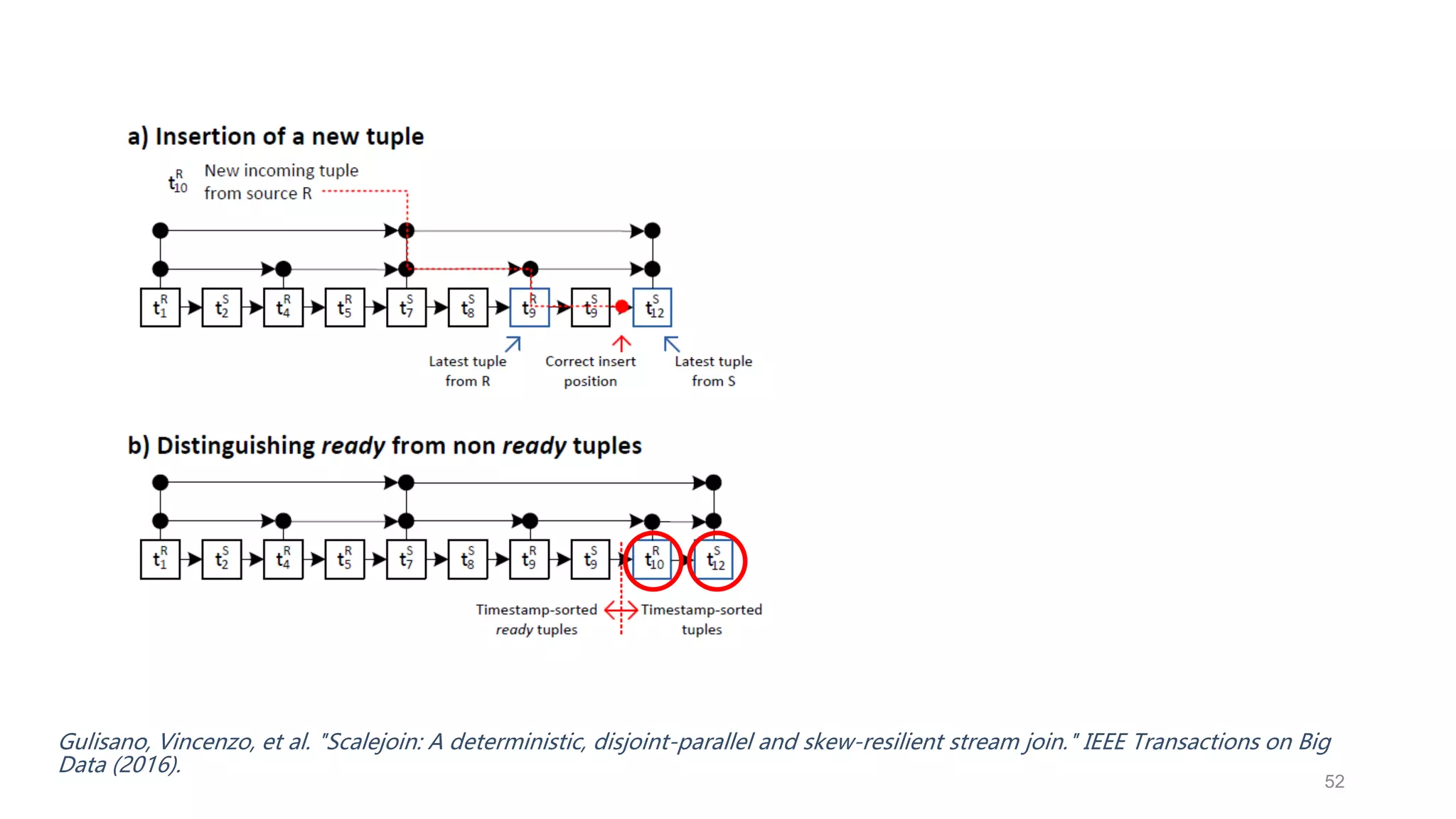

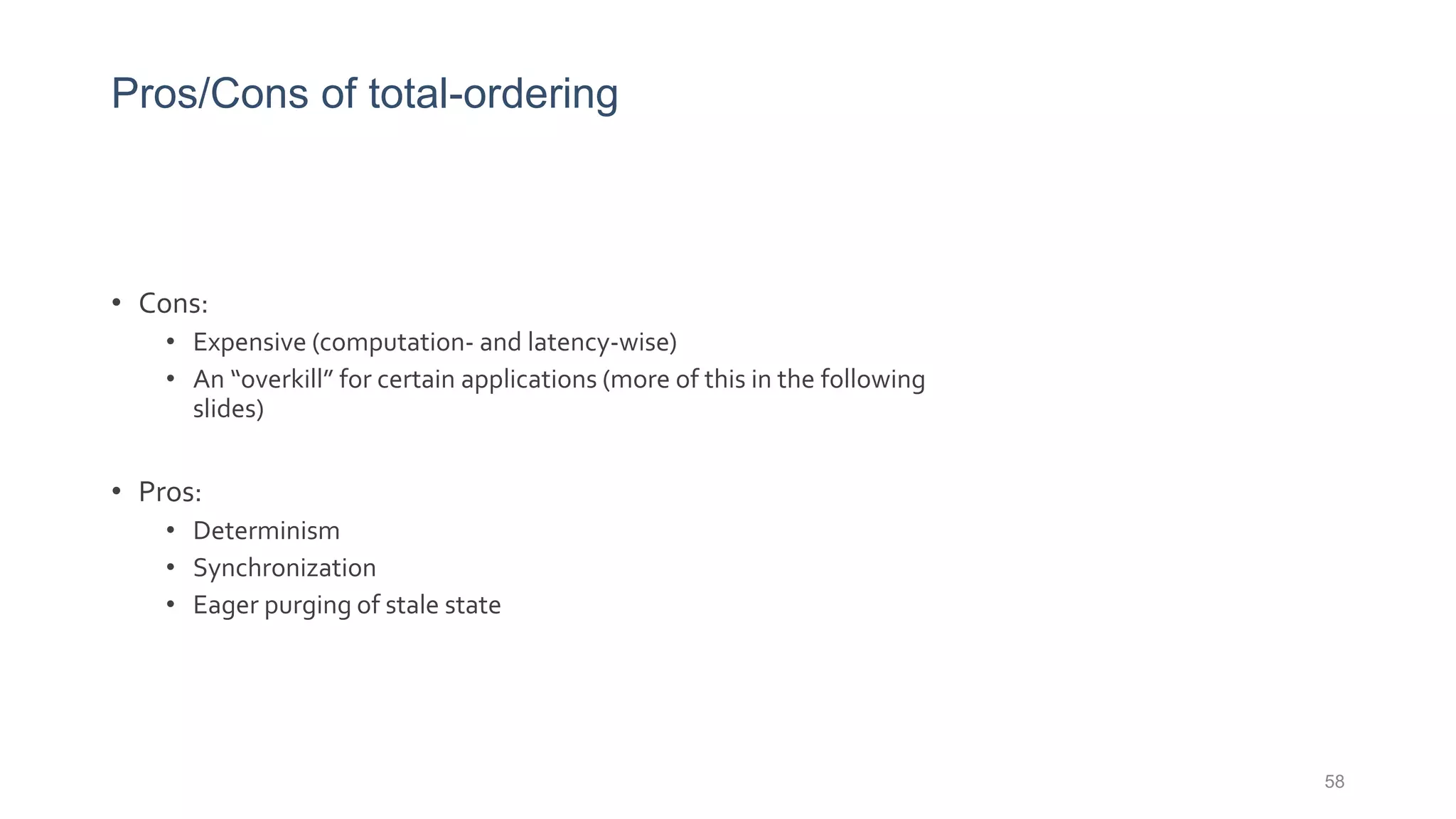

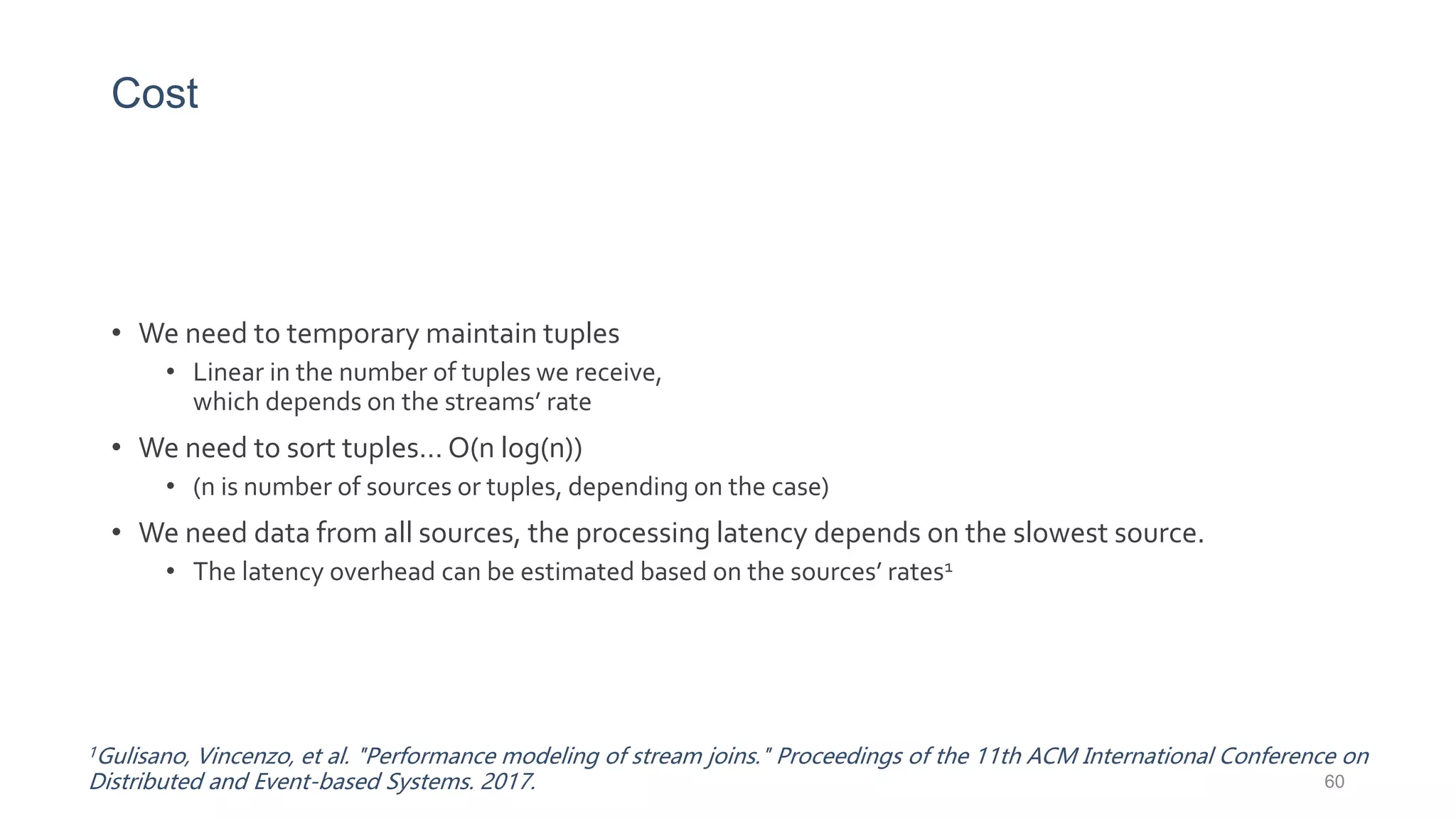

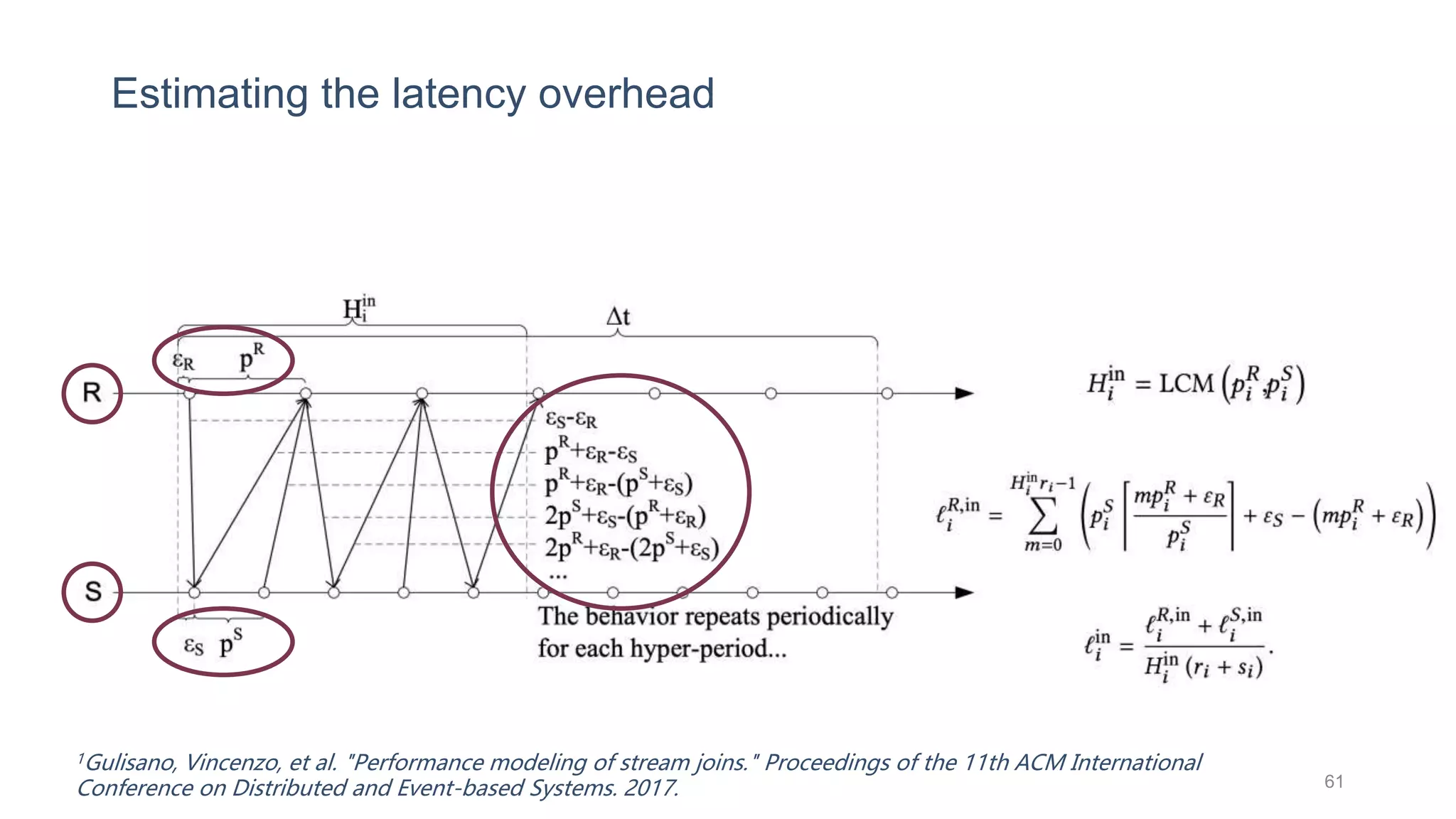

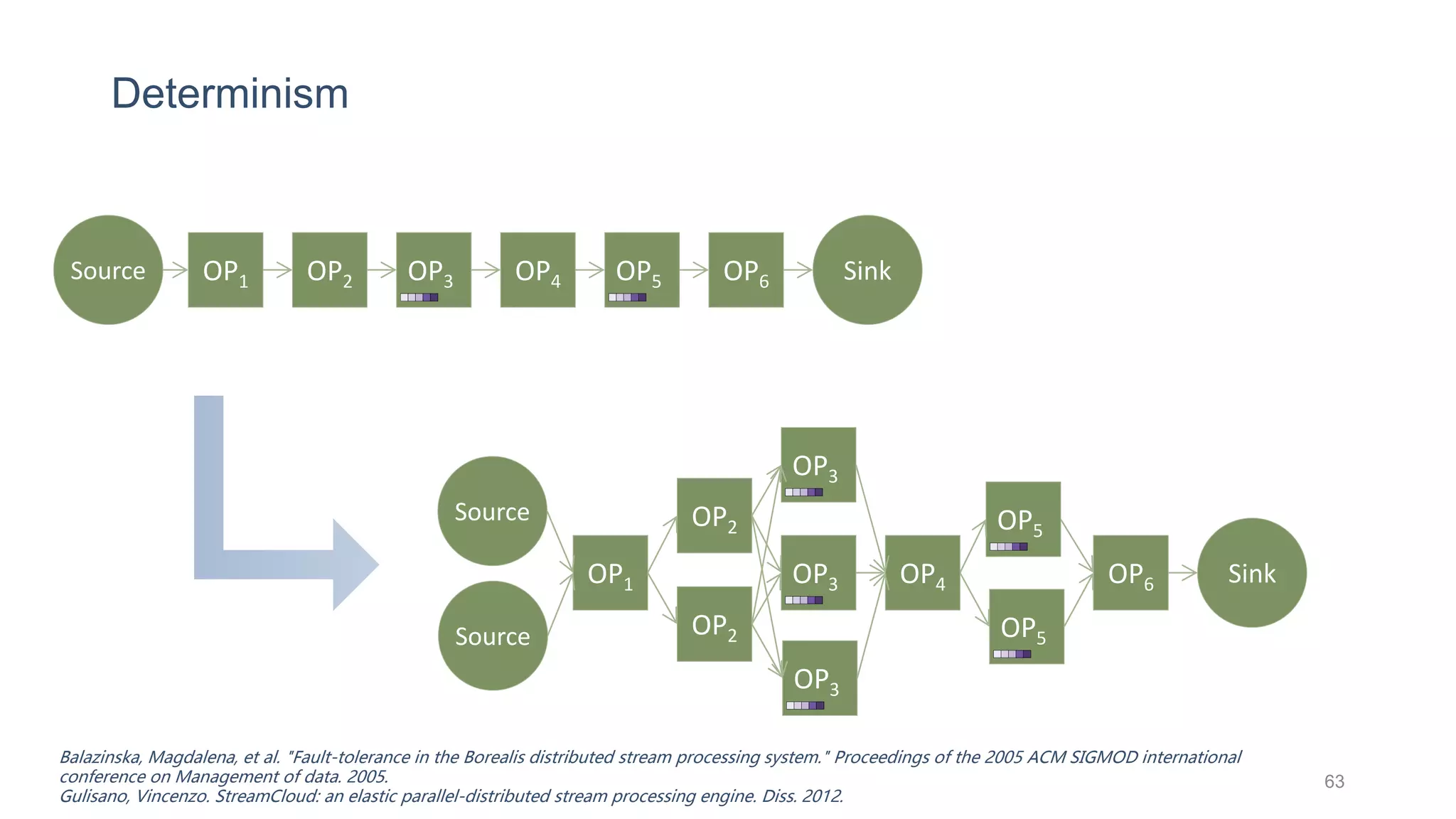

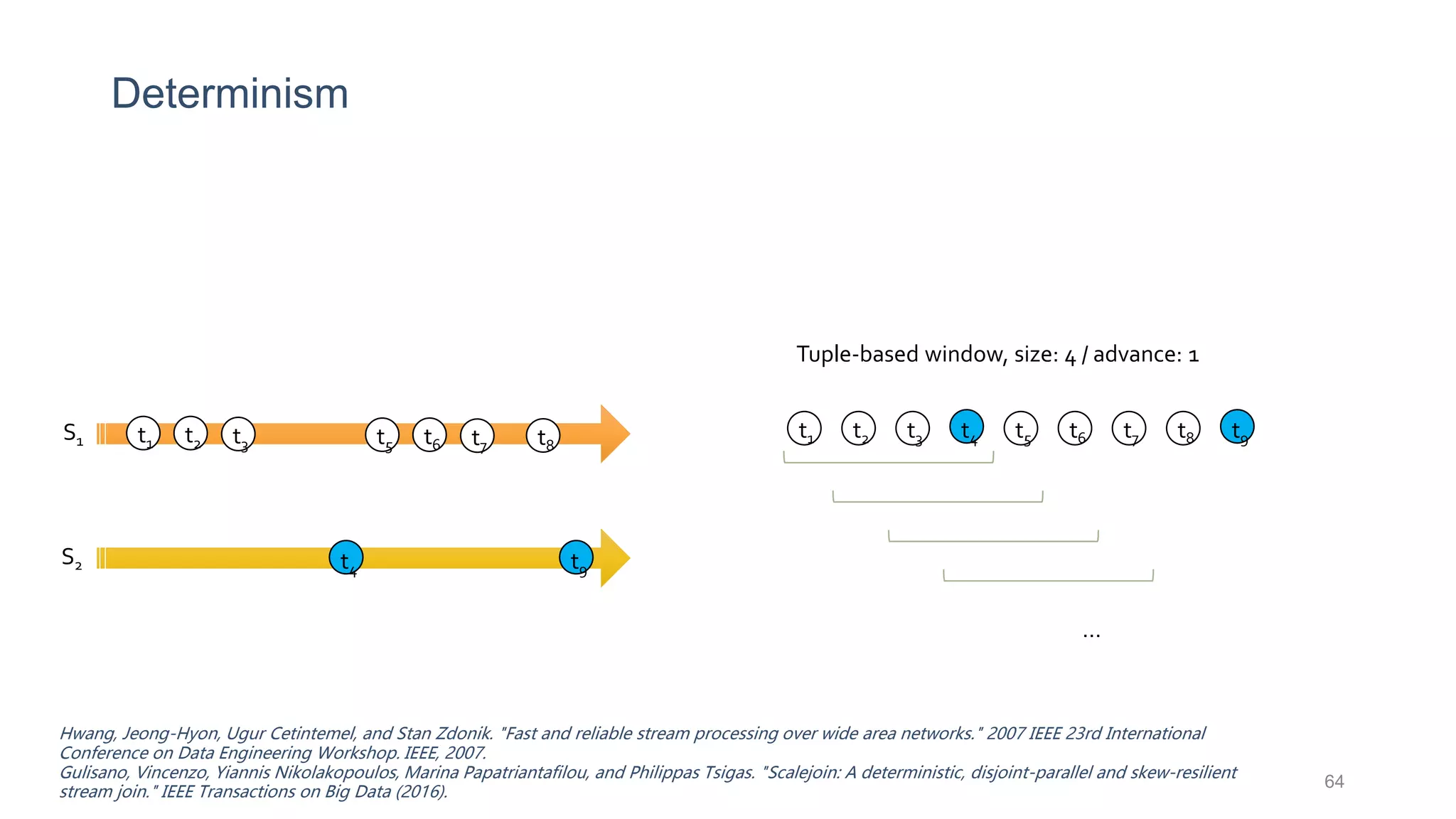

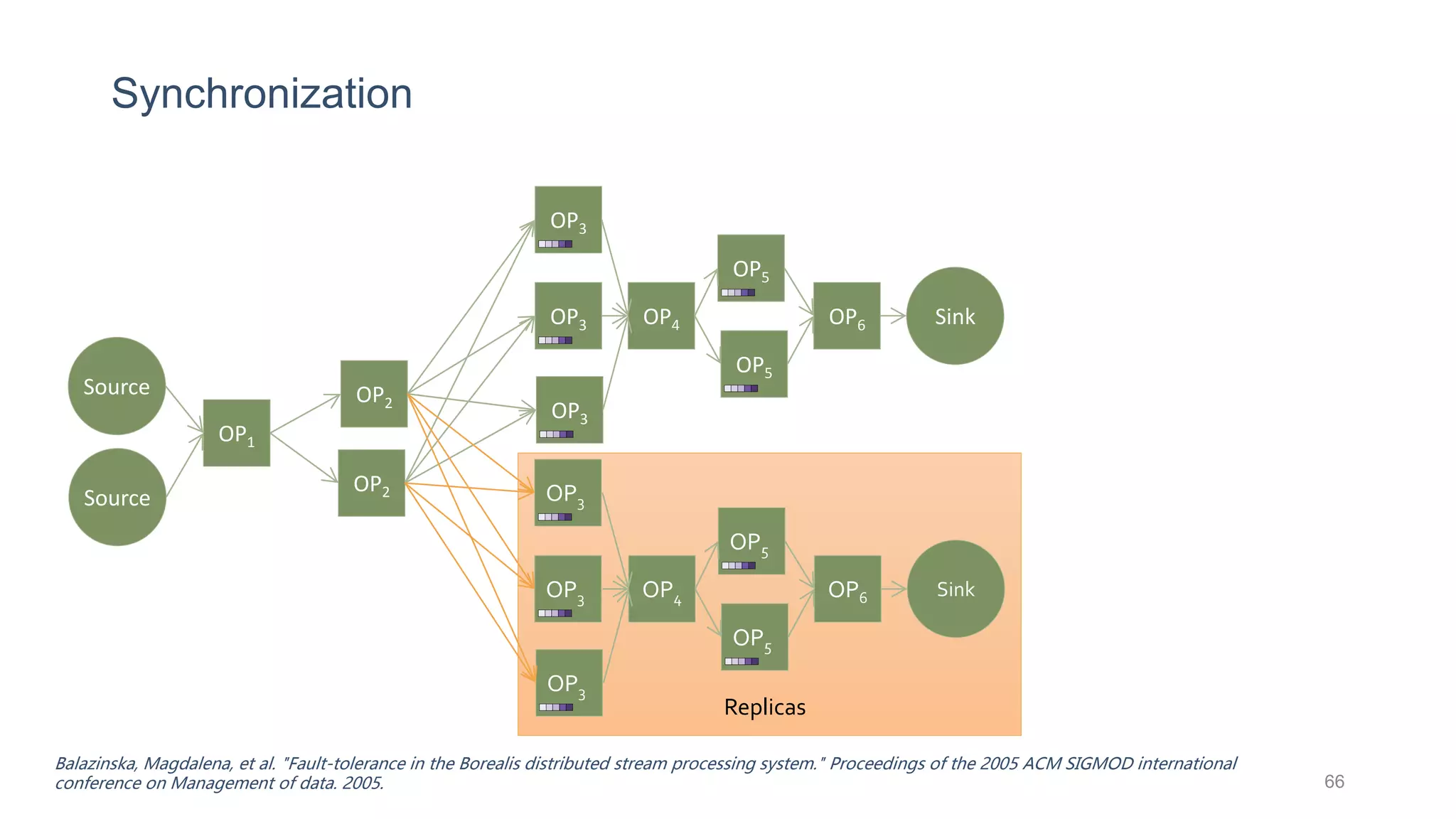

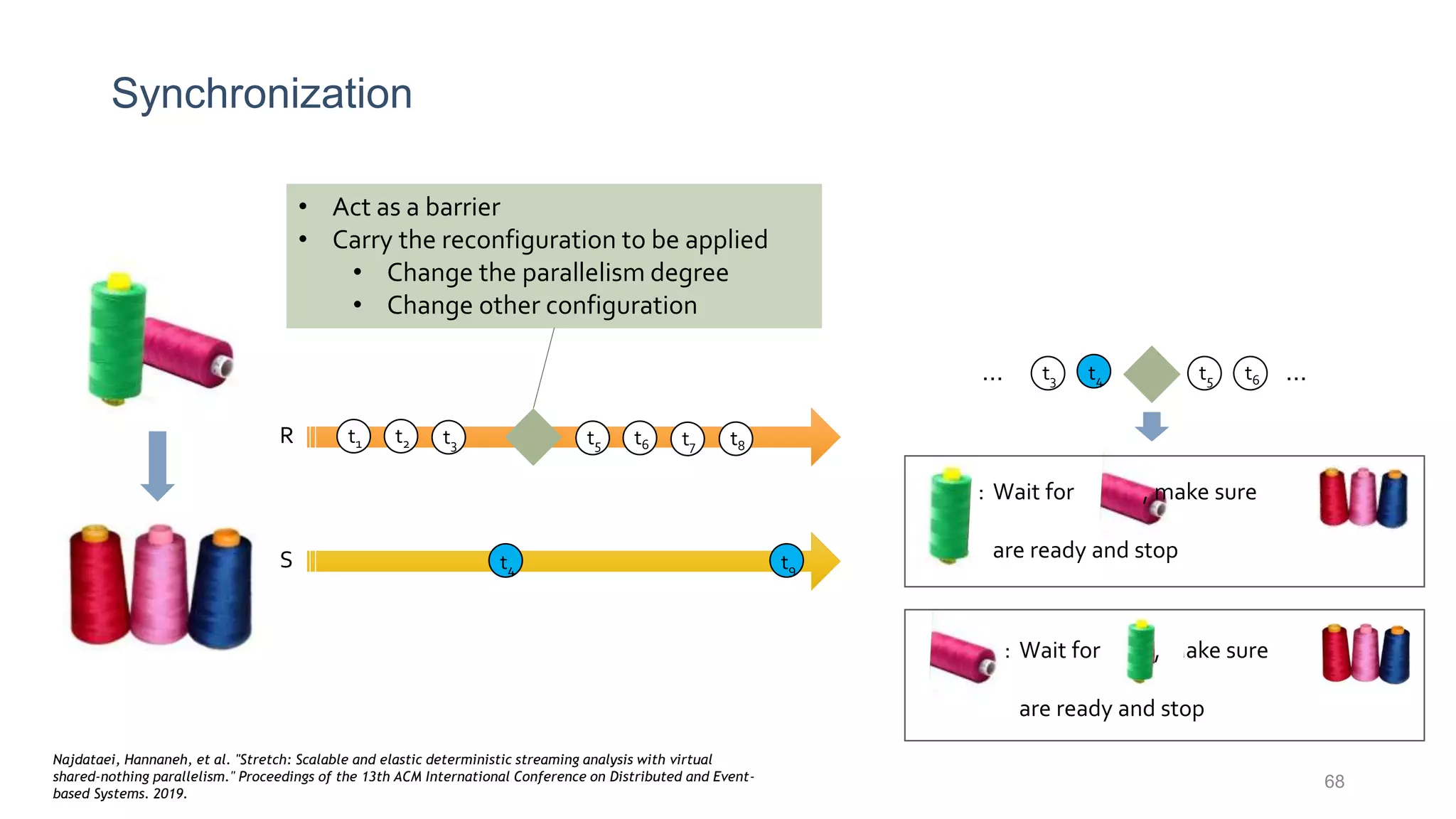

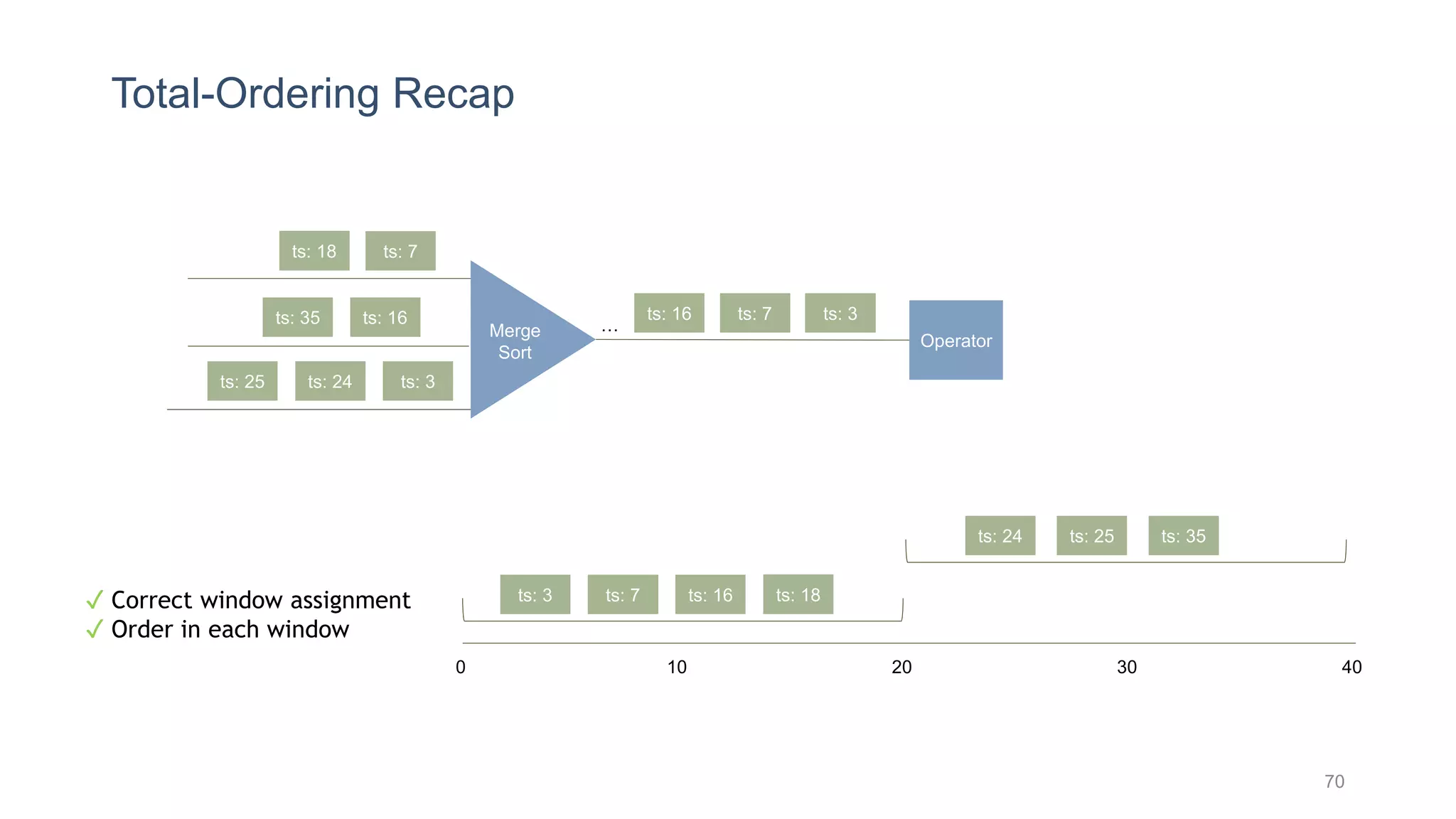

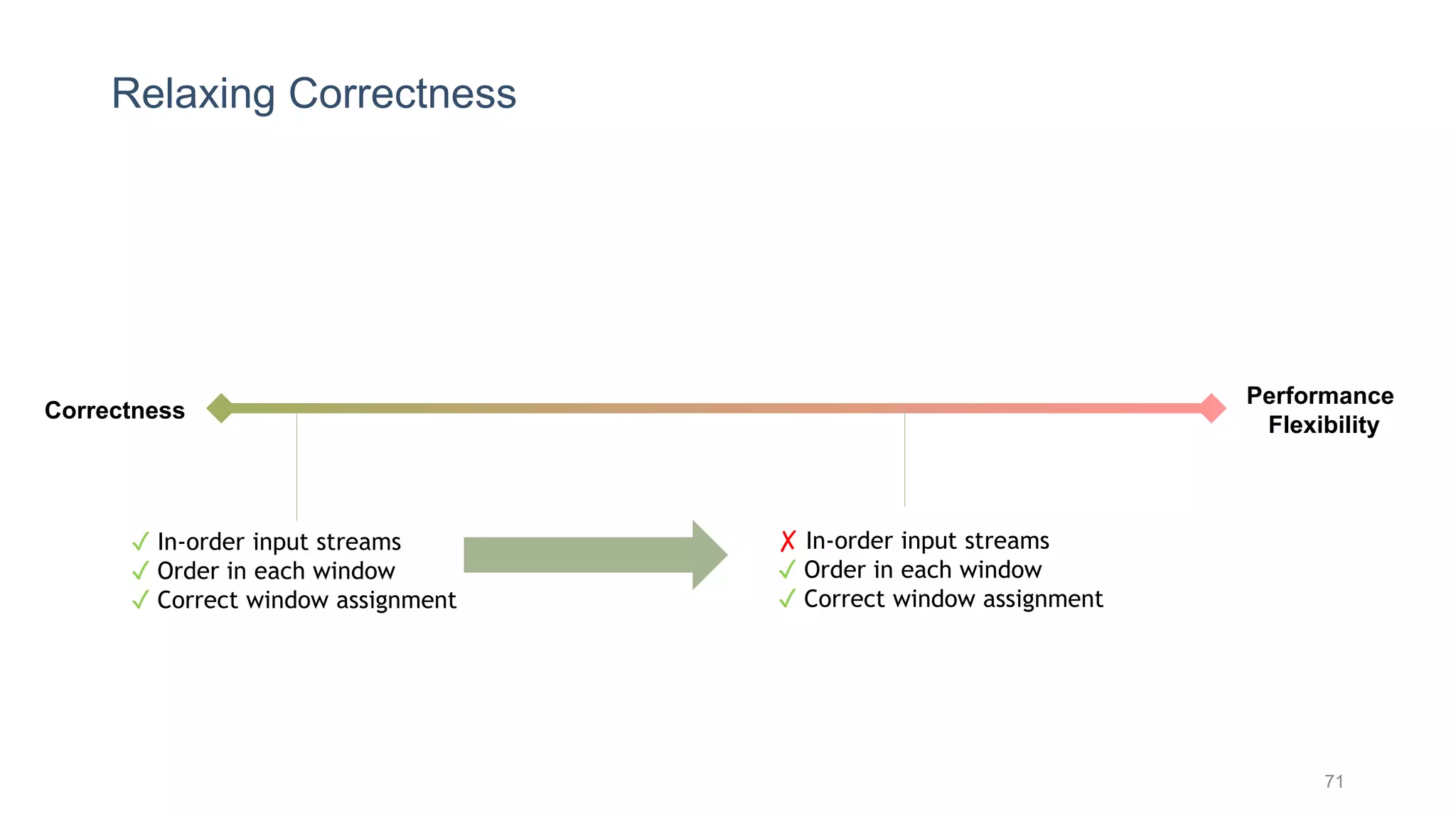

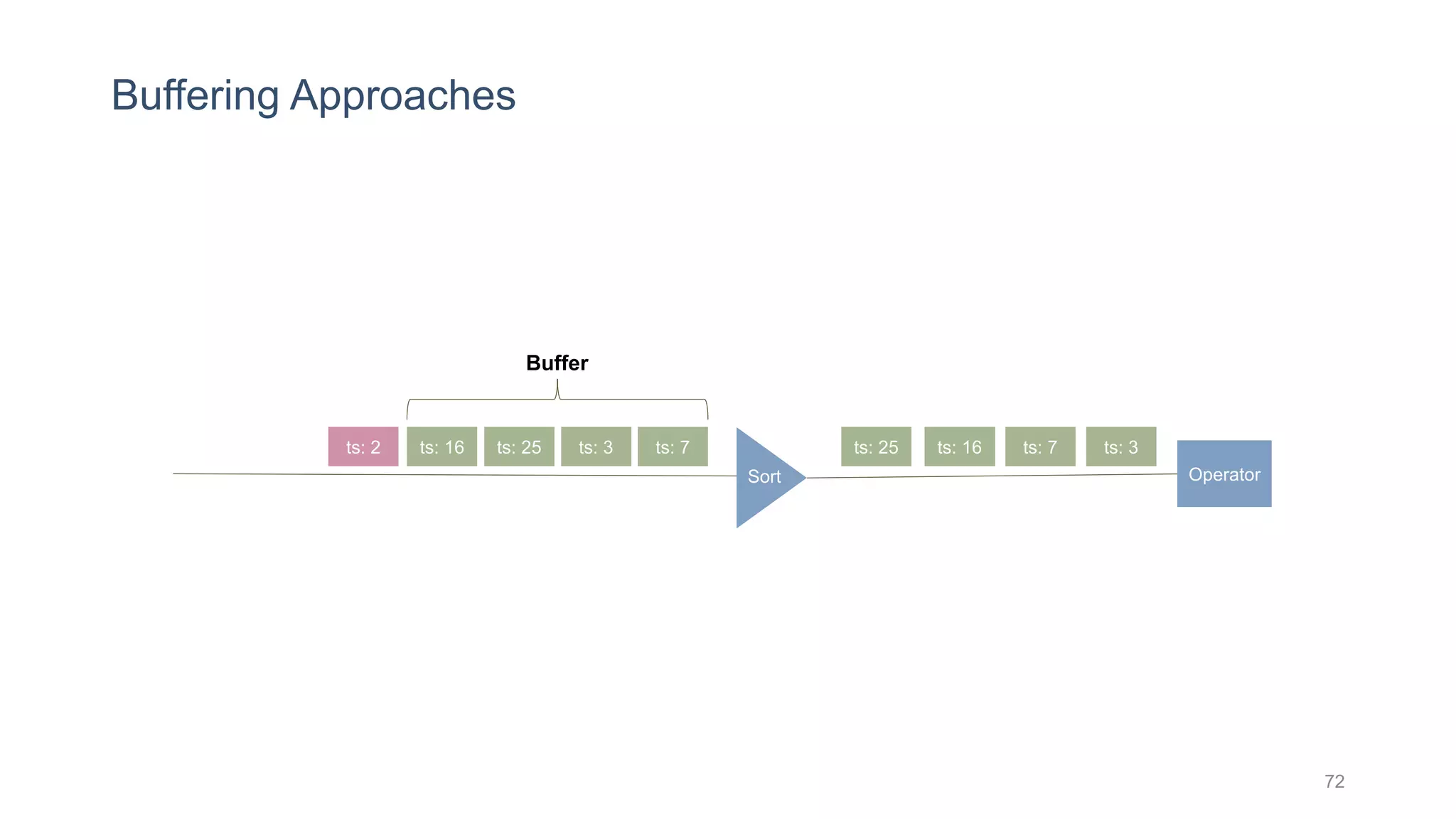

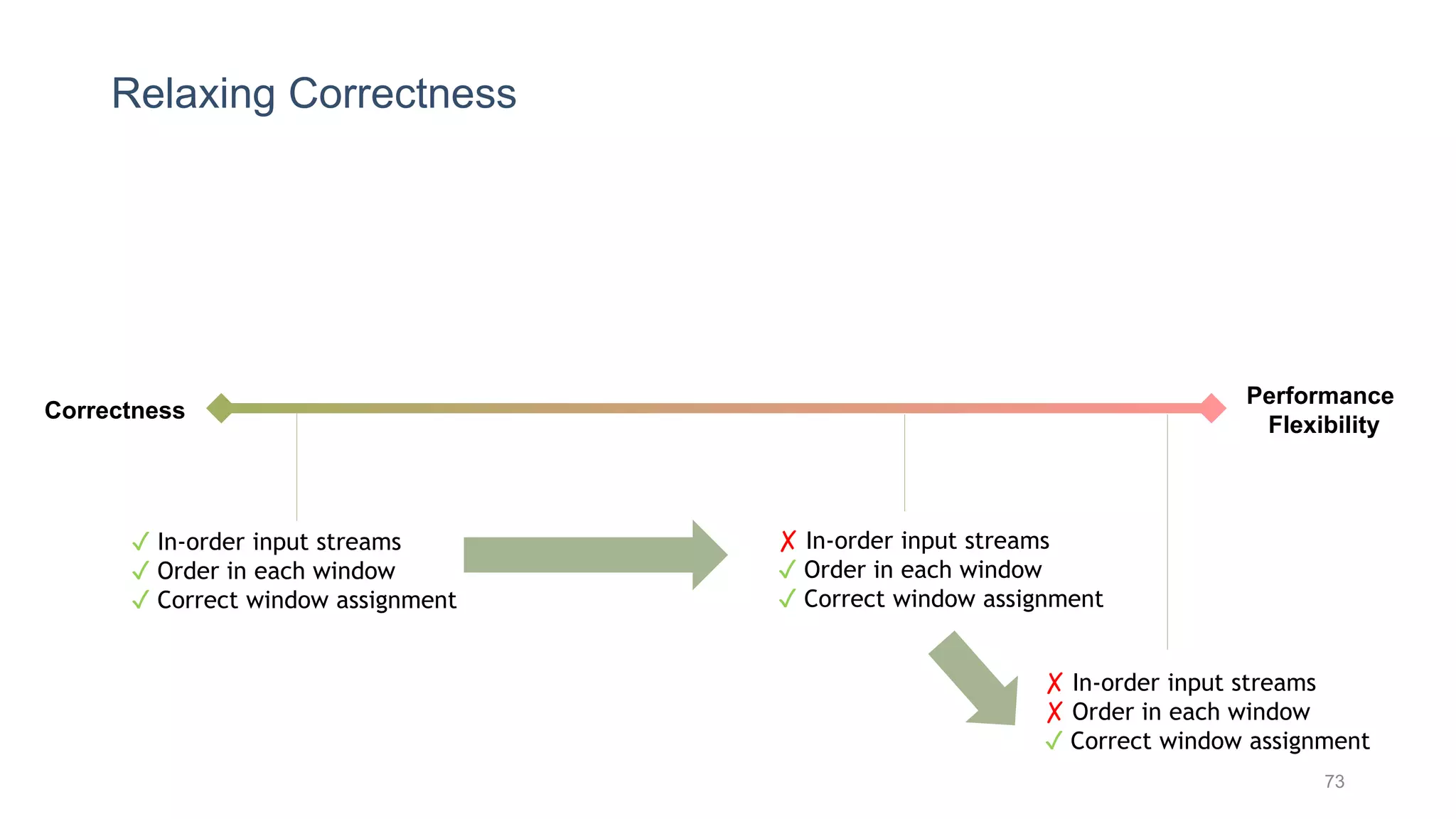

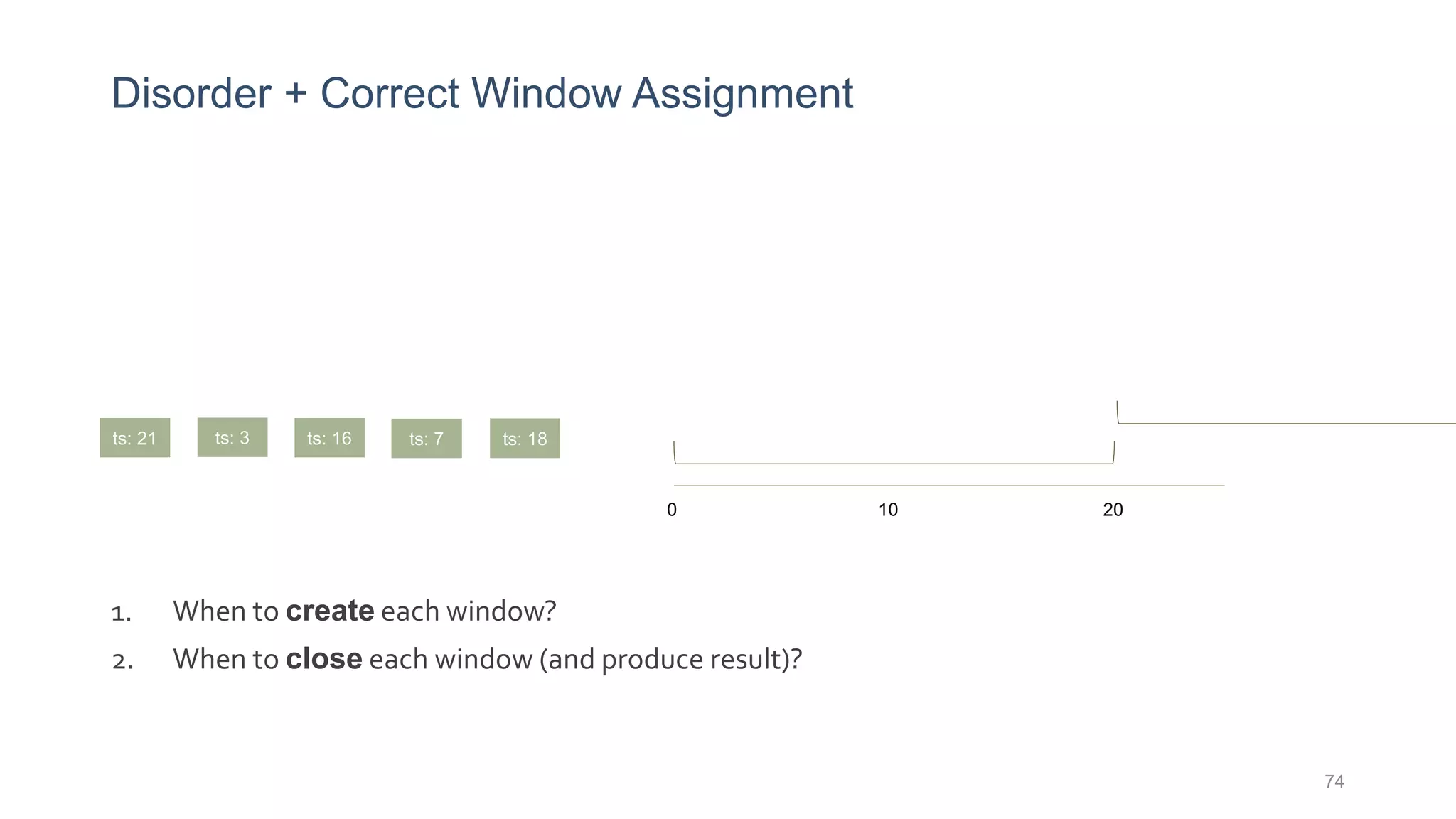

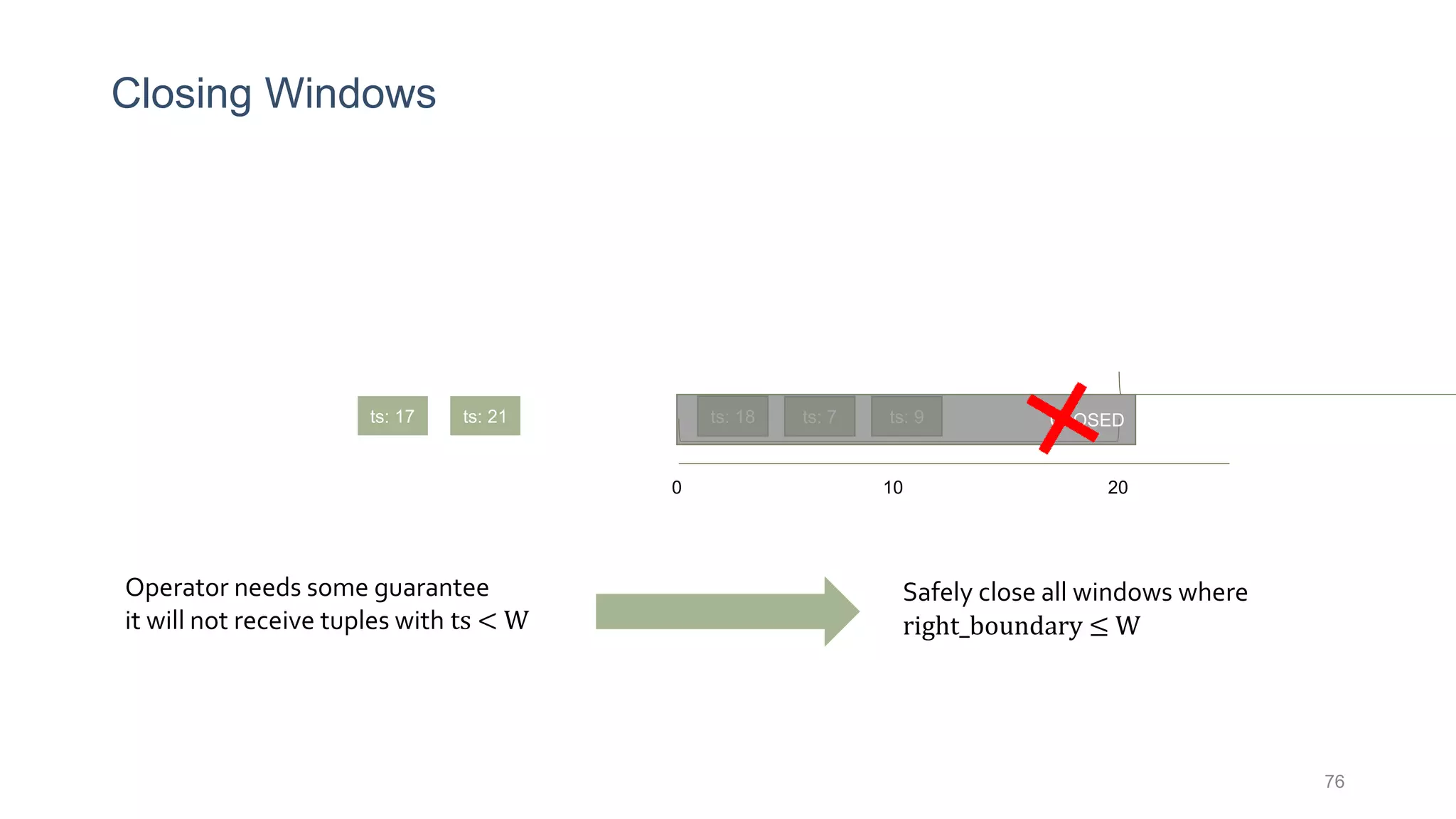

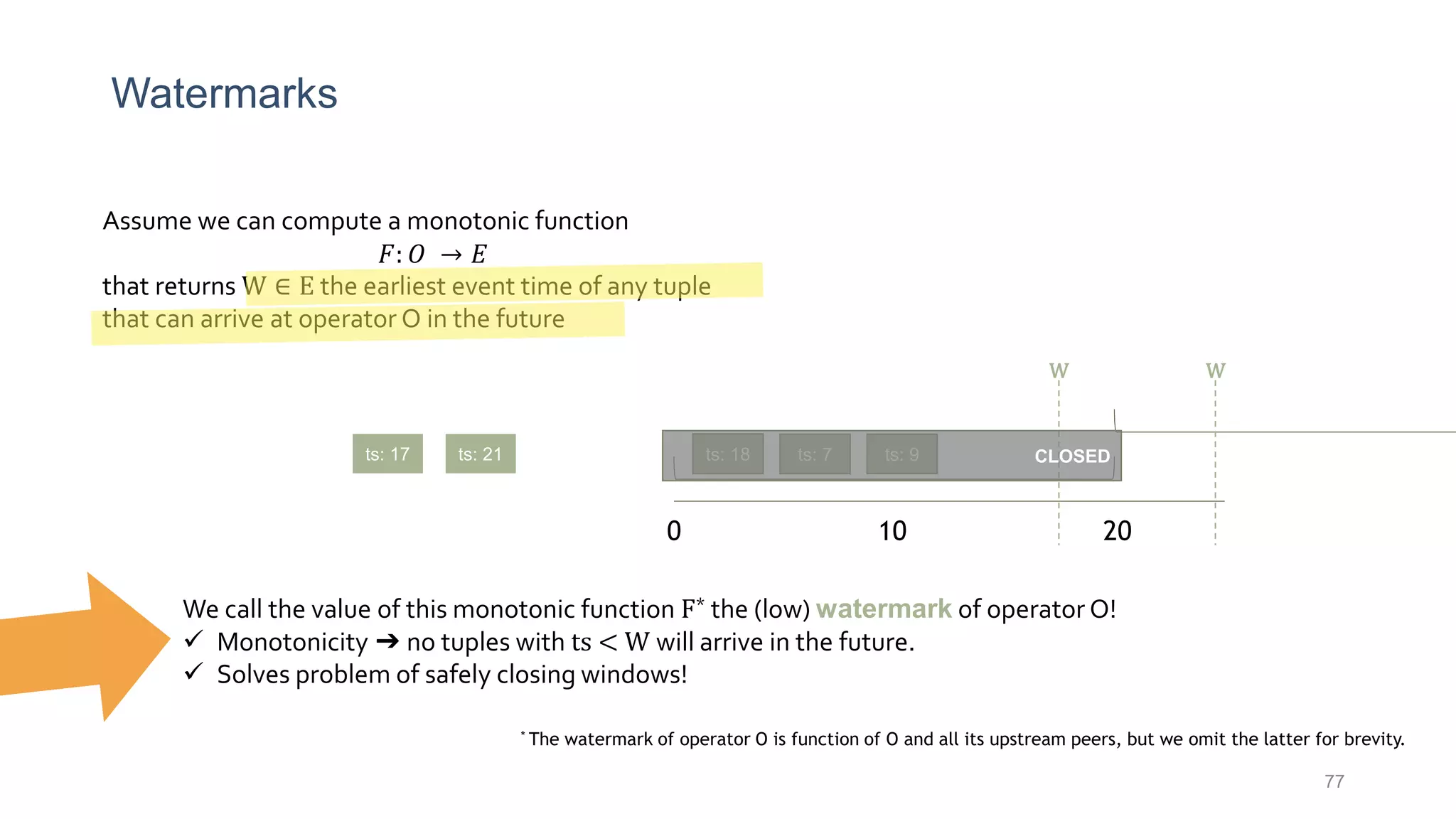

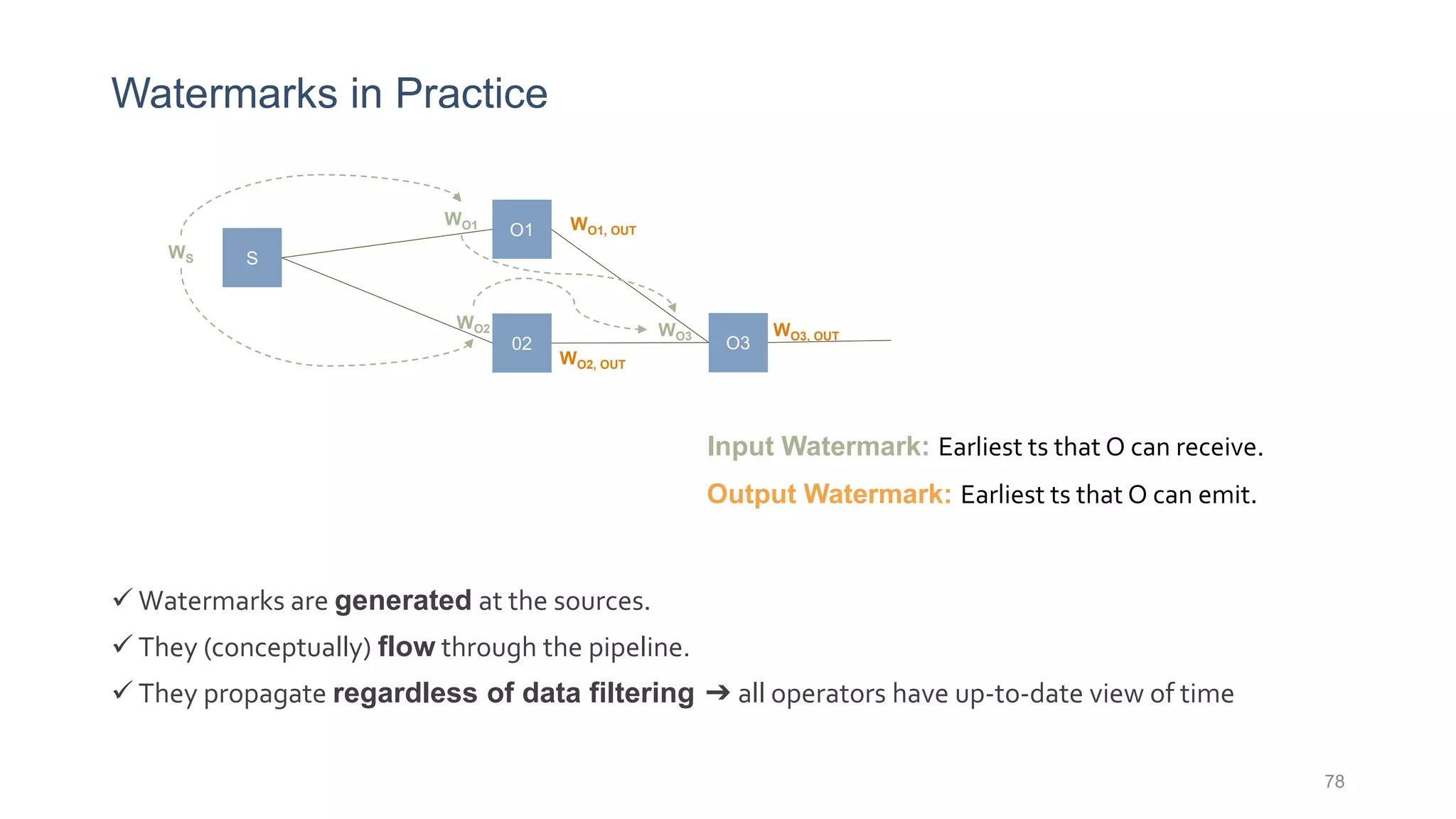

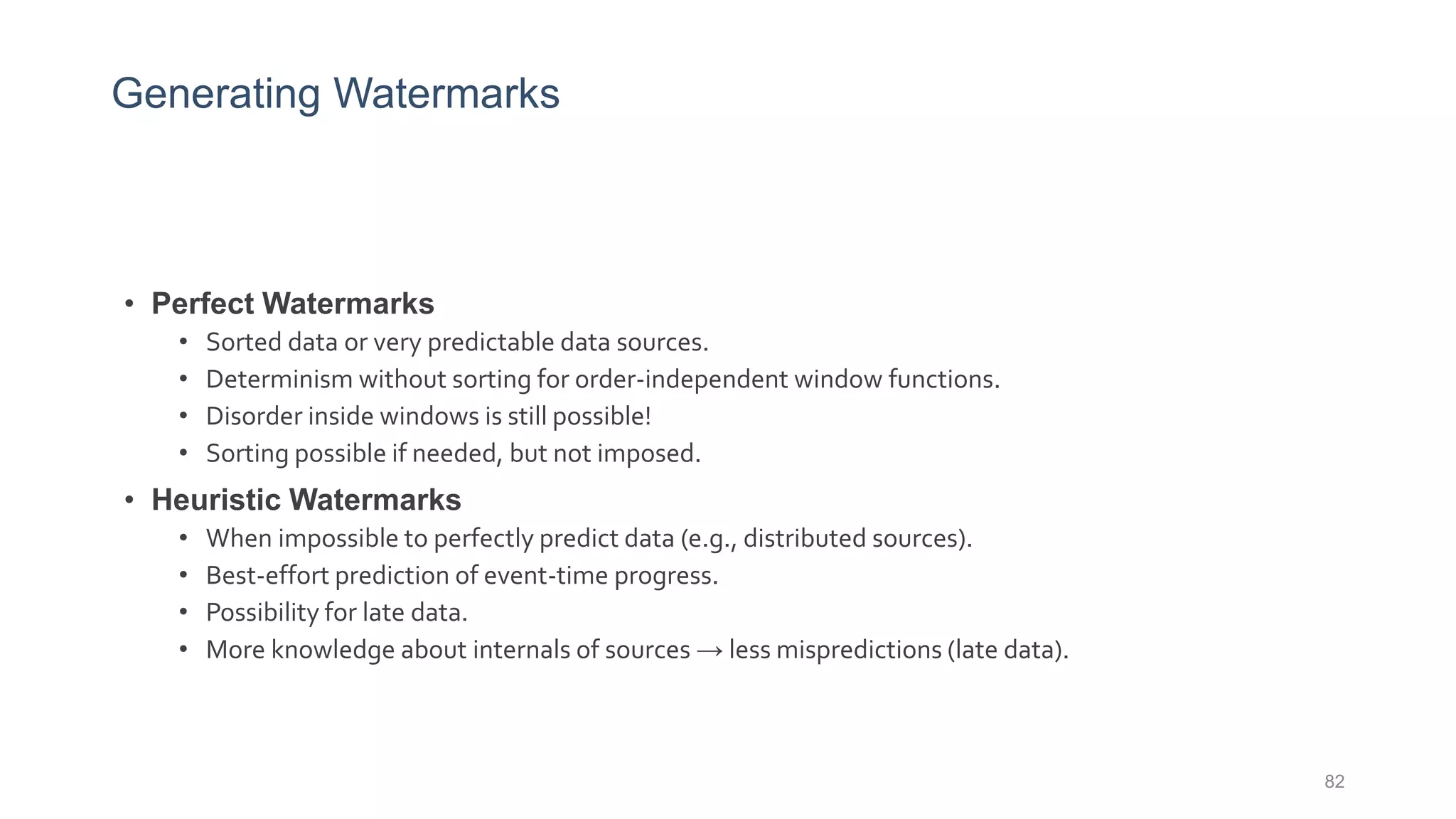

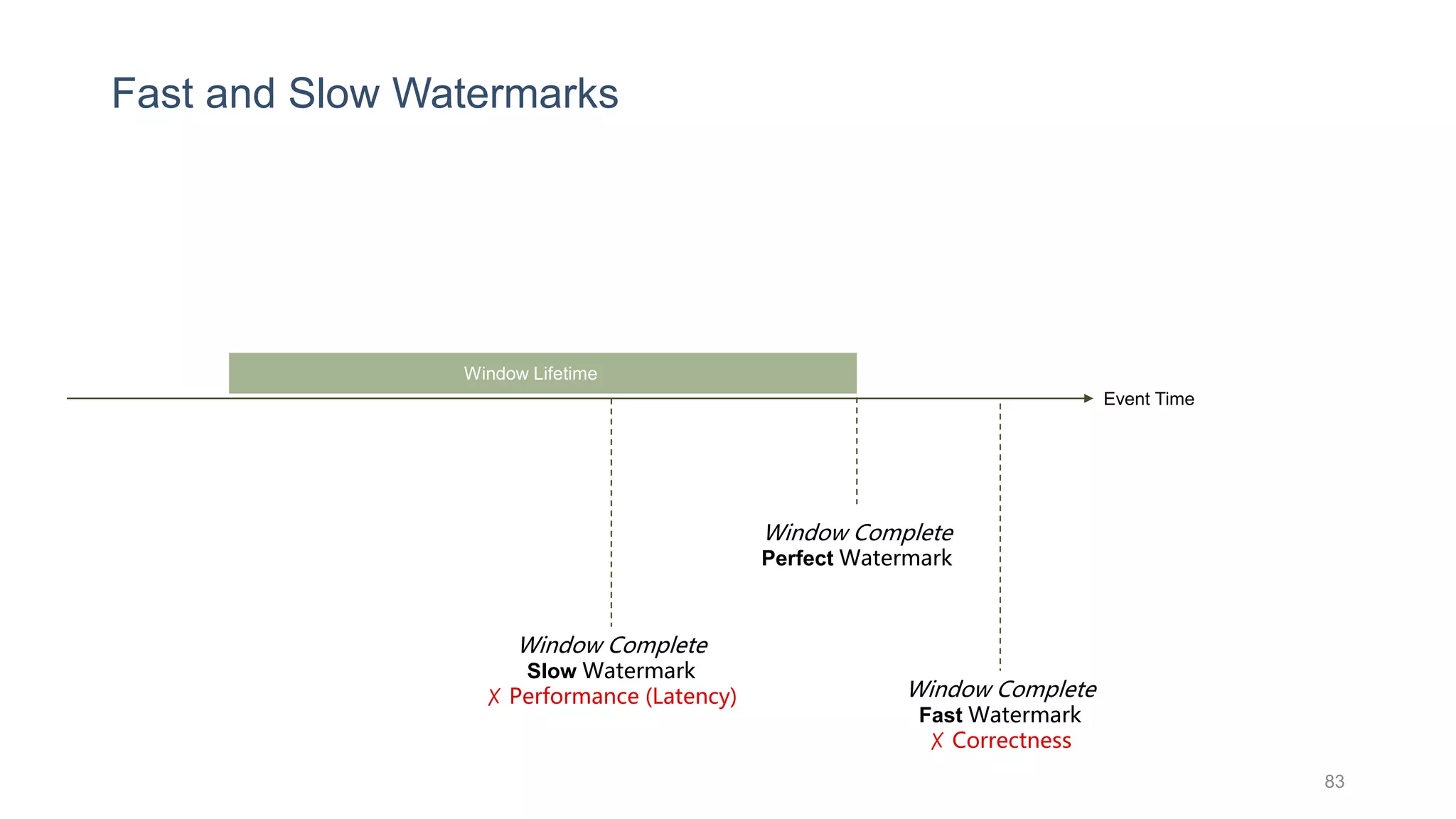

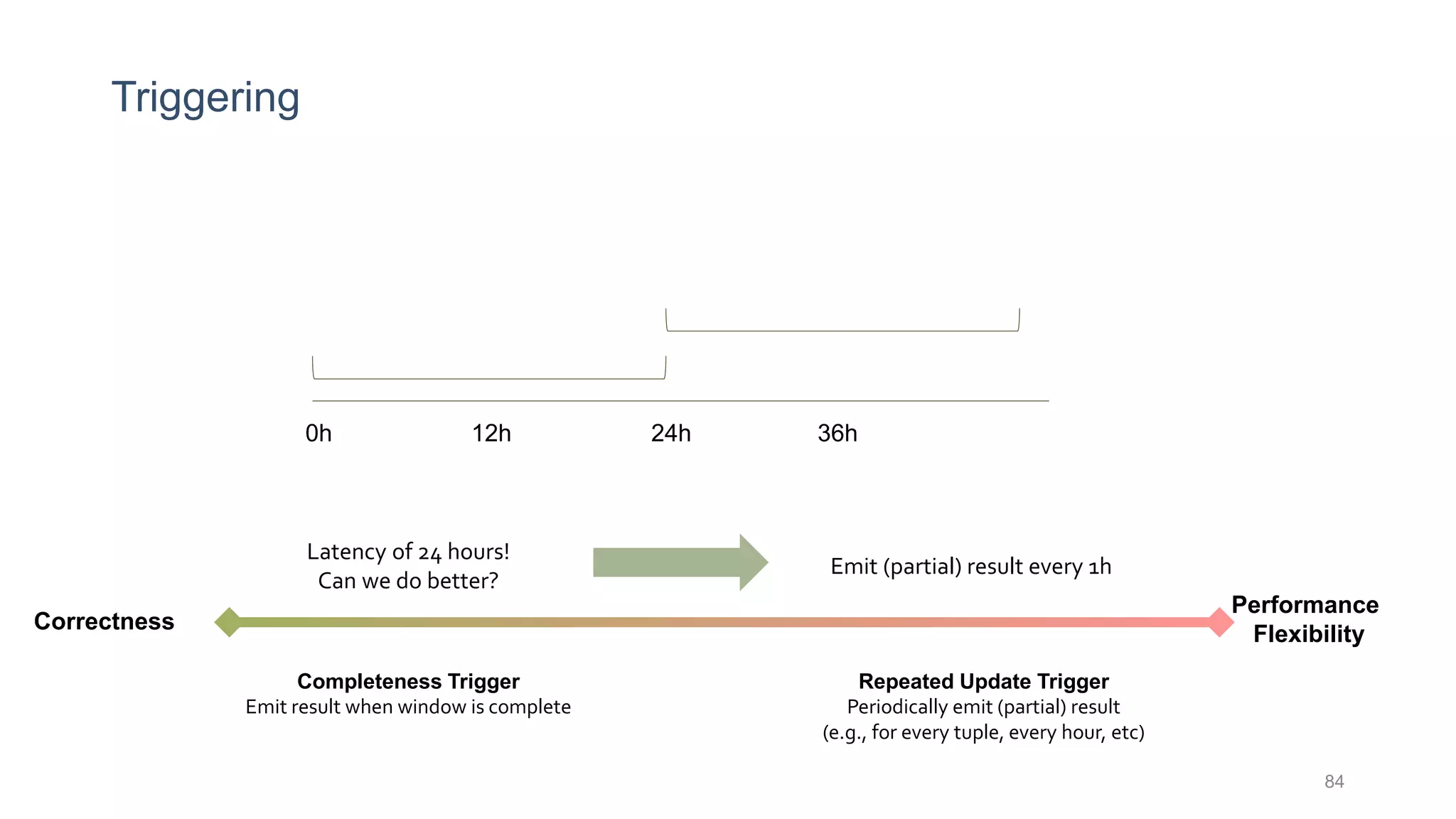

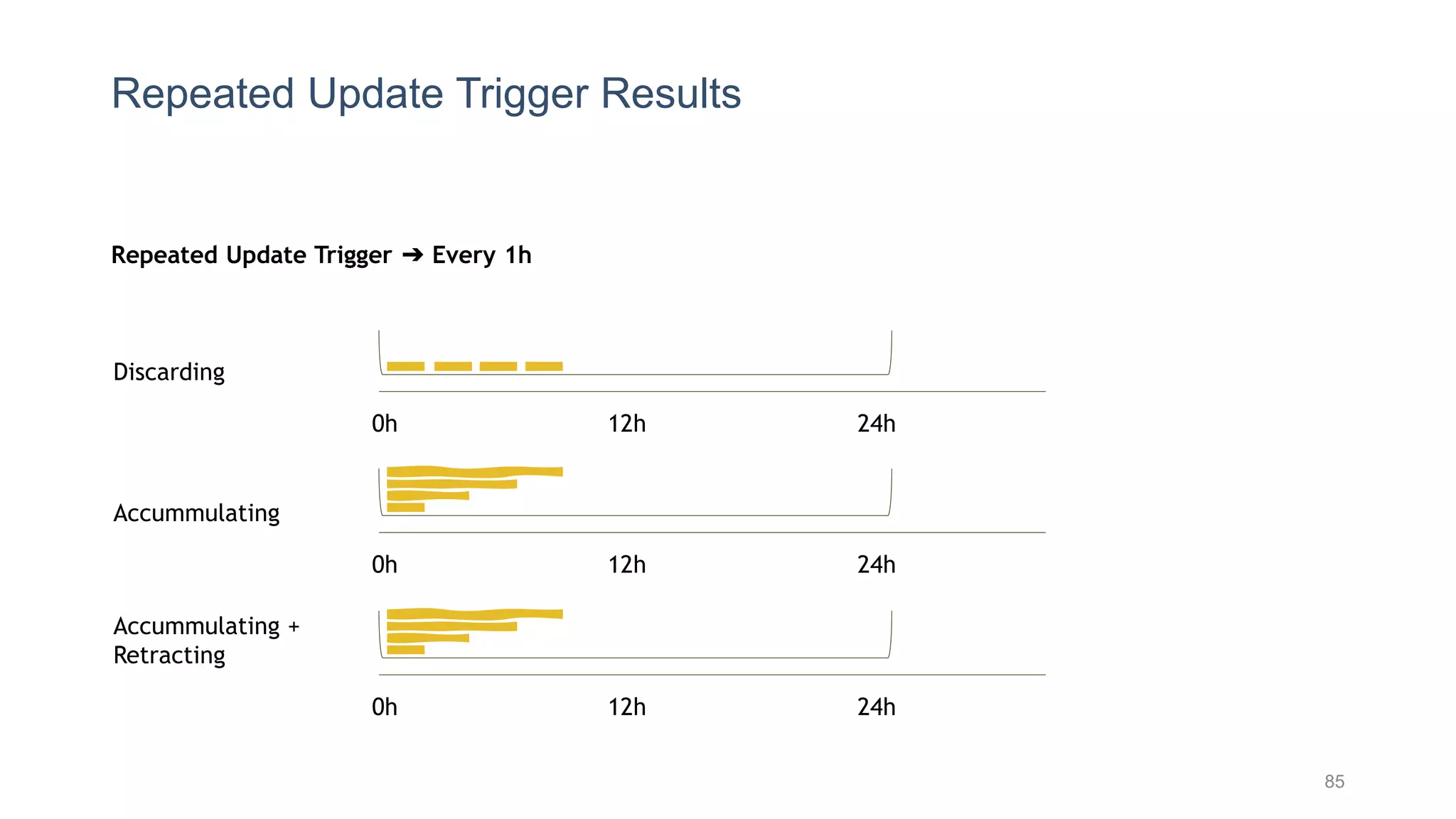

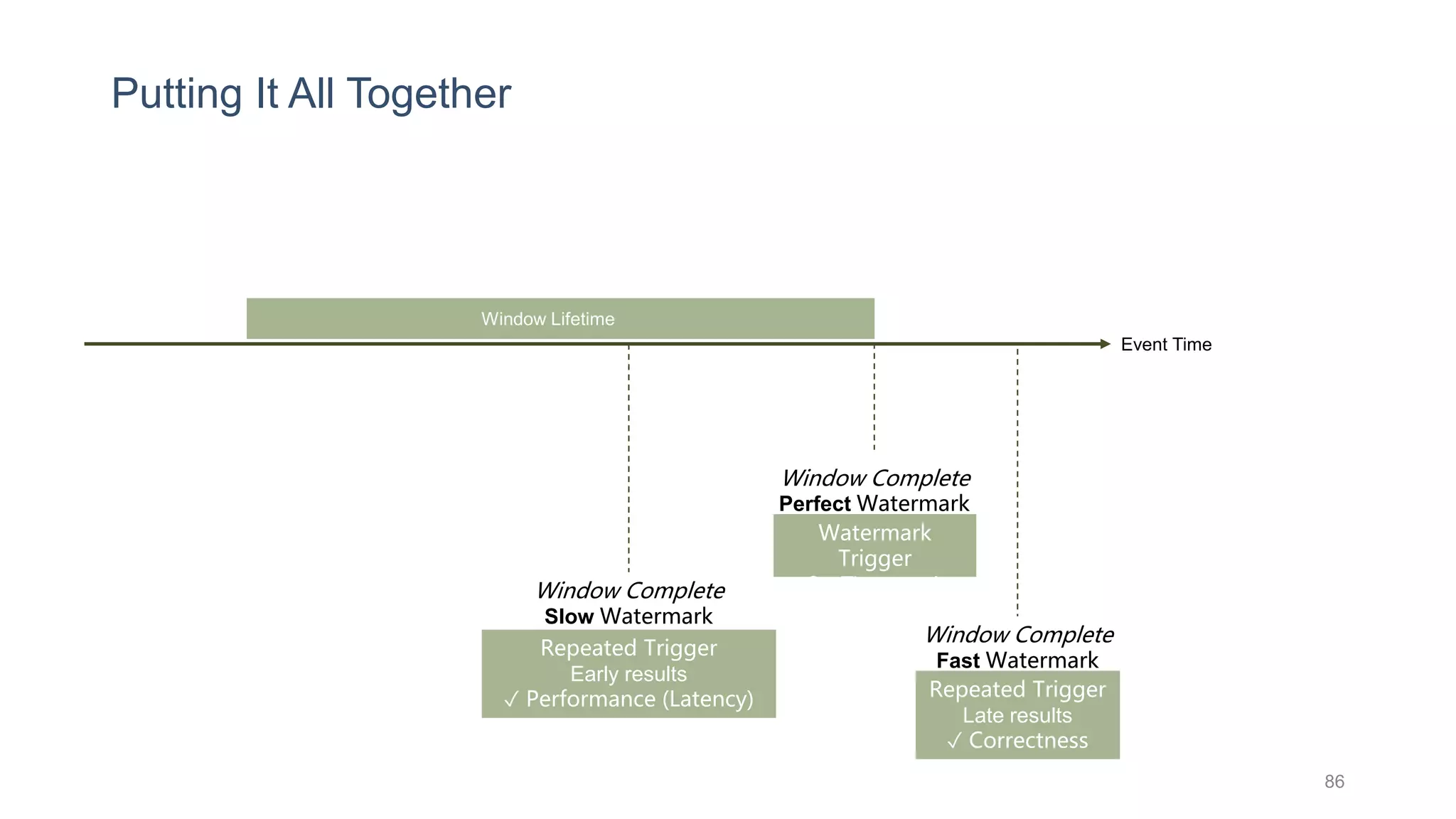

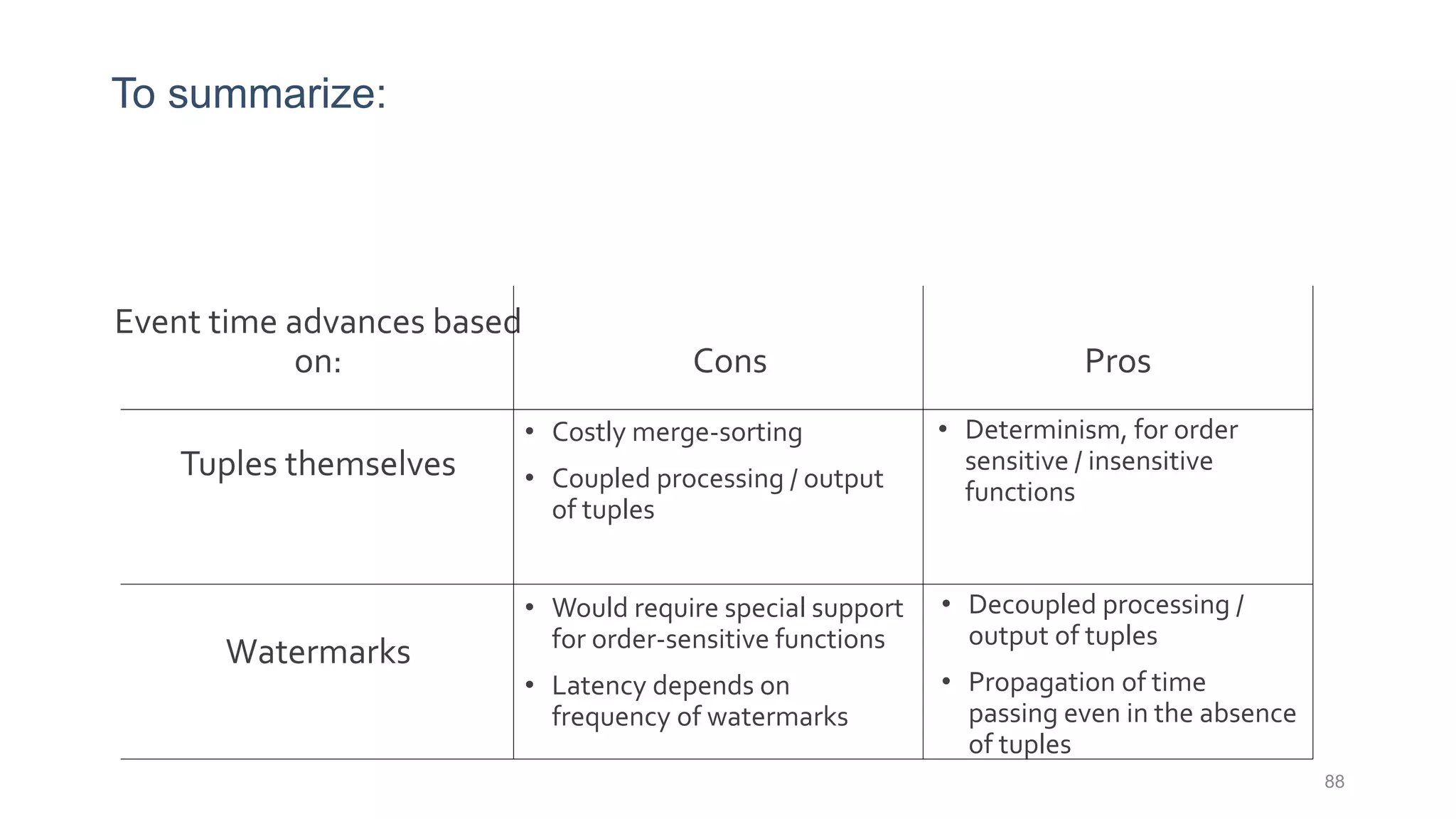

This document provides a tutorial on the role of event-time order in data streaming analysis. The agenda covers motivations and examples of data streaming and stream processing engines, causes of out-of-order data and solutions to enforce total ordering, pros and cons of total ordering, and relaxation of total ordering using watermarks. Enforcing total ordering, for example by sorting tuples, provides benefits such as determinism and synchronization. It is computationally expensive, however, can increase latency, and may be overkill for some applications.
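The trade-off between ordering and latency can be illustrated with a small reorder buffer. This is a minimal sketch, not the tutorial's own method: the function name `reorder`, the `(event_time, payload)` tuple layout, and the `max_delay` bound on disorder are all assumptions made for illustration. The watermark is taken to be the largest event time seen so far minus `max_delay`, which is one common heuristic; real stream processing engines define and propagate watermarks in their own ways.

```python
import heapq
from typing import Iterable, Iterator, List, Tuple

# Hypothetical tuple layout: (event_time, payload).
Event = Tuple[int, str]


def reorder(events: Iterable[Event], max_delay: int) -> Iterator[Event]:
    """Emit events in event-time order.

    Assumes no event arrives more than `max_delay` time units behind the
    largest event time seen so far. An event that violates this bound is
    still emitted, but possibly out of order.
    """
    buffer: List[Event] = []  # min-heap keyed on event time
    max_seen = None
    for event in events:
        heapq.heappush(buffer, event)
        max_seen = event[0] if max_seen is None else max(max_seen, event[0])
        # Assumed watermark: nothing older than this should still arrive.
        watermark = max_seen - max_delay
        # Release every buffered event that the watermark has passed.
        while buffer and buffer[0][0] <= watermark:
            yield heapq.heappop(buffer)
    # End of input: flush whatever is still buffered, in order.
    while buffer:
        yield heapq.heappop(buffer)


arrivals = [(1, "a"), (3, "c"), (2, "b"), (5, "e"), (4, "d"), (7, "g"), (6, "f")]
for event_time, payload in reorder(arrivals, max_delay=2):
    print(event_time, payload)
```

A larger `max_delay` tolerates more disorder but holds each tuple longer before releasing it, which is the latency cost mentioned above. A smaller value lowers latency but risks emitting late tuples out of order.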