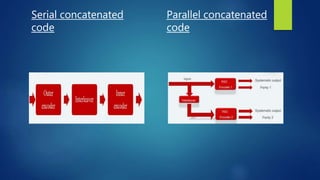

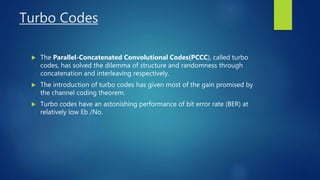

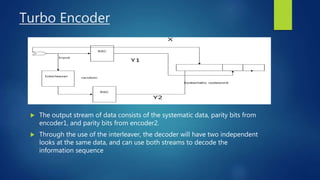

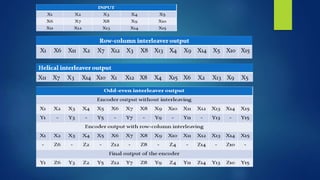

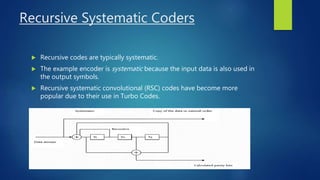

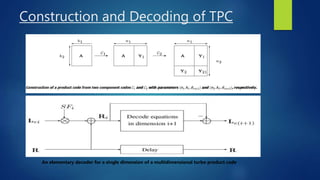

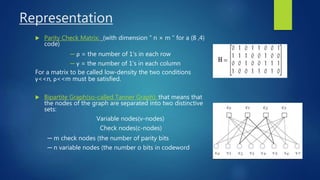

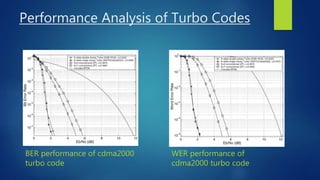

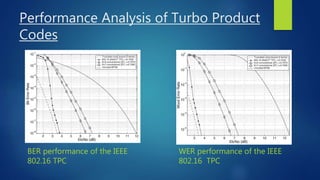

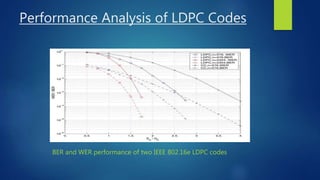

This document discusses turbo and turbo-like codes. It opens with an introduction to turbo codes, a class of high-performance error-correcting codes that were the first practical codes to closely approach channel capacity. It then covers channel coding, Shannon's theory, existing schemes such as block codes and convolutional codes, and the need for better codes. Most of the document is devoted to turbo codes themselves: their structure as parallel concatenated convolutional codes, the role of interleaving, and iterative decoding. It also discusses related schemes such as turbo product codes and low-density parity-check (LDPC) codes. Finally, it reviews the performance, practical issues, applications in standards, and future trends of turbo and turbo-like codes.
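As a rough illustration of the parallel concatenated structure mentioned above, the following is a minimal sketch of a rate-1/3 turbo encoder. The details are assumptions chosen for illustration and are not taken from the document: the constituent code is a memory-2 recursive systematic convolutional code with octal generators (1, 5/7), the interleaver is a seeded pseudo-random permutation, and there is no puncturing or trellis termination. The function names `rsc_parity`, `make_interleaver`, and `turbo_encode` are hypothetical.

```python
import random


def rsc_parity(bits):
    """Parity stream of a recursive systematic convolutional encoder.

    Assumed generators: feedback 1 + D + D^2 (octal 7), feedforward
    1 + D^2 (octal 5). The trellis is not terminated.
    """
    s1 = s2 = 0  # shift-register contents, s1 is the most recent
    parity = []
    for u in bits:
        a = u ^ s1 ^ s2        # feedback sum entering the register
        parity.append(a ^ s2)  # feedforward taps: current value and D^2
        s1, s2 = a, s1         # shift the register
    return parity


def make_interleaver(n, seed=0):
    """Pseudo-random permutation of range(n); a stand-in for a designed interleaver."""
    rng = random.Random(seed)
    perm = list(range(n))
    rng.shuffle(perm)
    return perm


def turbo_encode(bits, perm):
    """Return the stream [u0, p1_0, p2_0, u1, p1_1, p2_1, ...] (rate 1/3)."""
    interleaved = [bits[i] for i in perm]
    p1 = rsc_parity(bits)         # first encoder sees the natural order
    p2 = rsc_parity(interleaved)  # second encoder sees the permuted order
    return [b for triple in zip(bits, p1, p2) for b in triple]


if __name__ == "__main__":
    message = [1, 0, 1, 1, 0, 0, 1, 0]
    perm = make_interleaver(len(message), seed=1)
    codeword = turbo_encode(message, perm)
    print(len(message), "message bits ->", len(codeword), "coded bits")
```

Both encoders process the same information bits, only in different orders, so the systematic bits are sent once and each encoder contributes one parity stream. An iterative decoder would run one soft-input soft-output decoder per parity stream and exchange extrinsic information between them through the same permutation; that part is omitted here.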