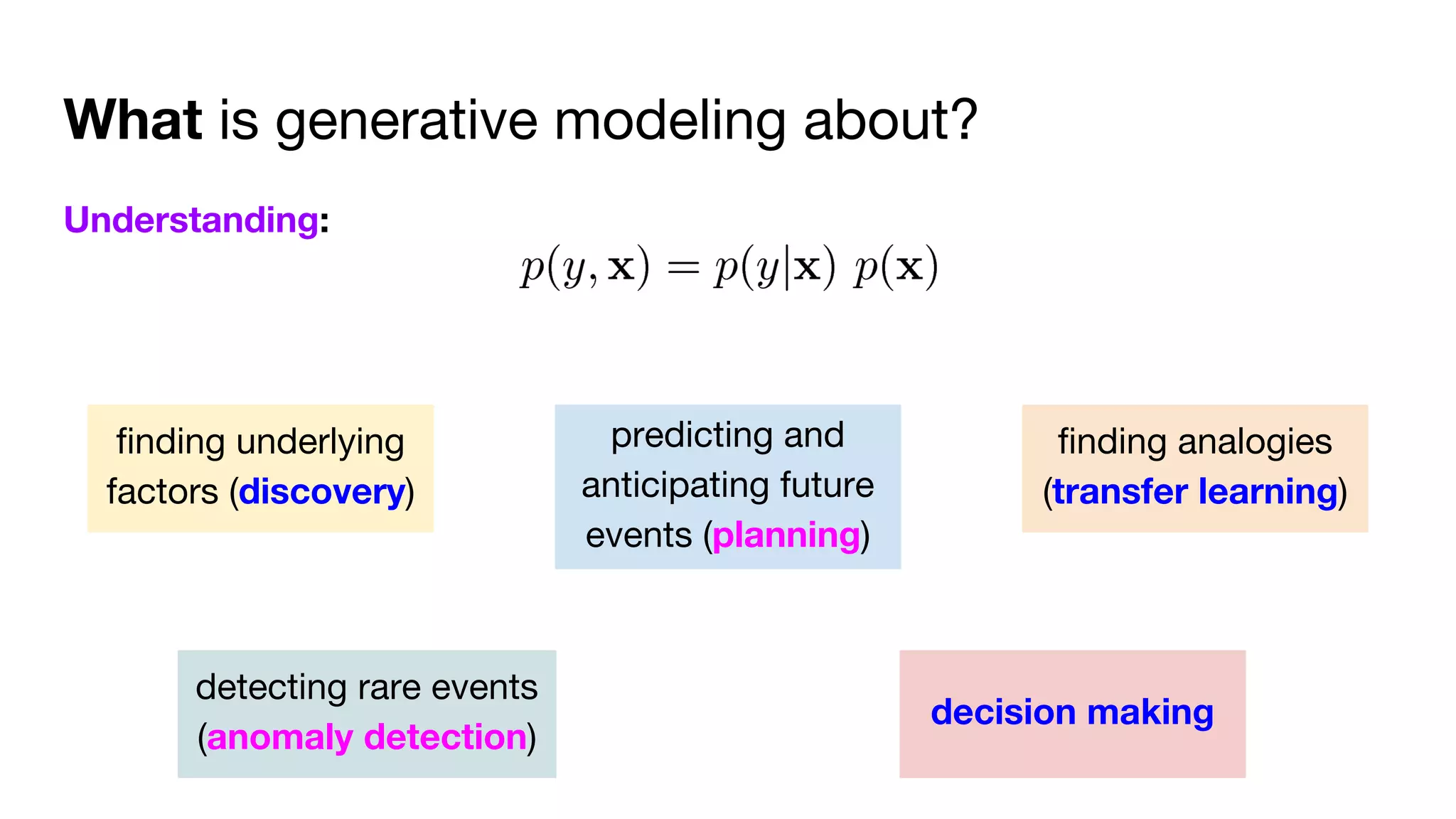

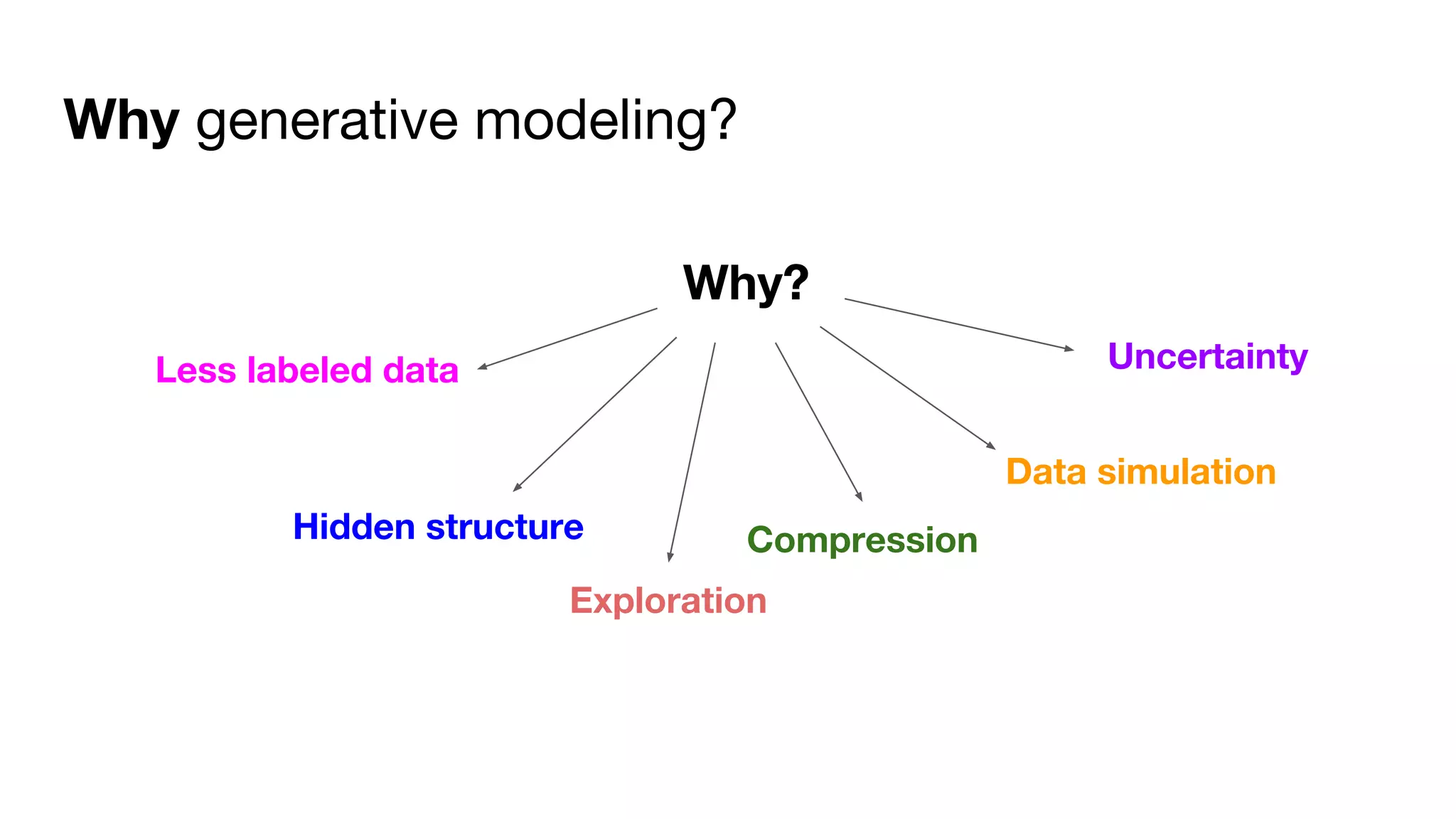

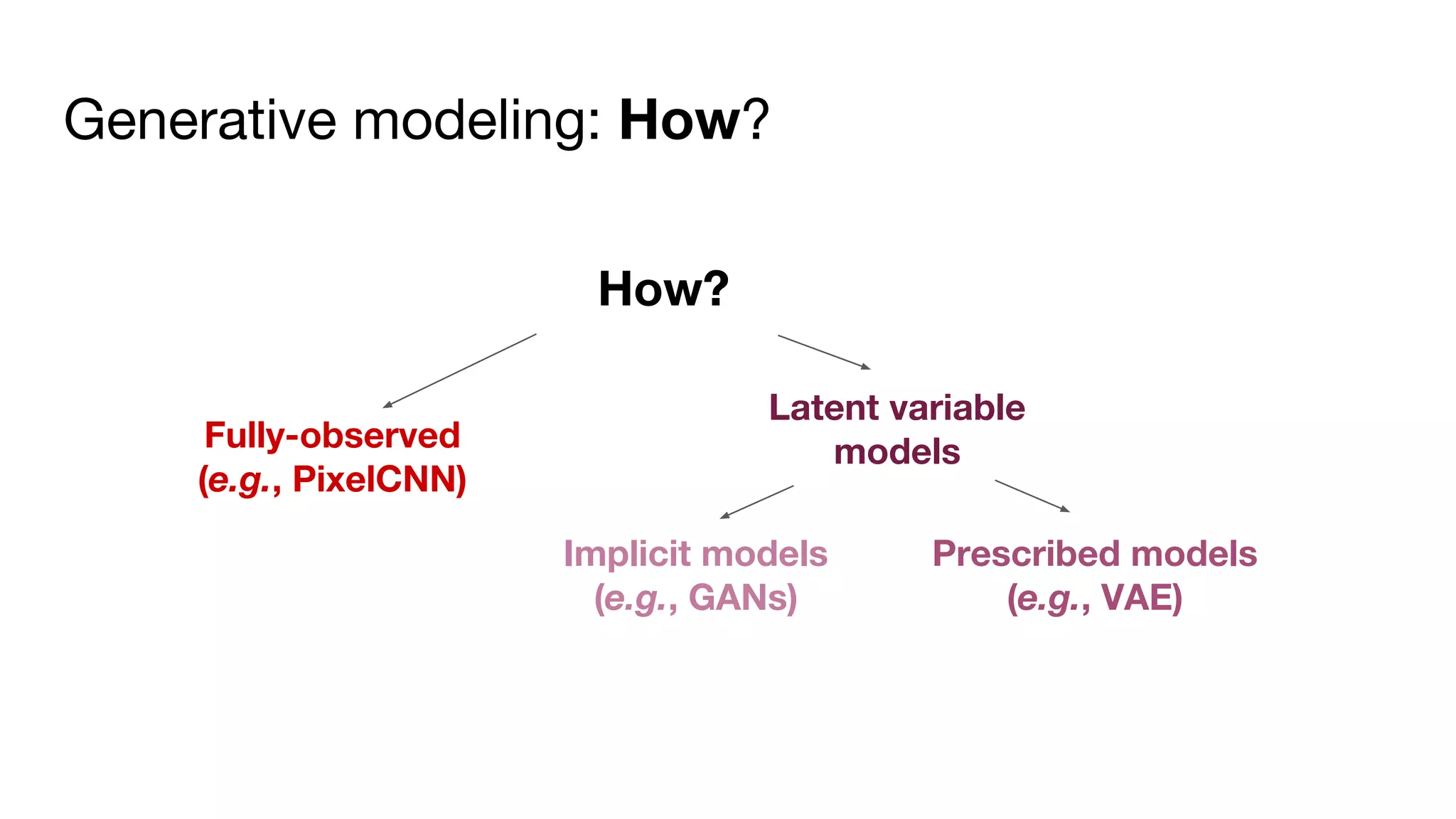

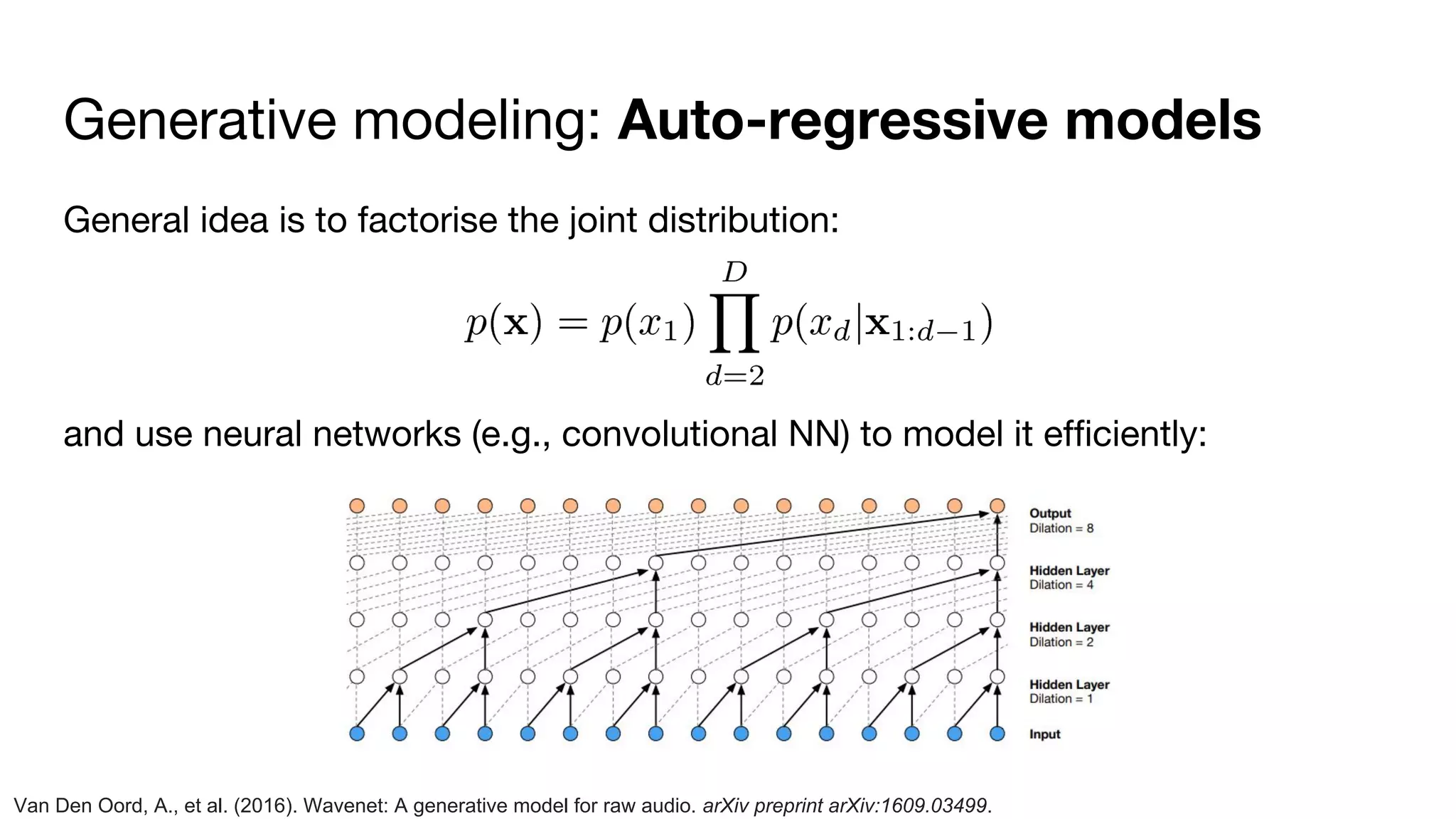

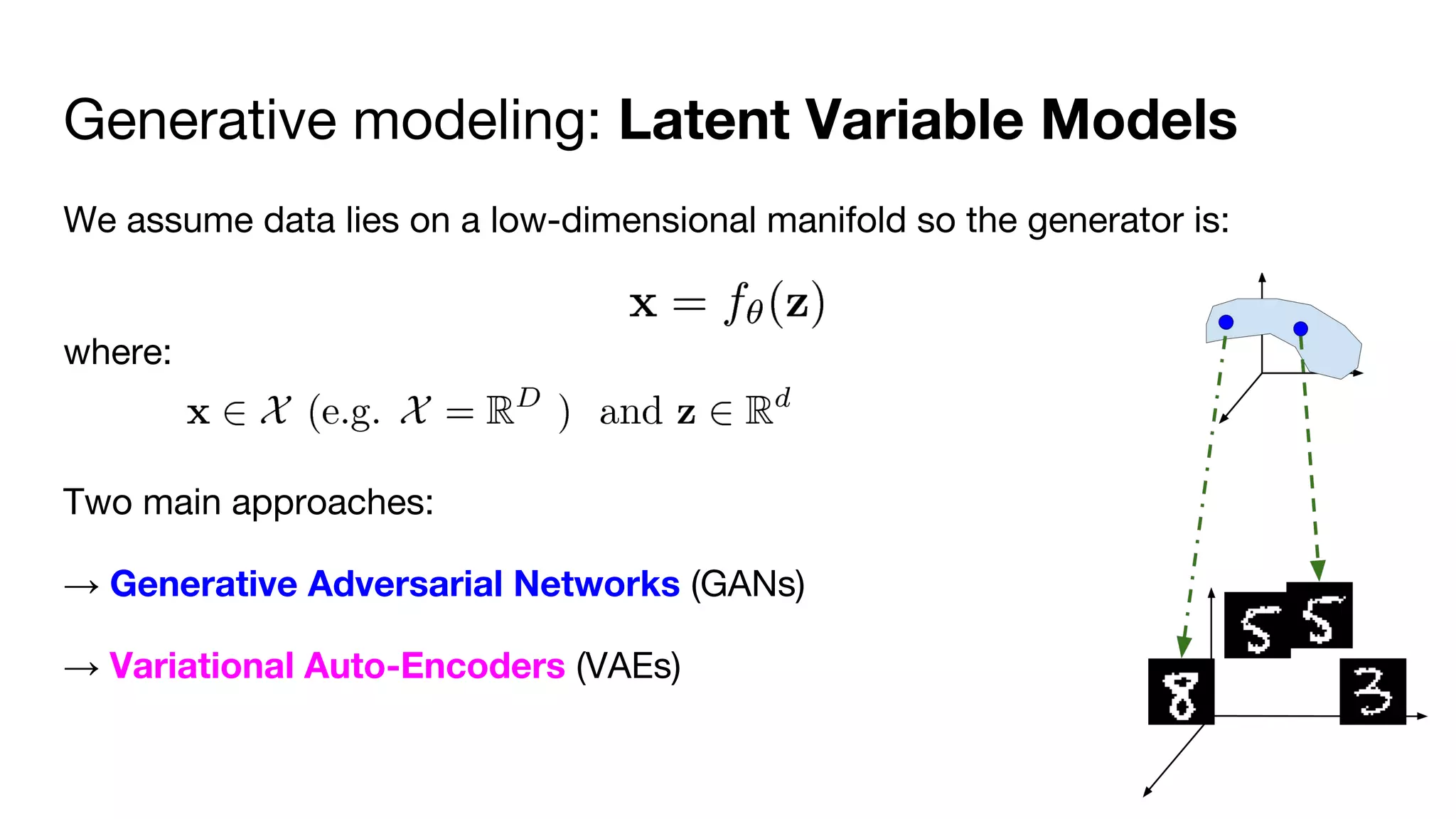

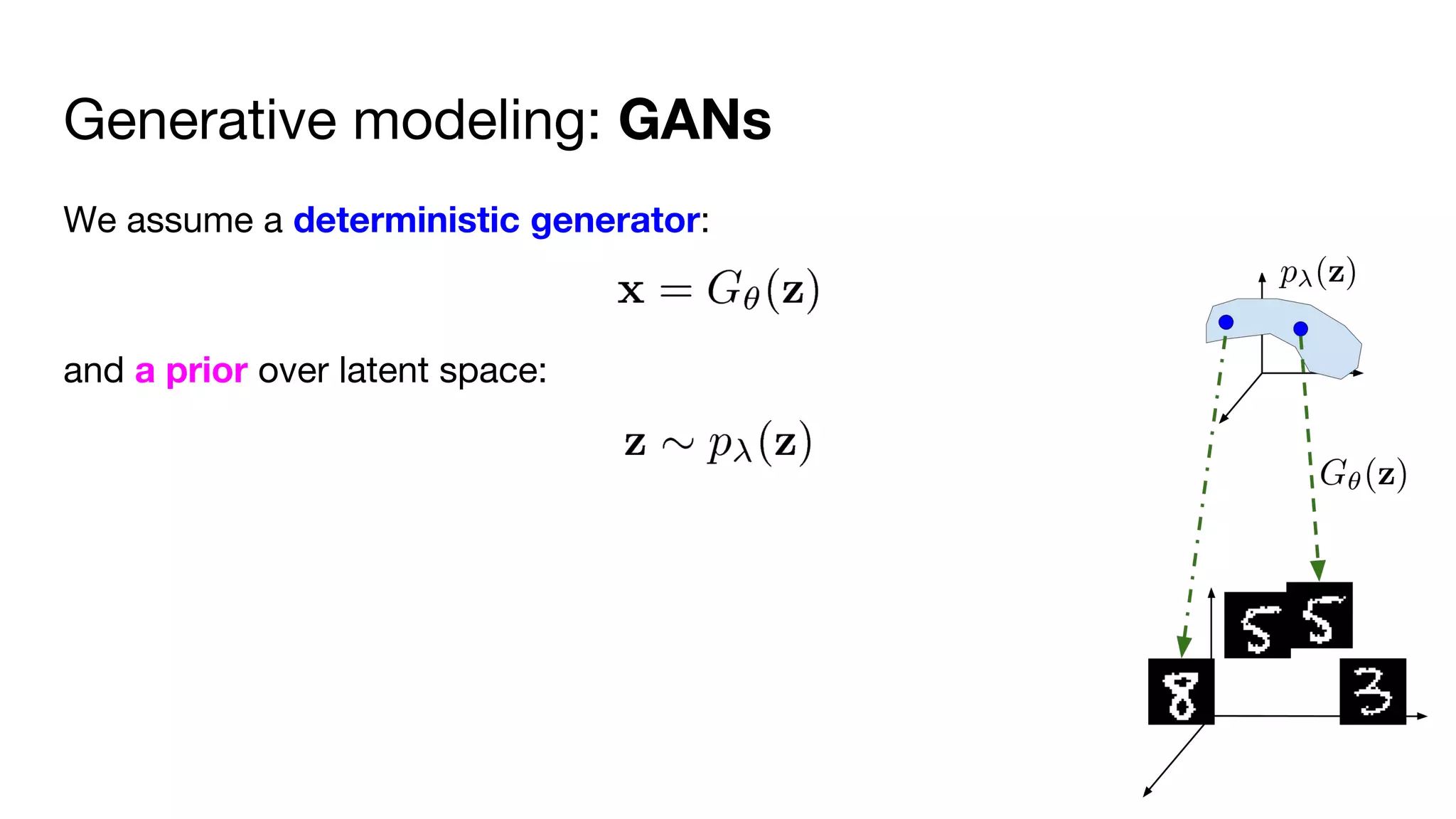

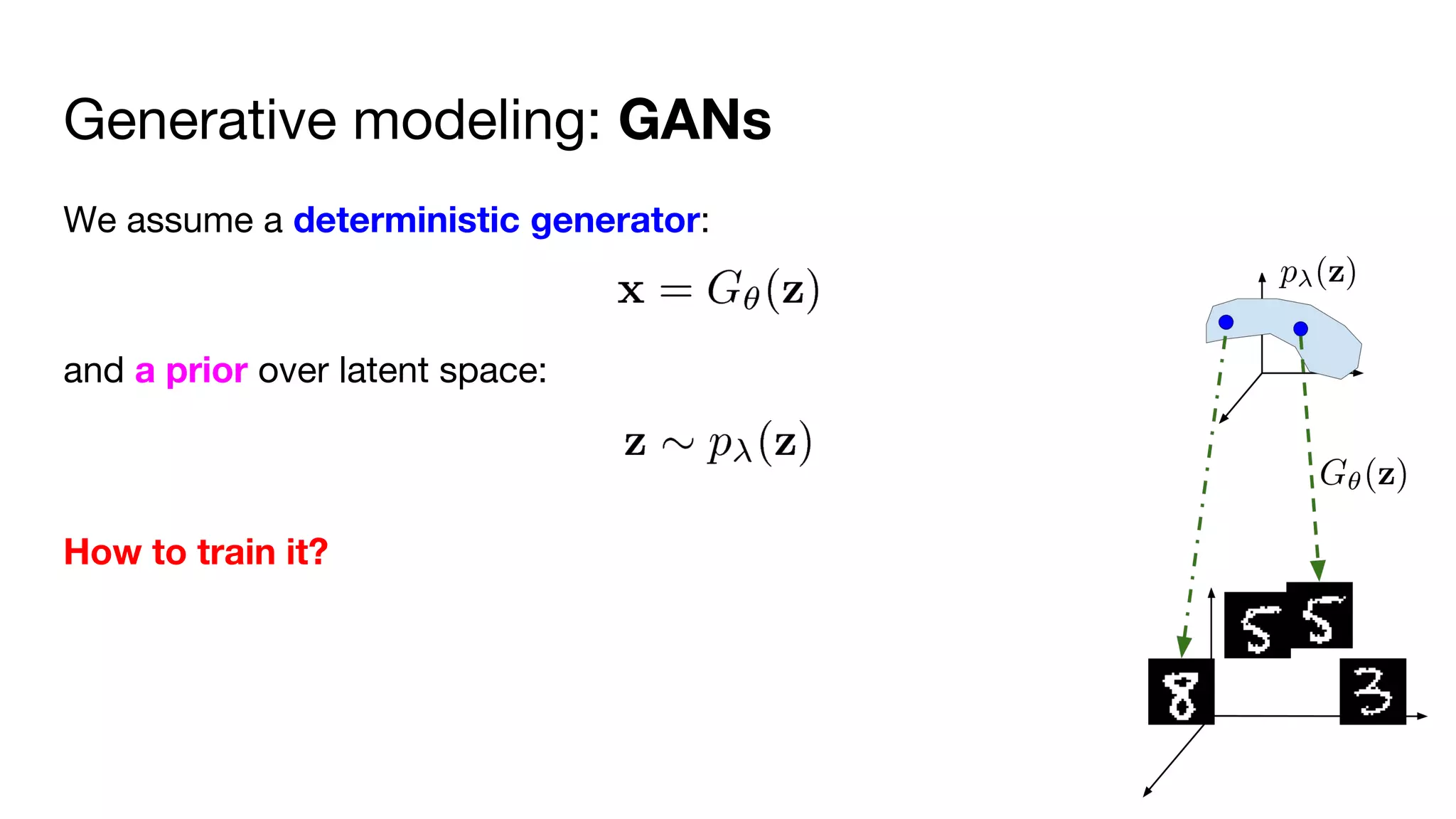

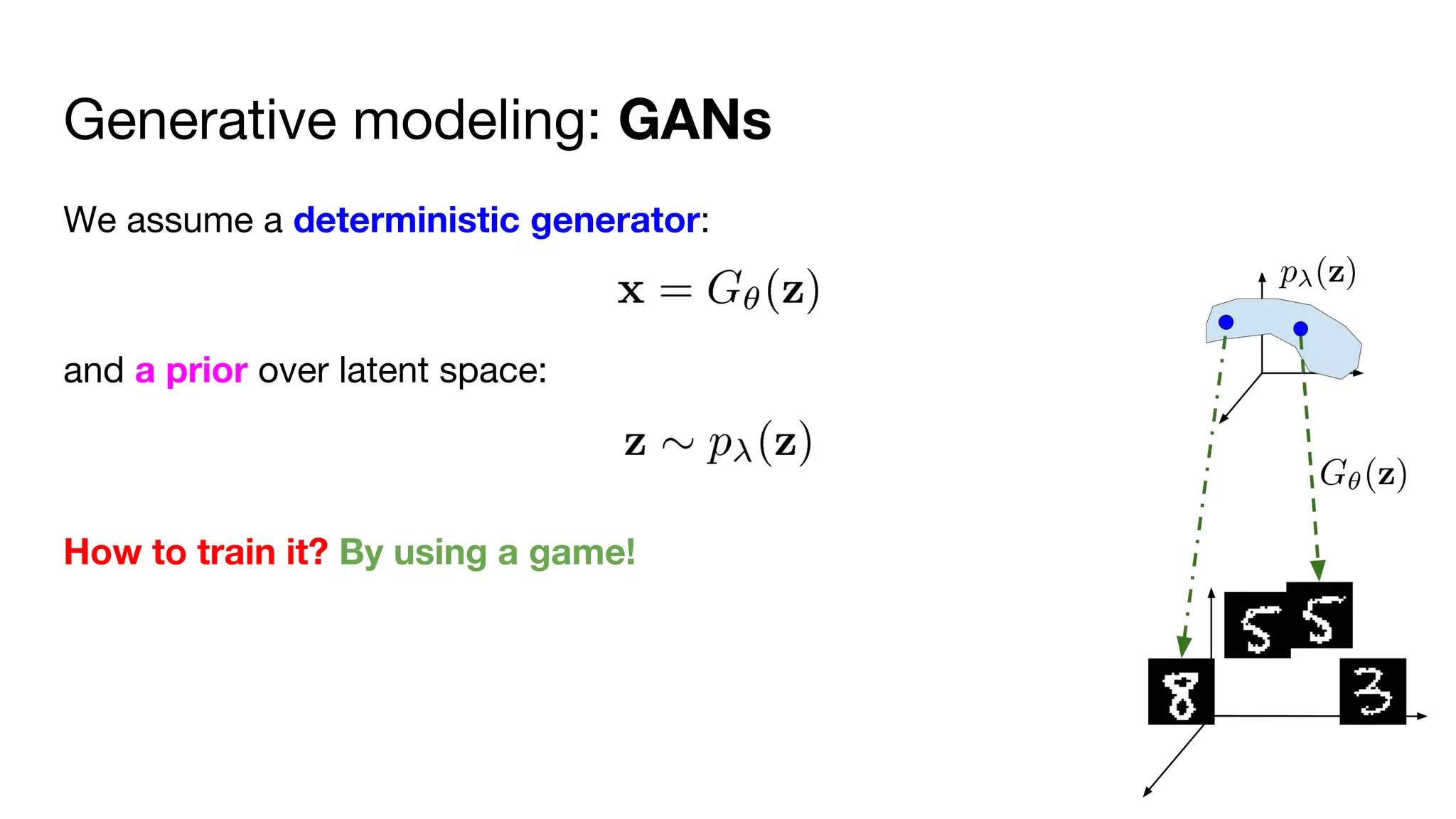

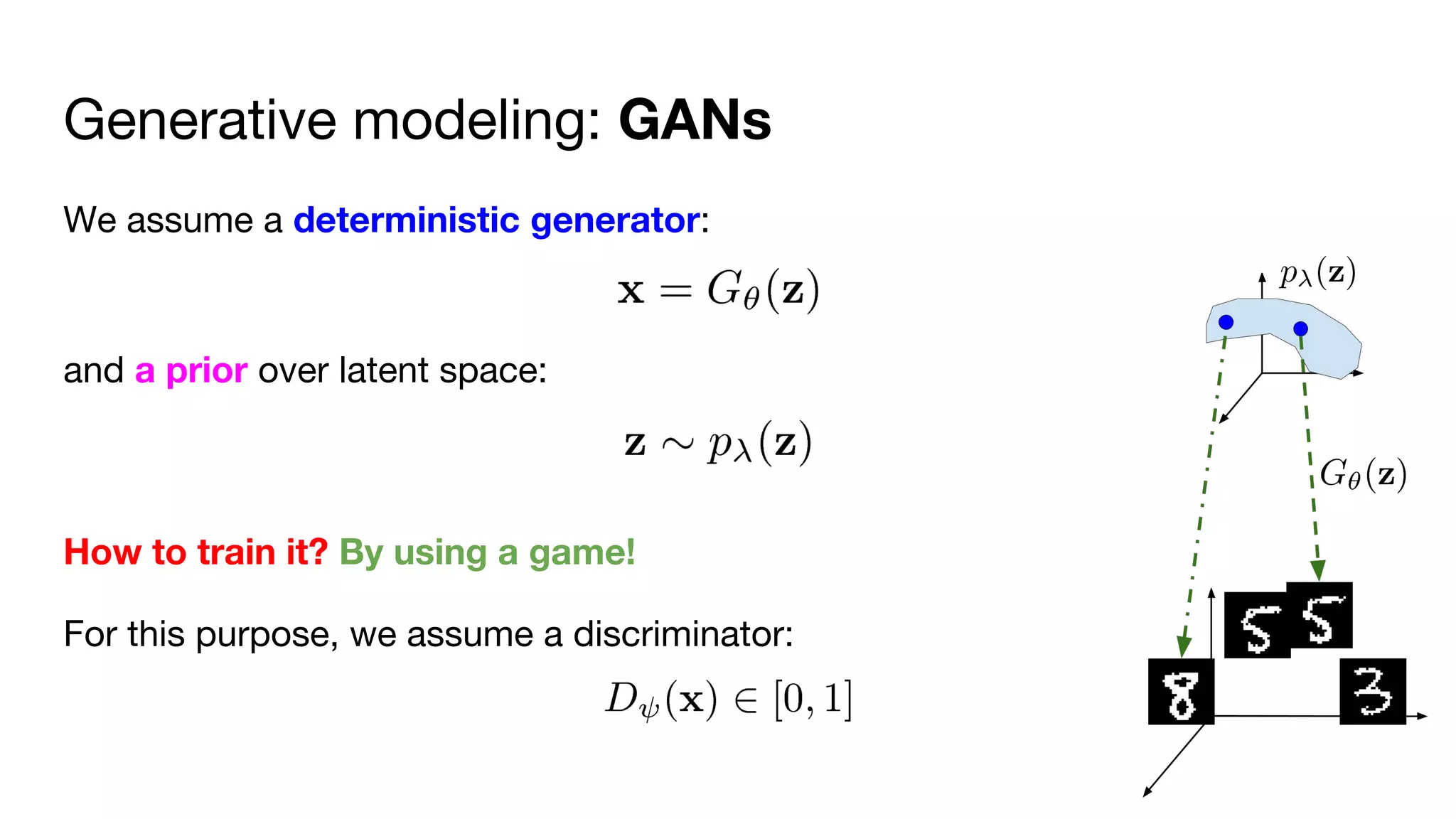

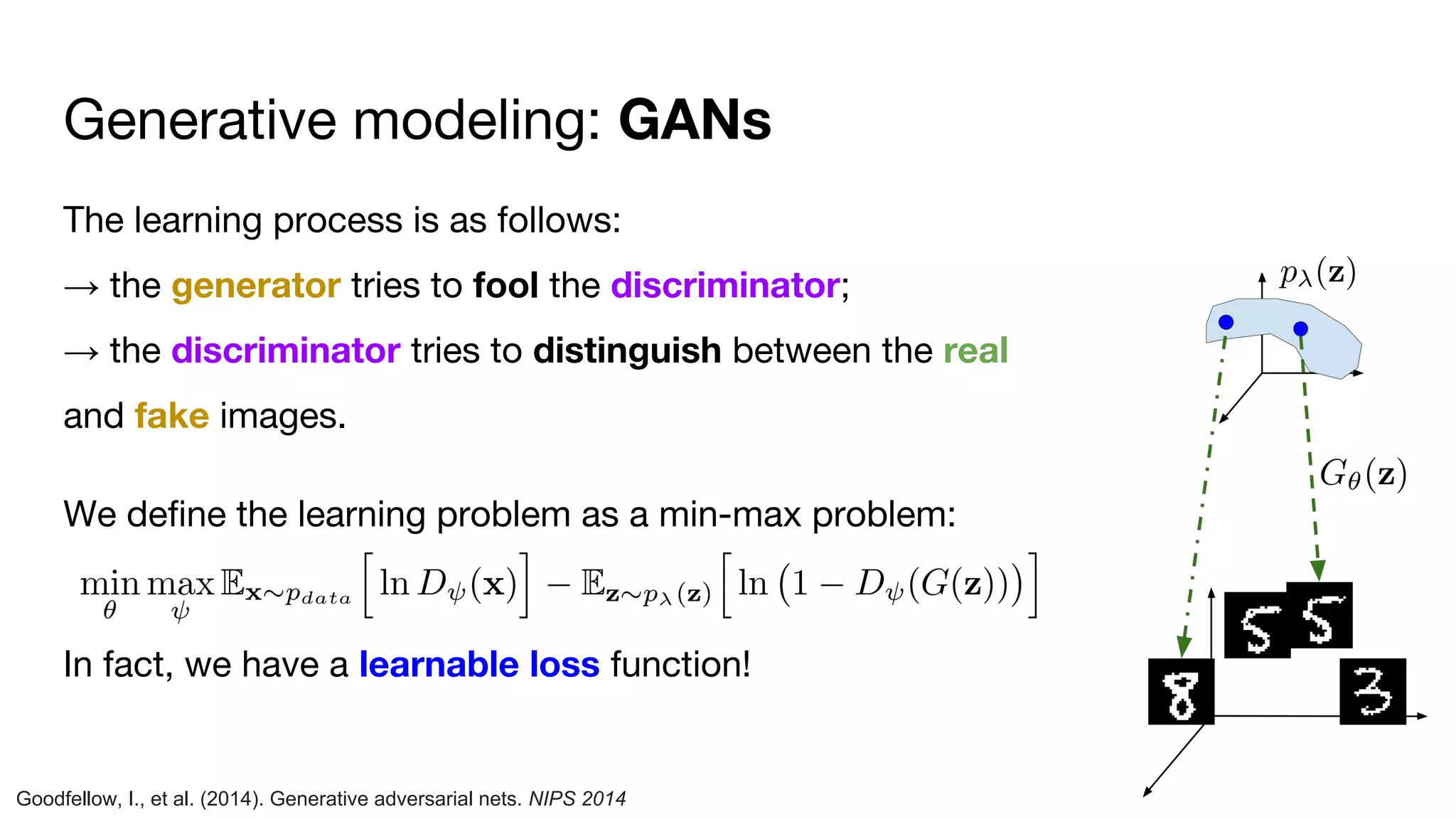

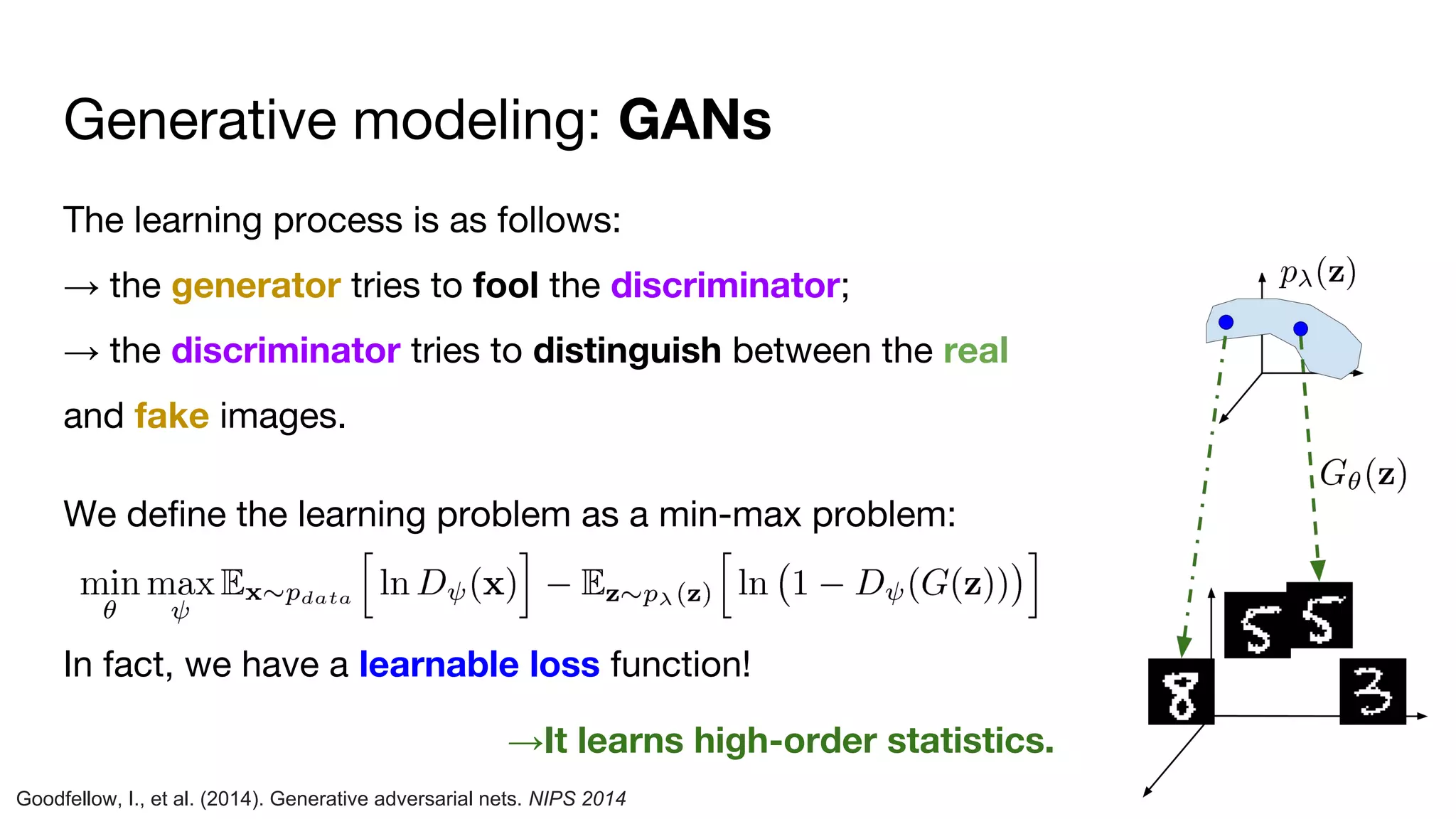

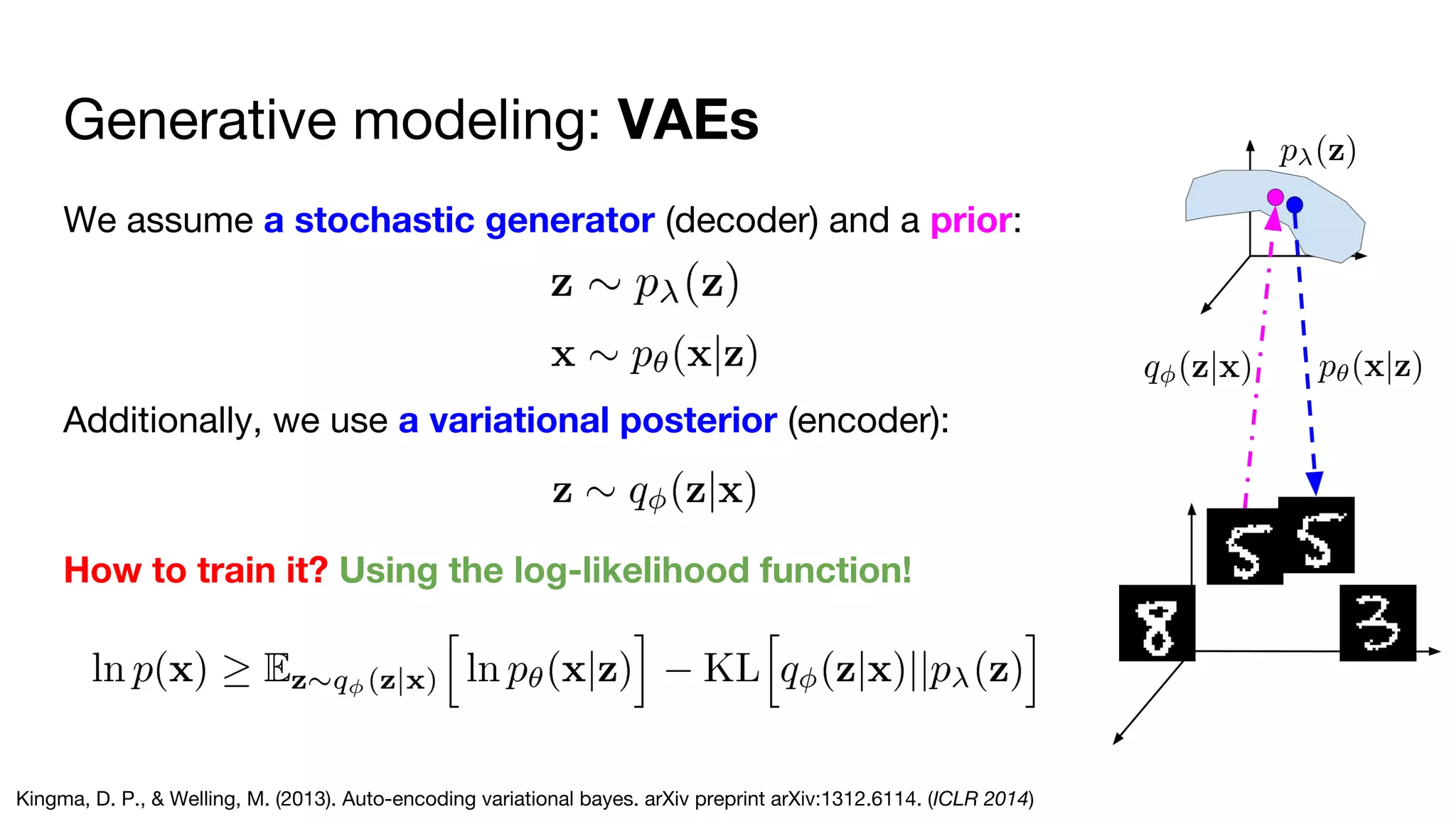

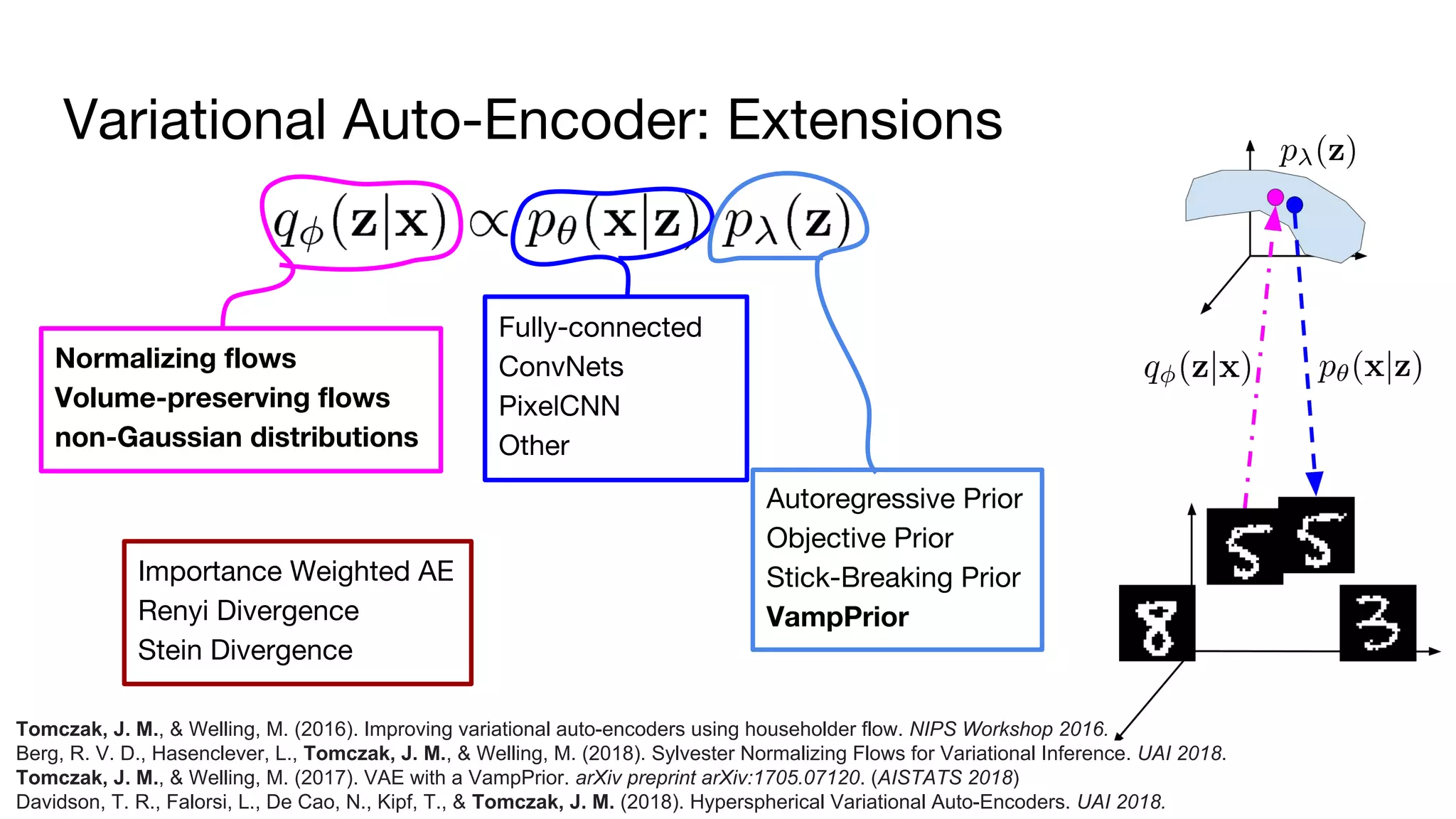

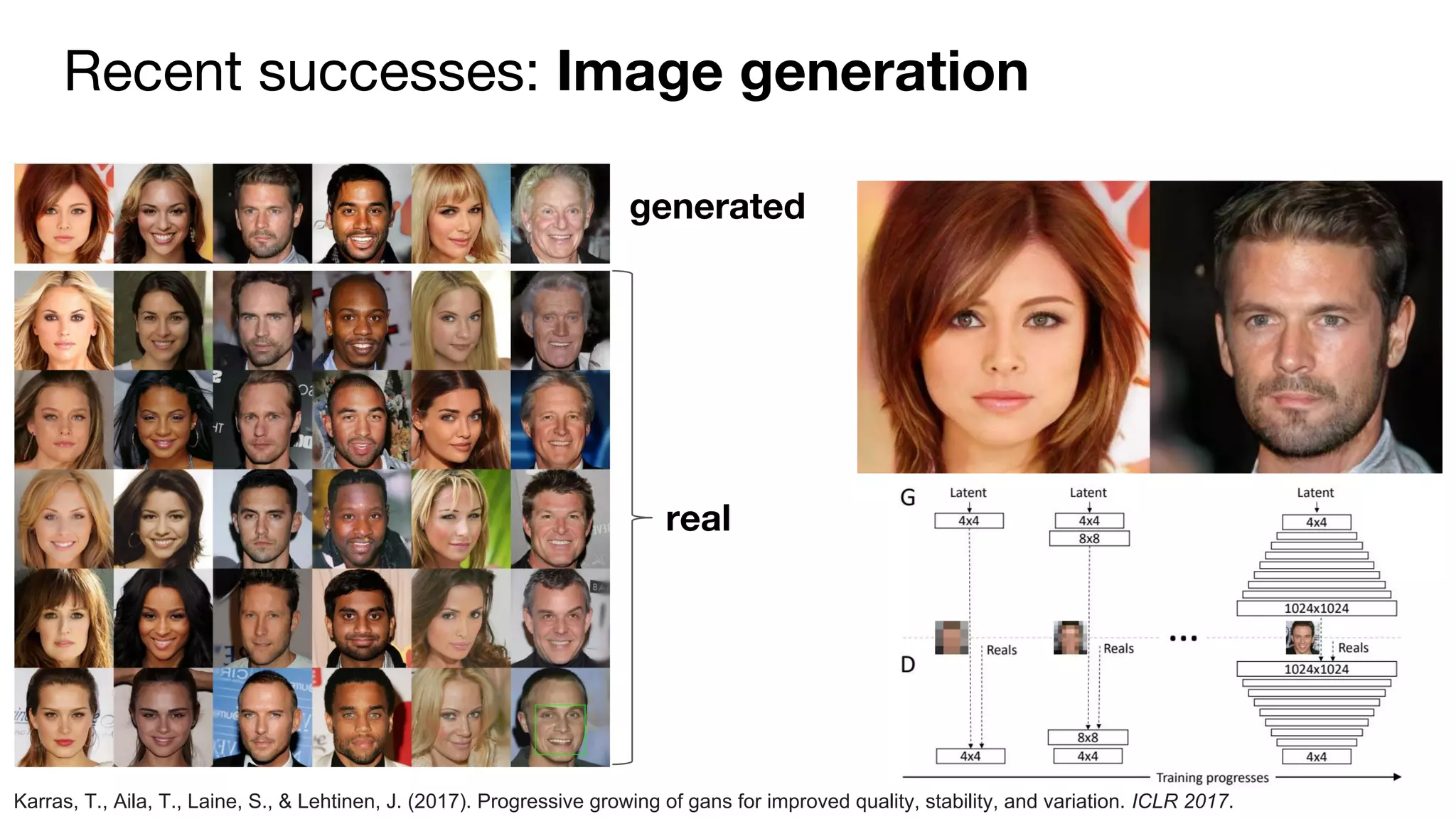

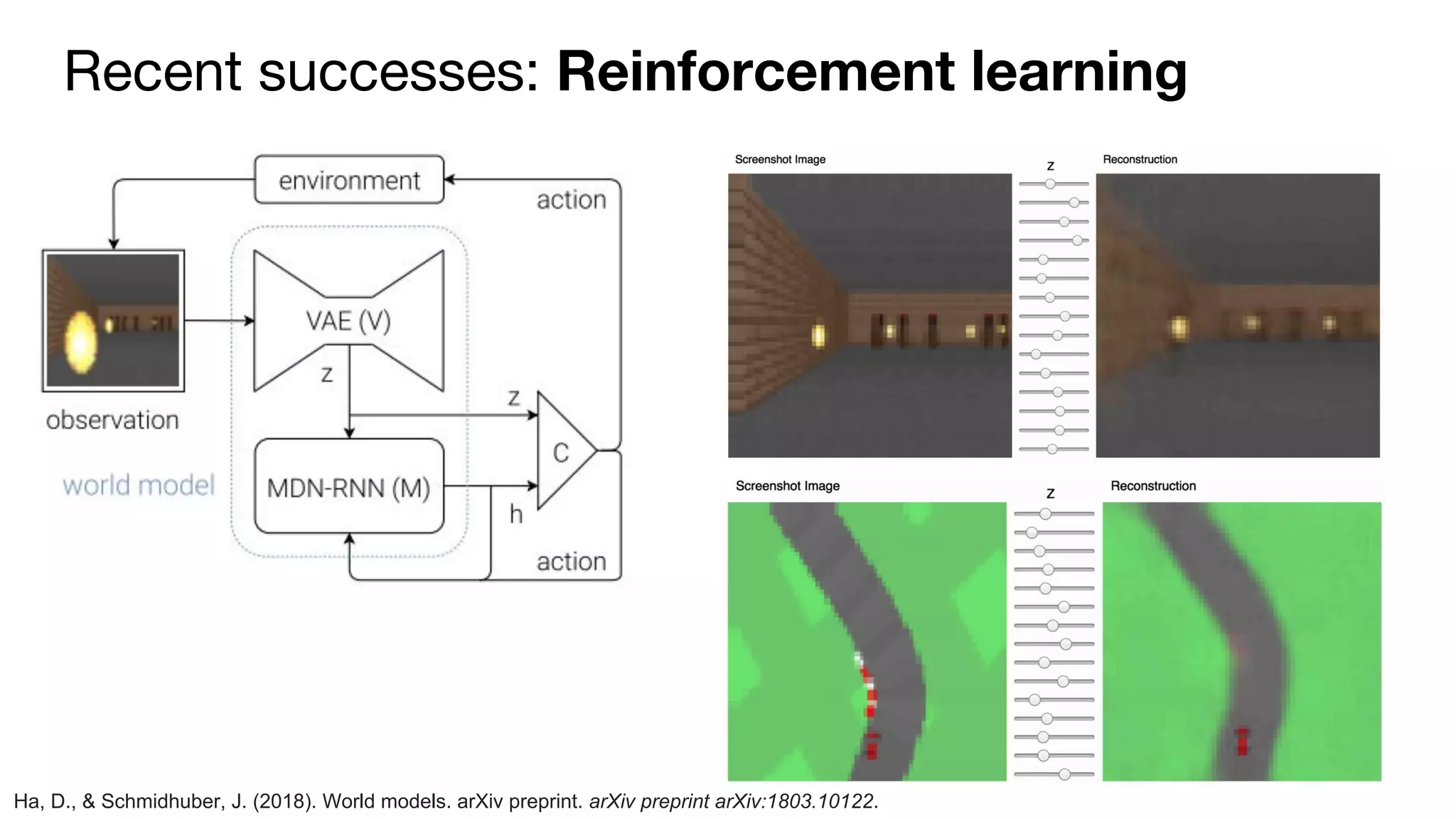

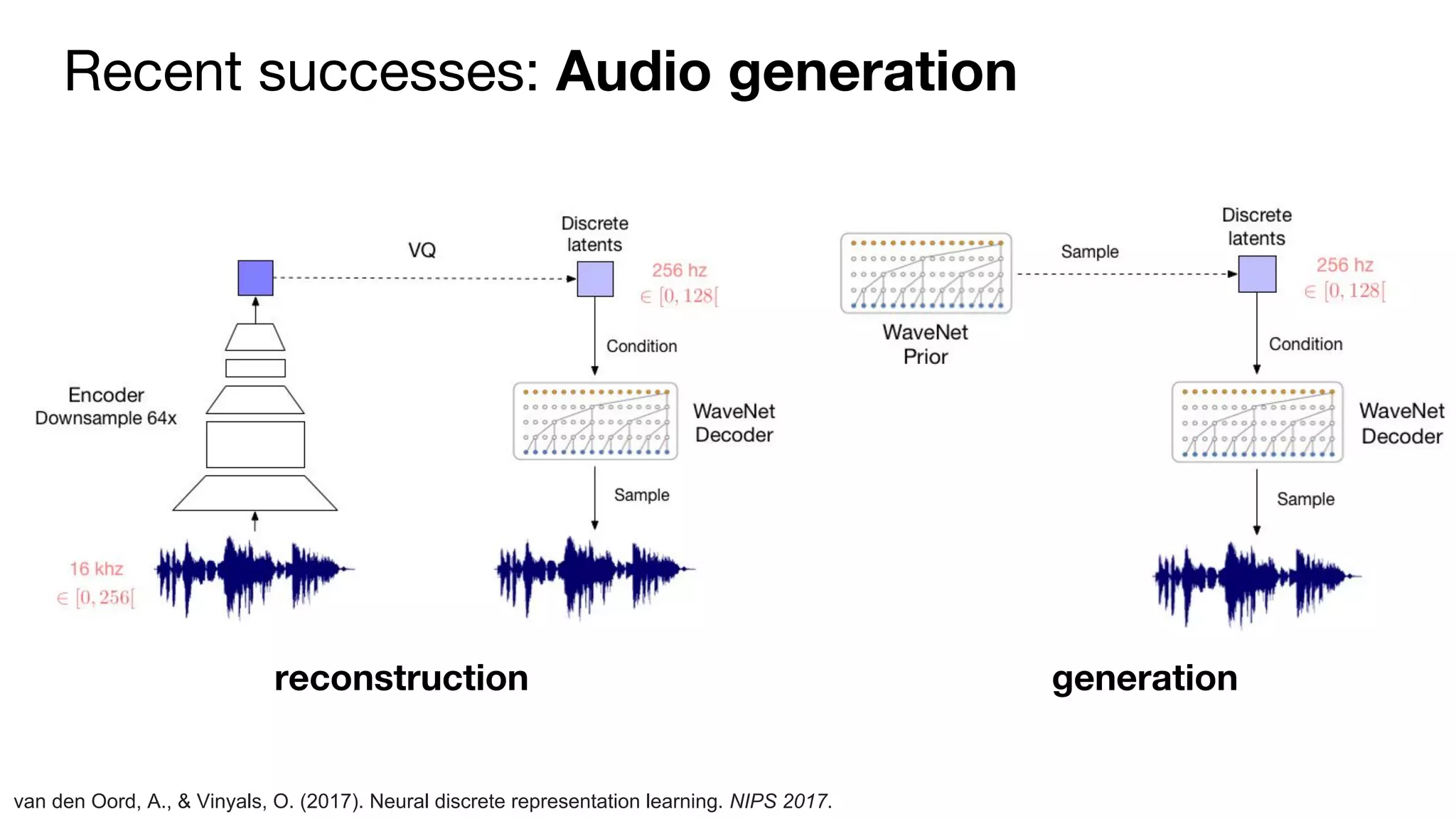

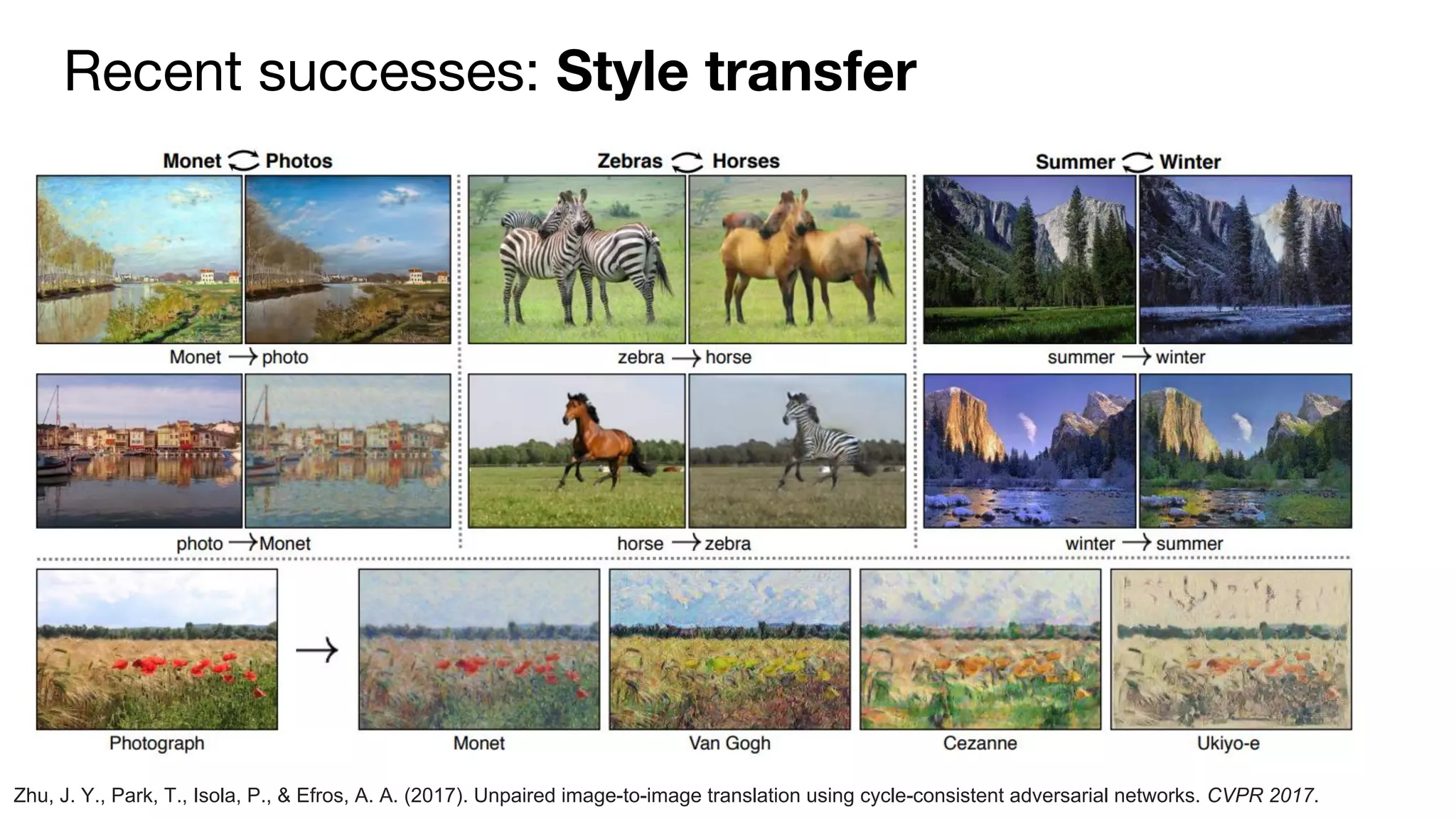

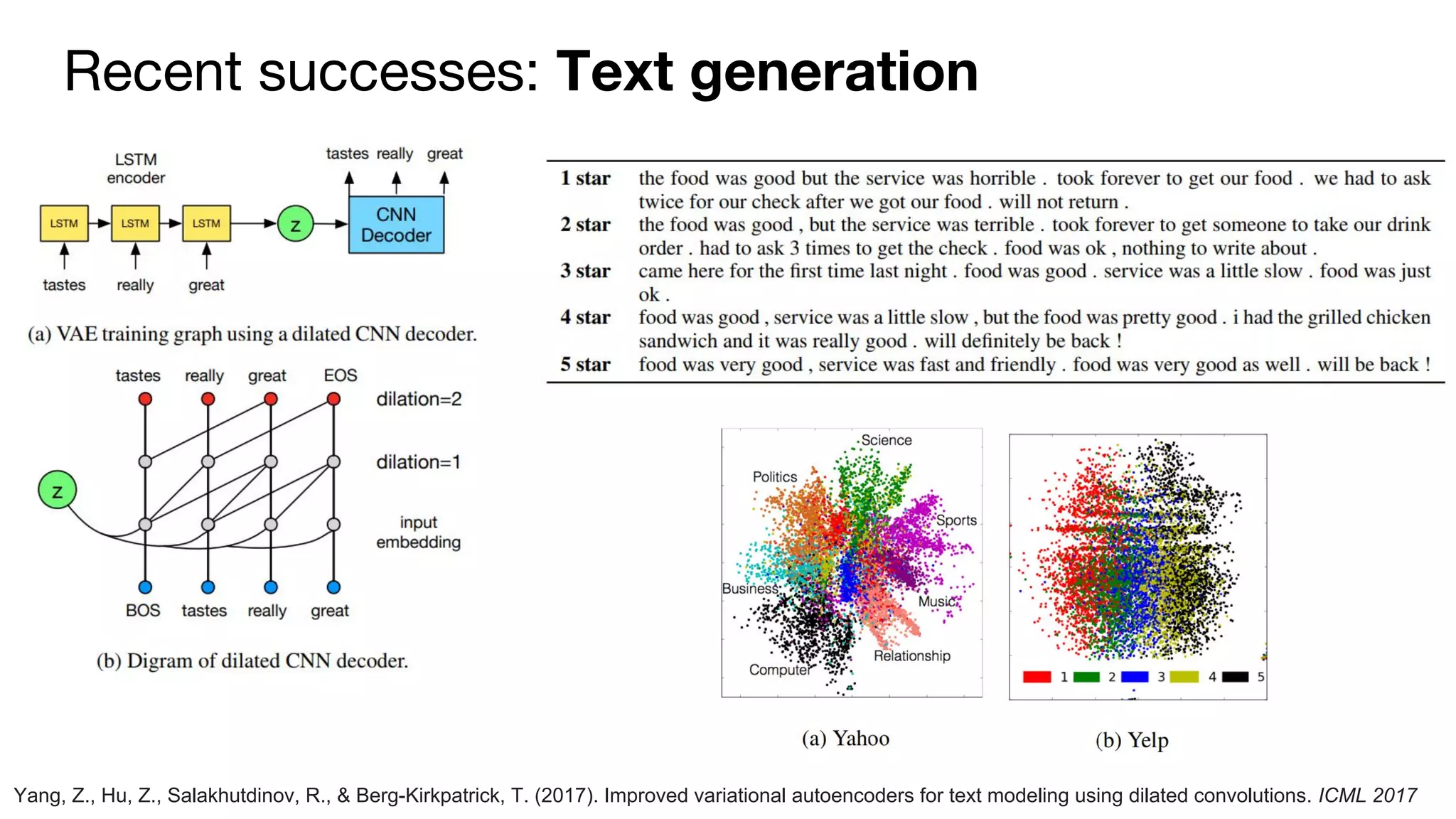

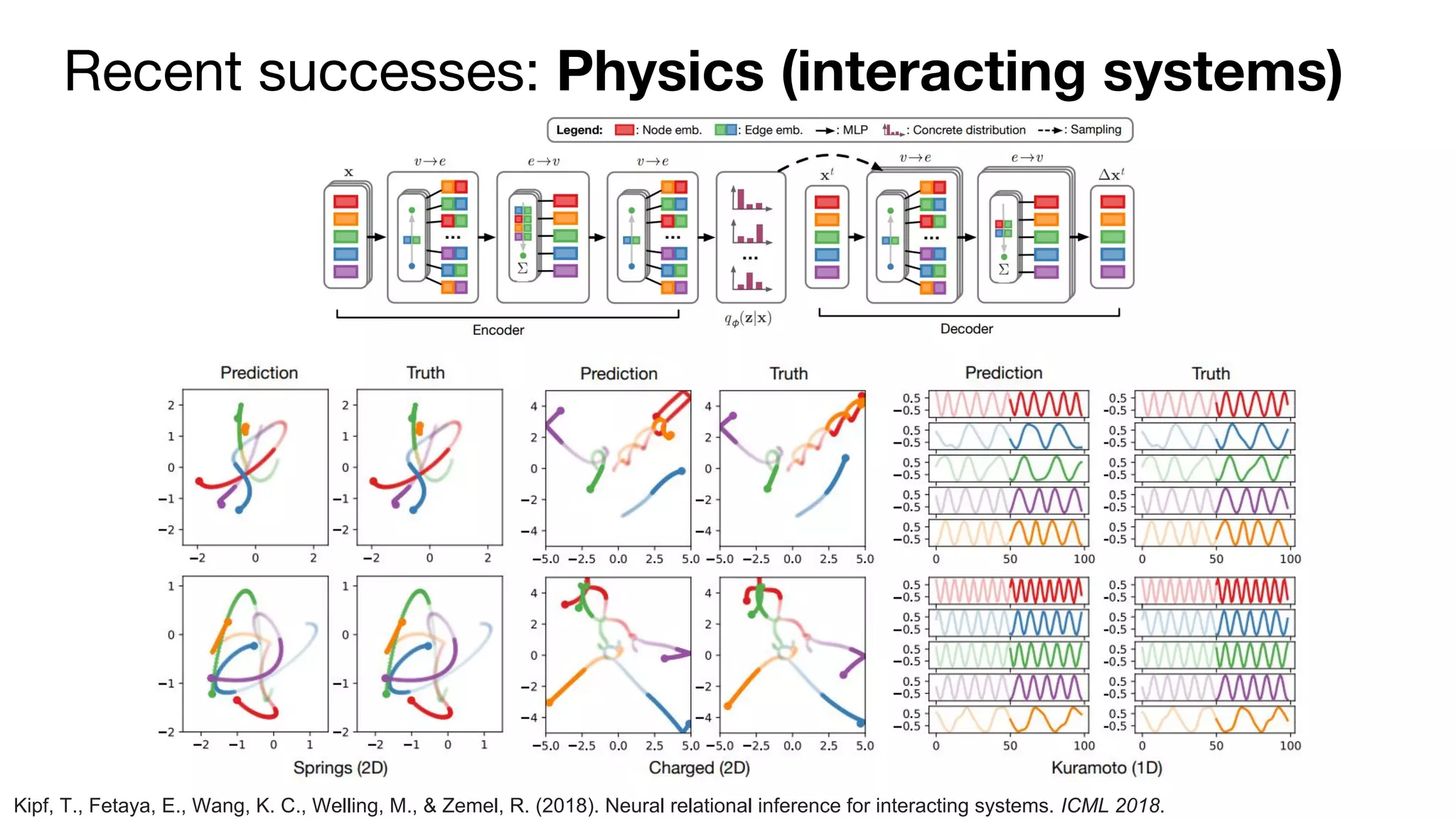

The document surveys deep generative models and their recent success. It presents generative adversarial networks (GANs) and variational autoencoders (VAEs) as the two main approaches to generative modeling. GANs use a game-theoretic framework in which a generator network is trained against a discriminator until its samples become hard to tell apart from real data, while VAEs pair a generator (decoder) with an encoder and train both by maximizing a variational lower bound on the data likelihood. The document outlines applications of generative models across many domains, including image generation, reinforcement learning, and audio generation. It concludes that generative modeling is key to achieving artificial intelligence, and it lists directions for future work: better generative models for video, better priors and decoders, and geometric methods.
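The two training objectives mentioned above can be made concrete with a small numerical sketch. The summary does not spell out the formulas, so this assumes the standard formulations: the minimax GAN value V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))], and a VAE lower bound (ELBO) of the form log p(x|z) - KL(q(z|x) || p(z)) with a diagonal Gaussian encoder and a standard normal prior. The function names `gan_value`, `gaussian_kl`, and `elbo` are illustrative, not taken from the slides.

```python
import numpy as np

def gan_value(d_real, d_fake):
    """Minimax GAN value V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))].

    d_real: discriminator outputs in (0, 1) on real samples.
    d_fake: discriminator outputs in (0, 1) on generated samples.
    The discriminator tries to maximize this value; the generator
    tries to minimize it.
    """
    d_real = np.asarray(d_real, dtype=float)
    d_fake = np.asarray(d_fake, dtype=float)
    return np.mean(np.log(d_real)) + np.mean(np.log1p(-d_fake))

def gaussian_kl(mu, log_var):
    """KL(N(mu, diag(exp(log_var))) || N(0, I)), summed over latent dims.

    Assumes a diagonal Gaussian encoder and a standard normal prior,
    for which the KL term has this closed form.
    """
    mu = np.asarray(mu, dtype=float)
    log_var = np.asarray(log_var, dtype=float)
    return 0.5 * np.sum(np.exp(log_var) + mu**2 - 1.0 - log_var)

def elbo(recon_log_lik, mu, log_var):
    """Single-sample ELBO estimate: log p(x|z) - KL(q(z|x) || p(z)).

    recon_log_lik is the decoder's log-likelihood of x given a latent z
    sampled from the encoder; it is passed in as a number here to keep
    the sketch independent of any particular decoder.
    """
    return recon_log_lik - gaussian_kl(mu, log_var)
```

When the discriminator cannot distinguish real from generated samples and outputs 0.5 everywhere, `gan_value` equals -log 4, which is the value of the game at its equilibrium. When the encoder output matches the prior exactly (`mu = 0`, `log_var = 0`), the KL term vanishes and the ELBO reduces to the reconstruction term alone.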