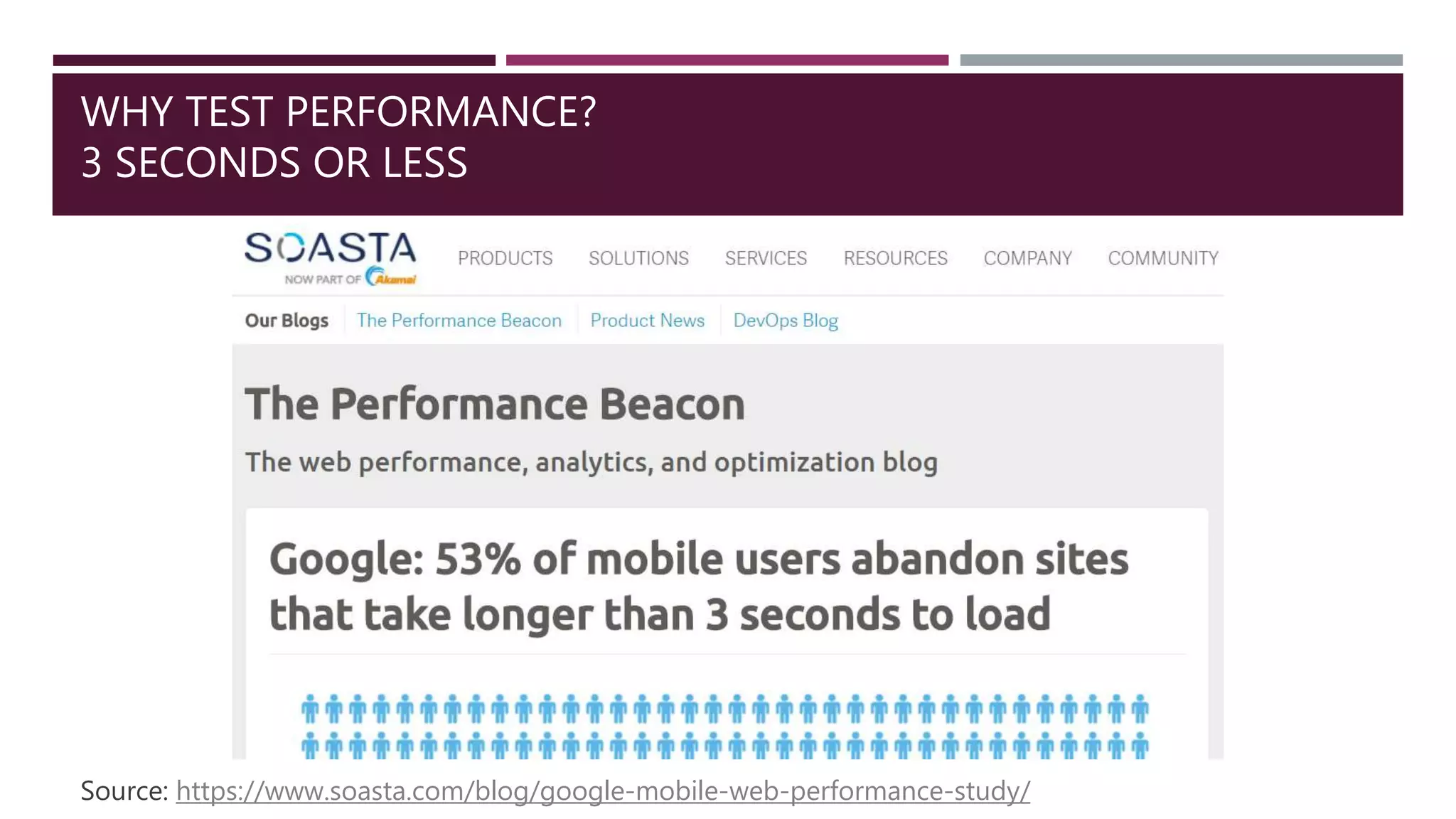

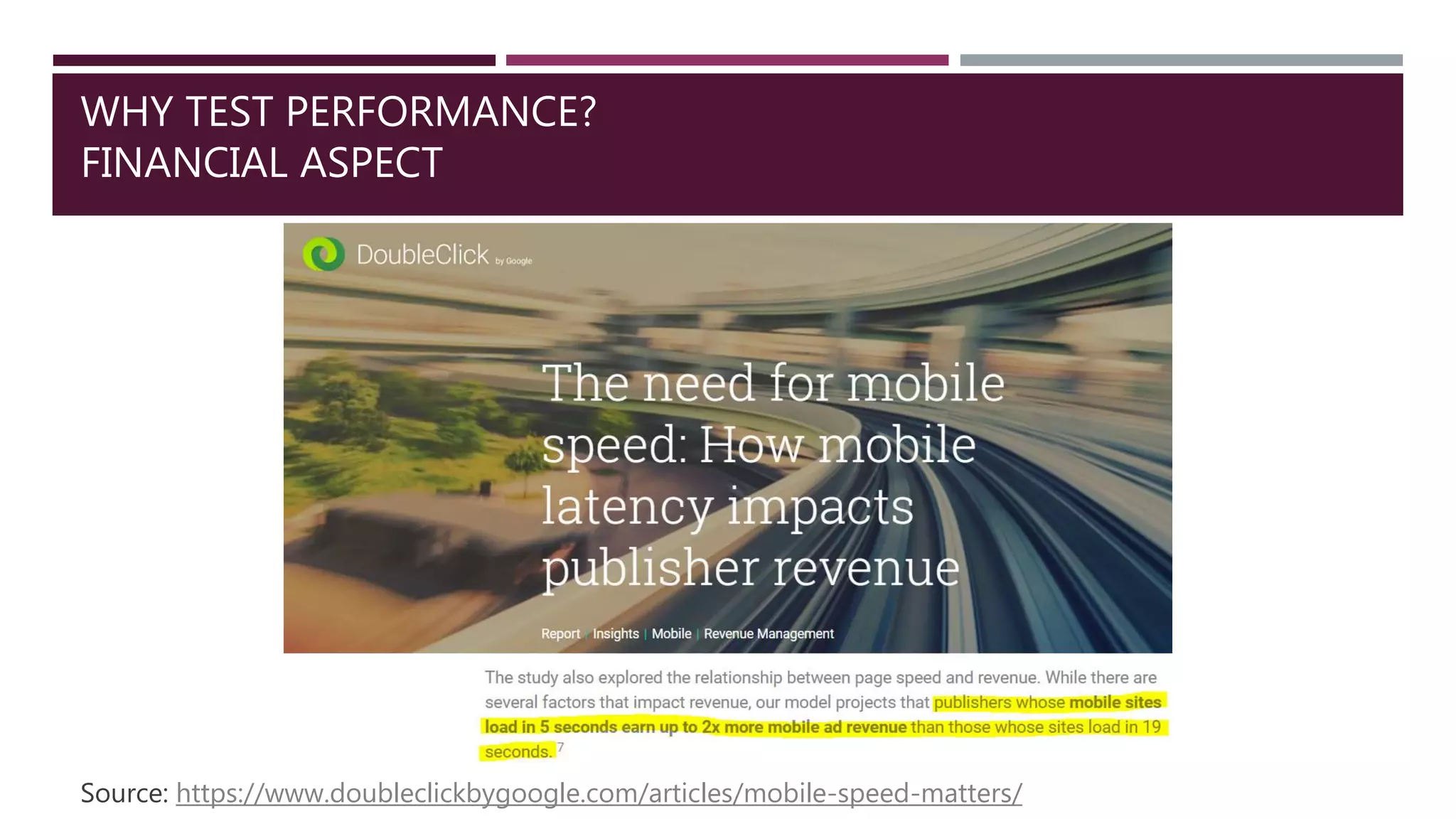

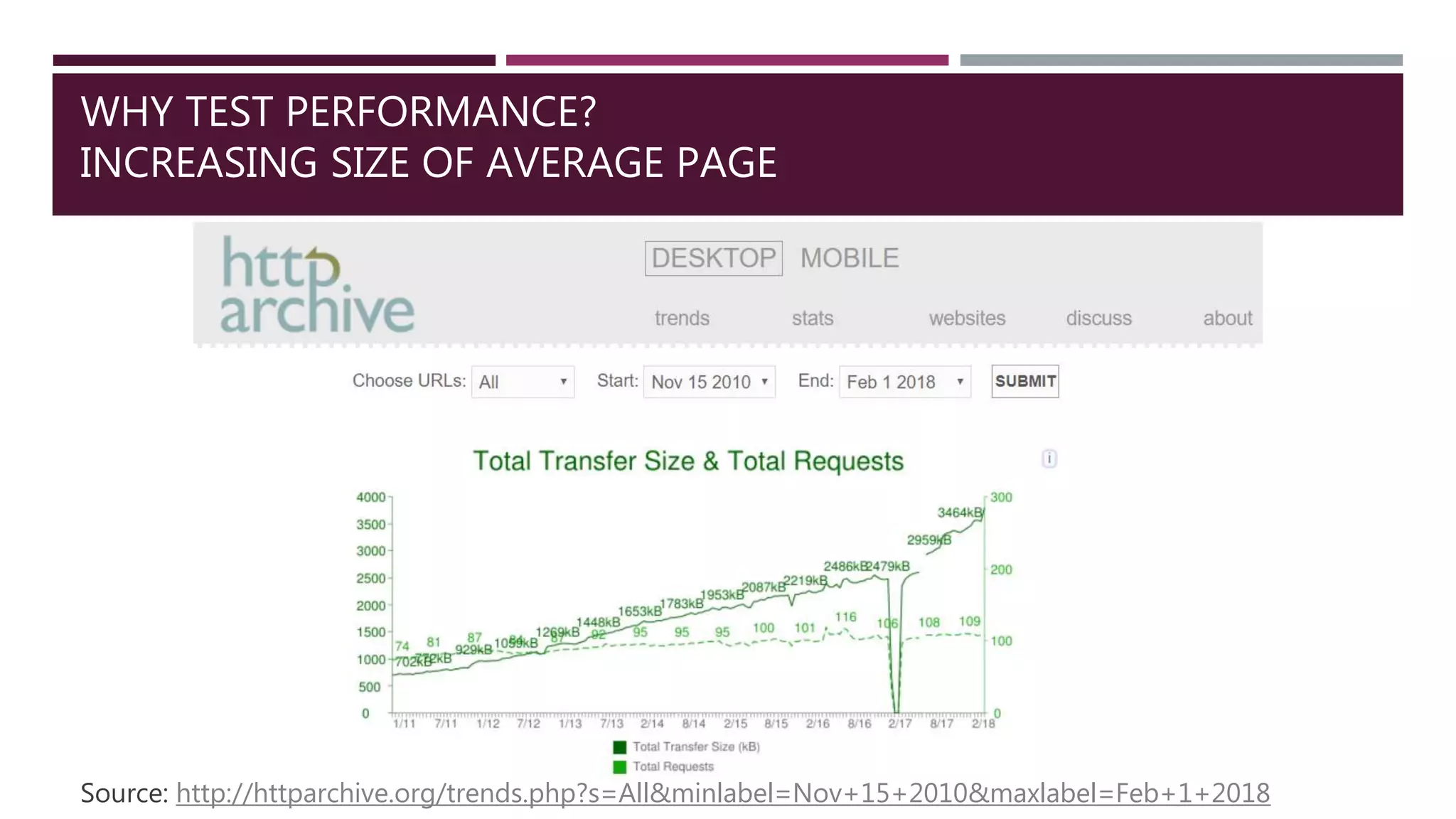

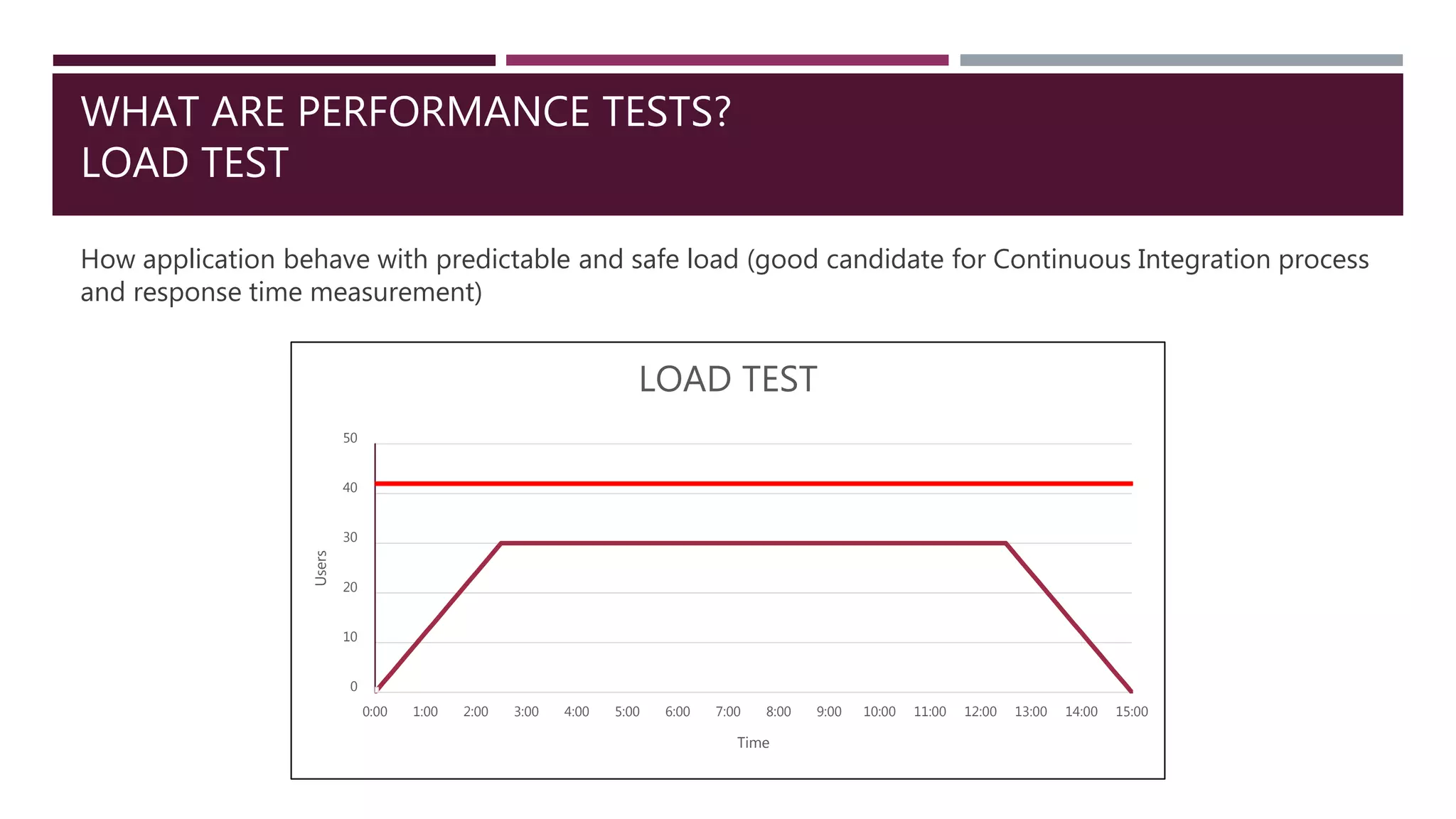

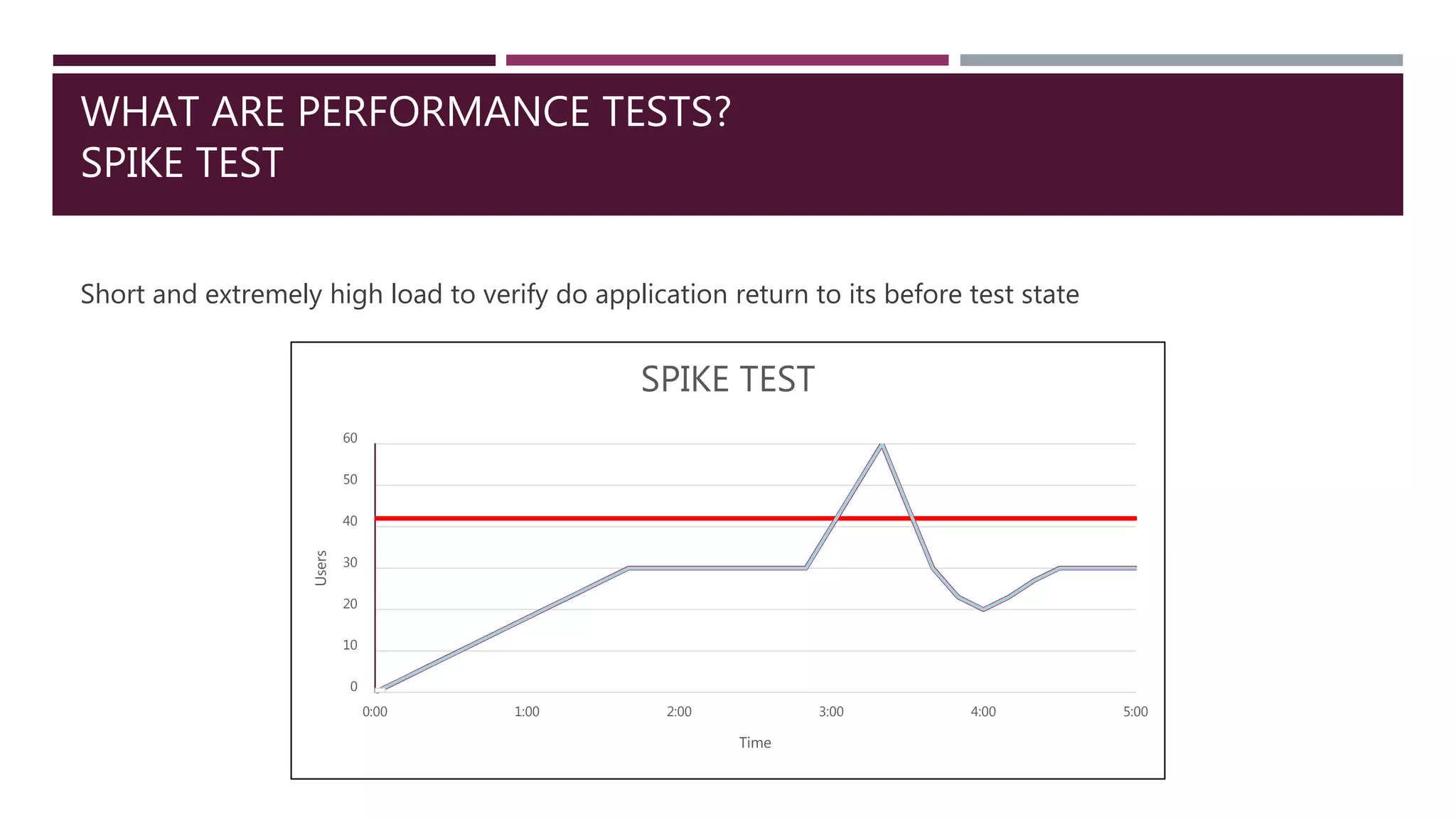

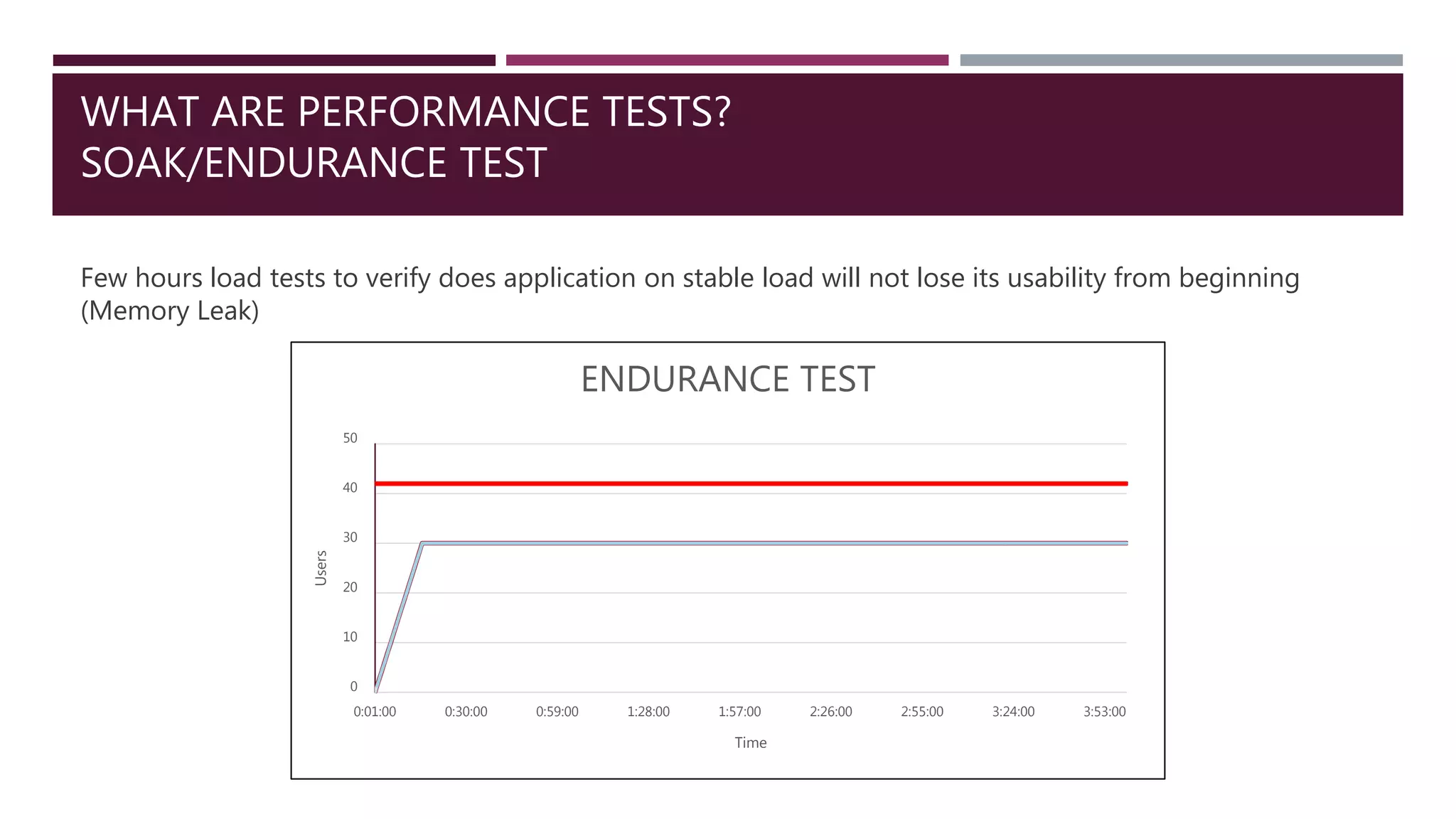

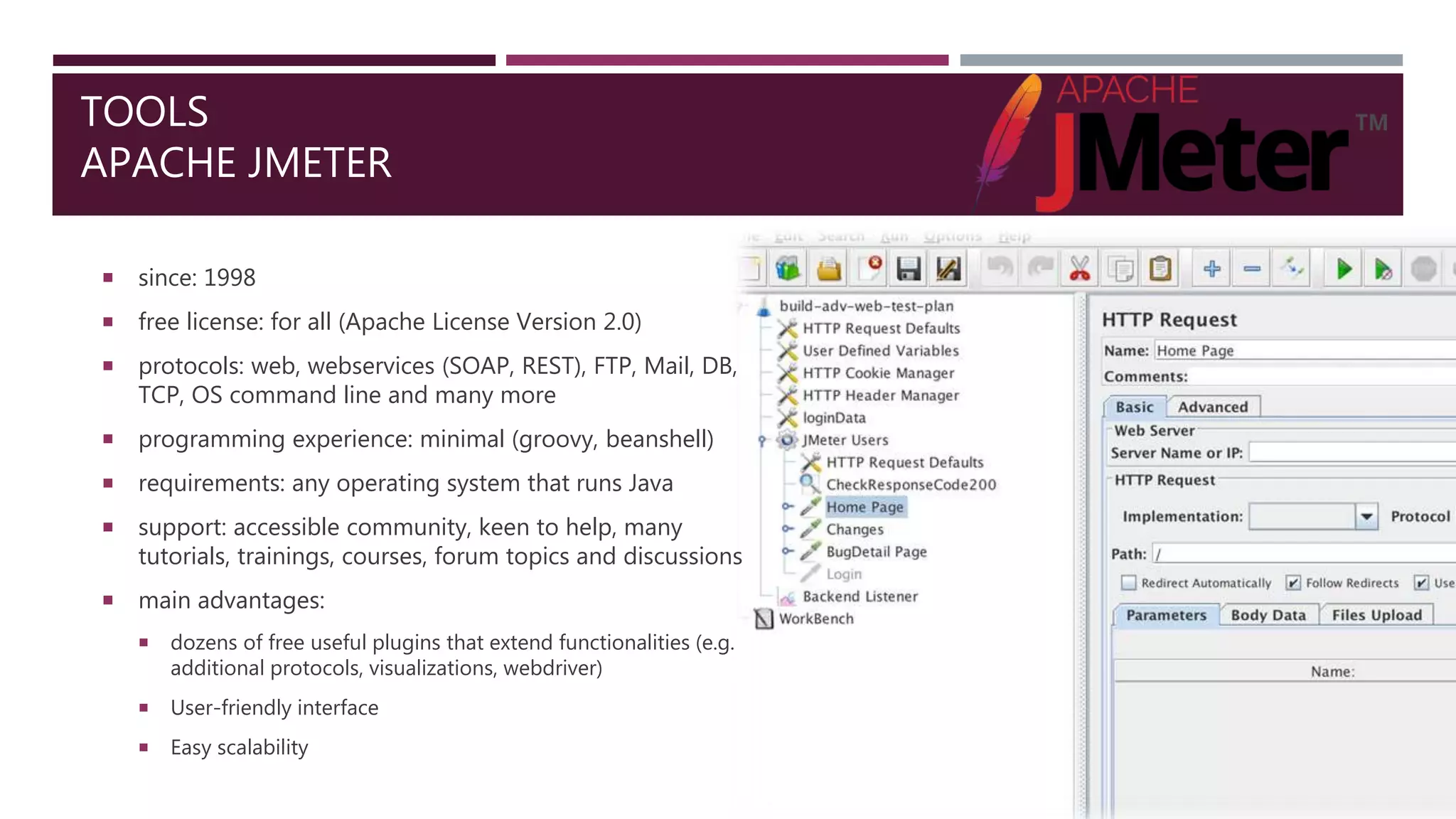

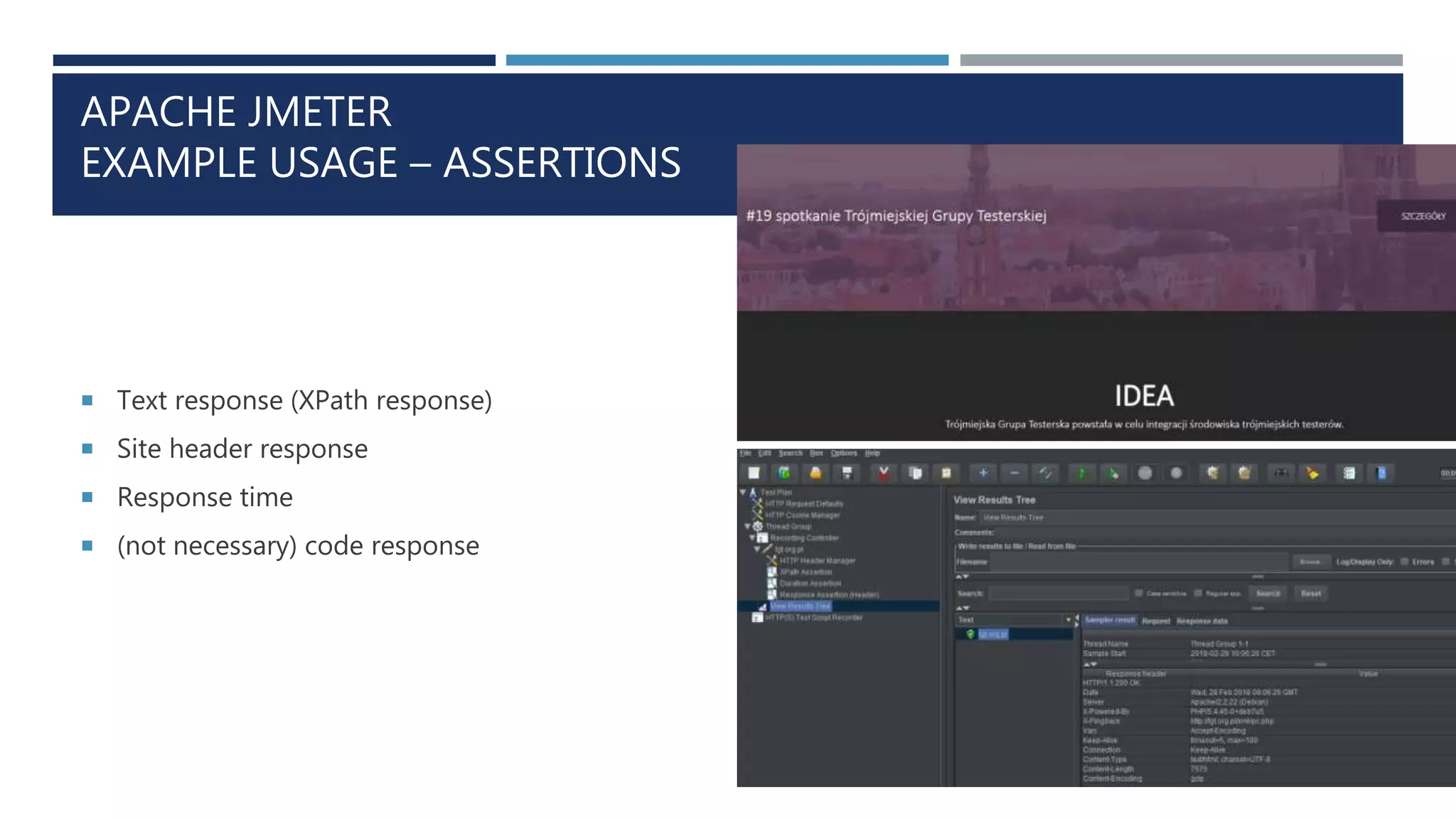

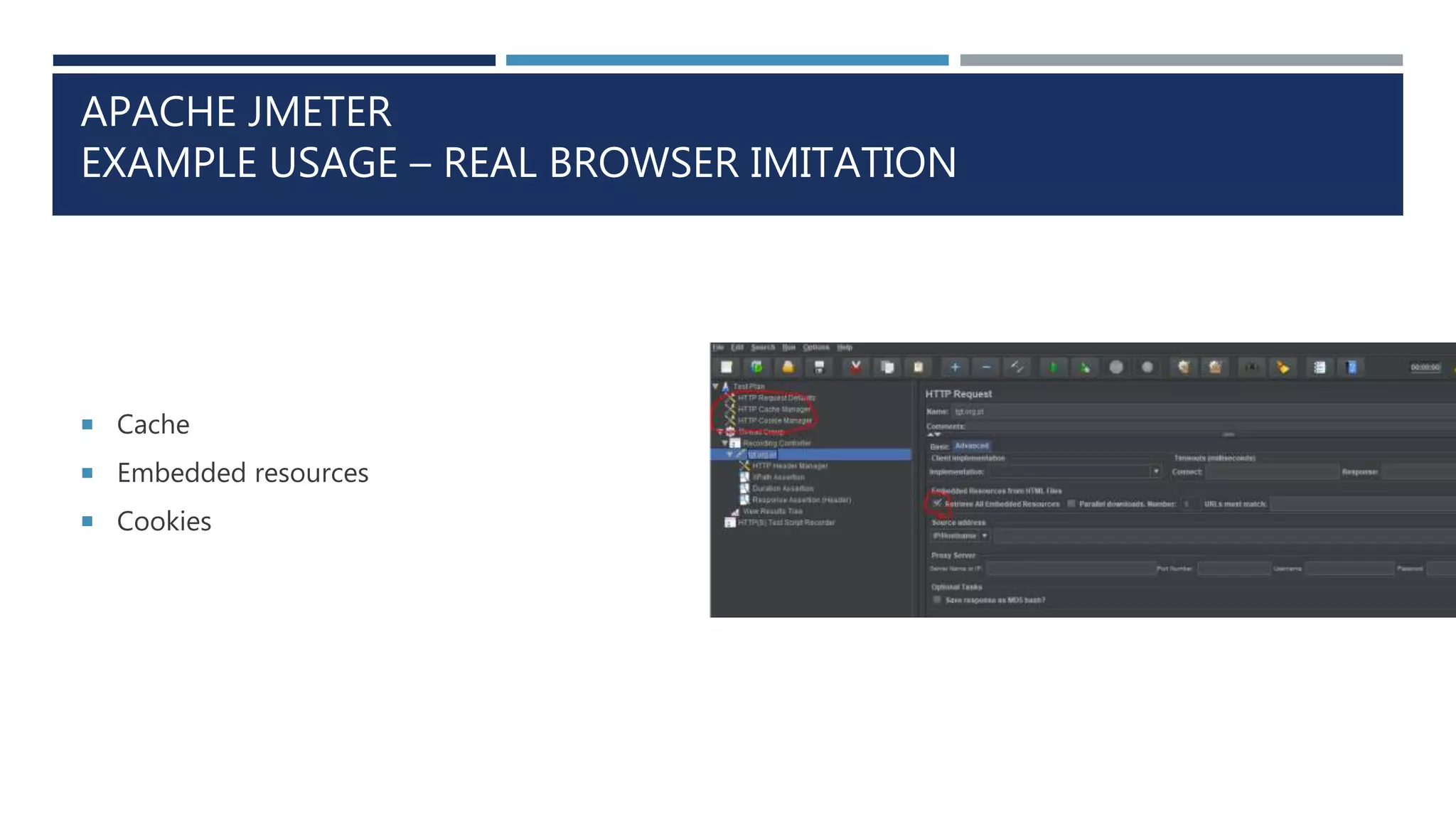

This document discusses why web applications and services should be performance tested. Performance tests help locate issues before public release, establish the limits of a system, and find bottlenecks. The document then defines the main types of performance tests: load, stress, spike, and endurance tests. It names tools for performance testing, such as Apache JMeter, and explains how to analyze test results. Overall, it argues that software should be performance tested regularly to ensure the expected quality and response times.
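The four test types differ mainly in how load is applied over time. The sketch below models each one as a function from elapsed seconds to a target number of concurrent virtual users. The function names, parameters, and every number in it are illustrative assumptions rather than values taken from the slides. It is meant only to make the shape of each profile concrete.

```python
from typing import Callable, List

# A profile maps elapsed time in seconds to a target count of concurrent virtual users.
# All default values below are hypothetical, chosen only to illustrate each shape.
Profile = Callable[[int], int]


def load_profile(t: int, users: int = 100, ramp: int = 60) -> int:
    """Load test: ramp linearly up to the expected peak, then hold it."""
    return min(users, users * t // ramp)


def stress_profile(t: int, step_users: int = 50, step_len: int = 120) -> int:
    """Stress test: add users in fixed steps to push past the expected peak."""
    return step_users * (t // step_len + 1)


def spike_profile(t: int, base: int = 20, peak: int = 500,
                  start: int = 300, length: int = 60) -> int:
    """Spike test: a steady baseline interrupted by a sudden, short burst."""
    return peak if start <= t < start + length else base


def endurance_profile(t: int, users: int = 80) -> int:
    """Endurance (soak) test: a moderate, constant load held for a long time."""
    return users


def sample(profile: Profile, duration: int, every: int) -> List[int]:
    """Return the target user count at every `every` seconds from 0 to `duration`."""
    return [profile(t) for t in range(0, duration + 1, every)]


if __name__ == "__main__":
    profiles = [
        ("load", load_profile),
        ("stress", stress_profile),
        ("spike", spike_profile),
        ("endurance", endurance_profile),
    ]
    for name, profile in profiles:
        print(f"{name:>9}: {sample(profile, 600, 60)}")
```

In Apache JMeter, a shape like `load_profile` roughly corresponds to a Thread Group's number of threads and ramp-up period. Stepped or spiking shapes usually need several thread groups or a plugin, so treat this mapping as a starting point rather than a recipe.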