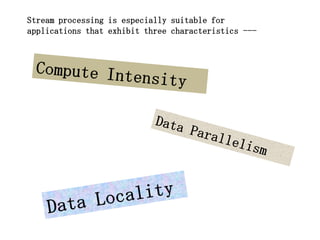

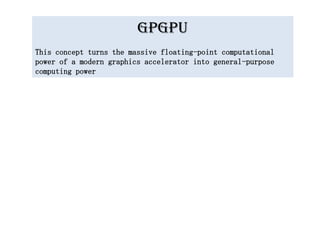

Stream processing is a computer programming paradigm that allows for parallel processing of data streams. It involves applying the same kernel function to each element in a stream. Stream processing is suitable for applications involving large datasets where each data element can be processed independently, such as audio, video, and signal processing. Modern GPUs use a stream processing approach to achieve high performance by running kernels on multiple data elements simultaneously.
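
As a minimal illustration of the paradigm, the Python sketch below treats a list of audio samples as a stream and applies one kernel to every element. The kernel name `gain_kernel`, the gain of 2.0 and the clamp to [-1, 1] are illustrative assumptions, not taken from any particular system. Each output depends only on its own input, so the serial run and the concurrent run must produce the same stream.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence


def gain_kernel(sample: float) -> float:
    """Hypothetical kernel: double one audio sample and clamp it to [-1.0, 1.0]."""
    return max(-1.0, min(1.0, 2.0 * sample))


def run_serial(kernel: Callable[[float], float], stream: Sequence[float]) -> List[float]:
    """Apply the kernel to each stream element, one after another."""
    return [kernel(x) for x in stream]


def run_parallel(kernel: Callable[[float], float], stream: Sequence[float],
                 workers: int = 4) -> List[float]:
    """Apply the kernel to stream elements concurrently.

    This is valid only because the kernel keeps no state between elements.
    CPython threads do not speed up pure-Python arithmetic (the GIL); a GPU or
    a process pool would supply real parallelism. The point is that the result
    does not depend on the order in which elements are processed.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Executor.map returns results in input order.
        return list(pool.map(kernel, stream))


if __name__ == "__main__":
    samples = [0.1, -0.3, 0.6, -0.7]
    print(run_serial(gain_kernel, samples))    # [0.2, -0.6, 1.0, -1.0]
    print(run_parallel(gain_kernel, samples))  # same values
```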

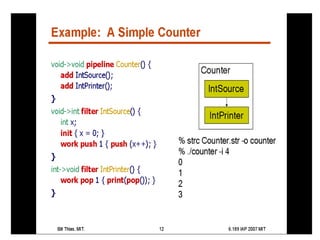

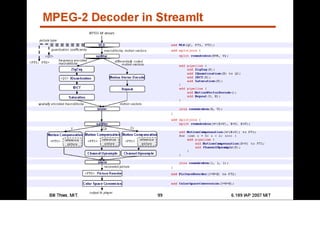

![A stream processing language for programs based on streams of data, e.g., audio, video, DSP, networking, and cryptographic processing kernels; HDTV editing, radar tracking, microphone arrays, cellphone base stations, graphics [Thies 2002]](https://image.slidesharecdn.com/amaralppt-120316105646-phpapp02/85/Stream-Processing-16-320.jpg)
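
The language cited as [Thies 2002] (StreamIt) builds programs from filters connected into pipelines. Each filter has static data rates: how many items it peeks at, pops and pushes per firing. The Python sketch below mimics only that structure, and over a finite list rather than an unbounded stream. `Filter`, `run_filter`, `run_pipeline` and the moving-average and gain filters are hypothetical names, not StreamIt syntax.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence


@dataclass
class Filter:
    """Hypothetical StreamIt-style filter with static data rates."""
    peek: int  # items inspected per firing (peek >= pop)
    pop: int   # items consumed per firing
    work: Callable[[Sequence[float]], List[float]]  # peek window -> pushed items


def run_filter(f: Filter, tape: List[float]) -> List[float]:
    """Fire the filter while a full peek window of input is available."""
    out: List[float] = []
    pos = 0
    while pos + f.peek <= len(tape):
        out.extend(f.work(tape[pos:pos + f.peek]))
        pos += f.pop
    return out


def run_pipeline(filters: Sequence[Filter], tape: List[float]) -> List[float]:
    """Connect filters in series: each filter's output tape feeds the next."""
    for f in filters:
        tape = run_filter(f, tape)
    return tape


# Three-tap moving average: peeks 3 items, pops 1, pushes 1.
moving_avg = Filter(peek=3, pop=1, work=lambda w: [sum(w) / 3])
# Gain stage: peeks 1, pops 1, pushes 1.
gain = Filter(peek=1, pop=1, work=lambda w: [2 * w[0]])

if __name__ == "__main__":
    print(run_pipeline([moving_avg, gain], [3.0, 6.0, 9.0, 12.0]))  # [12.0, 18.0]
```

Declaring rates up front is what lets a stream compiler schedule and buffer filters statically. This sketch does not attempt any such scheduling.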

![A high-level, architecture-independent language for streaming applications: 1. improves programmer productivity (vs. Java, C); 2. offers scalable performance on multicores [Thies 2002]](https://image.slidesharecdn.com/amaralppt-120316105646-phpapp02/85/Stream-Processing-17-320.jpg)
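
One reason a stream language can scale across cores is that a stateless filter can be replicated. A round-robin splitter deals items to the copies, and a round-robin joiner interleaves their outputs back into the original order. StreamIt provides a split-join construct for this. The Python sketch below only illustrates the idea: `roundrobin_split`, `roundrobin_join`, `fissioned_square` and the thread pool are assumptions, not the StreamIt compiler's actual transformation.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import zip_longest
from typing import List, Sequence


def square(x: int) -> int:
    """Stateless filter: each output depends only on the current input item."""
    return x * x


def roundrobin_split(items: Sequence[int], n: int) -> List[List[int]]:
    """Deal items to n branches in turn: item i goes to branch i % n."""
    return [list(items[k::n]) for k in range(n)]


def roundrobin_join(branches: Sequence[Sequence[int]]) -> List[int]:
    """Interleave branch outputs in the order the splitter dealt them."""
    missing = object()  # sentinel for branches that ran out early
    joined: List[int] = []
    for group in zip_longest(*branches, fillvalue=missing):
        joined.extend(v for v in group if v is not missing)
    return joined


def run_replica(chunk: List[int]) -> List[int]:
    """One replica of the stateless filter processing its share of the stream."""
    return [square(x) for x in chunk]


def fissioned_square(items: Sequence[int], replicas: int = 2) -> List[int]:
    """Split round-robin, run the replicas concurrently, join round-robin."""
    branches = roundrobin_split(items, replicas)
    # Threads keep the sketch portable. Under CPython's GIL, real speedup would
    # need a process pool or compiled code running on separate cores.
    with ThreadPoolExecutor(max_workers=replicas) as pool:
        outputs = list(pool.map(run_replica, branches))
    return roundrobin_join(outputs)


if __name__ == "__main__":
    print(fissioned_square(list(range(7)), replicas=3))  # [0, 1, 4, 9, 16, 25, 36]
```

Replication is safe only for stateless filters. A filter that carries state from one item to the next, such as a running sum, could not be split this way without changing its output.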

![“The processing power of just 5,000 ATI processors is also enough to rival that of the existing 200,000 computers currently involved in the Folding@home project.” “...it is estimated that if a mere 10,000 computers were to each use an ATI processor to conduct folding research, that the Folding@home program would effectively perform faster than the fastest supercomputer in existence today, surpassing the 1 petaFLOP level” (2007). November 10, 2011: Folding@home at 6.0 petaFLOPS, compared with 8.162 petaFLOPS for the K computer [Ref 1]](https://image.slidesharecdn.com/amaralppt-120316105646-phpapp02/85/Stream-Processing-89-320.jpg)

![Application Processor: executes application code such as MPEG decoding; sequences the instructions and issues them to the stream clients, e.g., the KEU and the DRAM interface [Kapasi 2003]](https://image.slidesharecdn.com/amaralppt-120316105646-phpapp02/85/Stream-Processing-92-320.jpg)

![Two stream clients: KEU, the programmable Kernel Execution Unit; DRAM interface, which provides access to global data storage [Kapasi 2003]](https://image.slidesharecdn.com/amaralppt-120316105646-phpapp02/85/Stream-Processing-93-320.jpg)
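
The application processor's role, as described on the two slides above, can be modelled as routing stream-level instructions, in program order, to the client that executes them. The sketch below is a toy model under stated assumptions. `StreamInstruction`, `CLIENT_FOR_OP`, `issue` and the kernel and stream names in `example_program` are hypothetical. A real processor would also have to respect dependencies and may overlap transfers with kernel execution, which this sketch ignores.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class StreamInstruction:
    op: str       # stream-level opcode, e.g. "run_kernel"
    operand: str  # hypothetical kernel or stream name


# Which stream client executes each opcode, per the slides.
CLIENT_FOR_OP: Dict[str, str] = {
    "load_kernel": "KEU",
    "run_kernel": "KEU",
    "load_stream": "DRAM interface",
    "store_stream": "DRAM interface",
}


def issue(program: List[StreamInstruction]) -> Dict[str, List[Tuple[str, str]]]:
    """Walk the program in order and route each instruction to its stream client.

    Timing, overlap and dependency tracking are deliberately left out.
    """
    queues: Dict[str, List[Tuple[str, str]]] = {"KEU": [], "DRAM interface": []}
    for ins in program:
        client = CLIENT_FOR_OP.get(ins.op)
        if client is None:
            raise ValueError(f"unknown stream instruction: {ins.op}")
        queues[client].append((ins.op, ins.operand))
    return queues


# Hypothetical sequence for one step of an MPEG-like decoder.
example_program = [
    StreamInstruction("load_kernel", "idct"),
    StreamInstruction("load_stream", "coeff_blocks"),
    StreamInstruction("run_kernel", "idct"),
    StreamInstruction("store_stream", "pixels"),
]

if __name__ == "__main__":
    for client, queue in issue(example_program).items():
        print(client, queue)
```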

![KEU: it has two stream-level instructions: 1. load_kernel – loads a compiled kernel function into the local instruction storage inside the KEU; 2. run_kernel – executes the kernel [Kapasi 2003]](https://image.slidesharecdn.com/amaralppt-120316105646-phpapp02/85/Stream-Processing-94-320.jpg)
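
The two KEU instructions can be sketched as a toy Python class. A kernel must be placed in local instruction storage by `load_kernel` before `run_kernel` can execute it. `KernelExecutionUnit` and the `halve` kernel are hypothetical, and a Python function stands in for compiled kernel code. The input and output streams are plain lists here rather than SRF-resident streams.

```python
from typing import Callable, Dict, List

# Stand-in for a compiled kernel: a function applied to one stream element.
Kernel = Callable[[float], float]


class KernelExecutionUnit:
    """Toy model of the KEU's two stream-level instructions."""

    def __init__(self) -> None:
        # Local instruction storage: kernel name -> loaded kernel.
        self._instruction_store: Dict[str, Kernel] = {}

    def load_kernel(self, name: str, compiled: Kernel) -> None:
        """load_kernel: place a compiled kernel in local instruction storage."""
        self._instruction_store[name] = compiled

    def run_kernel(self, name: str, stream: List[float]) -> List[float]:
        """run_kernel: execute a loaded kernel over every element of a stream."""
        if name not in self._instruction_store:
            raise KeyError(f"kernel {name!r} has not been loaded")
        kernel = self._instruction_store[name]
        return [kernel(x) for x in stream]


if __name__ == "__main__":
    keu = KernelExecutionUnit()
    keu.load_kernel("halve", lambda x: 0.5 * x)
    print(keu.run_kernel("halve", [2.0, 4.0, 6.0]))  # [1.0, 2.0, 3.0]
```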

![DRAM interface: two stream-level instructions as well: 1. load_stream – loads an entire stream from DRAM into the SRF; 2. store_stream – stores a stream from the SRF back to DRAM [Kapasi 2003]](https://image.slidesharecdn.com/amaralppt-120316105646-phpapp02/85/Stream-Processing-95-320.jpg)
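
The sketch below assumes the conventional direction for these two instructions: `load_stream` moves a whole stream from off-chip DRAM into the SRF, and `store_stream` moves one from the SRF back to DRAM. `DramInterface`, the flat word-addressed memory and the `stride` parameter are hypothetical simplifications. The stride shows that a whole, possibly non-contiguous, stream moves in one instruction.

```python
from typing import Dict, List


class DramInterface:
    """Toy model of the DRAM-interface stream client."""

    def __init__(self, dram: List[int], srf: Dict[str, List[int]]) -> None:
        self.dram = dram  # flat, word-addressed off-chip memory
        self.srf = srf    # on-chip stream register file: stream name -> words

    def load_stream(self, name: str, base: int, length: int, stride: int = 1) -> None:
        """Copy `length` words, every `stride`-th one from `base`, from DRAM into the SRF."""
        self.srf[name] = [self.dram[base + i * stride] for i in range(length)]

    def store_stream(self, name: str, base: int, stride: int = 1) -> None:
        """Copy the named stream from the SRF back to DRAM, starting at `base`."""
        for i, word in enumerate(self.srf[name]):
            self.dram[base + i * stride] = word


if __name__ == "__main__":
    dram = [10, 11, 12, 13, 14, 15, 0, 0, 0]
    srf: Dict[str, List[int]] = {}
    iface = DramInterface(dram, srf)
    iface.load_stream("in", base=0, length=3, stride=2)  # srf["in"] == [10, 12, 14]
    srf["out"] = [w + 1 for w in srf["in"]]               # stand-in for a kernel
    iface.store_stream("out", base=6)
    print(dram)  # [10, 11, 12, 13, 14, 15, 11, 13, 15]
```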

![Local register files (LRFs): 1. used for operands of arithmetic operations (similar to caches on CPUs); 2. exploit fine-grain locality [Kapasi 2003]](https://image.slidesharecdn.com/amaralppt-120316105646-phpapp02/85/Stream-Processing-96-320.jpg)

![Stream register files (SRFs): 1. capture coarse-grain locality; 2. efficiently transfer data to and from the LRFs [Kapasi 2003]](https://image.slidesharecdn.com/amaralppt-120316105646-phpapp02/85/Stream-Processing-97-320.jpg)
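
To make the two kinds of locality concrete, the sketch below runs a hypothetical 3-tap FIR kernel and counts accesses. Each input sample is read from the SRF once and then kept in a small local window that stands in for the LRFs, so every later use of that sample is an LRF read (fine-grain locality). The output stream stays in the SRF for the next kernel instead of returning to DRAM (coarse-grain locality). The function name, tap values and counters are illustrative assumptions, not measurements of real hardware.

```python
from typing import Dict, List, Tuple


def fir3_with_lrf(srf_stream: List[float],
                  taps: Tuple[float, float, float]) -> Tuple[List[float], Dict[str, int]]:
    """3-tap FIR kernel that reads each input from the SRF exactly once.

    The sliding window (r0, r1, r2) models LRF-resident operands. Taps are
    assumed to sit in registers too and are not counted.
    Returns (output stream, access counts).
    """
    counts = {"srf_reads": 0, "lrf_reads": 0, "srf_writes": 0}
    r0 = r1 = 0.0  # previous two samples, held locally
    out: List[float] = []
    for i, x in enumerate(srf_stream):
        counts["srf_reads"] += 1  # one SRF -> LRF transfer per sample
        r2 = x
        if i >= 2:
            # All three data operands come from local registers.
            out.append(taps[0] * r0 + taps[1] * r1 + taps[2] * r2)
            counts["lrf_reads"] += 3
            counts["srf_writes"] += 1  # result goes back to the SRF, not DRAM
        r0, r1 = r1, r2  # shift the window locally: no SRF traffic
    return out, counts


if __name__ == "__main__":
    ys, c = fir3_with_lrf([1.0, 2.0, 3.0, 4.0], (1.0, 1.0, 1.0))
    print(ys)  # [6.0, 9.0]
    print(c)   # {'srf_reads': 4, 'lrf_reads': 6, 'srf_writes': 2}
```

Four SRF reads feed six operand reads. That ratio grows with the number of taps, which is why keeping operands in the LRFs reduces SRF bandwidth demand.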

![[Kapasi 2003]](https://image.slidesharecdn.com/amaralppt-120316105646-phpapp02/85/Stream-Processing-98-320.jpg)