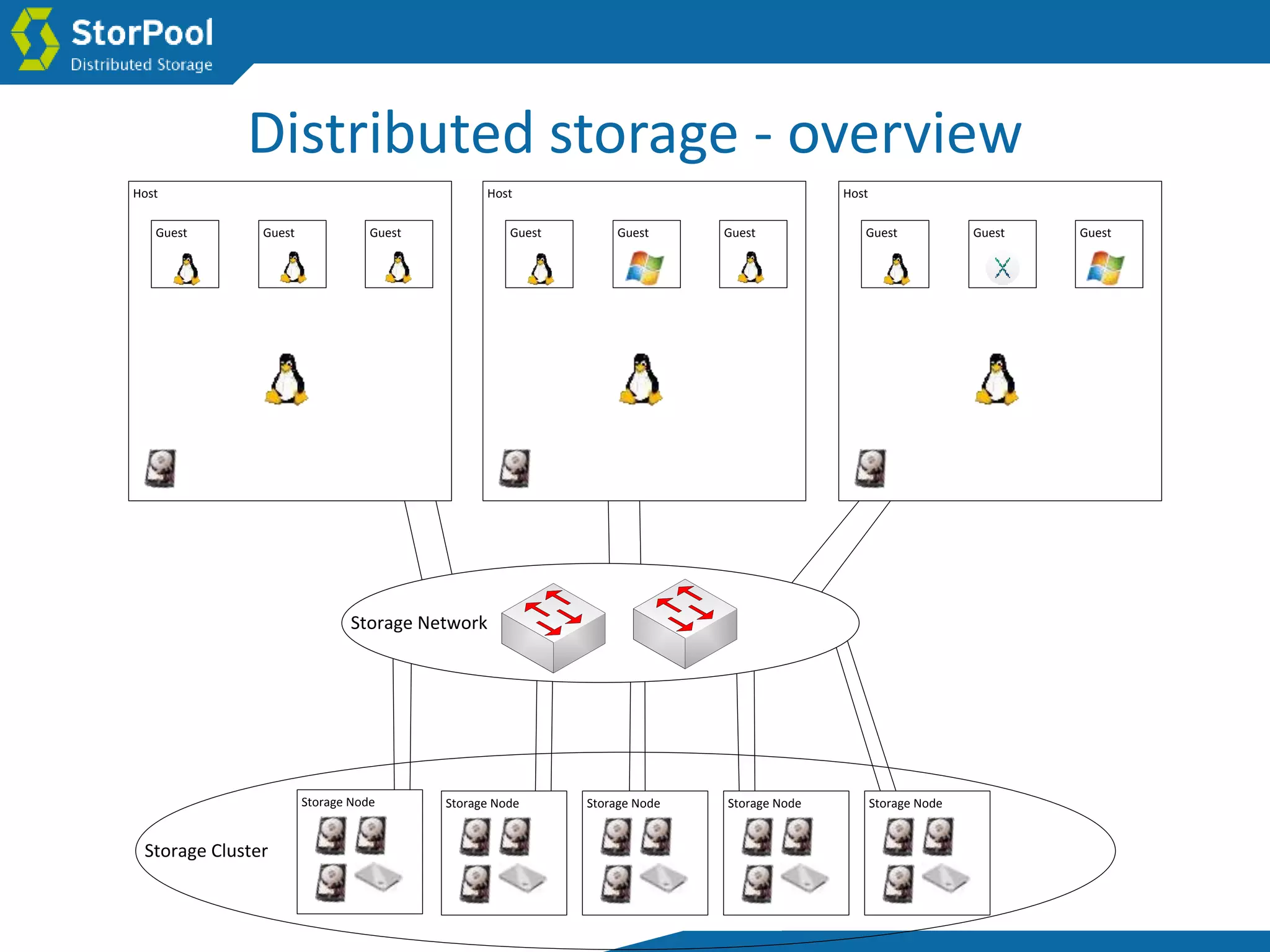

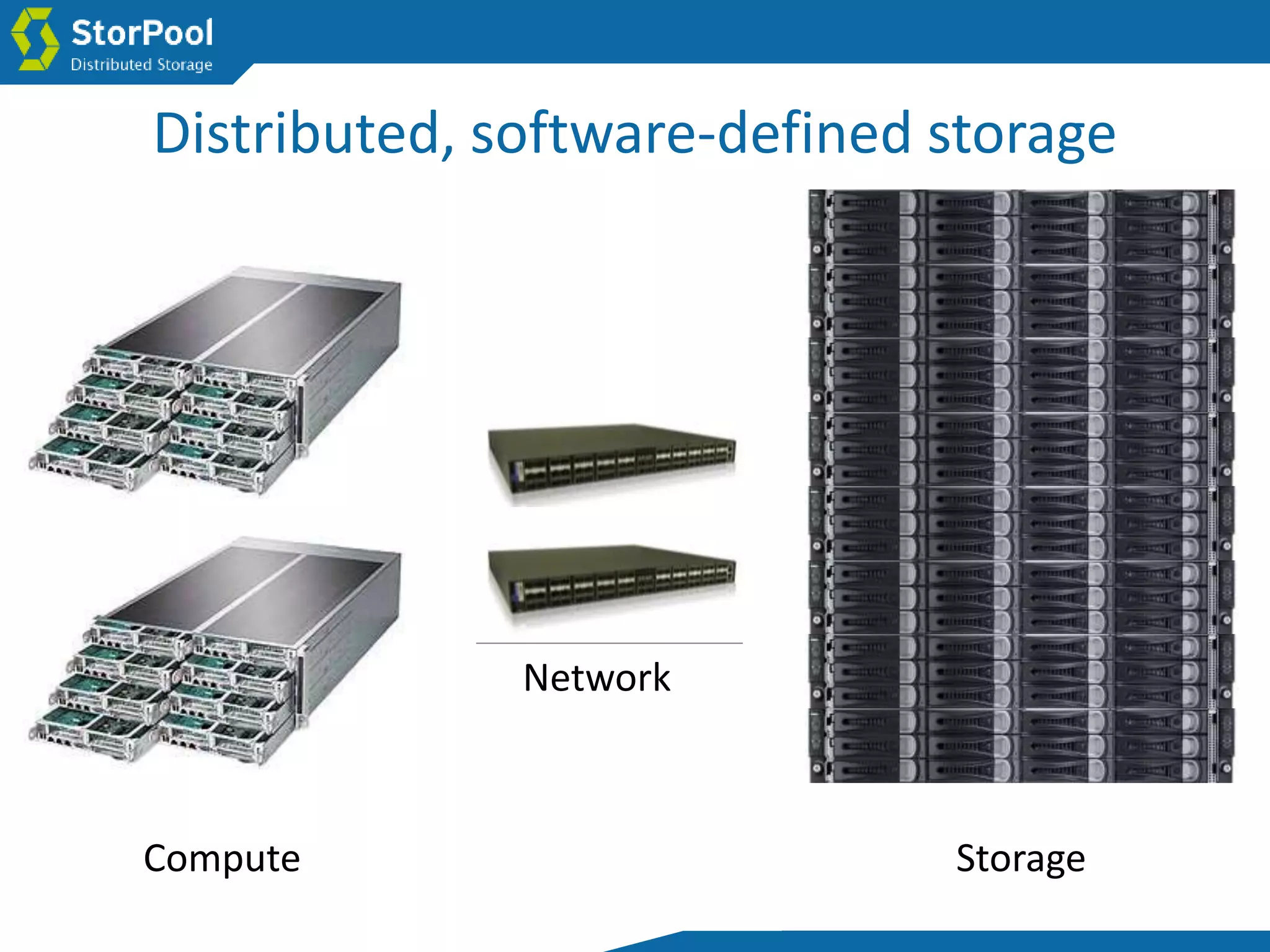

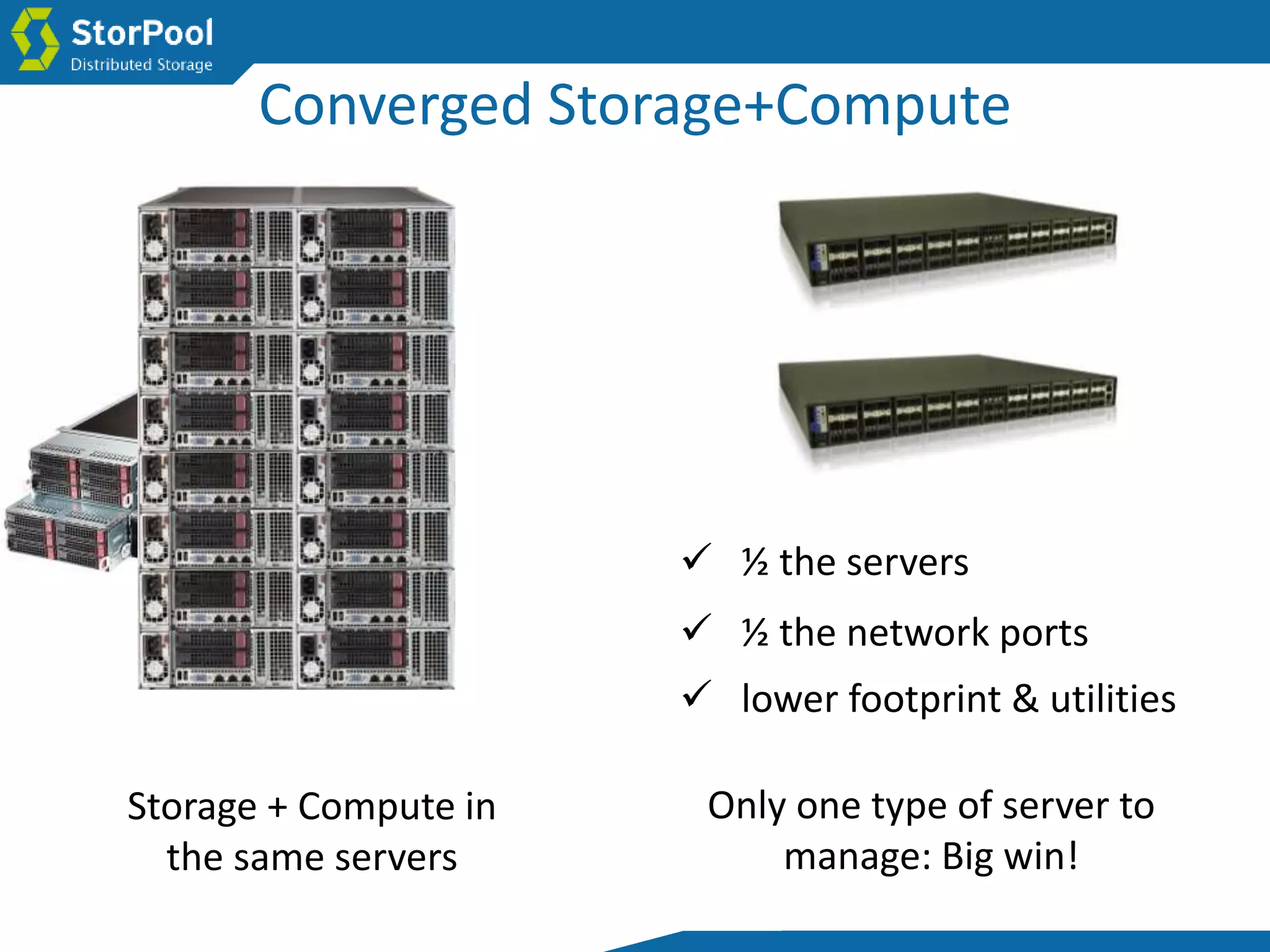

The document presents StorPool, a product designed to address the shortcomings of today's storage solutions. StorPool provides distributed, software-defined storage built from standard servers and drives connected by a flat network. It offers horizontal scalability and self-healing, and it survives partial failures gracefully. StorPool storage nodes can be combined with compute servers into a converged infrastructure, which reduces costs compared with running separate storage and compute systems. A demo then walks through StorPool's basic functions: volume management, snapshots, placement groups, performance, and fault tolerance.
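To make the self-healing and partial-failure claims more concrete, here is a minimal, generic sketch of replica-based data placement. It is not StorPool's algorithm or API. The three-copy replication factor, the round-robin placement, and the names `place_chunks`, `readable_after_failure`, and `heal` are all hypothetical. The list of nodes plays roughly the role of a placement group, meaning the set of nodes a volume's copies may live on.

```python
from collections import defaultdict

# Hypothetical replication factor, chosen for illustration only.
REPLICAS = 3


def place_chunks(num_chunks, nodes, replicas=REPLICAS):
    """Assign each chunk of a volume to `replicas` distinct nodes, round-robin."""
    if replicas > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    placement = {}
    for chunk in range(num_chunks):
        start = chunk % len(nodes)
        placement[chunk] = [nodes[(start + i) % len(nodes)] for i in range(replicas)]
    return placement


def readable_after_failure(placement, failed):
    """True if every chunk still has at least one copy on a live node."""
    return all(any(n not in failed for n in owners) for owners in placement.values())


def heal(placement, failed, nodes, replicas=REPLICAS):
    """Rebuild missing copies on live nodes, preferring the least-loaded ones.

    Returns a new placement; the input placement is not modified.
    """
    live = [n for n in nodes if n not in failed]
    if len(live) < replicas:
        raise ValueError("not enough live nodes to restore full redundancy")

    load = defaultdict(int)  # number of chunk copies held by each live node
    healed = {}
    for chunk, owners in placement.items():
        survivors = [n for n in owners if n not in failed]
        if not survivors:
            raise RuntimeError(f"chunk {chunk} lost all of its copies")
        healed[chunk] = survivors
        for n in survivors:
            load[n] += 1

    # Re-replicate each under-replicated chunk onto live nodes that lack a copy.
    for owners in healed.values():
        while len(owners) < replicas:
            target = min((n for n in live if n not in owners), key=lambda n: load[n])
            owners.append(target)
            load[target] += 1
    return healed


if __name__ == "__main__":
    nodes = ["node1", "node2", "node3", "node4"]
    placement = place_chunks(8, nodes)
    failed = {"node2"}
    print(readable_after_failure(placement, failed))
    print(heal(placement, failed, nodes))
```

With four nodes and three copies per chunk, losing any single node leaves every chunk readable, and `heal` restores three copies per chunk on the remaining three nodes. Adding nodes to the list spreads the same chunks across more machines, which is the intuition behind horizontal scaling. A real system would also weigh drive capacity, failure domains, and network topology, none of which this sketch models.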