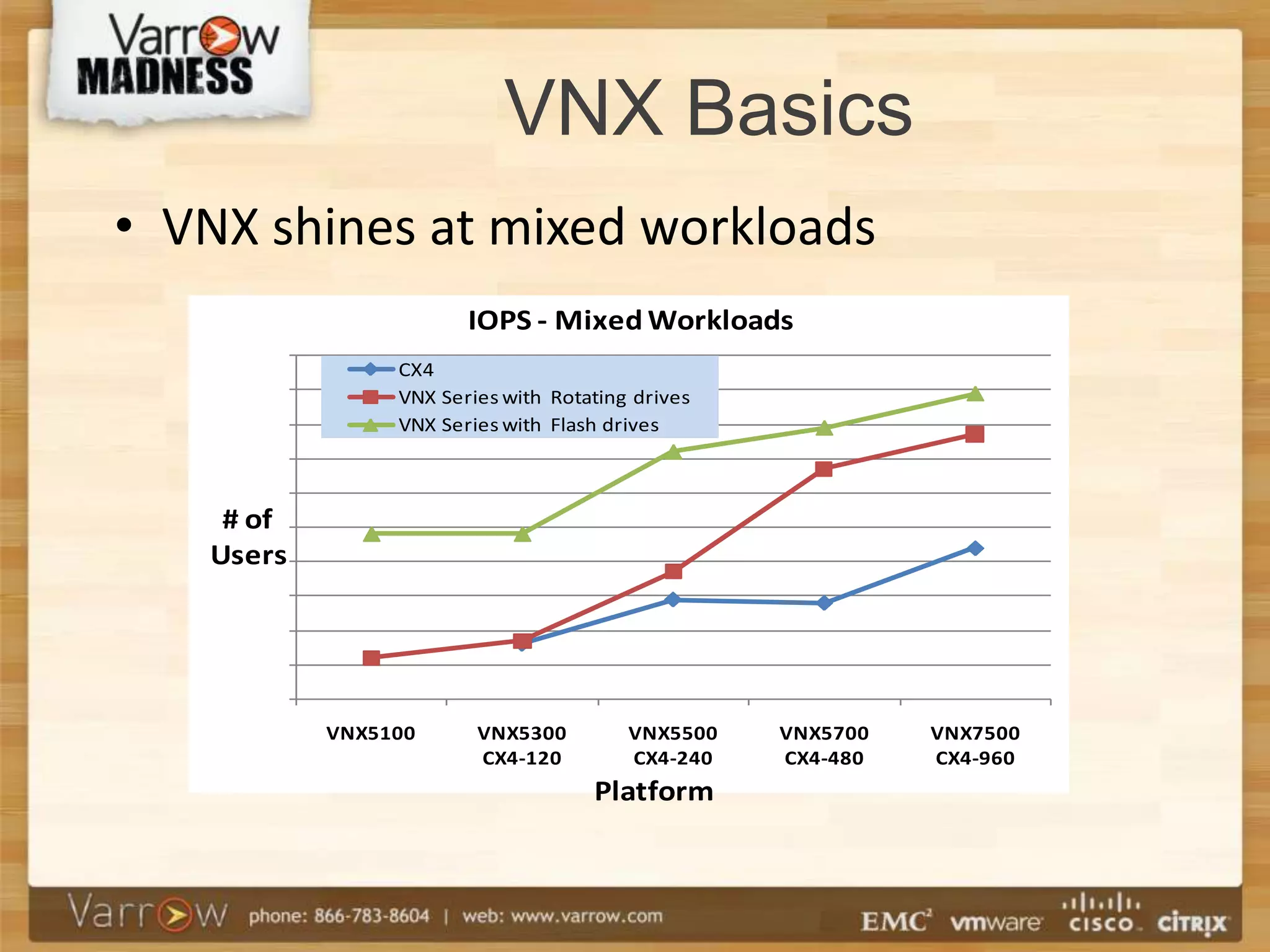

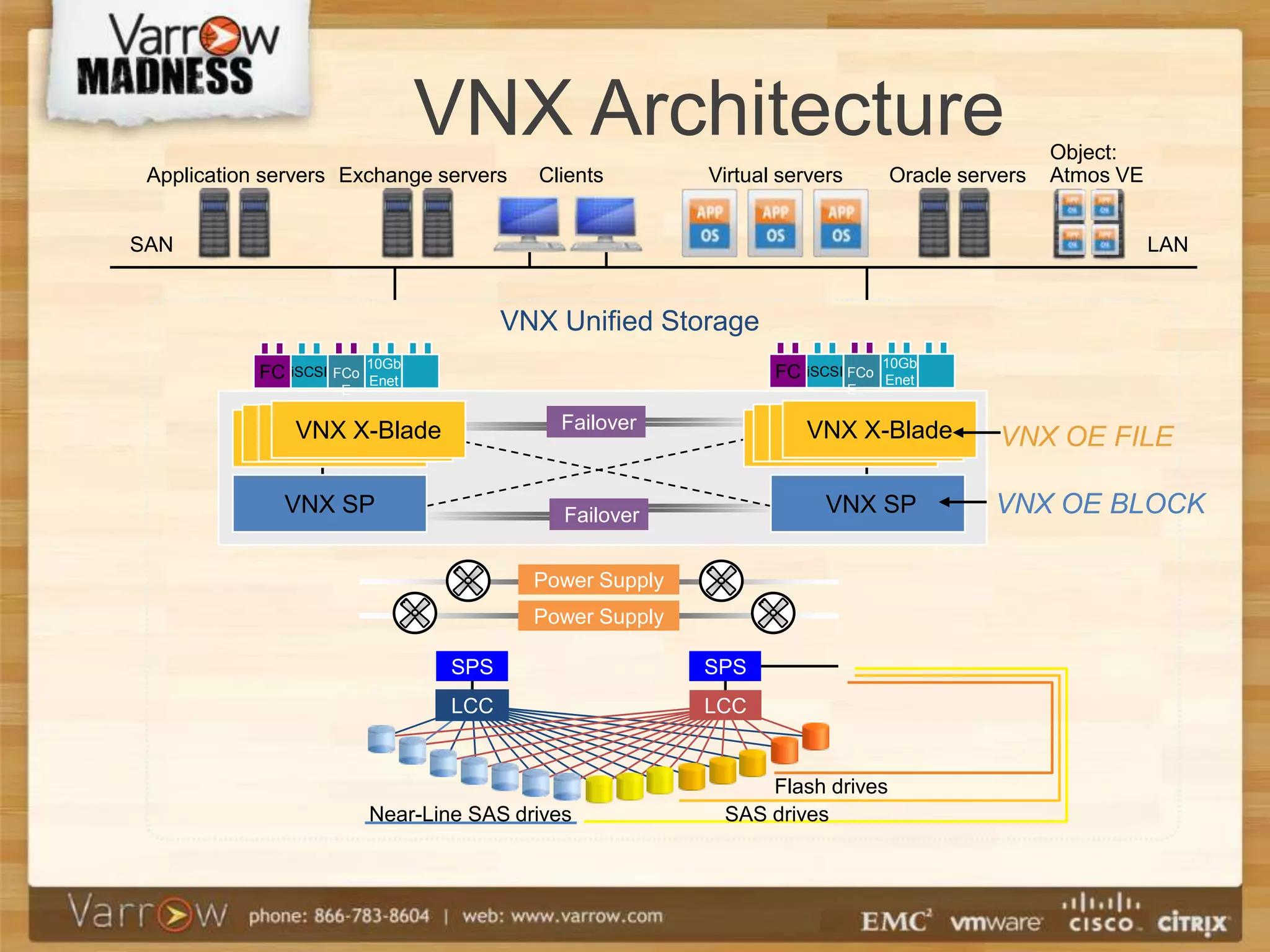

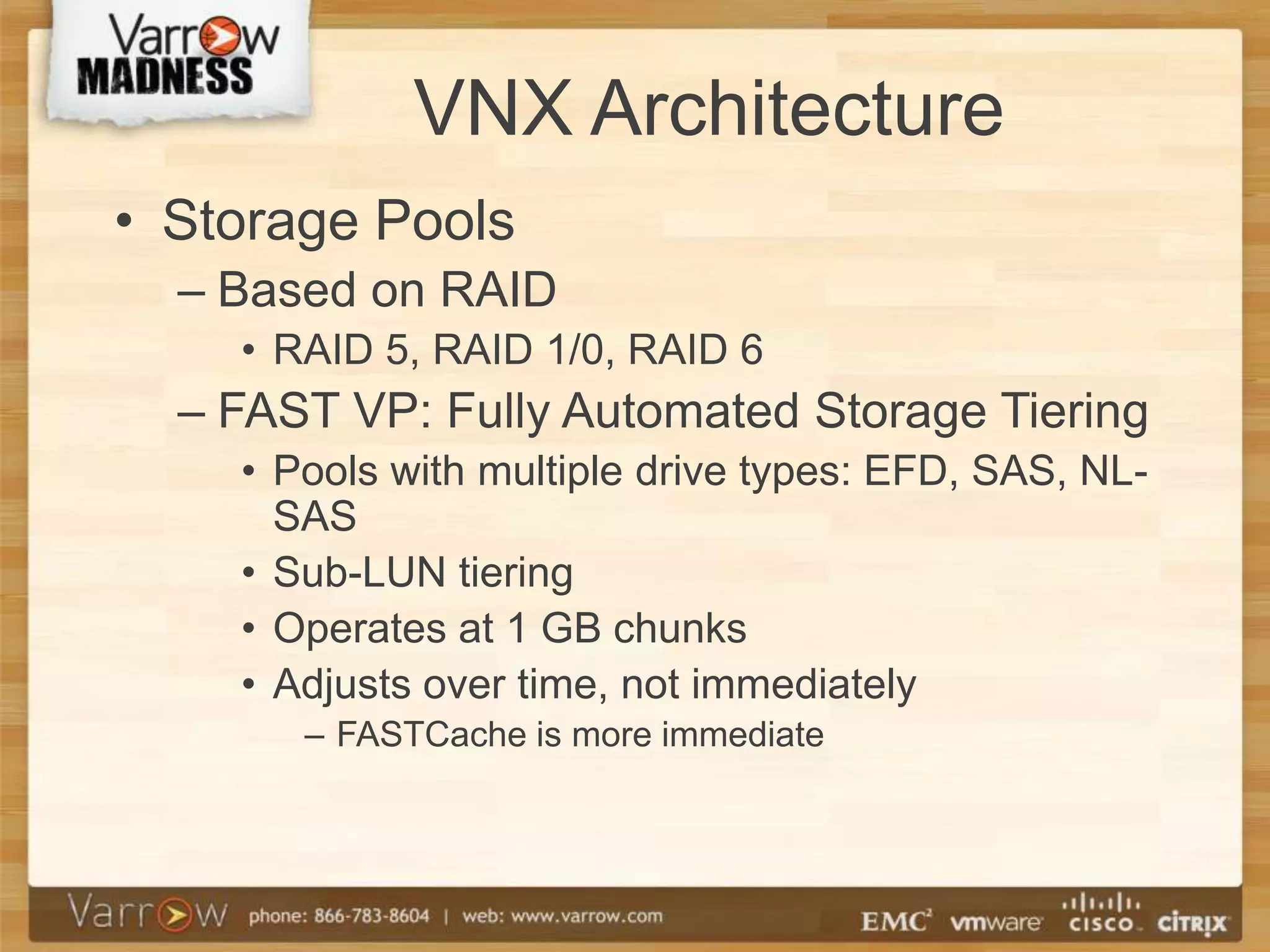

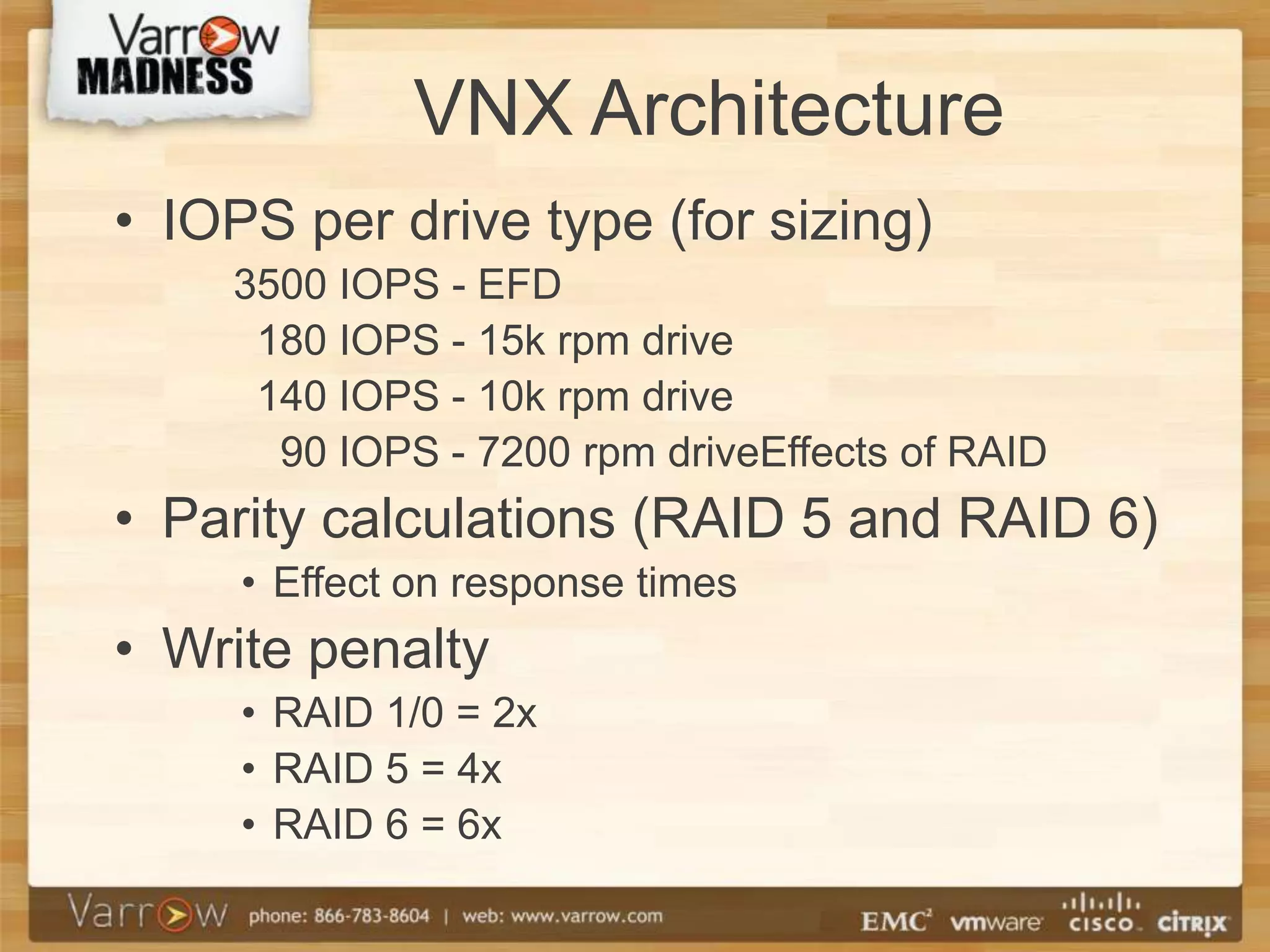

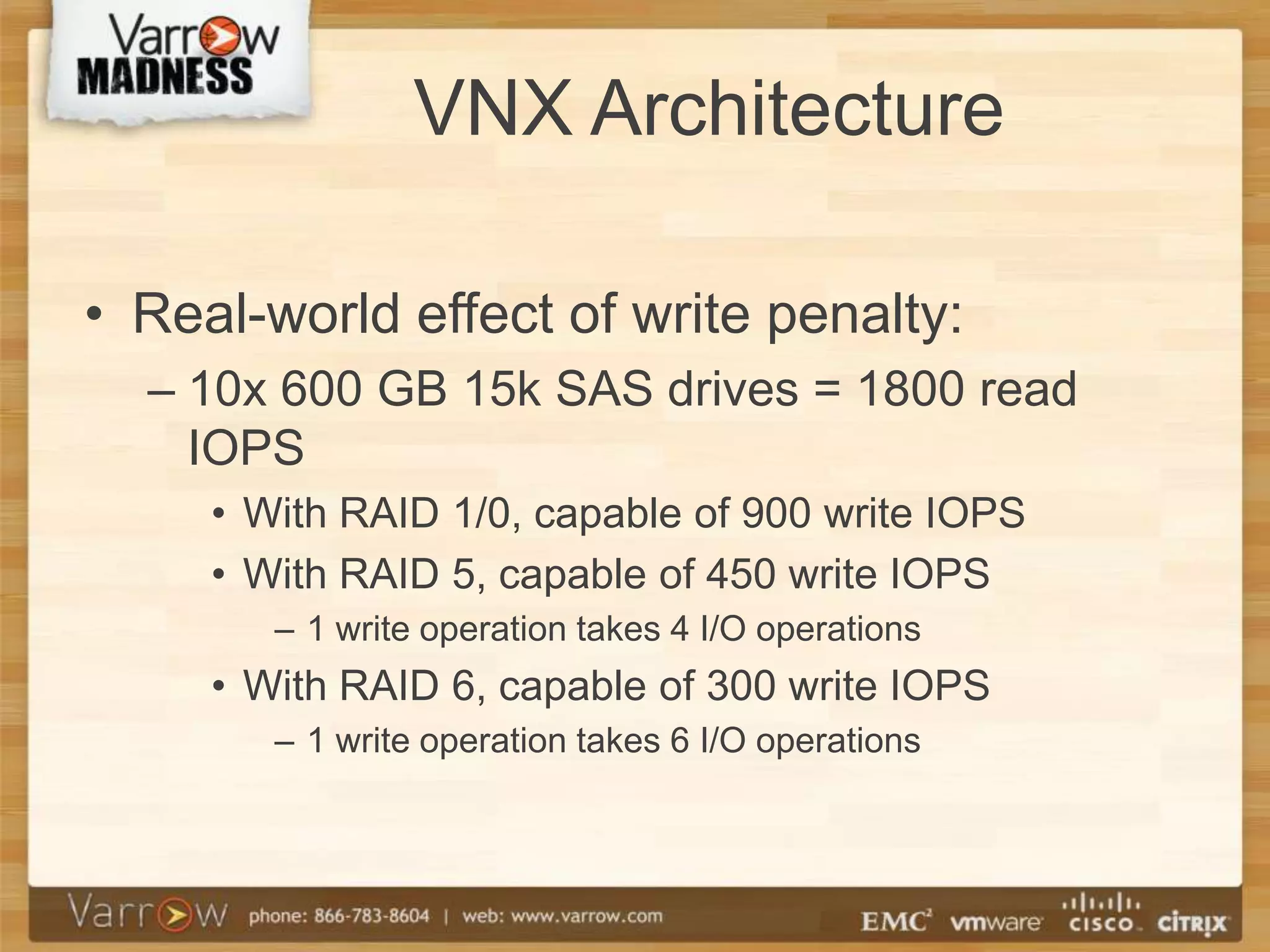

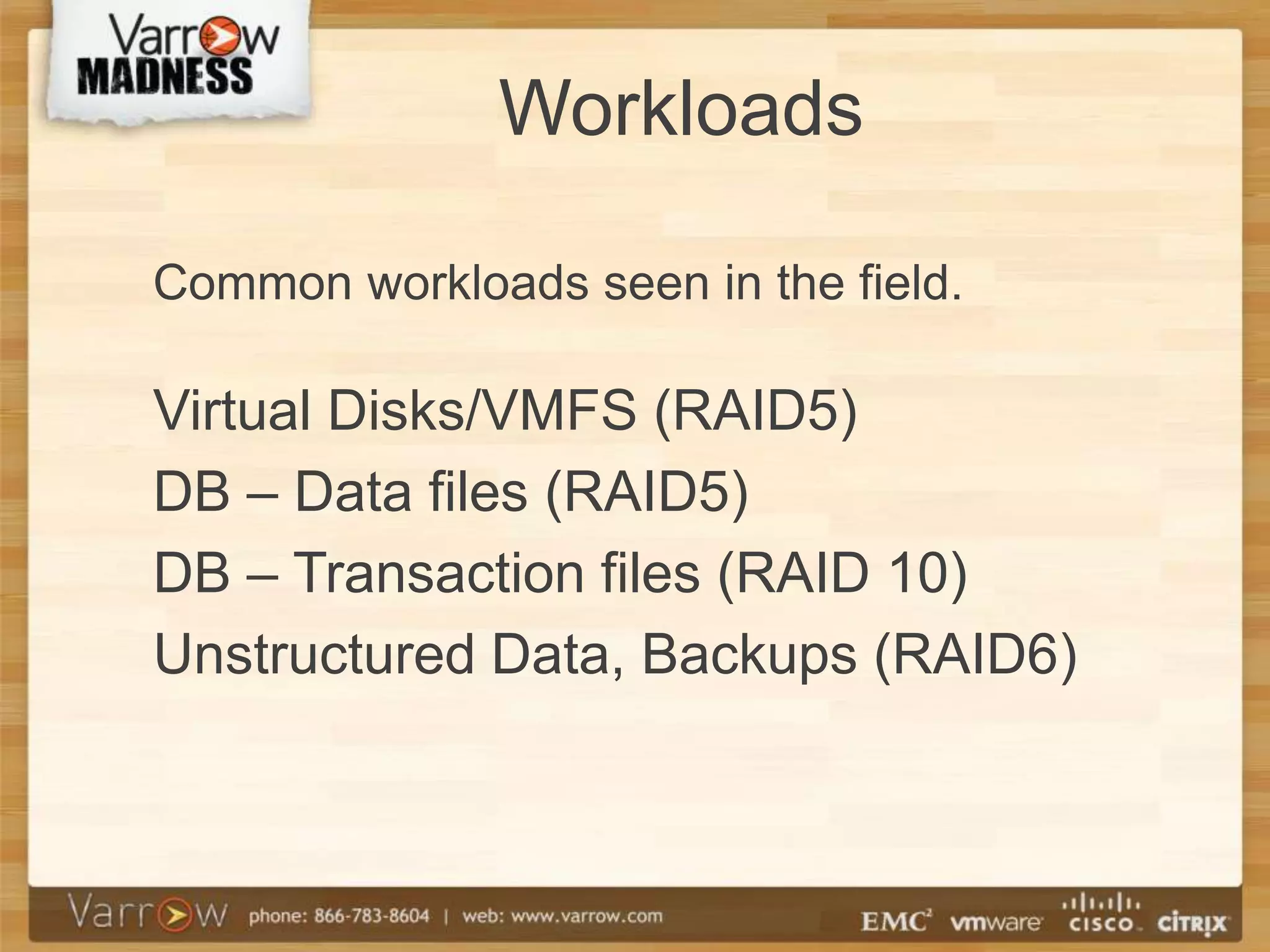

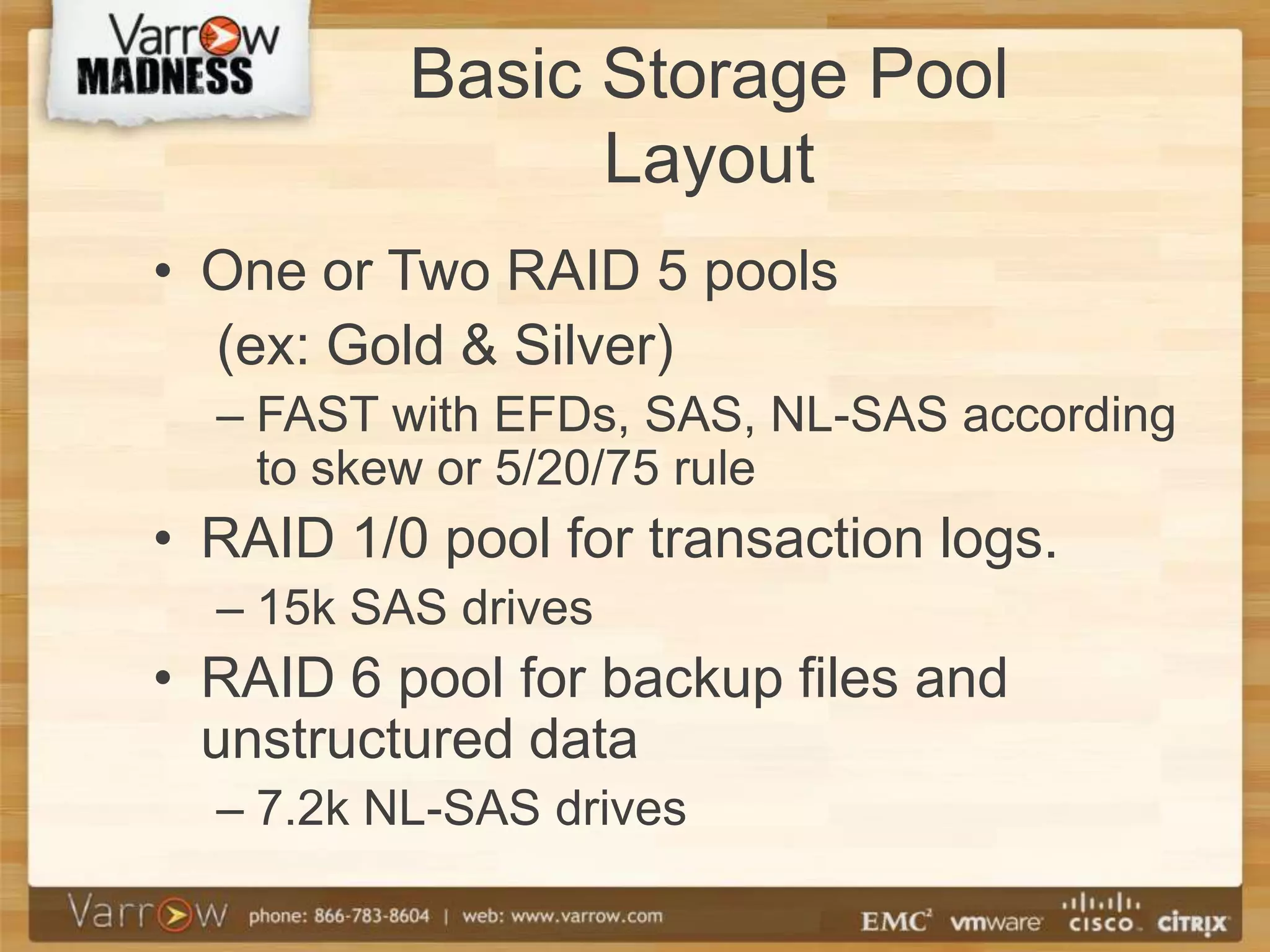

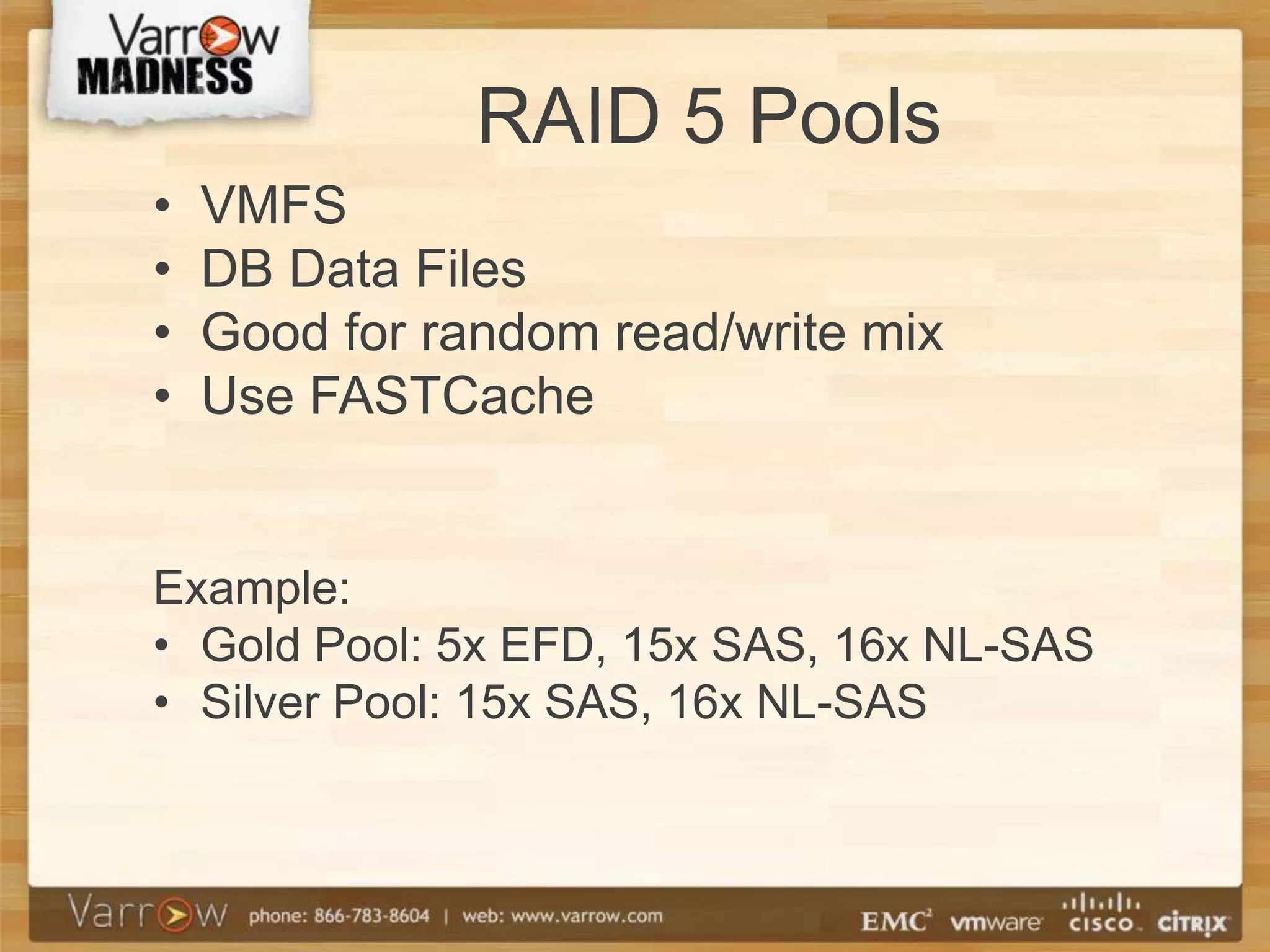

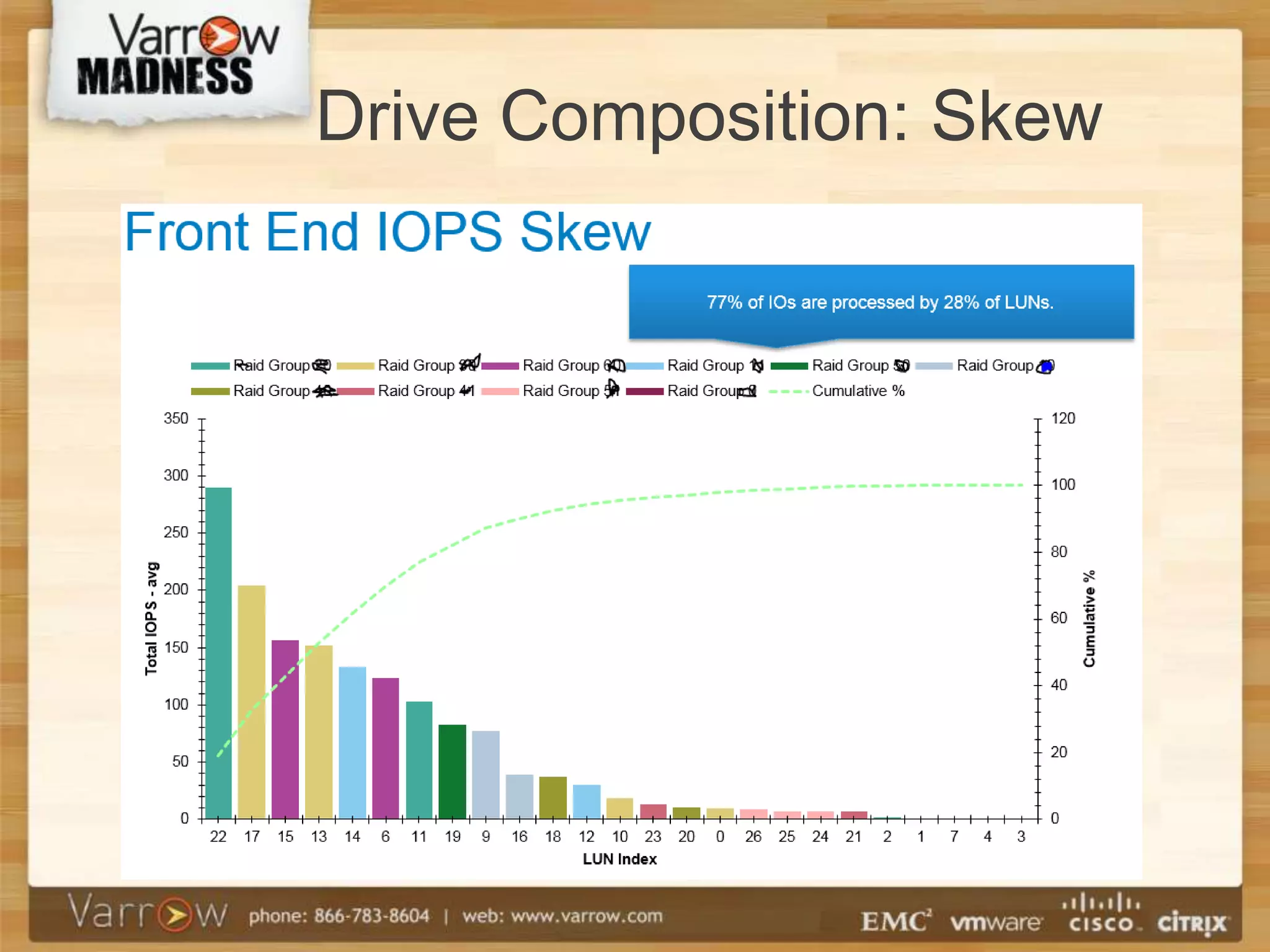

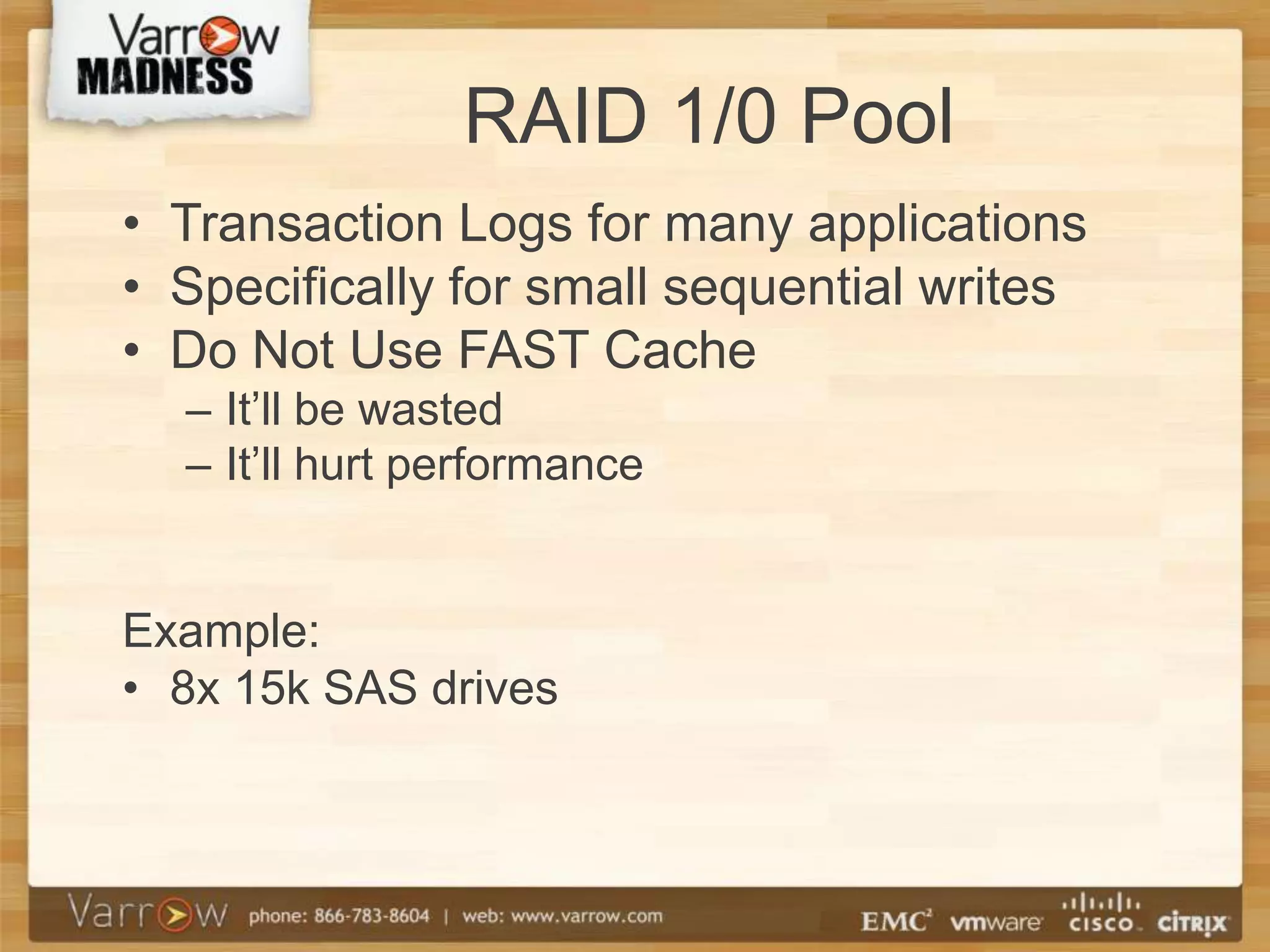

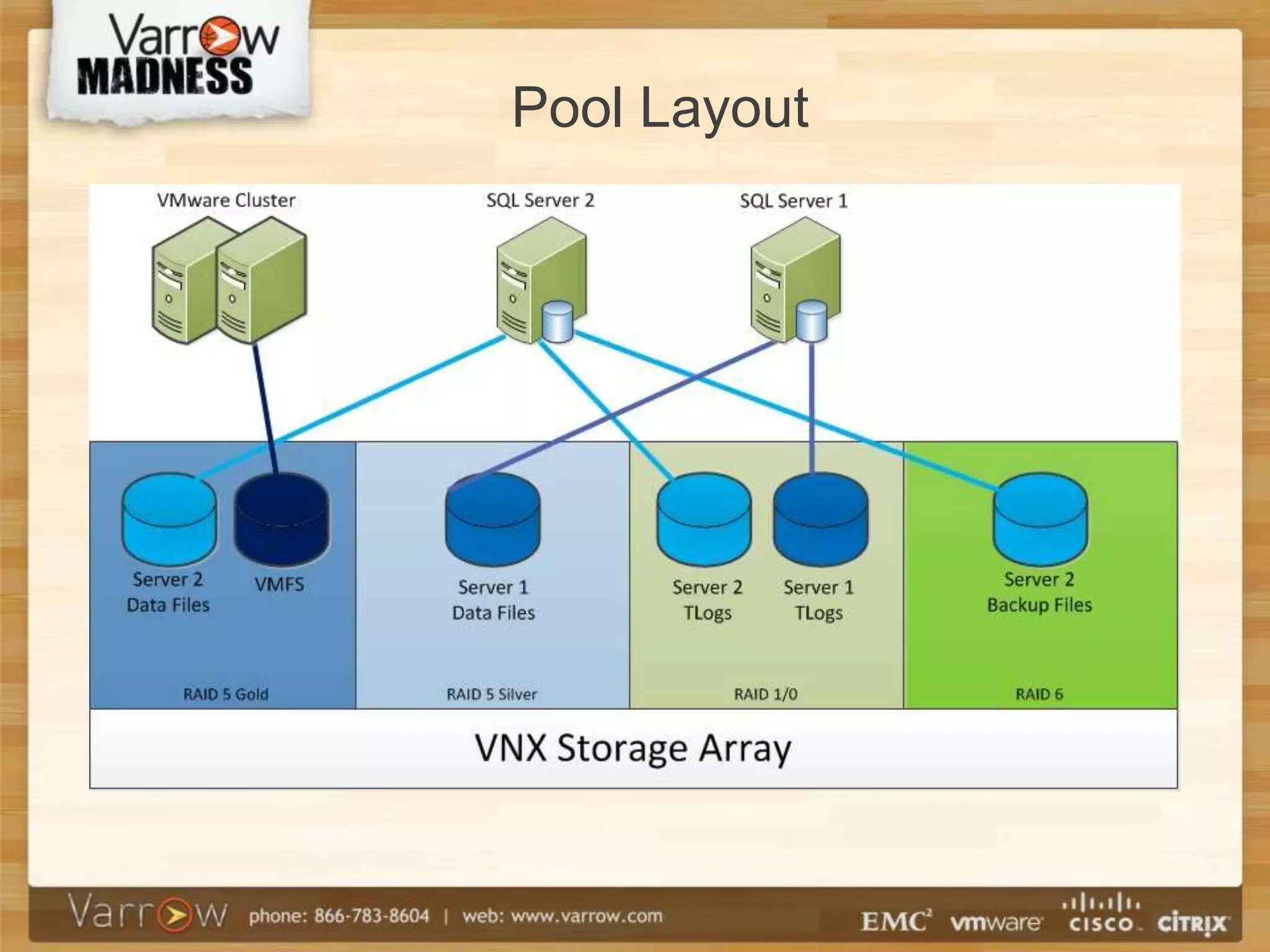

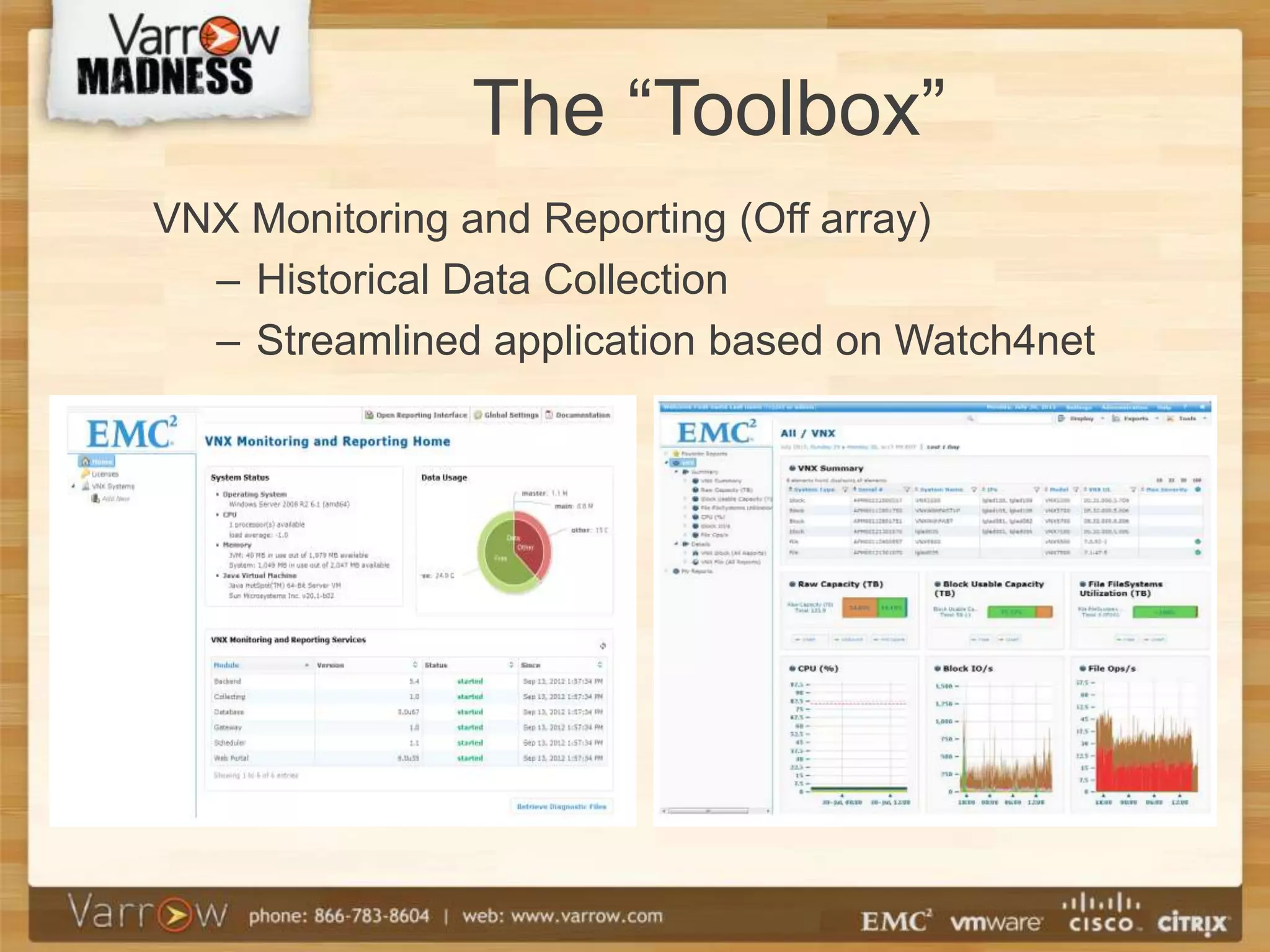

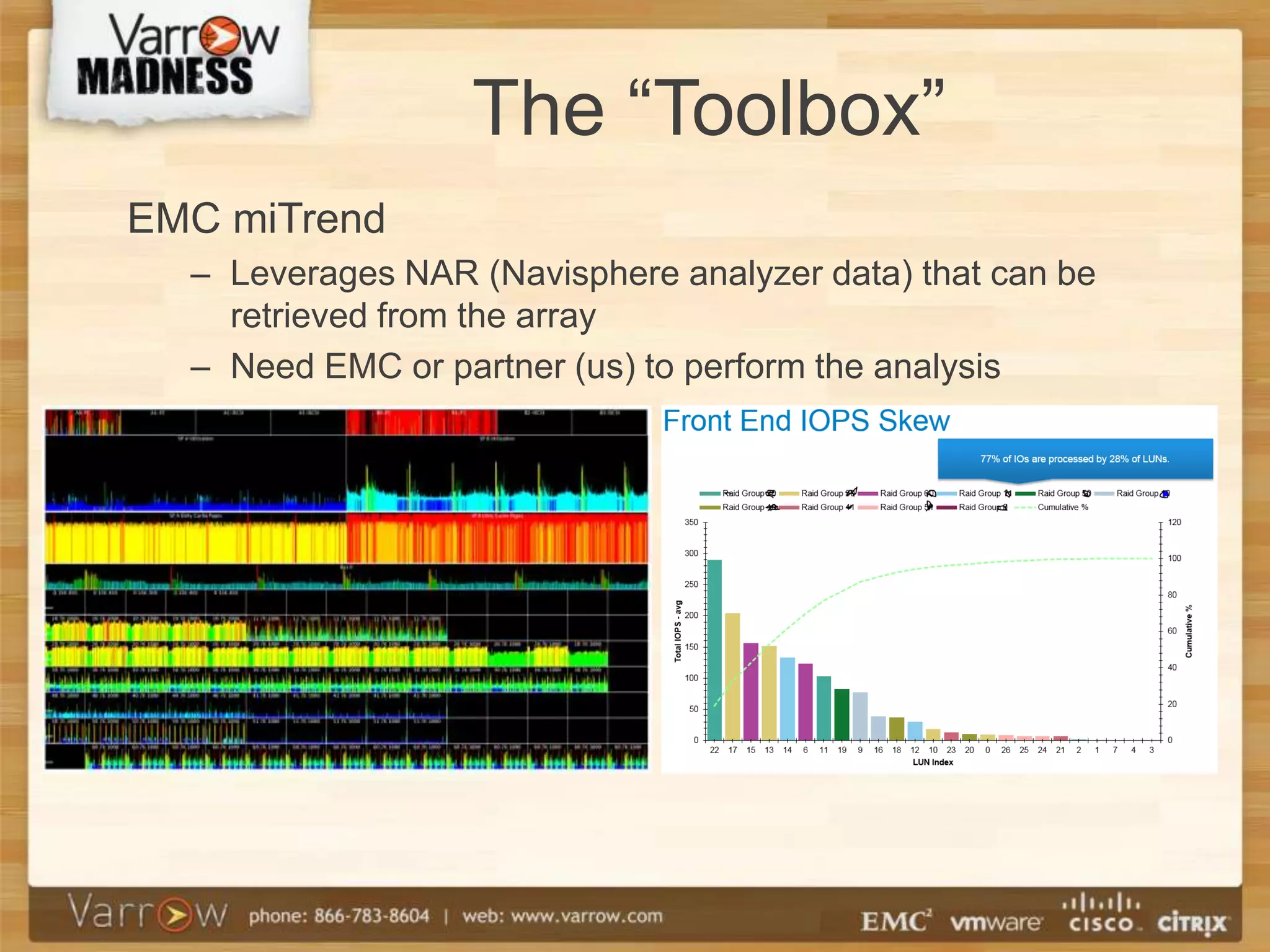

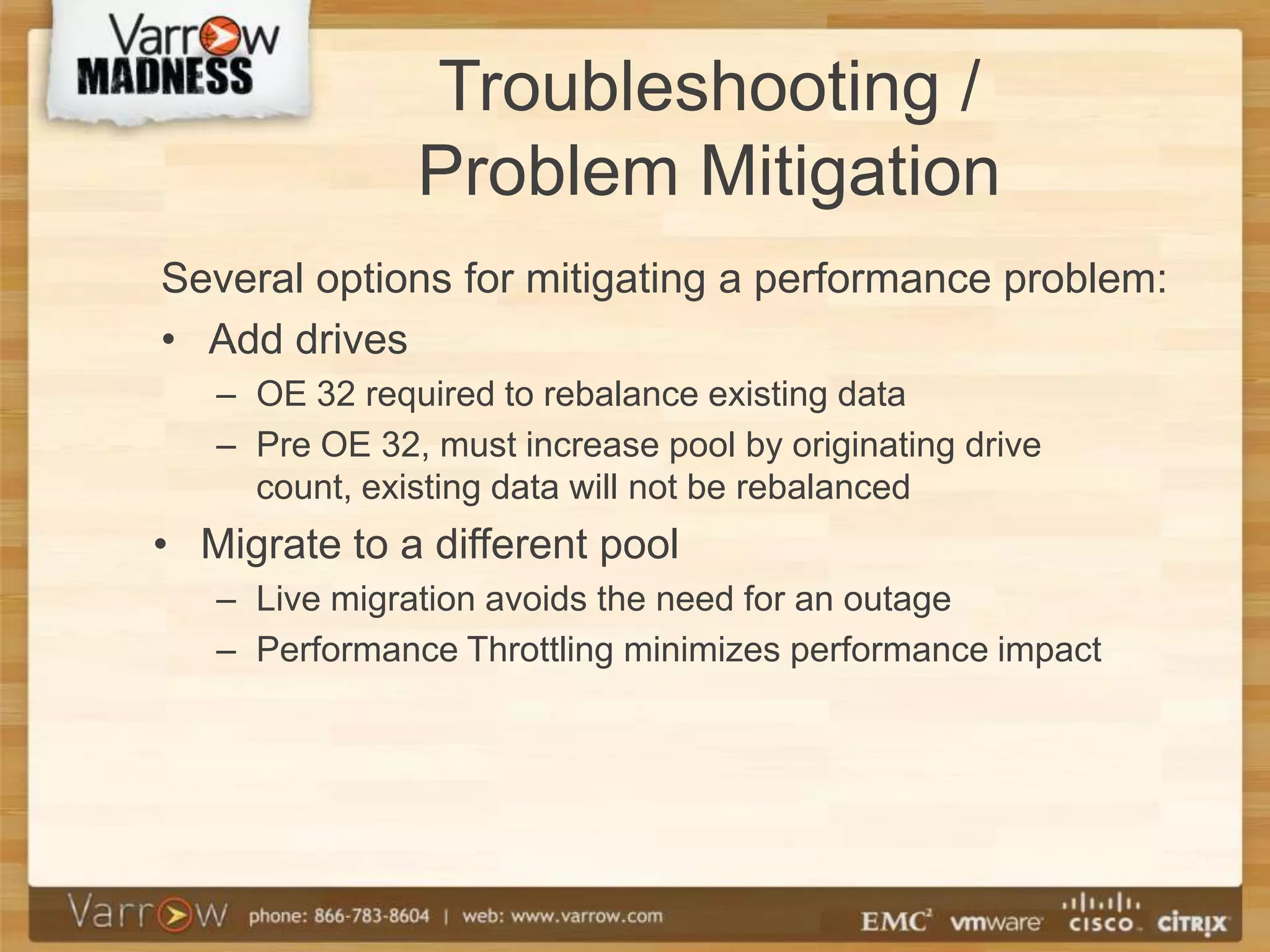

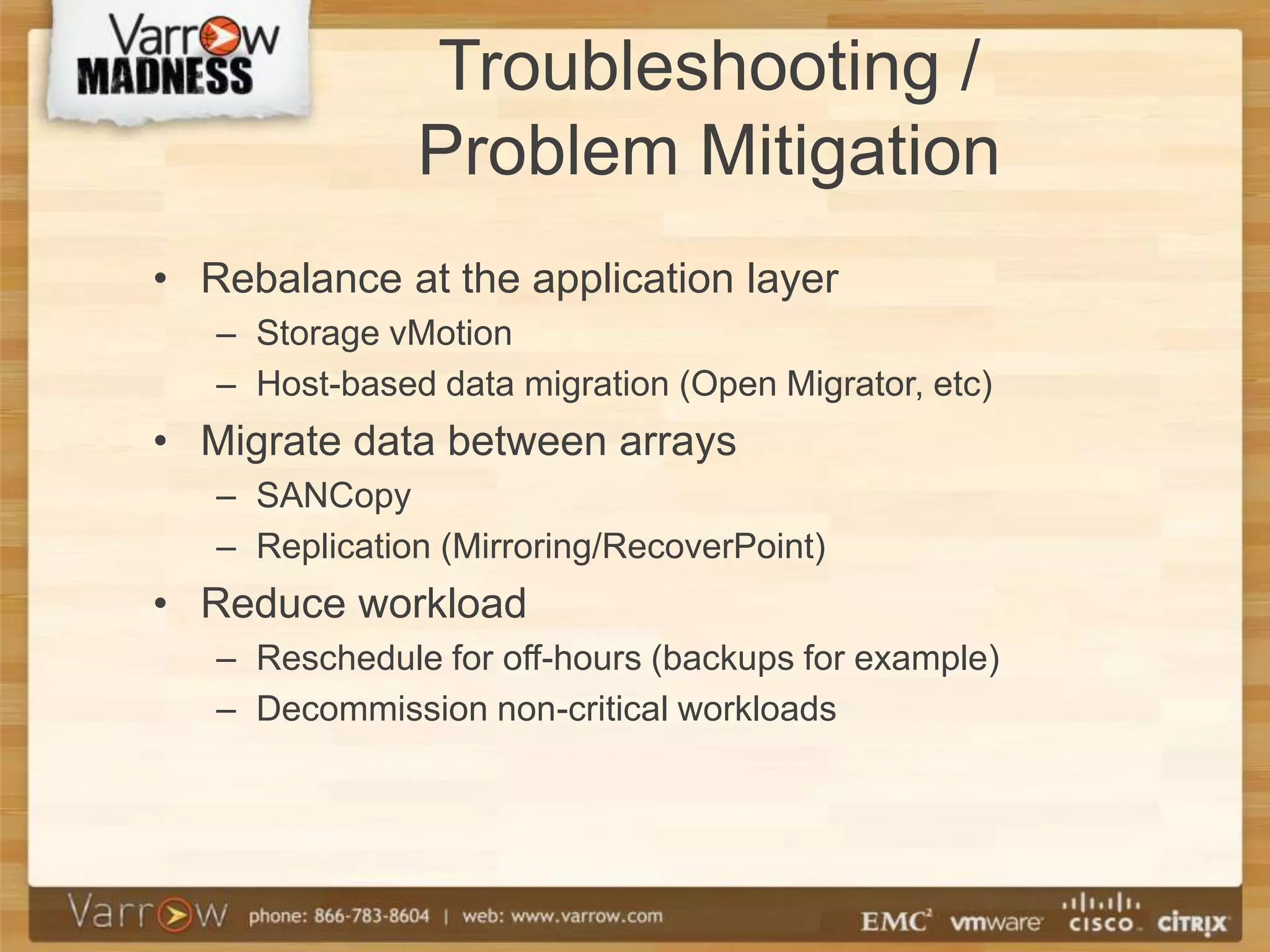

This document discusses mixed workloads on EMC VNX storage arrays. It opens by stating its goals: to explain how VNX storage pools work, compare common workloads, identify which workloads are compatible with one another, and show how to monitor and mitigate performance problems. It then gives an overview of VNX basics and architecture, covering storage processors, cache, storage pools, and drive types and their performance. Next, it describes several common workloads and proposes both ideal and realistic storage pool layouts. It concludes with sections on monitoring, troubleshooting metrics, tools, and mitigation strategies.